I’m using the Cycles X build from yesterday.

I can confirm that the 2 Jul build had the overexposure problem and the 3 Jul build fixed it.

Now that the overexposed OIDN output, volume rendering and AO/bevel rendering are finally fixed in Cycles X, only the annoying, strange motion blur problem with OptiX remains to be solved, and then Cycles X is almost production ready. I still have it with the 3 Jul build on Windows 10 and an RTX 3070.

I know that Cycles X does not accept bug reports now and the devs probably already know this, but I still want to share this bug with you guys because it is hilarious.

When you set the viewport sample count to 0, it should go up indefinitely. Cycles X has always just shown a very large number, but since that number is usually unachievable, it does not really matter. With this bug, however, if you first set the sample count to 32, turn on adaptive sampling, wait until it says done and then change it to 0, the count suddenly grows incredibly fast and reaches that very large number in no time. I laughed so hard when I first saw it; it was almost like the software suddenly freaked out. It’s so funny that I wanted to share it with you guys.

I’m really happy with the new rendering performance of volumes in Cycles-X!

But there is also a bit of a memory spike, which might be a trade-off for rendering speed or might just be a bug.

I found an issue: when you go inside an object with a volume material, everything disappears.

I wouldn’t be surprised if the devs are already aware of this and are already planning ways to fix it. Love the performance improvements!

Yes, when the camera is inside a volume object, the object’s volume shader/contribution disappears, which is annoying. Hopefully the devs fix it together with the motion blur bug on rotating objects, where OptiX renders strange empty portions in the motion blur.

I tested the latest build with the overexposed OIDN fix: renders lose quality with adaptive sampling + OIDN, but the issue goes away without adaptive sampling.

This doesn’t happen with regular Cycles or with the very old Cycles X build 1dea1d93d39a.

If I combine adaptive sampling with OIDN, no adaptive sampling seems to be performed at all: the render takes exactly as long as it does with adaptive sampling turned off.

I think it’s because of adaptive sampling: the sample count rises faster simply because fewer pixels are being calculated, so it speeds up exponentially. Makes sense.
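A toy model may help picture why the counter races up once adaptive sampling has finished. Assuming, purely for illustration, that each sample pass costs a small fixed overhead plus a cost per pixel that is still unconverged, the number of passes that fit into a given time explodes once almost no pixels remain active. The cost constants and the `passes_in_budget` helper are made up; this is not how Cycles X actually schedules its work.

```python
# Toy model with made-up costs (hypothetical, not Cycles X's real scheduler):
# a sample pass is assumed to cost a fixed overhead plus a per-pixel cost for
# every pixel that adaptive sampling still considers unconverged.

def passes_in_budget(active_pixels, budget_s, cost_per_pixel_s=1e-6, overhead_s=1e-5):
    """Return how many whole sample passes fit into budget_s seconds."""
    pass_time = overhead_s + active_pixels * cost_per_pixel_s
    return int(budget_s / pass_time)


full_hd = 1920 * 1080
# All pixels active, 1% still active, everything converged.
for active in (full_hd, full_hd // 100, 0):
    print(f"{active:>9} active pixels -> {passes_in_budget(active, 10.0)} passes in 10 s")
```

With everything converged, only the fixed overhead remains, so the displayed sample count can climb by orders of magnitude in the same wall-clock time.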

Also, it looks like 0 is not infinite anymore: 16M samples sounds “infinite enough” for the devs.

16 million (16,777,216 to be exact) is 2^24. I believe Cycles has always had that value as the limit, but it was hidden from the user unless you tried to type in a sample count higher than that or to “infinitely render” past it. The fact that the number is a power of 2 suggests it’s not some random number picked by someone but a limit defined by how the value that describes the number of samples is stored.
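One plausible explanation, and it is only an assumption since nobody in this thread confirms how Cycles stores the count: a 32-bit IEEE 754 float has a 24-bit significand, so 2^24 is the point up to which every integer is exactly representable. Past it, adding one more sample no longer changes a float32 value. The sketch below round-trips integers through float32 using Python’s standard `struct` module; `to_float32` is a hypothetical helper name.

```python
import struct

LIMIT = 1 << 24  # 16,777,216, the sample cap discussed above


def to_float32(n):
    """Round-trip an integer through a 32-bit IEEE 754 float."""
    return struct.unpack("f", struct.pack("f", float(n)))[0]


# Every integer up to 2**24 survives the round trip exactly...
assert all(to_float32(n) == n for n in range(LIMIT - 3, LIMIT + 1))

# ...but 2**24 + 1 cannot be represented and rounds back down to 2**24.
print(to_float32(LIMIT + 1))  # 16777216.0
```

So if a counter were ever accumulated in float32, it would get stuck at exactly this value, which would make 2^24 a natural hard cap.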

The latest Cycles X build for Linux is slower than Cycles in Blender 2.93.2.

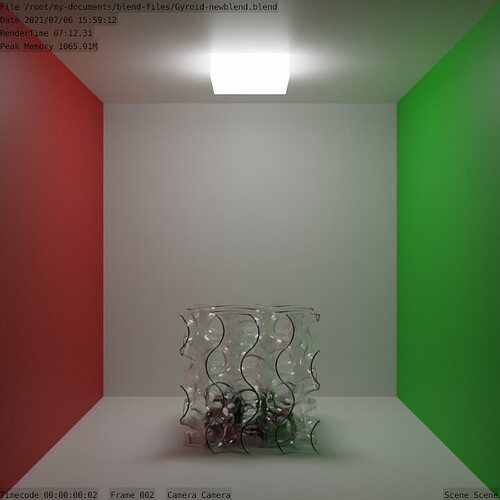

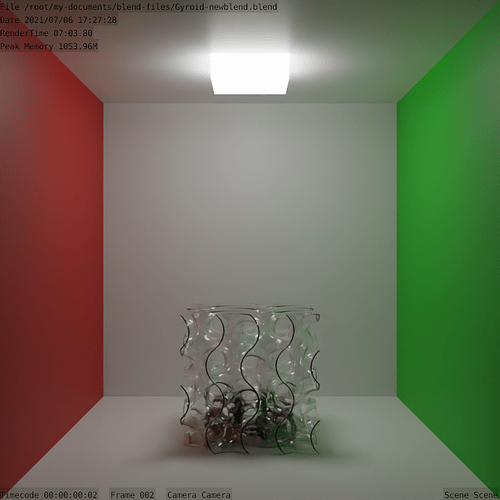

Render times on my old Intel CPU:

Cycles X 07:12.31

Blender 2.93.2 07:03.80
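For scale, the two timings can be compared directly. The small helper below (`to_seconds` is just an illustrative name) converts the MM:SS.ss timestamps to seconds. By this arithmetic Cycles X comes out about 8.5 s, or roughly 2%, slower on this CPU.

```python
def to_seconds(stamp):
    """Convert an 'MM:SS.ss' render time to seconds."""
    minutes, seconds = stamp.split(":")
    return int(minutes) * 60 + float(seconds)


cycles_x = to_seconds("07:12.31")     # 432.31 s
blender_293 = to_seconds("07:03.80")  # 423.80 s

# Relative slowdown of Cycles X versus Blender 2.93.2, in percent.
slowdown = (cycles_x / blender_293 - 1) * 100
print(f"Cycles X is {cycles_x - blender_293:.2f} s ({slowdown:.1f}%) slower")
```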

At the moment, CPU rendering hasn’t really been a focus of the performance work, which is probably why you’re seeing this kind of performance.

CPU rendering performance is approximately the same as before at this point, but the new architecture opens up new possibilities there as well.

Source: Cycles X — Blender Developers Blog

Yes, this announcement was made a few months ago and a lot could have changed since then, but I personally haven’t seen many, if any, changes focused on improving CPU performance.

Also, the same blog post states that the performance improvements are mainly aimed at modern devices. You say you have a really old CPU, so you MAY not see the same performance increases that other users see with more modern CPUs.

Early versions of Cycles X gave a noticeable boost on my CPU as well, over 30%. My CPU is old, but not so old that it would justify such a performance regression.

Regressions are also being discussed here, even with modern GPUs: Cycles-X - #470 by razin - Blender and CG Discussions - Blender Artists Community

With my very old CPU I got a boost of about 24-29% with Cycles-X. I’m using a Core i3-560 (about 11 years old) together with an NVIDIA GT 710.

Some test renders at 300 samples:

GPU: 28 s

CPU: 2 min 16 s

GPU+CPU: 1 min 45 s

Build: Cycles-X (…c1802), 7 July

Is anyone able to render at any resolution close to 4K?

None of the Cycles X builds I know of has been able to do it:

`Invalid value in cuMemcpyDtoH_v2( (char *)mem.host_pointer + offset, (CUdeviceptr)mem.device_pointer + offset, size) (C:\Users\blender\git\blender-vexp\blender.git\intern\cycles\device\cuda\device_impl.cpp:862)`

I am forced to go back to 2.93 every time a 4K render is needed.

I’ve just checked it on my system (Core i3-560 + GT 710). A render size of 4000x4000 works fine for me.

Edit: As far as I can see, it holds on to 4 GB of RAM even after rendering has finished. Memory leak?

In Cycles, the larger the tile size, the greater the memory usage. In Cycles-X we are using a single full-size tile, so the higher the output resolution, the higher the memory usage.

Nothing has been implemented yet to solve that problem in Cycles-X, but that is surely in the plans.
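To see why resolution matters so much with a single full-size tile, here is a back-of-envelope estimate. It assumes, as a simplification, that the render buffer stores a fixed number of 32-bit floats per pixel. The real count depends on the enabled passes, denoising data and device overhead, so the channel counts below are illustrative only, and `full_frame_buffer_mib` is a hypothetical helper.

```python
def full_frame_buffer_mib(width, height, floats_per_pixel, bytes_per_float=4):
    """Estimate the size of one float render buffer covering the region, in MiB."""
    return width * height * floats_per_pixel * bytes_per_float / (1024 ** 2)


# Hypothetical channel counts: 4 for RGBA only, more once extra passes are on.
for channels in (4, 16, 32):
    size = full_frame_buffer_mib(4000, 4000, channels)
    print(f"4000x4000 full frame, {channels} floats/pixel: {size:.0f} MiB")

# For comparison, a classic 256x256 tile with 32 floats/pixel.
print(f"256x256 tile, 32 floats/pixel: {full_frame_buffer_mib(256, 256, 32):.0f} MiB")
```

Memory grows linearly with pixel count, and a 4000x4000 frame has about 244 times as many pixels as a 256x256 tile, so whatever the per-pixel cost is, it gets multiplied by that factor.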

Yes, that is what I thought.

Here I have a GTX 1080 8GB and 32GB of system RAM.

Rendering Mem: 4060.03M, and Peak is 4060.03M as well.