Well… this is a compositor improvements thread… Cycles/baking ideas are probably better off in a different thread.

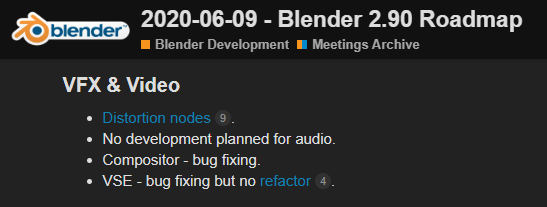

It’s from 2020-06-09 - Blender 2.90 Roadmap

Maybe the refactor can be done for 2.91? It’s not clear from these notes. It would already make sense to think about a 2.9+ and 3.0 roadmap at this point.

You mean like AOV? (Not sure if I understand correctly.)

Long-time VFX feature film compositor here (Shake, Nuke and Fusion background).

Wanted to chime in as I’ve been using Blender for a good while and the compositor has never been up to snuff for anything but slap-comps. I really want to support an open-source compositor. Bummed out by Natron’s demise.

In a feature film pipeline, a compositing app is at the very center for anything that will end up in an image. From ingest, to vfx conform, to texture creation and conversion, to dailies QT and slate generation, to export and packaging to client, to render targets, to tracking, to roto, to QC and to compositing.

A comp app no longer sits at the end of a render pipe. Its place is pretty fluid, and most companies have built their pipes around compositing apps.

Tools like Nuke Studio and DaVinci Resolve try to take it a step further by encompassing the VFX editorial portion too, but that’s beyond the scope of the compositor in Blender. Still, Blender does have a potential framework to compete here. I’d love for the VSE to be my number one VFX conform tool. But I digress.

For individuals and small teams, I see requests on blender.today about adding bloom, lens distortion, tone mapping etc. straight to the render. But the whole concept of a post-processing stack should happen in the compositor anyway. It’s the framework for developing anything that deals with and manipulates images.

For texture artists and lookdev, it’s all images and procedurals anyway, so why not tie the concepts of image manipulation and the toolset of the compositor into the texture generation process? Substance, anyone?

For VFX compositors, well, we need fast, interactive and flexible tools, where every operation can be a node.

But if we’re talking concrete examples, here’s why I’m not able to use the compositor for any film work right now:

Missing features

Deal breakers:

- Make masks nodes, they need to work in the same environment as comps. They are not just used for rotoscoping. Used all the time for integration, grads, color timing etc. Context is important!

- Interactive controls in the viewer (masks, transforms, widgets)

- Caching

- Tracking shouldn’t be a separate environment, we judge tracks (especially 2d) in context of images.

- Multichannel workflows

- Speed

- Scripting/expressions on knobs (expression based filepaths, knob linking etc)

- OCIO as nodes

- Custom image manipulation nodes (ie use OSL or something to manipulate images)

- Improvements to the viewer (gamma slamming, switchable LUTs in viewer, widgets etc)

Important features to have

- Multiple compositing graphs per scene, or have them independent of scenes.

- More granular/atomic nodes. (split nodes into more granular tasks, think POSIX)

- Read 3d data from blender. (ie import camera data, object position etc whatever value/property you want)

- Expose 2d and 3d transform matrices to user.

Nice to have

- Make VSE and compositor work together (send clip to comp, send comp to clip etc)

- Deep support (needs Cycles to support it first, though)

- Optical flow tools (OpenCV?)

Other notes

The OpenFX platform is stale, and should NOT be used as a target. Its original developers and maintainers (The Foundry) fled from their own standard when they acquired Nuke. While it’s nice to have OFX compatibility, it should not be looked at as a potential framework.

I’m not interested in Blender just copying existing programs, but keep in mind that compositing as a platform is very much a stable and mature concept. The only innovation seen in Nuke and Fusion is in minor UI/UX improvements and in the fields of AI/ML.

There’s a lot of potential in making the compositor a one-stop shop for anything image related.

Thanks for reading

I’d add easy use of the compositor for image editor filters to that list, but the image editor needs to be improved to be a real image editor first: proper support for multilayered images, utility layers and such. Overall, Blender could use more integration between its editors.

All in all totally agree with your post.

Another major issue that is a deal breaker for serious composite work is the lack of time-related tools. Holding frames and referencing other frames in the tree for various temporal tasks are must-haves.

++ Holding frames, especially in the Loader nodes. Also, setting them to loop would be nice.
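To make the hold/loop request concrete, here is a minimal sketch of the frame mapping a loader-style node would need. The function name `source_frame` and its parameters are made up for illustration and are not an existing Blender API; it only assumes that source frames are numbered from 1.

```python
def source_frame(timeline_frame, clip_start, clip_length, mode="hold"):
    """Map a timeline frame to a 1-based frame of a clip.

    Hypothetical helper, not part of Blender.
    mode="hold": frames before/after the clip repeat its first/last frame.
    mode="loop": the clip repeats endlessly in both directions.
    """
    if clip_length < 1:
        raise ValueError("clip_length must be at least 1")

    # Offset of the requested frame relative to where the clip starts.
    offset = timeline_frame - clip_start

    if mode == "hold":
        # Clamp to the valid range so the ends are held.
        offset = max(0, min(offset, clip_length - 1))
    elif mode == "loop":
        # Python's % is always non-negative for a positive divisor,
        # so frames before the clip start wrap around correctly.
        offset %= clip_length
    else:
        raise ValueError("mode must be 'hold' or 'loop'")

    return offset + 1
```

With a 5-frame clip starting at timeline frame 10, `source_frame(20, 10, 5)` holds the last frame, while `source_frame(15, 10, 5, mode="loop")` wraps back to the first one.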

+1 for time related UI improvements. For example there are differences between the way the VSE represents shot start and end and the way the Image/movie node does. If we can’t “send” clips between VSE and Comp, perhaps we could more easily send Metadata like clip start/end, speed, reverse etc.?

I imagine at least a dynamic link between assets so that they play in a synchronised way. So you could have a VSE timeline view below the compositor, so that you could slide and trim the clips as required. You could visualise the active asset (strip) by isolating it in the VSE preview monitor. This is much quicker than caching from the Preview node, at least for line up and timing to sound.

Are you guys aware of this re-coding of the compositor project?

“This is an experimental and unofficial Blender branch (https://www.blender.org/). Its main goal is to improve the performance of the compositor. It may tackle some of the objectives described in this task: https://developer.blender.org/T74491 . But probably not in the same way as proposed.”

Experimental builds can be found at GraphicAll, e.g. the Windows build here:

Hi all,

Can you have a look at “Compositor Improvement Plan: relative space”?

Need your feedback on this topic.

I am a bit late to the party and I am new here. Please forgive me if my etiquette is not aligned with the community’s (feedback is much appreciated). I am relying on Blender heavily in my research. It is by far the “BEST” tool ever. The one thing I desperately need in the compositor is for multiple Viewer nodes to output to different images. Currently (Blender 2.90) only the active Viewer node outputs to the image editor. I use multiple AOVs and wish to access their output from memory, or at least from the image editor.
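For context, this is roughly how the single active Viewer result can be read from memory today. It is a sketch that assumes the viewer result is exposed as the image datablock named "Viewer Node" and that `Image.pixels` is a flat float array, which is how I understand the 2.9x Python API. `pixels_to_rows` and `read_viewer_pixels` are helper names invented here, and the `bpy` import is kept inside the function so the reshaping part also runs outside Blender.

```python
def pixels_to_rows(flat, width, height, channels=4):
    """Reshape a flat pixel sequence into rows of per-pixel tuples.

    Rows are returned in storage order (as far as I know, Blender
    stores the bottom row first).
    """
    expected = width * height * channels
    if len(flat) != expected:
        raise ValueError(f"expected {expected} values, got {len(flat)}")

    rows = []
    for y in range(height):
        row = []
        for x in range(width):
            start = (y * width + x) * channels
            row.append(tuple(flat[start:start + channels]))
        rows.append(row)
    return rows


def read_viewer_pixels(image_name="Viewer Node"):
    """Read the active viewer result; only works inside Blender."""
    import bpy  # imported lazily so the helper above stays usable elsewhere

    image = bpy.data.images[image_name]
    width, height = image.size
    # Copy once; indexing image.pixels element by element is very slow.
    flat = list(image.pixels)
    return pixels_to_rows(flat, width, height, image.channels)
```

Because there is only one such image, each additional AOV currently means re-connecting the Viewer node and reading again, which is exactly the limitation described above.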

Great community.

Thanks.

I don’t know if this thread is still active, but just in case I wanted to point out one of the main issues I have when rendering from the compositor, which is that the file output node does not output cryptomatte metadata in a useful way (it all gets mushed together into a single channel, and becomes unusable in 3rd party programs such as nuke).

I also wanted to point to this thread that I opened a while ago about how to make the passes’ naming more flexible and practical (in my view), which I think may be of some use here.

Anyway, I hope the compositor gets some love! It has huge potential, but unfortunately it lacks a lot of the optimizations (and the features mentioned here) needed to be a production-level tool.

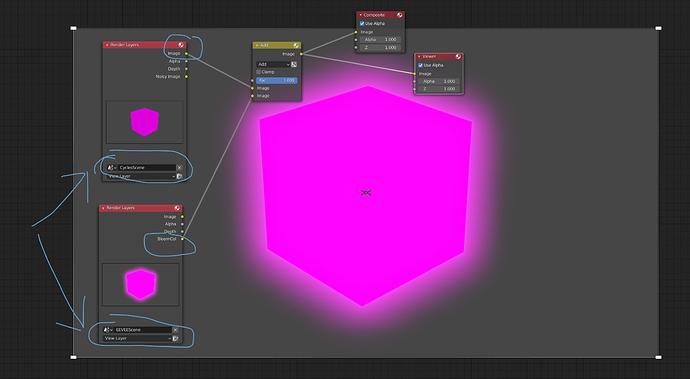

This sounds like using a second “Render Layers” node and setting the second one to another scene. This is possible right now. And of course you can use different render engines, for example to get the EEVEE bloom on top of a Cycles render.
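In script form, the second-scene setup looks something like the sketch below. It is written against the 2.9x-era Python API as I understand it (`scene.node_tree`, node type `CompositorNodeRLayers`); the function name and the scene name in the usage comment are made up for illustration.

```python
def add_render_layers_for_scene(comp_scene, source_scene):
    """Add a Render Layers node to comp_scene's compositor tree that
    reads its passes from source_scene.

    comp_scene and source_scene are expected to be bpy.types.Scene objects.
    """
    # The compositor node tree only exists once nodes are enabled.
    comp_scene.use_nodes = True
    nodes = comp_scene.node_tree.nodes

    node = nodes.new(type="CompositorNodeRLayers")
    node.scene = source_scene  # point the node at the other scene
    node.label = "Render Layers: " + source_scene.name
    return node


# Usage inside Blender ("Scene.001" is a hypothetical scene name):
#   import bpy
#   add_render_layers_for_scene(bpy.context.scene, bpy.data.scenes["Scene.001"])
```

The new node still has to be combined with the main render, for example through a Mix or Alpha Over node, and each source scene is rendered with its own engine settings.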

There are new builds up of the Compositor Up fork which includes many improvements. The latest adds a Camera View node and Auto-Compositing.

Download: GraphicAll — Blender Community

wow. A Camera View Node is fantastic news.

whaaaat?

This is amazing news!

Just bumping this thread to express my hopes to have all these improvements into master.

Any chance?

If this could be reviewed and merged into master, that would be awesome. He has done some great work.