Spectral rendering is clearly the future.

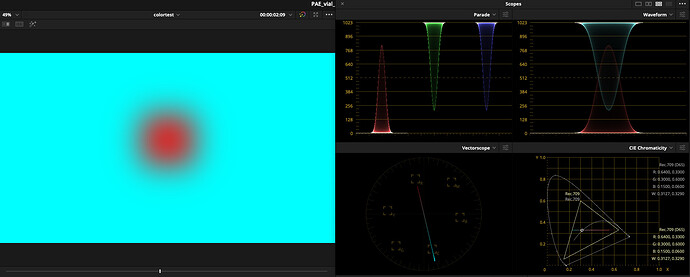

However, getting light data out and into an image is an even larger problem there, one that has yet to be “solved” even with BT.709 / sRGB based lights! It’s an open field seeking solutions, with most approaches stuck in the bog of gaudy-looking digital RGB output.

This is a fantastic observation that leads to a subsequent one: digital cameras are vastly different to what we had with film cameras. Digital cameras capture light data. Film cameras formed imagery.

That might seem like a foolish semantic twist at first, but once you dip your toe into the background, it’s sort of a mind ripping observation.

Light data is just what it says… it’s just boring emission levels. A digital sensor captures spectral light, and then some crappy math is applied to modify the levels so that they form a math-bogus-fit set of lights that will sort of, kind of, almost generate the stimulus of the light it actually captured.
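To make the “it’s just emission levels” point concrete, here is a toy sketch of that pipeline: a spectrum is integrated through three fixed channel sensitivities, then a 3x3 fit matrix is applied. Everything in it is an assumption for illustration: the five-band sensitivities, the matrix values, and the function names are made up and do not describe any real camera.

```python
# Hypothetical 5-band spectral sensitivities, one row per sensor channel.
# These numbers are invented for illustration only.
SENSITIVITIES = [
    [0.02, 0.05, 0.20, 0.80, 0.60],  # "red" channel
    [0.05, 0.40, 0.90, 0.30, 0.05],  # "green" channel
    [0.70, 0.60, 0.10, 0.02, 0.01],  # "blue" channel
]

# Hypothetical 3x3 fit matrix applied after capture (also invented).
FIT = [
    [1.60, -0.45, -0.15],
    [-0.30, 1.50, -0.20],
    [-0.05, -0.40, 1.45],
]


def capture(spectrum):
    """Integrate a 5-band spectrum through each fixed channel filter."""
    return [sum(s * p for s, p in zip(row, spectrum)) for row in SENSITIVITIES]


def apply_fit(rgb):
    """Apply the 3x3 fit matrix to the three captured levels."""
    return [sum(m * c for m, c in zip(row, rgb)) for row in FIT]


def sensor_to_rgb(spectrum):
    """Spectrum in, three emission levels out. Nothing more."""
    return apply_fit(capture(spectrum))


if __name__ == "__main__":
    spectrum = [0.2, 0.5, 0.9, 0.4, 0.1]  # hypothetical scene spectrum
    print(sensor_to_rgb(spectrum))
    print(sensor_to_rgb([2.0 * p for p in spectrum]))
```

Because both steps are linear, doubling the light simply doubles all three output levels. That is the sense in which the result is still light data and not yet an image.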

But in the end, it’s just dull light data. It’s worse than a render using RGB light!

The problem is still turning that light data into an image. And again, this is where literally all digital approaches absolutely suck, and virtually all of them are similar if not identical.

Creative negative print film, on the other hand, took spectral light data and transformed it into a fully fledged image. Spectral light would degrade the dye layers along the entire range of the medium, leading to tremendous tonality rendering. This is the unicorn I’ve been chasing for years!

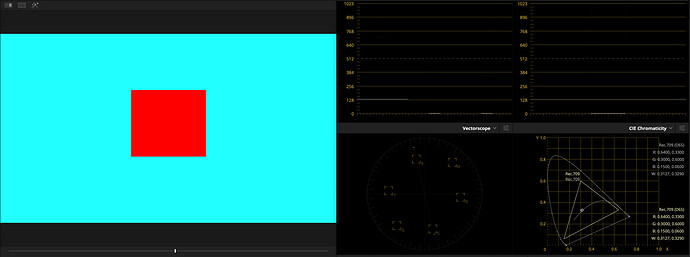

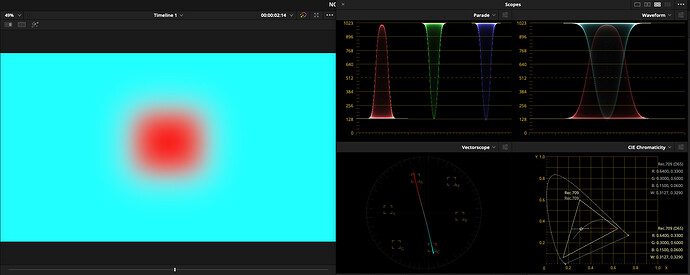

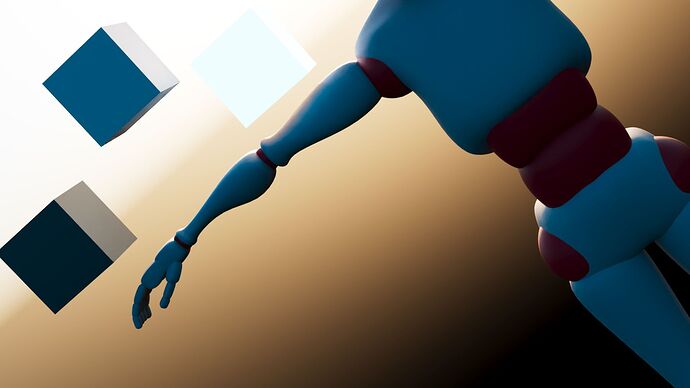

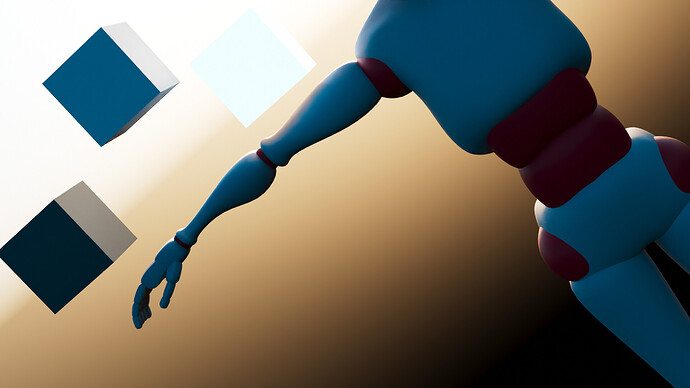

Compare the two stills from the How Film Works short, which is a pretty solid little introduction.

Ignore the boring “hue” differences, and pay close attention to tonality.

Notice how in the digital RGB sensor capture, all we have is a variation of the emission strength, and the chroma ends up as just a massive wash of … similar chroma. Compare that to the colour film, where the chroma is degrading along the entire run of the stock: we get huge tonality due to the ability to degrade to greater levels of “brightness”.

Look at the helmet! The differences are striking!

Which “image” shows the detail and nuance of the helmet surface better?

So in the end, we have two radically different mediums, one of which, to this day, simply destroys our current digital mediums because engineering has never focused on getting from light data to an image:

- A _fixed_ filter set of varying emission levels.

- A variable filter set with fixed emission. The variable filters vary emission and chroma.
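The difference between the two can be sketched with a toy model. This is only an illustration under loud assumptions: the dye response curve, the `d_max` and `mid` parameters, the crude saturation measure, and the sample colour are all invented here and are not measured from any film stock or sensor.

```python
def additive_rgb(scene_rgb, exposure):
    """Fixed filters, varying emission: each channel level simply scales.

    No clipping or tone curve is applied; this is bare light data.
    """
    return [exposure * c for c in scene_rgb]


def subtractive_rgb(scene_rgb, exposure, d_max=3.0, mid=0.18):
    """Fixed emission behind variable filters (toy print-dye model).

    d_max and mid are made-up parameters. More exposure leaves less dye,
    so each channel's density falls toward zero and the fixed light
    passes through closer to white.
    """
    out = []
    for c in scene_rgb:
        x = exposure * c / mid
        density = d_max / (1.0 + x)     # smooth fall-off with exposure
        out.append(10.0 ** (-density))  # transmittance of the fixed light
    return out


def saturation(rgb):
    """Crude chroma proxy: (max - min) / max."""
    hi, lo = max(rgb), min(rgb)
    return (hi - lo) / hi if hi > 0 else 0.0


if __name__ == "__main__":
    scene = [0.8, 0.2, 0.1]  # hypothetical reddish surface
    for stops in range(0, 9, 2):
        e = 2.0 ** stops
        print(
            stops,
            round(saturation(additive_rgb(scene, e)), 3),
            round(saturation(subtractive_rgb(scene, e)), 3),
        )
```

In this toy, the additive column never moves: more light is just more of the same chroma. The subtractive column falls toward zero as exposure climbs, so emission and chroma change together, which is the behaviour described for the variable filters.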

We should have been looking at image formation, not colour management.

I’d argue the opposite: trading off the physical facets for a high-quality image.

Nowhere in that disingenuous document is there anything to do with image formation, so it is unsurprising that after a decade, it is a mess. M. Uchida’s early work from Fuji has long since been left behind. It’s embarrassing.

Many of the grandiose claims made have been countered rather strongly by industry veterans: Color_Management_TCAM (Google Slides)