But you can still do that by setting the OCIO config to point to the official OCIO/ACES config file, no? That’s how we do it. We can do all the ACES transforms just fine, including on float images.

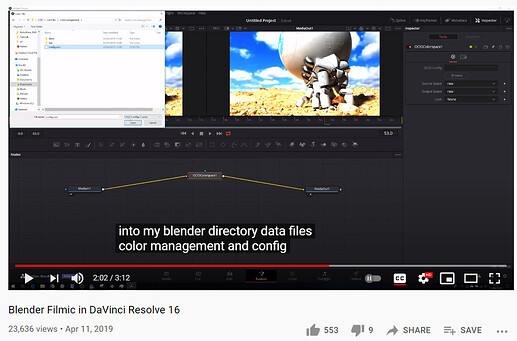

But it breaks many of Blender’s own systems if you use the official config. He told me that many variables in Blender’s OCIO config are not used in the ACES one, and many things in the ACES config do not match what Blender’s OCIO config is doing. The result is that you need to manually pick Util sRGB or Util Linear from a long, long list every time you import an image. Once you make sure the ACES config is aware of what Blender is doing, images are automatically assigned sRGB or Linear depending on their bit depth. He mentioned in his video that using the official ACES config also makes the color wheel go crazy when adjusting saturation: the hue value moves on its own. He said the problem was fixed after he made sure the ACES config matched what Blender is doing.

He made it very clear that the official ACES config was not made for Blender, so it does not automatically fit in when you simply replace Blender’s config. Some refactoring needs to happen, and that was exactly what he did.

Have you tried the config I posted? You should really try it before commenting on it. I posted a link here:

This is absolutely incorrect. And your friend has an error already on nearly the first line.

This is exactly why it’s a bad idea.

Read the part where I point out over and over and over again that Blender isn’t properly managed, and it simply isn’t a priority. This isn’t knocking Blender specifically either – there are plenty of DCCs that completely poop the bed.

But in the end, this ain’t a good idea. It ends up being these half baked hacked configurations that have issues.

If it assumes colorspace based on file extension or just bit depth then count me out.

Come on, that’s what official Blender is doing. Try importing an HDRI and you will see it is automatically flagged as Linear. Import a JPG image and it is automatically flagged as sRGB. Like I said, he did not modify anything, he just made sure the ACES config uses Blender’s own system!!!

I am not 100% sure about this, but here is how I understand it. Blender uses a scene-referred linear workflow by default, so every image marked sRGB gets linearized. An HDRI is already linear, so if you import one and mark it sRGB it would be linearized a second time, which is wrong. HDRIs therefore need to be flagged as Linear on import to tell Blender the image is already linear (official Blender does this automatically). Raw and Non-Color are basically the same thing: they tell Blender not to do anything to the image, and they need to be assigned manually.
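To make the "linearized twice" point concrete, here is a minimal sketch using the standard piecewise sRGB decoding curve. The function name and the sample value are made up for illustration; this is not Blender's or OCIO's actual code path.

```python
def srgb_to_linear(v: float) -> float:
    """Decode one sRGB-encoded channel value to linear light (standard piecewise curve)."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


# An 8-bit texture stores sRGB-encoded values, so decoding it once is correct.
jpg_pixel = 0.5
decoded_once = srgb_to_linear(jpg_pixel)

# Flagging already-linear data as sRGB runs the same decode a second time,
# which pushes the value further down and changes the image.
decoded_twice = srgb_to_linear(decoded_once)

print(f"decoded once:  {decoded_once:.4f}")
print(f"decoded twice: {decoded_twice:.4f}")
```

The second decode is what a wrongly flagged HDRI would go through, which is why float images need to be tagged as Linear.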

assumes colorspace based on file extension or just bit depth

So basically that is what Blender has been doing.
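As a rough illustration of that behaviour, the sketch below picks a default colorspace from role names. It assumes Blender's bundled config maps `default_byte` and `default_float` roles to colorspaces; the dictionaries, the helper function, and the second config are hypothetical stand-ins, not a real OCIO API.

```python
from typing import Optional

# Role names assumed to match Blender's bundled OCIO config.
BLENDER_STYLE_ROLES = {
    "scene_linear": "Linear",
    "default_byte": "sRGB",
    "default_float": "Linear",
}

# Hypothetical config that never defines the two default_* roles.
CONFIG_WITHOUT_DEFAULTS = {
    "scene_linear": "ACES - ACEScg",
}


def default_colorspace(is_float_image: bool, roles: dict) -> Optional[str]:
    """Return the colorspace assigned on import, or None if the role is missing."""
    role = "default_float" if is_float_image else "default_byte"
    return roles.get(role)


print(default_colorspace(False, BLENDER_STYLE_ROLES))     # 8-bit JPG
print(default_colorspace(True, BLENDER_STYLE_ROLES))      # float HDRI
print(default_colorspace(True, CONFIG_WITHOUT_DEFAULTS))  # nothing to fall back on
```

When the role is missing there is no automatic choice, which would match the "pick it manually from a long list" experience described earlier in the thread.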

What he meant was that images shot by different cameras have different profiles, but they are all automatically marked as sRGB in Blender. Without the camera profiles, the images do not display the way they should.

I am basically on your side when it comes to ACES @troy_s, but I cannot find an argument against his opinions either. That’s why I am asking for your opinion. As I mentioned, he is very influential in China; he is like the Chinese Blender Guru. He released this config and a lot of people decided to use it. I think this might be a problem, but I failed to convince him.

Let it rest. It’s a broken configuration.

I could not think of something of smaller importance here in the big picture. It really is so insignificant, and addressed with some OpenColorIO V2 functionality, that it is not worth wasting keystrokes over.

Again, for those at the back, and ignoring the aesthetic asstastic handcuffs that come with ACES:

- Blender is not pixel managed enough to deal with wider gamuts.

- Blender still contains legacy code that inhibits proper manipulation of radiometric-RGB values.

- Blender does not have the proper code in place to encode file formats correctly.

This is a shortlist of but three of many, many, many issues here.

Stop screaming. Do your homework. Learn what the issues are. Work to put pressure on Blender for proper management throughout. It could lead the way here, but is choosing not to because the general mean audience member simply does not care.

If this is what your friend thinks is an issue, you should stop listening to your friend.

Find people who know their shit.

I’m totally one of those who don’t understand enough about color and rendering to even discuss it. I think I understand what you post here, to some extent, yet I’m not at ease enough to argue about it, so I’m just giving my poor outsider’s point of view for what it’s worth. I guess a lot of users are somewhat like me: they do care about the subject in the sense that they are affected by it, but they won’t spend the time and effort to gain real expertise in it. (If they are even capable of it.)

The only thing I can tell is that people need to render stuff in Blender and send the result somewhere else, and it doesn’t look right from one piece of software to the next. And to my poor understanding, for the same reason that it’s a good idea for 3D software to have FBX I/O, Blender could use a color management system that other software often uses, and ACES is one of them.

I think I understand that Blender has issues not only with exporting its renders but also with how it deals with input images and with rendering itself?

Hello, I just came across this thread while looking for solutions.

I thought I’d just throw my 2 cents in on this, as it affects my workflow and wallet.

I create VFX and motion graphics for Fortune 500 companies, mostly for commercial advertising, and I run on contracts from one agency to the next, or several at once. I’ve put ACES on one version of Blender and keep another one stock so I can go back and forth between colorspaces. Some productions are absolutely ACES only: so many people touch these projects, and all the other software supports ACES, that it really is the backbone of color for the entire industry. The colorist will grade in ACES and pass plates to me, which I then have to match in 3D. I pass them back as EXRs with the same linear space in mind, then they go off to the compositors in Nuke or Fusion, who all need to be in the same space, and then back to the colorist for final touches.

For smaller projects I typically send out Cryptomattes, or I render out ACES myself now that I have found out you can put it in Blender. The big problem is when I need to farm out. When that happens I can no longer use Blender, and I have to purchase a subscription to a third-party renderer and set my scene up for something a farm can handle. Having ACES built into the system like the other 3D programs would save me money and many hours of work.

Just thought I’d throw that out there for you guys to consider when talking about standards.

Edit: just to add, I see talk of hue skewing and gamut. ACES is doing what it’s supposed to in those areas. It’s set up to mimic film, with Rec.709 and others for Kodak standards; it’s more appealing to the eye to shift the hues like film does when more light is applied, so those hue shifts are there on purpose.

I’m well aware of the history of ACES. Just saying something is shit does not make it true.

" * Colors - Colors will desaturate as they are lit by brighter and brighter lights sources, just like a you as the human eye would sees perceives them in real life."

https://acescolorspace.com/

You’ve argued that because of a flaw, which I’ll remind you every person in the whole professional VFX industry has to deal with, Blender shouldn’t incorporate it. That’s a horrible argument.

Not to mention, a bad one if you actually look at how everyone gets around those flaws in all the other programs.

I am literally in Resolve 17 right now, grading in ACES, which takes the input device transform of the ARRI camera and wraps an output device transform set to Rec.2020 around it, on a major production, and it looks fantastic with no LUTs added. Your statement again is just laughable.

@troy_s your posts are being flagged because they clearly violate the forum guidelines (see name-calling and ad hominem attacks):

https://devtalk.blender.org/faq

Please participate in discussions more respectfully.

Bumping this thread in favor of having ACES, as an option.

Just because it’s standard.

Or at least to have SOME way to deal with color accuracy. Right now I’m exporting PNGs and eyeballing colors by hand until they look acceptable. But not too much, because they break…

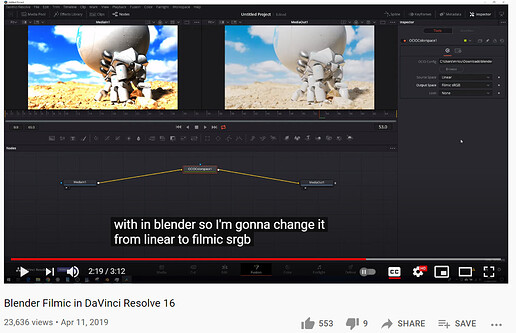

I use Resolve 17 for editing and coloring, and you just can’t use Blender’s EXRs because no color transform works and the renders end up unusable.

To integrate Blender into wider pipelines we need to be able to export images in some wider colorspace and gamut, that leaves room for color grading, and that transforms to other colorspaces in a predictable and usable way.

I’m no color scientist, just a user with huge problems using Blender renders outside Blender.

A suggestion would be try to use EXR as much as possible. EXR is one of those amazing formats that most people just don’t really think of using. People should really use it more.

Nope. Read my comment again. I did try to use EXRs, of course.

But EXRs in Filmic or Filmic Log are unusable, at least in Resolve. They don’t respond to any color transform, because they don’t follow any colorspace/gamut/gamma known to humanity outside of Blender.

ACES may be imperfect, like some said, but it gives you a known standard that enables accurate color transforms and lets you match colors and integrate the renders better.

I ended up using PNGs (which break easily) because it’s the only way to get in Resolve what I see in Blender.

With EXRs inserted into a wide gamut color-managed pipeline, I get horribly clipped, unusable garbage that cannot be brought anywhere near what I see in Blender. I tried to read them using almost all color profiles and there’s no match. All of them destroy the data.

I’ll try to come up with a few tests of what I’m saying.

I love Blender, and I love this community (you don’t get to express your opinions like this to aut*desk!), that’s why I switched many years ago (from Lightwave/Modo), but sometimes there’s some sort of closed-mindedness in the community, like a sort of “This Is How We Do Things Here And Shut Up” attitude, that doesn’t help Blender or the community. The aim of this is to improve Blender, always.

If Blender wants to be a player in the “industry” you need to integrate Blender renders into existing pipelines, and today, that means (at least) ACES color compatibility.

For myself, I’d be happy to have ANY predictable colorspace/gamma (ARRI/Log-C, V-Gamut/V-Log, whatever) as long as I’m able to transform it the right way in Resolve. ACES has the bonus of opening the whole wide world of VFX to Blender users.

Peace

This sounds strange. EXR saves scene-referred linear data, which means no transform has ever been applied to it. If you import it into other software that can also apply the Filmic transform, your result should look identical to the one in Blender.

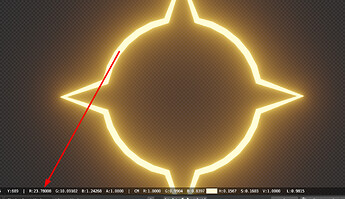

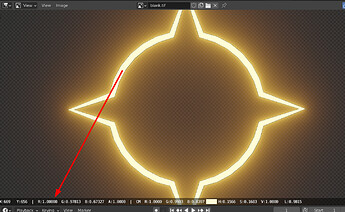

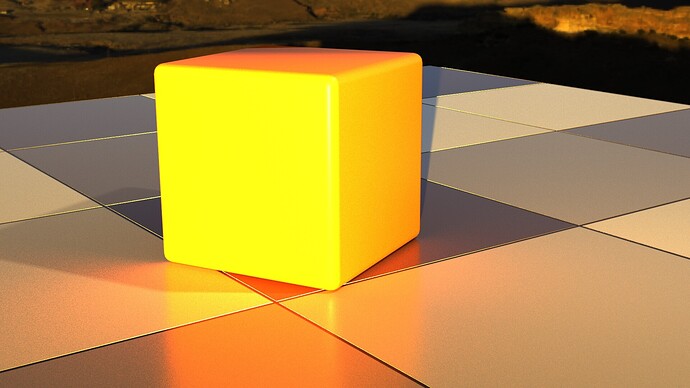

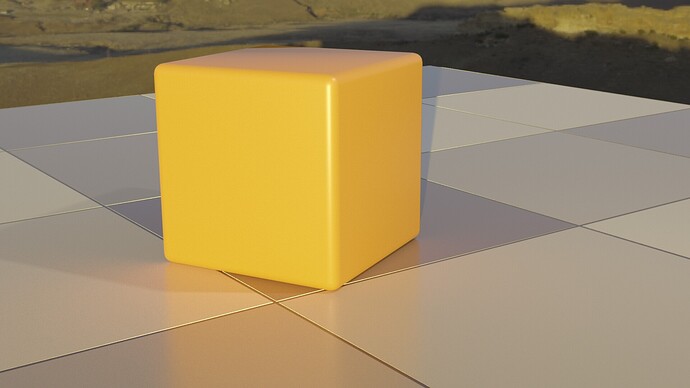

This is the data saved by EXR (filmic transform is done in the software but not in the image file itself, kind of like a modifier on an object, non destructive):

VS the data post transform (filmic transform applied to the image data itself, like the modifier has been applied, destructive. The view transform in Blender needs to be “Standard” to avoid applying the same thing twice):
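The modifier analogy can be sketched in a few lines. The tone curve below is a simple `x / (1 + x)` stand-in chosen only because it is easy to read; it is NOT Blender's Filmic transform, and all names are illustrative.

```python
def view_transform(x: float) -> float:
    """Stand-in tone curve, x / (1 + x). Not Blender's Filmic."""
    return x / (1.0 + x)


# Scene-referred linear values, as an EXR would store them.
scene_linear = [0.18, 1.0, 4.0]

# Non-destructive: the view is computed for display, the stored data is untouched.
viewed = [view_transform(v) for v in scene_linear]

# Destructive: the transformed values are what gets written to the file.
baked = list(viewed)

# Viewing the baked file through the same transform applies it twice.
double_applied = [view_transform(v) for v in baked]

print("scene linear:  ", scene_linear)
print("viewed once:   ", [round(v, 3) for v in viewed])
print("applied twice: ", [round(v, 3) for v in double_applied])
```

The last list is darker and flatter than the second one, which is the reason for switching the view transform to “Standard” when looking at a file that already has the transform baked in.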

That’s Blender’s view of the data. And the point with ACES is not what Blender sees, but what all the other steps in the pipeline CAN see and understand.

And Resolve is not able to understand these linear or log EXRs.

Here are a few tests:

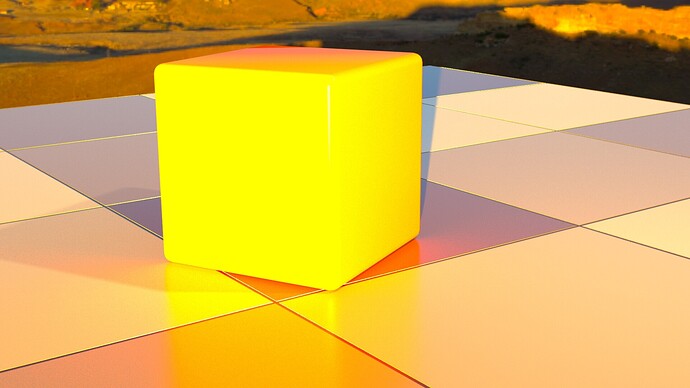

Original image exported from Blender as PNG.

Same PNG file placed in DaVinci Resolve, no transforms or grading.

So far so good. This looks right, but its grading capabilities are veeery limited, and it breaks at the slightest color push.

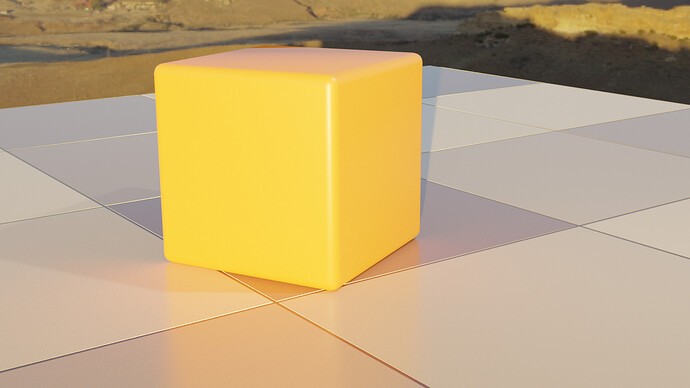

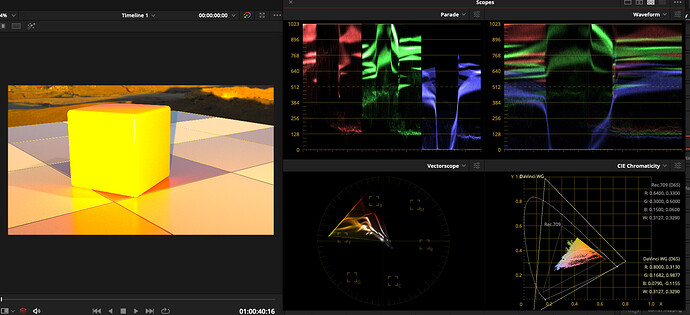

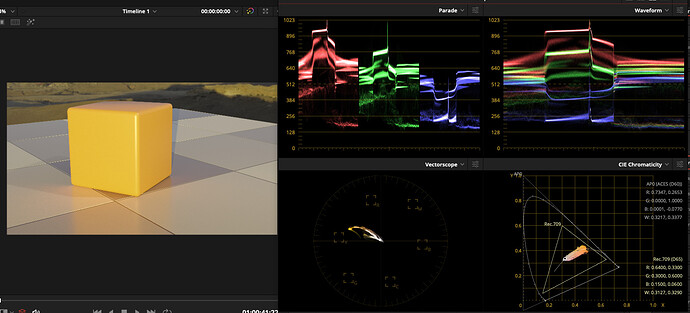

Filmic EXR, placed in Resolve, input as Linear, no grading.

This is the same file with the scopes. The clipping is visible, not only in latitude, but in color gamut as well.

If I try to recover the clipping by pushing the grade, the image breaks, the data is unrecoverable:

(latitude compressed in curves)

(and saturation reduced)

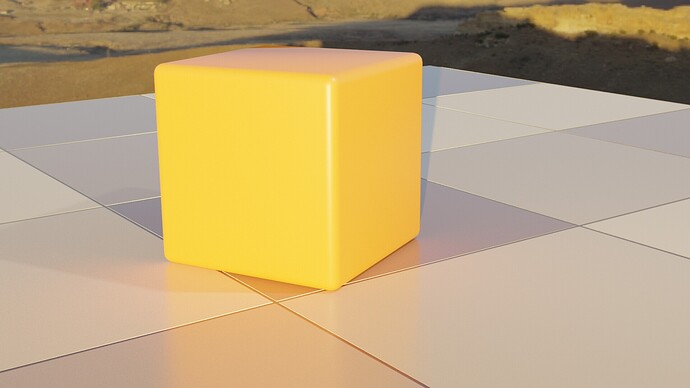

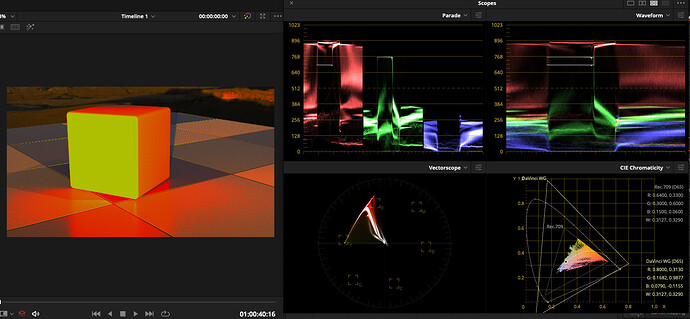

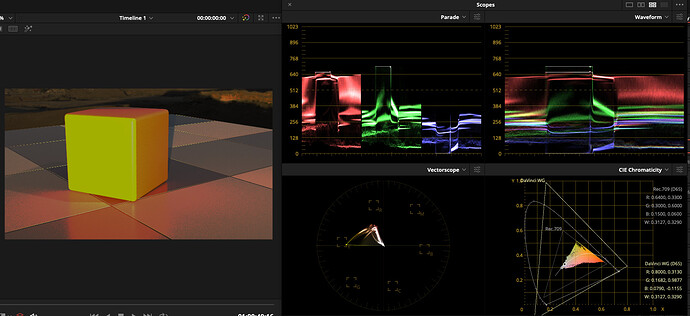

And this is the same scene, exported to EXR from Blender with the ACES-patched OCIO config:

(This is the ACES EXR, uncorrected, as it’s placed in Resolve.)

And this is the same image, with the highlights and saturation corrected manually.

It can be clearly seen that no data is clipping, either vertically or out of gamut. All pixels fit gracefully inside Rec.709.

I think this should be reason enough to take ACES seriously. I think it should be available as an option for those who need it.

If there’s some input profile that handles the default Blender EXRs nicely, I couldn’t find it. Maybe it’s simply not documented. But being a growing standard, I think it’s the way to go.

Netflix is switching its pipeline to ACES…

EXR is scene referred linear while PNG is display referred and not linear. When using PNG, the filmic transform is baked in the file so no need to apply any more transform.

This is because it has already been transformed from scene referred (not limited to 1) to display referred (maximum 1). A lot of information is lost, which makes it unsuitable for grading.

It is of course outside of the display range. The Filmic transform is not baked into the file, so you need the software to apply the transform to keep it from clipping.
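A small sketch of why the baked, display-referred file cannot be graded back: once values are forced into the 0 to 1 range and stored as 8-bit codes, different scene intensities become the same number. For simplicity this ignores the transfer function and the view transform's tone curve (which compresses highlights rather than hard-clipping them); the function names are illustrative.

```python
def encode_8bit(value: float) -> int:
    """Clamp a value to the display range [0, 1] and quantize to an 8-bit code."""
    clamped = min(max(value, 0.0), 1.0)
    return round(clamped * 255)


def decode_8bit(code: int) -> float:
    """Turn an 8-bit code back into a 0..1 float."""
    return code / 255


# Scene-referred values are not limited to 1.0.
scene_values = [0.18, 1.0, 4.0, 16.0]

codes = [encode_8bit(v) for v in scene_values]
recovered = [decode_8bit(c) for c in codes]

print("8-bit codes:", codes)
print("recovered:  ", [round(v, 3) for v in recovered])
```

Everything at or above 1.0 ends up as the same code, so no amount of grading can separate those highlights again. A float EXR keeps the original values, which is why it is the better source for grading as long as the receiving software knows how to interpret it.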

I might be wrong but I don’t think EXR cares about whether you use ACES or Filmic. It saves the scene linear data that was before the ACES or Filmic transform. I might be wrong though.

I don’t understand why this would be the case. I think I need to ask @troy_s . If I understand correctly, EXR saves the scene linear data before the color transform so whether you use Filmic or ACES should not matter, right? But why is it different here?

Of course, that’s what I’m saying.

And how do you do that?

Resolve is designed to do grading. It works in the biggest gamut in existence, bigger than XYZ. If there’s data, Resolve is able to pull it out.

Remember that this EXR is the same frame from the same scene, rendered with the same settings as the ACES one. The only difference is the OCIO profile, Filmic vs ACES… no magic or tricks there.

Eppur si muove…

I’m just illustrating what happens in the real world with “almighty-BlenderFilmic-EXRs” vs the “all-awful-broken-unusable-deprecated-outdated-and-hateful-ACES-EXRs”. The ACES ones work, the Filmic ones don’t.

I don’t have stocks in ACES, I don’t earn money by making all these tests, I’m just pointing out the problems in native Blender Filmic EXRs.

I’m also not a developer, nor a color scientist, I can’t say why this happens, just that it happens, against all theory.

And I provided the data I have, screen captures and scopes. There’s not much more I can do.

If someone knows how to make Blender EXRs behave properly outside Blender, just tell us the proper workflow.

[edit: typo]