That might be the reason… especially the graphics card… Next may be using some things that are calculated faster on the RTX series.

We have an RTX 4090 with 128 GB of RAM and, depending on the scene, we do experience significantly more lag and longer render times compared to Legacy. So for us it’s not the graphics card. The same scene has increased lag time in Next (1 to 2 seconds in Legacy vs 5 to 8 seconds in Next) and renders slower, much slower (we have a scene that renders in 39 seconds in Legacy but 2 min 57 sec in Next).

This seems to be scene dependent though. We do of course have scenes that respond better than others (e.g. render time is 29 seconds in Legacy vs 49 seconds in Next, with lag times differing by about 1 to 2 seconds), so in cases like this the increase in render time is not so bad. The main offenders for us are scenes with larger and more detailed backgrounds. These scenes already render slower than others in Legacy, as expected, but their render times in Next seem to be disproportionately longer. We have adjusted the various settings as suggested by the helpful tips in this thread, and we even set up new lighting, but it did not help for us. We work with characters that have a lot of fur / hair and SSS (e.g. animal characters), so we are not sure if this is a factor or not. More experiments are needed on our side of course. Regardless, the increase in visual quality does not justify the huge increase in render times for our use at the moment.
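To put the two timings above side by side, here is a quick bit of arithmetic in Python. The scene labels and helper names are made up for illustration; only the render times come from the post.

```python
def to_seconds(minutes, seconds):
    """Convert a minutes/seconds render time to plain seconds."""
    return minutes * 60 + seconds


def slowdown(legacy_s, next_s):
    """How many times longer the Next render takes than the Legacy one."""
    return next_s / legacy_s


# (Legacy seconds, Next seconds) as quoted above; labels are placeholders.
timings = {
    "scene A": (39, to_seconds(2, 57)),
    "scene B": (29, 49),
}

for name, (legacy_s, next_s) in timings.items():
    print(f"{name}: {slowdown(legacy_s, next_s):.1f}x")
# scene A: 4.5x
# scene B: 1.7x
```

So the worse scene is roughly 4.5 times slower in Next, while the better-behaved one is under 2 times slower.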

For now, our studio is okay as we are on 4.1 and we will probably stay in this safe buffer zone for the next few productions. But eventually we will all need to move to 4.2 and above to take advantage of Blender’s other improvements (and there are lots and lots of improvements coming, it seems). Also, this is Eevee Next’s first release, so things will still improve I’m sure… for now we are playing it safe by downloading all the betas and experimenting/saving our files in eevee-next format. This way, we won’t get left behind to deal with backward compatibility issues later.

…And I was thinking of upgrading my machine… perhaps there’s no point then.

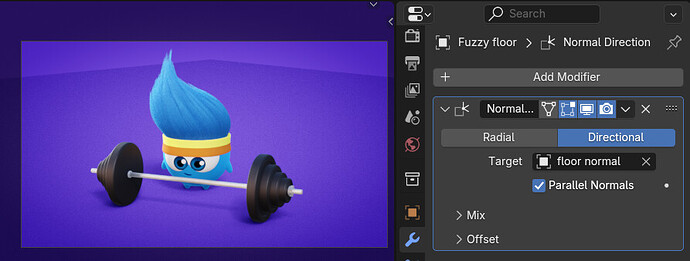

I don’t work much with hair and fur, I mostly do this type of thing: https://youtu.be/ytDlfkc911M?si=qQ_drIgxcrKO_8WO. This one, for example, took from 1 minute to about 1.5 minutes per frame on Legacy… I do use many volumes and geometry nodes crowds, but always in a cartoon style.

This one was made almost 2 years ago and it’s publicly released, so perhaps tomorrow I’ll give it a look and do a side by side comparison to see how the speeds go.

OMG. The video. It’s so cute.

Please do a comparison if you have a chance. That will be helpful. You mentioned in your case that sometimes next is faster…interesting. We are too deep into production using legacy so I don’t think the team will change anytime soon, but we will need to prepare for eevee-next sooner or later…so any info will be helpful.

This is the official video: https://youtu.be/JfXOpDYPn3M?si=hc_6nM6brDrR59UF and it’s the property of BoomLand Games. The frame is at about 0:42.

When rendered in:

Eevee Legacy (0:42):

Eevee Next (0:36):

So… faster you see… the exact same file, did nothing, just pressed render.

Edit:

There’s a bug in the memory counter.

Oh…so no change in setting. Just hit render… and your next render seems a lot more vivid too…

What kind of lighting are you using? Are there lots of lights?

The set looks big… so I guess it can’t be the size. But ours is indoors, with large indoor structures (I wonder if this will be a factor).

You have particles happening there it seems too.

So many things to figure out…I don’t know what we have to do with our scenes…but I’m sure we’ll figure it out…

We have never had a next scene render faster than legacy so I’m surprised…

I have everything on it, from particles to mist to sand, transparency (several layers), geometry nodes scorpions, etc… everything except hair particles actually.

The terrain is about 1 km, with 4 lamps on it and 3.5 million triangles.

… I mostly have similar times on Next, when not faster.

I do know exactly what Blender likes tho… I started in 2000 with version 2.01.

Yeah… all that vividness, I don’t have a clue where it comes from. It’s a lot sharper and more saturated.

Thanks for the info and comparison.

Your Next render is more vivid. It depends on what you prefer, but the Next render looks really good for this style. Our Next renders somehow have less contrast and appear lighter overall. Not worse, not better, just different. A few members mentioned contact shadows somewhere earlier in the thread… we are trying to see if we can achieve something similar to contact shadows in Next. That sometimes makes a big difference with hair/fur, it seems, and we have lots of it in our projects.

We switched to Blender around 2015ish, so we are relatively new users, still lots of figuring out to do.

And I thought I badly needed the old Bloom options… Yours is OD’d on bloom.

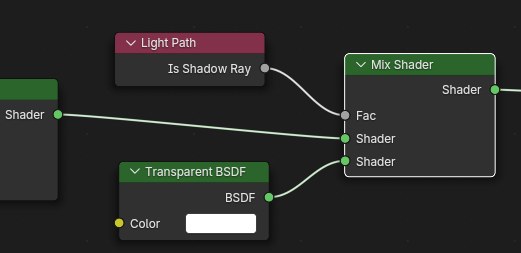

I just love Bloom… especially Legacy Bloom… but on my recent animations I’ve been using the compositor one and it looks reasonable… a bit too high quality for my taste, I don’t know how to explain it, but it definitely doesn’t bloom like Legacy… or I’m not using the correct nodes.

Bloom Legacy is artist friendly, works great out-of-the-box, is easy to understand and easily accessible. Let’s hope its replacement will be worthy.

My worry is the possible need of the Compositor for Bloom. I’ve scripted an operator which sets up all the render layers with a Denoise node in compositing. I never have to open the compositor, nor enable it in the viewport (Denoise makes it too slow). And most of the time I have to work with Bloom enabled.

If I cannot work with Bloom while Compositor is disabled, I would have to script something that mutes everything except Bloom.
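A rough sketch of what such a "mute everything except Bloom" helper could look like. This is not the operator mentioned above, just an illustration: the function name and the `KEEP` set are made up here, and it assumes Bloom comes from a Glare node in the compositor tree. It only touches the standard `bl_idname`, `name` and `mute` attributes of nodes.

```python
# Node types left unmuted: the input, the Glare (assumed to provide Bloom)
# and the outputs. This set is an assumption; adjust it to your own tree.
KEEP = {
    "CompositorNodeRLayers",
    "CompositorNodeGlare",
    "CompositorNodeComposite",
    "CompositorNodeViewer",
}


def mute_all_but(nodes, keep_idnames):
    """Mute every node whose bl_idname is not in keep_idnames.

    Nodes in keep_idnames are explicitly unmuted.
    Returns the names of the nodes that ended up muted.
    """
    muted = []
    for node in nodes:
        keep = node.bl_idname in keep_idnames
        node.mute = not keep
        if not keep:
            muted.append(node.name)
    return muted


# Inside Blender 4.x (assuming the scene uses compositing nodes):
#   import bpy
#   mute_all_but(bpy.context.scene.node_tree.nodes, KEEP)
```

Muting a Denoise node just passes the image through, so the viewport compositor would only have to evaluate the Glare node.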

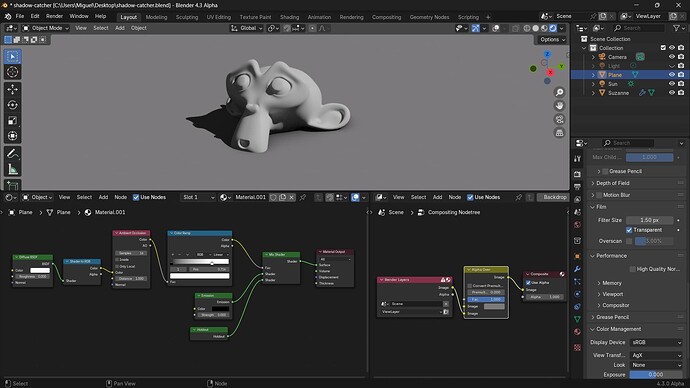

Thanks! I was looking for AO in the Shader nodes, so much for using the Search function…

With this, I can use either Dithered or Blended, depending on the desired result.

I only had to add the AO and Multiply node. The rest is meant to adjust value and contrast (can be done in the Floor Shadow panel).

For some reason, this ‘Shadow Catcher’ acts differently with a NormalEdit modifier, which I use to remove backlight shadows. You can still faintly see the floor in image above, but more clear below:

Since this behavior is different from Cycles and Legacy, I’ll see if I should do a bug report.

EDIT:

Above issue can be fixed by adding the nodes below and enabling Transparent Shadows.

I still did a bug report on the noise with Normal Edit Modifier.

I am not sure if this has been reported, but it looks like E-N messes up the shadow render pass if the shader passes through the Shader to RGB node (with or without raytracing).

You just described my experience with Next! It’s scene dependent.

I’m having a bad time with a complex scene with a ton of lights (10 point lights, 3 plane lights, and 1 sun light). 13 of these lights managed to give me “too many shadows” and “Shadows buffer is full” errors lol. Disabling the shadows does improve the performance tho.
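For A/B testing this kind of thing, shadows can be switched off per light from a script rather than by hand. A minimal sketch, assuming you want to toggle shadows by light type; the function name is made up, while `use_shadow` and `type` are the regular light data properties.

```python
def set_light_shadows(objects, enabled, light_types=None):
    """Turn shadow casting on or off for the light objects in `objects`.

    light_types: optional set such as {'POINT', 'AREA'}; None means all lights.
    Returns the names of the lights that were changed.
    """
    changed = []
    for obj in objects:
        if obj.type != 'LIGHT':
            continue  # skip meshes, cameras, etc.
        if light_types is not None and obj.data.type not in light_types:
            continue
        obj.data.use_shadow = enabled
        changed.append(obj.name)
    return changed


# Inside Blender, e.g. to disable shadows on point lights only:
#   import bpy
#   set_light_shadows(bpy.context.scene.objects, False, {'POINT'})
```

Lights that share the same light data block will all change together, since the setting lives on the data and not on the object.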

A less complex scene with a few lights (2 plane lights and 1 sun light) runs the same and looks better than Legacy, plus it doesn’t throw a ton of errors at me about “Shadows buffer is full” or “too many shadows”.

I really wish they had postponed Eevee Next to 4.3.

Yes. The main thing is, we have already built up a pipeline with Eevee Legacy that is based on “faking things”. Fake raytracing, fake this and that. The very useful contact shadows and the bloom mentioned in earlier posts are awesome examples of “faking” things. That mostly worked for what we do, and it’s faster (because there is no heavy calculation involved in this case).

Now Eevee-next with ray-tracing is getting closer to the “non-fake” way of doing it (although it is not the same as in Cycles of course). This means we will need to revamp everything to make it work. So to us, Next is basically a real bridge between Legacy and the Cycles way of doing things. It has a lot of potential really for our future work, maybe it will yield better results in scenes with water. But for now we are not ready for it.

In our tests, we try to “fake” it again like Legacy does, because it is faster. For some of our larger scenes, we ended up turning off the raytracing option just to see, and it sped up the render by about 30%. In some scenes the render results are different with vs without raytracing, but in some scenes we may be able to get away without it. We are happy that there is at least an option to turn it off. Of course in this case, we will have to reset the lights and things to “fake” it again as much as possible. It is a balancing act at the cost of more realistic lighting/reflection. We also noticed that in some scenes with raytracing, the reflections and highlights give a speckling effect that needs to be dealt with through more adjustments.
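For anyone wanting to repeat the with/without raytracing comparison on their own scenes, here is a small timing sketch. The helper name is made up; it assumes Blender 4.2, where the option is exposed as `scene.eevee.use_raytracing`, and it takes the render call as a parameter so the same function works for stills or other setups.

```python
import time


def time_render(scene, render, use_raytracing):
    """Time one render with raytracing forced on or off.

    `render` is any zero-argument callable that performs the render.
    The scene's previous raytracing setting is restored afterwards.
    Returns the elapsed time in seconds.
    """
    eevee = scene.eevee
    previous = eevee.use_raytracing
    eevee.use_raytracing = use_raytracing
    start = time.perf_counter()
    try:
        render()
    finally:
        eevee.use_raytracing = previous
    return time.perf_counter() - start


# Inside Blender 4.2 with Eevee Next as the active engine:
#   import bpy
#   scene = bpy.context.scene
#   still = lambda: bpy.ops.render.render(write_still=False)
#   with_rt = time_render(scene, still, True)
#   without_rt = time_render(scene, still, False)
#   print(f"raytracing on: {with_rt:.1f}s, off: {without_rt:.1f}s")
```

The first render after opening a file may include shader compilation, so it is probably worth rendering once before taking the timings.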

I already had a success case, a 1 week animation, simple stuff, nothing too heavy. Rendered fine, looked great and was approved.

The last creative I did (a 2-week job, 30-second animation, very heavy stuff) I started on Next but, more or less in the middle of it, I noticed it wouldn’t make the cut (successive crashes while rendering and crashes while animating, mostly in solid/wireframe mode so unrelated to Eevee)… as such I reverted everything to Legacy and completed the task.

This is a bit worrying as we are at 3 weeks or so to the release date…

One impressive thing tho is that going back from Next to Legacy didn’t require any work… it looked great without doing a thing. So that alone is Gold!

Yeah, I also noticed that Eevee Next can be very noisy compared to Legacy.

The one thing I like about Next compared to Legacy is that the light probe is far superior and looks very detailed. It almost looks like a lightmap texture.

We’re jealous. In our case we need to have different shaders (mainly for characters with hair and sss) to make them work well with next. So going back to legacy is a whole lot of switching again. Not as painless as yours. For now we keep two sets of scenes. Legacy set for our main production. And the Next set, to play around and keep updated as things change.