I am about to build my next-generation computer, focused around Blender. I am wondering if Blender 3.0 will support using dual NVIDIA 3080 GPUs given the (eventual) change to Cycles X. I am trying to decide between building the system with dual 3080 GPUs or one 3090. I know that NVIDIA no longer supports NVLink. However, that is a non-issue in 2.93. Given that Cycles X renders progressively rather than in tiles, I just want to make sure dual NVIDIA RTX 3000 series cards will be recognized and used to speed up Cycles X.

I think my question really is about Cycles X being able to leverage 2 NVIDIA 3080 GPUs that are not linked by NVLink (which 3080s can’t be).

It will leverage them as always.

NVLink is supported, but only on the 3090; the 3080 lacks the required hardware.

If you have any more questions, I’m currently running Cycles X with 2 FE 3090s. I am not using NVLink, and I understand the only benefit of using the bridge would be combining the two GPUs’ VRAM for a total of 48 GB.

I understand this would not be possible on the 3080, as only the 3090 has an NVLink connector.

Cycles X seems to leverage both GPUs almost perfectly, cutting my render times in half compared to a single card. In the viewport, results are impressive; however, there is currently a bug when using OptiX that seems to slow viewport rendering to worse than single-card speed.

Thank you for your response and guidance here. I am planning now to place my order this weekend for a system with 2 3080s. I can’t afford 2 3090s in this current chip shortage environment. I am so excited to go from my 2013 iMac to a modern computer with a Ryzen 9 microprocessor. I’ll let you know how it goes. My scenes are normally way below the 10 GB limit of the 3080, so I am not too concerned.

I downloaded and tried out the alpha of Cycles X. Even on my ancient iMac the render times were 64% faster. I am so pumped to try this out with 2 modern GPUs, a modern CPU, and more physical RAM. Your reassurance that this will all work in 3.0 is extremely helpful. THANKS!

You’re very welcome!

Please make sure you click reply so I get a notification if you have any more questions.

The Cycles X improvements really are amazing. I’ve been using it for 90% of my Blender work lately.

You’re going to have a better CPU than me. I got a great deal on a 5800X, so that’s what I’ll be using for a while.

ALSO, you will need to think about how you are going to cool your dual GPUs if you are building the system yourself. I ended up buying new fans and filling my case with them, so now my computer is very loud.

Hey @Nuzzlet, how do 2 3090s compare to one? Do you get double the performance? Also, did you happen to get your hands on an NVLink bridge? I am thinking of buying either 2 3090s (with NVLink for the extra total VRAM, because without NVLink the scene won’t be split across both GPUs’ memory, and each GPU will hold a full copy of the scene), or an RTX A6000 that has 48 GB of VRAM. I read on some sites and in forum answers that NVLink on 3090s doesn’t actually combine the VRAM, but only combines the performance. Is that accurate? Also, I read that the VRAM only combines with workstation GPUs.

So, with Cycles X I’ve seen up to twice the render performance using dual 3090s over a single card.

Some builds have been better than others. Recently, I’ve been having issues with newer versions of Blender on Windows 11 utilizing both GPUs. I think there are still some bugs.

With non-X Cycles, the performance gain comes from having multiple render threads, effectively doubling render speeds.

I’ve had issues with the viewport all around while using dual GPUs. There seems to be a lot of room for optimization, specifically when it comes to denoising, as that seems to actually be slower with dual GPUs, even though the samples are faster.

Also, you need to remember that, especially for scenes that render very quickly (as in under 20 seconds), the initial loading of the scene can take many seconds, and that will not be affected by GPU performance. I’ve done 20-second renders where 6 seconds were just loading the scene, meaning doubling the GPU power would only save around 6.5 seconds, because the initial 6 seconds are CPU bound.
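To make that arithmetic concrete, here is a minimal sketch in Python. The function name and the `efficiency` parameter are my own illustration, not anything from Blender: it simply assumes the loading time is fixed and only the GPU-bound part is divided among the cards. With perfect scaling, the 20-second render with 6 seconds of loading comes out at 13 seconds, a saving of 7 seconds; the roughly 6.5 seconds quoted above corresponds to slightly less than perfect scaling.

```python
def multi_gpu_render_time(total_s, fixed_s, gpu_count, efficiency=1.0):
    """Estimate render time when only the GPU-bound part scales.

    total_s    -- measured single-GPU render time, in seconds
    fixed_s    -- CPU-bound part (scene loading) that extra GPUs do not speed up
    gpu_count  -- number of identical GPUs
    efficiency -- assumed scaling of each additional GPU (1.0 = perfect)
    """
    if gpu_count < 1 or not 0 <= fixed_s <= total_s:
        raise ValueError("need gpu_count >= 1 and 0 <= fixed_s <= total_s")
    gpu_s = total_s - fixed_s
    # Simple model: each extra card adds `efficiency` of one card's throughput.
    speedup = 1 + (gpu_count - 1) * efficiency
    return fixed_s + gpu_s / speedup


single = 20.0
dual = multi_gpu_render_time(total_s=single, fixed_s=6.0, gpu_count=2)  # 6 + 14 / 2 = 13.0
print(f"dual-GPU estimate: {dual:.1f} s, saved: {single - dual:.1f} s")
print(f"overall speedup: {single / dual:.2f}x instead of 2x")
```

The longer the render, the smaller the fixed loading share becomes, so the overall speedup moves closer to 2x.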

As for NVLink, I’ve never tried it. I’ve never needed the whole 24 GB, so it hasn’t been worth the investment for me. However, I’ve talked to people who have used NVLink with Blender to speed up render performance on scenes that use over 24 GB of VRAM. What I do know for a fact is that NVLink will not “double” your VRAM; it just creates a faster link that allows memory sharing where necessary in applications that are specifically written to support it. It’s much faster than going through the CPU to use system memory, but it’s not as fast as having the 48 GB of VRAM onboard like with the A6000.

Happy to answer any more questions you have.

I have a Windows 11 machine with dual 3080s. I have tested with a variety of my files and Blender versions up to the 3.2 beta and have been unable to get the system to really use the second card. It is checked in Preferences and I do see a “blip” periodically when rendering in Cycles, but in general it only uses a single card.

What do you mean by “blip?”

And how are you measuring how your cards are performing?
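If it helps, one way to check this outside of Task Manager is to poll `nvidia-smi` while a render is running. Below is a rough sketch, assuming `nvidia-smi` is on your PATH and every queried field returns a number (some fields can come back as `[N/A]` on certain setups, which this does not handle). The function names and the 10% idle threshold are just my own choices for illustration.

```python
import subprocess

# Query flags supported by nvidia-smi; output is one CSV row per GPU.
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,name,utilization.gpu,memory.used",
    "--format=csv,noheader,nounits",
]


def parse_gpu_csv(text):
    """Turn nvidia-smi CSV rows into a list of dicts, one per GPU."""
    rows = []
    for line in text.strip().splitlines():
        index, rest = line.split(",", 1)
        # Split from the right so an unusual name cannot shift the numeric fields.
        name, util, mem = rest.rsplit(",", 2)
        rows.append({
            "index": int(index),
            "name": name.strip(),
            "util_pct": int(util),
            "mem_used_mib": int(mem),
        })
    return rows


def idle_gpus(rows, threshold_pct=10):
    """Return the indices of GPUs whose utilization is below the threshold."""
    return [r["index"] for r in rows if r["util_pct"] < threshold_pct]


def sample_gpus():
    """Take one reading from nvidia-smi (requires an NVIDIA driver install)."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_gpu_csv(out)
```

Calling `idle_gpus(sample_gpus())` every second or so during an F12 render should return an empty list if both cards are really working. If one index keeps showing up, that card is mostly sitting idle.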

I am willing to provide benchmarks for multi-GPU operation. I’m currently trying to enable two RTX 3090 Ti GPUs connected via NVLink in Blender, but I can’t find an option for this. Does anyone know where to put a tick?

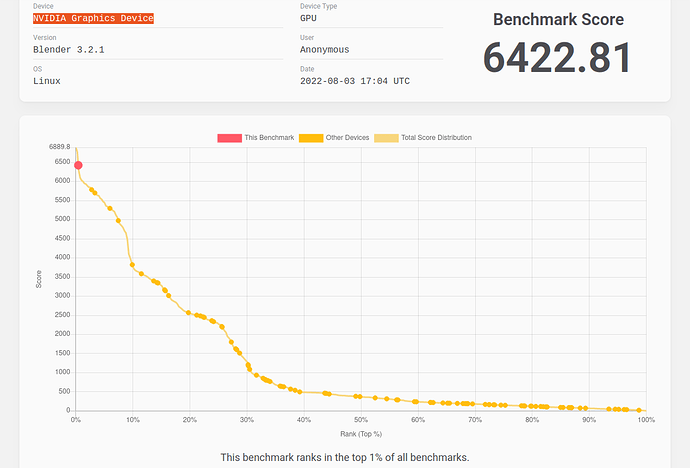

OK, here comes the benchmark. I wanted to find out the impact of using NVLink in a multi-GPU setup. So I started with an AIME T502 workstation, using 2x RTX 3090 Ti with NVLink, and the benchmark result was impressive:

But I’m surprised that the GPU type was not recognized by the benchmark tool. Also, it was not visible within Blender whether NVLink is active (according to the system console it was activated). I would have expected that the VRAM would then be displayed as twice as large, which unfortunately was not the case.

Does anyone know whether you can/must activate the NVLink adapter (possibly by a tick) in Blender? And is the display of the video memory with two coupled GPUs maybe just not implemented yet?

Edit: I just found out that the benchmark tool only uses one GPU, so the result above is NOT for 2x 3090 Ti, but for only ONE.

Any updates on how the viewport issues you were experiencing are going these days? Any change in 3.4?

I’m thinking of putting another 3090 in my system to speed up the renders a little bit. Is everything rendered automatically, or do you have to manually select both GPUs when you press F12? Or does the system already know what to do, so to speak?

Also, regarding the first 6 seconds there, CPU-wise: I know CGGeek made a video comparing real-world CPU benchmarks in Blender, where a faster CPU with fewer cores can be better than a slower one with more cores.

So do those example 6 seconds benefit from multiple cores, or is that all raw CPU speed?

Thanks, this thread has been very useful!