Hi,

I’ve been running some tests to try to find what might be causing unexpectedly high RAM usage with adaptive subdiv.

I’ve noticed some constraints with the current system:

-

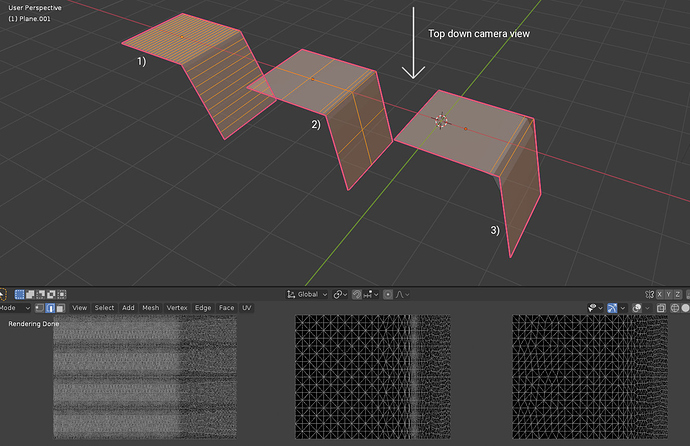

The dicing is restricted by the original mesh faces (see 1. and 2. below). Small faces on the base mesh force the dicing to be finer than it might otherwise be, especially in off-screen areas where it would typically be quite coarse. Could tris be made to span faces in future?

-

The dicing isn’t purely camera-based; oblique faces have much denser subdivision from the camera PoV (see 3.). Perhaps this is done to avoid almost infinitely long tris for faces that are almost parallel to the camera, but this increases subdivision a lot. Maybe a hybrid method would work better - maintaining density for most angles except for faces that are almost parallel.

Ideally the tri density would be consistent across all three examples above.
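To make the two constraints concrete, here is a toy model of per-edge dicing. It is a minimal sketch, not Cycles’ actual code, and it rests on assumptions: a pinhole camera with a made-up focal length (`FOCAL_PX`), each base-mesh edge diced independently into at least one segment, and (as the oblique-face behaviour suggests) a metric that scales an edge’s 3D length by the pixel footprint at its distance rather than measuring it after projection. `hybrid_px` and its `max_aniso` parameter are hypothetical, illustrating the hybrid idea from the second point.

```python
import math

# Toy model only: camera space with +z pointing away from the camera and a
# hypothetical focal length in pixels.
FOCAL_PX = 1000.0


def project(p):
    """Project a camera-space point (x, y, z) onto the image plane, in pixels."""
    x, y, z = p
    return (x * FOCAL_PX / z, y * FOCAL_PX / z)


def isotropic_px(p0, p1):
    """3D edge length scaled by the pixel footprint at the edge midpoint.

    Orientation is ignored, so an edge receding from the camera is measured
    as if it faced the camera (no foreshortening).
    """
    z_mid = 0.5 * (p0[2] + p1[2])
    return math.dist(p0, p1) * FOCAL_PX / z_mid


def projected_px(p0, p1):
    """On-screen length of the edge after perspective projection."""
    return math.dist(project(p0), project(p1))


def hybrid_px(p0, p1, max_aniso=4.0):
    """Hypothetical hybrid: use the projected length, but never let it drop
    below isotropic_px / max_aniso, so near edge-on faces stay bounded."""
    return max(projected_px(p0, p1), isotropic_px(p0, p1) / max_aniso)


def segments(length_px, dicing_rate=1.0):
    """Segments for one edge; every base-mesh edge gets at least one."""
    return max(1, math.ceil(length_px / dicing_rate))


def quad_tri_count(edge_px, dicing_rate=1.0):
    """Tris for one square base quad diced on its own (n x n grid, 2 tris per cell)."""
    n = segments(edge_px, dicing_rate)
    return 2 * n * n


facing = ((-0.5, 0.0, 10.0), (0.5, 0.0, 10.0))   # edge facing the camera
receding = ((0.0, 0.0, 10.0), (0.0, 0.2, 11.0))  # edge pointing away from the camera

for name, edge in (("facing", facing), ("receding", receding)):
    print(name,
          segments(isotropic_px(*edge)),
          segments(projected_px(*edge)),
          segments(hybrid_px(*edge)))

# 100 base quads each 0.1 px across vs. one quad covering the same 1 px area.
print(100 * quad_tri_count(0.1), quad_tri_count(1.0))
```

With these made-up edges, the edge facing the camera gets 100 segments under every metric. The receding edge gets 98 segments with the distance-based metric but only 19 after projection, and the hypothetical hybrid lands at 25. The last line shows the per-face floor: a hundred base quads that each cover 0.1 px still cost 200 tris, versus 2 tris for a single quad over the same area.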

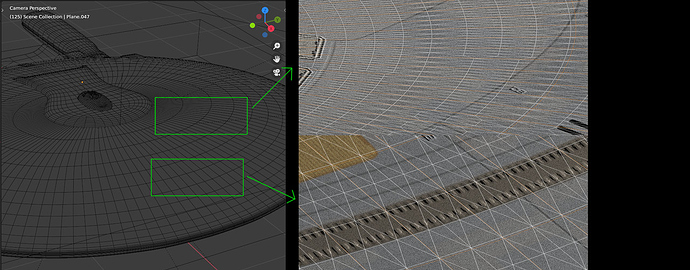

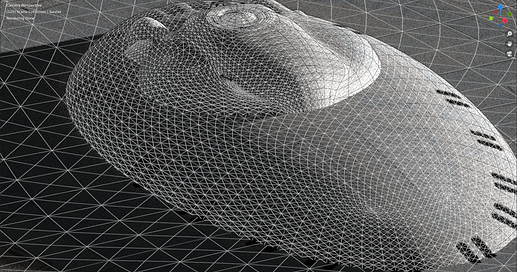

Here’s a real-world example with a combination of the issues:

I can’t reliably render that model at 1920x1080 with a subdivision level of 0.5 and stay within an 8GB VRAM limit (some camera positions cause memory usage to spike).

A quick test in a new scene, using a subdivided plane with 2 tris per pixel filling a 1920x1080 frame, uses about 1.5GB of VRAM. Of course, in practice a mesh will have geometry beyond the edge of the frame, but even so, actual memory use in a typical scene with adaptive subdiv enabled is much higher.
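The plane test above can be turned into a rough triangle budget. This is a back-of-envelope sketch, assuming memory grows linearly with tri count; that folds fixed overhead (render buffers, kernels and so on) into a per-tri cost, so the budget it gives is probably on the conservative side. The constant and function names are just for illustration.

```python
# Back-of-envelope triangle budget from the plane test.
# Assumes memory scales linearly with tri count, so fixed overhead is
# counted as if it grew with the number of tris.
WIDTH, HEIGHT = 1920, 1080
TRIS_PER_PIXEL = 2
MEASURED_GB = 1.5   # VRAM observed for the plane test
BUDGET_GB = 8.0     # card limit


def triangle_budget(width, height, tris_per_pixel, measured_gb, budget_gb):
    frame_tris = width * height * tris_per_pixel
    # Both sides use the same unit, so GB vs. GiB cancels out.
    budget_tris = frame_tris * budget_gb / measured_gb
    return frame_tris, budget_tris


frame_tris, budget_tris = triangle_budget(
    WIDTH, HEIGHT, TRIS_PER_PIXEL, MEASURED_GB, BUDGET_GB)
print(f"tris in frame: {frame_tris:,}")                       # 4,147,200
print(f"tris that fit in {BUDGET_GB} GB: {budget_tris:,.0f}")  # 22,118,400
print(f"multiple of frame: {budget_tris / frame_tris:.2f}x")   # 5.33x
```

Under that linear assumption, 8GB holds roughly 22 million tris, about 5.3 times what the frame itself needs (around 10.7 tris per pixel of the frame). Off-screen geometry, per-face minimums and oblique faces all eat into that headroom.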

Could anyone with knowledge of the code say whether these issues might be worked on at some point? Development seems to be paused right now, which is a shame, as this feature has a huge amount of potential.

Thanks.