Present: Dalai Felinto, Jacques Lucke

Meeting to assess the current state of the particles system and agree on next steps.

The meeting covered the current implementation through three demos and went over the designs that need to be explored before coding continues much further. This design work will be the scope of what is presented and developed at a workshop in September.

Current status / pending design

- Multiple inputs for the same socket (needs a design solution)

- How to do specific simulations (scattering, spray, …)

- What is the unit of the particles system? (For comparison: in the VSE it is the shot, in the compositor it is the frame.)

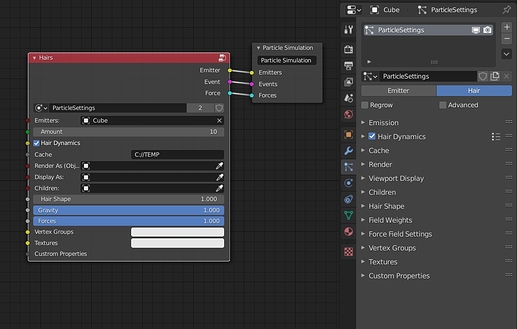

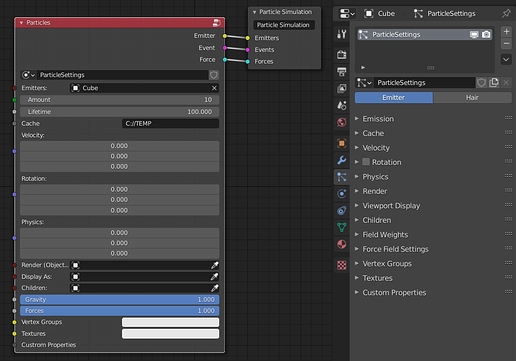

The node tree describes the states you want to simulate and their behaviour.

Types of nodes:

- Data Processing (blue)

- Behavior (red)

- Particle Simulation: contains state

“Not sure about the separation between Emitters / Events / Forces” - Jacques Lucke

Demo

Which questions do we still need to answer?

- Are we building the right product? Is the product still worth building?

- Is this design compatible with the other node projects? (hair, modifier, …)

- Can we design the effects required for scattering objects as well as for particle effects? How? What would the node tree look like?

- How would this system have made previous open projects easier (or better) to work on? (e.g., pebbles scattered in Spring, hair spray in Agent, tornado scene in Cosmos, grass field interacting with a moving character in BBB, …)

Use cases for the particles simulation

- Scattering of objects

- Particle effects

Design > Mockups > Storyboard > Prototype > Implementation

Before the project advances much further, it is important to get buy-in and feedback on all the design stages of the current iteration.

The project had a working prototype early on, but not for its latest versions, especially not since the UI workshop in February. Some UI ideas were discussed at that workshop, yet no final solution has been approved.

- Design: mental model (node types, the relation between the particle simulation and the point cloud object, what a node group is, state, behaviours)

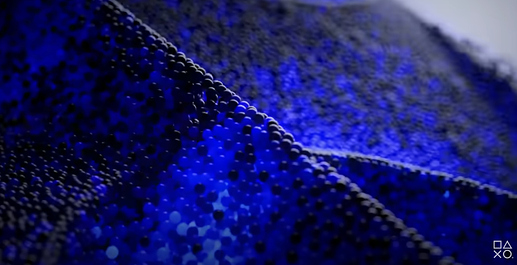

- Mockups: wireframes or final screenshots of node trees for existing problems (torch, rain, tornado, PS5, shoes, …)

- Storyboard: the steps one goes through to create different simulations

- Prototypes: a functional (limited) working system (Python? C? Mixed?) that users can actually use.

- Implementation