Those are some pretty nice examples.

@brecht @lukasstockner97 how is this going?

I know that you are super busy, but this could be a great thing to add during the beta, so people are more aware of it and things can change faster (also it will help a lot in Spring… I’m sure about that XD, and it will help us in archviz too… I’m also sure about that hehe)

Cheers!

Good

It’s a pity not to have it right now… but it makes sense, since the devs have a ton of work on their plate for the Beta.

Thanks for the heads up @SimonStorl-Schulke !!

Hey, any chance that someone can make a new Linux build of the current newest 2.8?

Oh, I didn’t see this question. You can find our build on GraphicAll, both for Windows and Linux; the Linux build is published by @NiCapp, so thank him.

Is Scrambling Distance ever going to make it into Blender?

I see it’s for advanced users but it would only be available after you change Sampling Pattern to “Dithered Sobol” and even then you still have to lower the Scrambling Distance from 1.00 for it to have any effect.

I would love to use it on my scenes to get that 15-35% speedup.

@brecht has it under his scope to review and test. The last time he tested it he was not too convinced, but he tested it without Dithered Sobol, and that’s where the results are best. So we’ll see what he thinks about it, or whether he has another option to properly accelerate Blender, because in the end what scrambling distance does in BoneMaster or other custom builds is “break” the render and introduce A LOT of bias.

BTW you can use it with BoneMaster without a problem
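For anyone wondering why lowering the Scrambling Distance both speeds things up and adds bias, here is a minimal Python sketch of the general idea. It assumes the patch works by scaling a per-pixel Cranley-Patterson rotation (a pseudorandom shift added to each pixel’s sample sequence); the function names and the hash are made up for illustration, and a simple van der Corput sequence stands in for the Sobol sequence Cycles actually uses. With a distance of 1.0 every pixel gets an independent shift; as the distance shrinks, neighboring pixels draw nearly the same samples, which presumably makes their rays more coherent on the GPU but correlates the noise between pixels.

```python
import hashlib


def van_der_corput(index: int, base: int = 2) -> float:
    """Radical inverse of `index`: a simple 1D low-discrepancy sequence
    (a stand-in here for the Sobol sequence used by Cycles)."""
    result, denom = 0.0, 1.0
    while index > 0:
        index, digit = divmod(index, base)
        denom *= base
        result += digit / denom
    return result


def pixel_hash(x: int, y: int) -> float:
    """Hypothetical deterministic per-pixel value in [0, 1)."""
    digest = hashlib.sha256(f"{x},{y}".encode()).digest()
    return int.from_bytes(digest[:4], "little") / 2**32


def scrambled_sample(x: int, y: int, sample_index: int,
                     scrambling_distance: float) -> float:
    """Cranley-Patterson rotation whose per-pixel shift is scaled by
    scrambling_distance: 1.0 = fully decorrelated pixels,
    0.0 = every pixel reuses exactly the same sequence."""
    shift = pixel_hash(x, y) * scrambling_distance
    value = van_der_corput(sample_index) + shift
    return value - int(value)  # wrap back into [0, 1)
```

For the same sample index, `scrambled_sample(0, 0, i, 0.1)` and `scrambled_sample(1, 0, i, 0.1)` land within 0.1 of each other (measured around the wrap-around circle), while with a distance of 1.0 they can fall anywhere in [0, 1). That shared structure between pixels is the “bias” trade-off mentioned above.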

This sounds good:

Thanks Metin for posting it here.

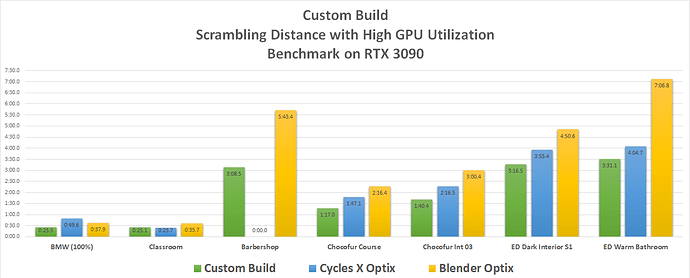

I did the custom build with the patch for scrambling distance and high tile sampling, and then ran benchmarks to validate the benefits. Speed improvements were up to 2x compared to Blender master, without noticeable visual degradation of image quality, as long as the scrambling distance value was not too low.

Scrambling distance alone won’t do much for performance unless the tile sampling is increased for OptiX. I was only getting at best a 10% speed improvement without high tile sampling. The GPU was underutilized; once high tile sampling was added, the improvement went up to 100%.

Hello @eklein, what do you mean with “high tile sampling”?

Is it the number of samples? The tile size? Something else?

In the OptiX device, “step_samples” per tile is kept at a relatively high, safe value of 32 for final renders. This is done to avoid a potential GPU crash due to Timeout Detection and Recovery (TDR). Lowering this value to 1 will maximize GPU utilization. This change, combined with scrambling distance, gave a big boost in performance: in some scenes up to 100% faster compared with the default value, where the maximum improvement was only 10%.

With my Nvidia 30x series I have not encountered the TDR issue. Other GPUs might have it. The issue can be avoided by increasing the “TdrDelay” value in the registry. Other applications do check this value and, if it is too low, warn the user and recommend changing it.

One side effect of this change is that a tile size of 256x256 becomes slower for rendering. Check my link a couple of messages above for a detailed analysis. The best tile size for performance now becomes something around 1000x1000.

Please, where is that located? Is it in the interface, or do you mean in the code?

From what I have gathered:

- On Windows it’s called “TdrDelay”. It is set to 2 seconds by default and can be modified by changing its value in the registry. If you have multiple GPUs, they all get the same 2-second delay.

- On Linux it’s called “Watchdog”. It is set to 10 seconds by default and can be modified by changing its value in the xorg.conf file. If you have multiple GPUs, the one connected to the display gets the 10-second delay; the others are considered compute units and don’t have such a delay.

Correct me if I’m wrong.
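Since some applications check this value and warn when it is too low, here is a rough Python sketch of such a check on the Windows side. It reads TdrDelay from `HKLM\SYSTEM\CurrentControlSet\Control\GraphicsDrivers` (a REG_DWORD in seconds, per Microsoft’s TDR registry documentation) and falls back to the 2-second default when the value is absent or when not running on Windows. The `tdr_warning` helper, its safety margin, and the idea of feeding it an estimated longest kernel launch are hypothetical and not something Blender does.

```python
import sys

DEFAULT_TDR_DELAY_S = 2  # Windows default when TdrDelay is not set


def read_tdr_delay() -> int:
    """Return TdrDelay in seconds on Windows, else the 2 s default."""
    if sys.platform != "win32":
        return DEFAULT_TDR_DELAY_S
    import winreg  # only available on Windows
    try:
        with winreg.OpenKey(
            winreg.HKEY_LOCAL_MACHINE,
            r"SYSTEM\CurrentControlSet\Control\GraphicsDrivers",
        ) as key:
            value, _value_type = winreg.QueryValueEx(key, "TdrDelay")
            return int(value)
    except FileNotFoundError:
        # Key or value missing: Windows uses the default delay.
        return DEFAULT_TDR_DELAY_S


def tdr_warning(tdr_delay_s: float, longest_launch_s: float,
                margin: float = 2.0):
    """Hypothetical check: return a warning string if the longest expected
    GPU launch, times a safety margin, reaches the TDR timeout."""
    if longest_launch_s * margin >= tdr_delay_s:
        return (f"TdrDelay is {tdr_delay_s}s but GPU launches may take up to "
                f"{longest_launch_s}s; consider raising TdrDelay.")
    return None
```

For example, `tdr_warning(read_tdr_delay(), 1.5)` would warn on a machine using the 2-second default, since 1.5 s times the 2x margin exceeds the timeout.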

lsscpp, it’s in the code, in the OptiX device part of the work done by Patrick Mours from NVIDIA. That’s why custom builds are necessary.

Voidium, that looks like correct information on TDR.

I reran the tests I did before (see above), now with Cycles X, comparing it with the scrambling distance + high GPU utilization build.

Cycles X is definitely an improvement over Blender Cycles. The biggest issue is scenes like BMW, where it is at least 30% slower than Cycles. The Barbershop scene renders incorrectly. It is early and a great start, and I would expect improvements in the coming months.

Overall, the scrambling distance custom build is almost always still faster, and in scenes like BMW it is 2x faster than Cycles X.

The changes in the render architecture for Cycles X mean that GPU workload and utilization are now controlled mainly by the new Cycles path tracing code. So with Cycles X it will be more difficult to get a performance boost from scrambling distance the way it was possible before, by increasing the OptiX device GPU utilization.

See chart below:

Eric

For the Barbershop scene to render in Cycles X, you should hide the volumetrics.

Note the cycles-x builds on builder.blender.org are made with an older CUDA toolkit version. We got better performance with CUDA toolkit 11.3, and that’s how both master and cycles-x were built for the graphs in the blog post. We’ll upgrade the buildbot CUDA toolkit to fix this.

I don’t know how big the differential is exactly, I didn’t test recently.

The barbershop can be compared by removing the ENV-Fog.001 object.

I also saw that the AO and Bevel nodes are not yet supported, or at least Cycles is giving warnings like it did some time ago.

Anyway, my tests left me amazed: the difference in viewport smoothness is SO BIG, and OIDN works SO SO WELL.

In final render speed I have noticed some improvement. I haven’t measured it yet, but it’s faster. Congratulations, this will become something amazing.

Those are the more complex nodes that depend on ray-tracing. They were also added last in the OptiX implementation of the current Cycles.

Looking forward to checking out Cycles-X this weekend!