Link to all renders (.png): Microsoft OneDrive

I’ve been running test renders with the build for the last 3 days, and from what I’ve seen it works… just great.

There is really just one setting, and I found a key to understanding what to expect from it, at least in my cases: texture-heavy scenes lit by HDRs (or sun, spot lights and point lights) with lots of indirect light.

Tbh I am still unable to set Simplify AO right consistently, so I just leave it alone. This setting is much easier to get right by comparison.

Set correctly, this literally turns a 1070 into a 1080 Ti.

So far I’ve tried 3 different scenes with Dithered Sobol (DS) and Scrambling Distance (SD).

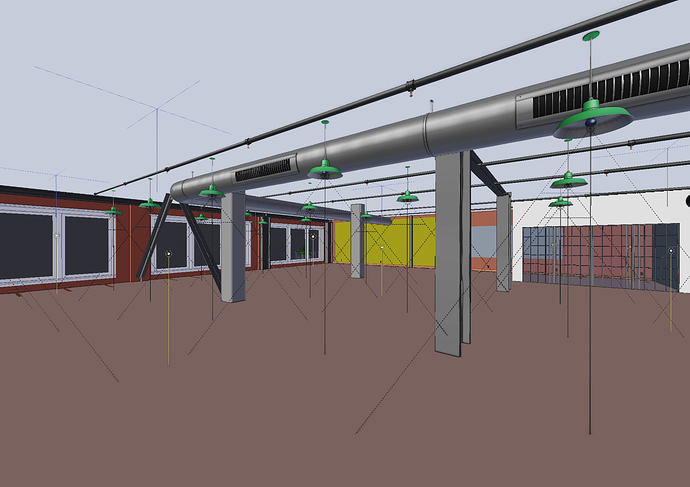

Here I present the first one. It’s rather simple geometry with 4k textures and a 4k HDR. I have a much heavier one that I will post later.

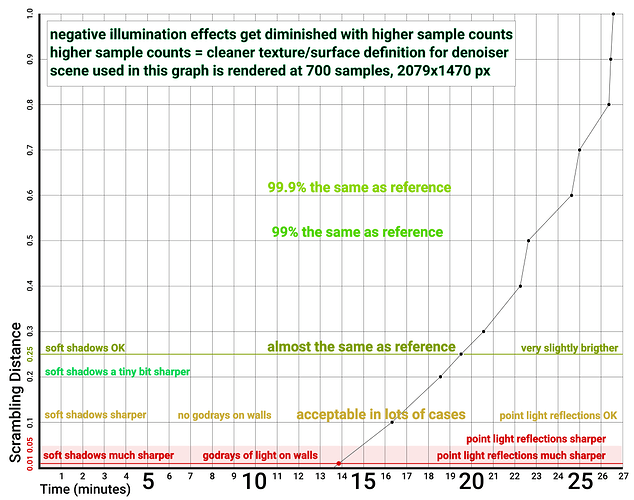

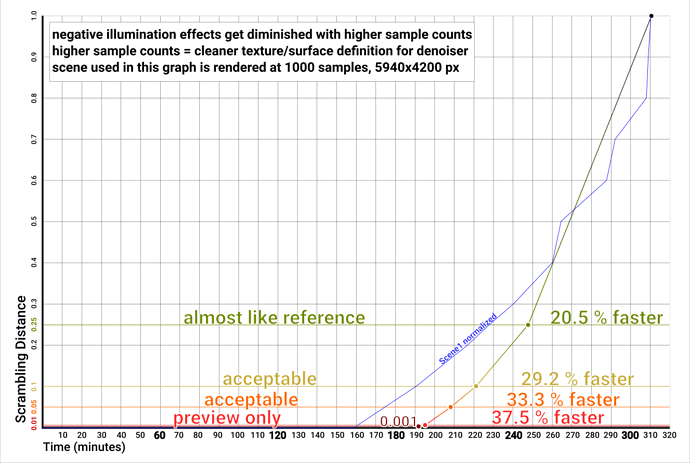

I have a lot of findings, which I put into a graph to understand them better and draw some conclusions. It’s rather interesting :)

Graph:

Reference render 10000 samples, Dithered Sobol:

Scrambling Distance from 0.01 to 1.0

Scrambling Distance divided by reference

700 samples vs 1400 samples at Scrambling Distance 0.01 (probably should’ve done 0.1 instead because 0.01 is really asking for trouble anyways)

Lastly this is Correlated Multi Jitter vs Dithered Sobol.

When it comes to Dithered Sobol I see little difference at least in texture heavy scenes. But maybe with some change to the denoiser it could potentially work better.

In conclusion, from now on I will always use Scrambling Distance builds, and I would be super happy to see it in Master (experimental) because the feature deserves it 100%.

For archviz I am sure it could be in the Supported feature set too, but I can’t test every possible scenario on my own, and there has been talk that it falls short in some very specific areas like hair.

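Summary by Scrambling Distance value:

Scrambling Distance 0.25: almost the same = 27% faster

Scrambling Distance 0.2: pretty great already = 30% faster

Scrambling Distance 0.1: good preview / often acceptable = 40% faster

Scrambling Distance 0.01: asking for trouble! = 48% faster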
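
For anyone who wants to turn those percentages into a speedup factor, here is a minimal Python sketch. The post doesn't say whether "X% faster" means render time dropped by X% or that X% more work gets done per unit of time, so the sketch computes both readings. The function names are made up for illustration, and the only inputs are the four figures listed above.

```python
# "X% faster" figures from the post, keyed by Scrambling Distance value.
PERCENT_FASTER = {0.25: 27, 0.2: 30, 0.1: 40, 0.01: 48}


def speedup_if_time_saved(percent):
    """Speedup factor if 'X% faster' means render time drops by X%."""
    return 1.0 / (1.0 - percent / 100.0)


def speedup_if_throughput_gain(percent):
    """Speedup factor if 'X% faster' means X% more work per unit of time."""
    return 1.0 + percent / 100.0


def speedup_table(data=PERCENT_FASTER):
    """Return (distance, percent, time-saved factor, throughput factor) rows."""
    rows = []
    for distance, percent in sorted(data.items(), reverse=True):
        rows.append((
            distance,
            percent,
            round(speedup_if_time_saved(percent), 2),
            round(speedup_if_throughput_gain(percent), 2),
        ))
    return rows


if __name__ == "__main__":
    for distance, percent, by_time, by_throughput in speedup_table():
        print(f"SD {distance}: {percent}% faster -> "
              f"{by_time}x (time saved) or {by_throughput}x (throughput)")
```

The two readings drift apart as the percentage grows, so it's worth stating which one is meant when comparing numbers between cards or builds.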