Is this adding back the spectral switch checkbox in the render properties panel? I'm curious why it was removed in the first place.

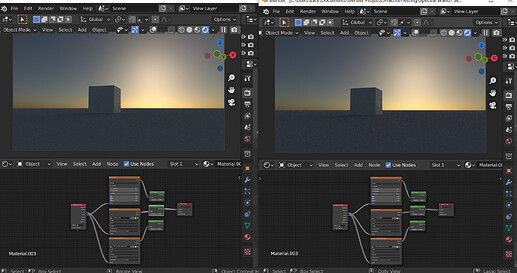

That’s right, I’m working on adding it back.

The reason is that I started the cycles-x-spectral-rendering branch from scratch, based on the cycles-x branch, and I am now adding the features back one by one. Some of them can be ported easily, almost copy-pasted, while others must be adapted to changes in the code.

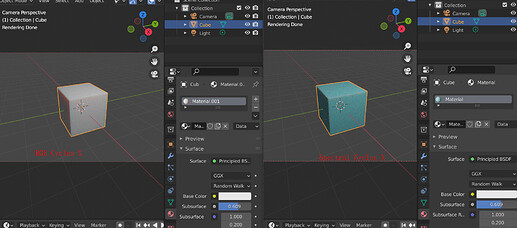

I decided to ask since it's not listed in the known bugs and limitations: why does subsurface scattering look broken?

Also, regarding an earlier discussion about Nishita being too greenish and bluish in Spectral Cycles, I want to share some of my latest findings. I know you told me that it was caused by shifting the white point from Illuminant E to D65, that this is what should happen, and that it is therefore not an issue. But I couldn't get my head around it and continued testing. I am not here to argue, just sharing my findings.

Finding number one: this has nothing to do with intensity. I tested this because @nacioss suggested the following:

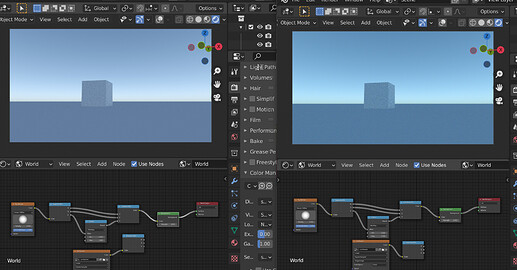

So I tested it by simply clamping the brightness:

I also tried using the brightness of some HDRIs with the color of the clamped Nishita:

The difference is still very visible, so it is not about the brightness of the texture.

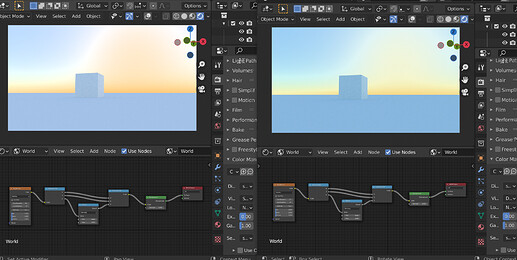

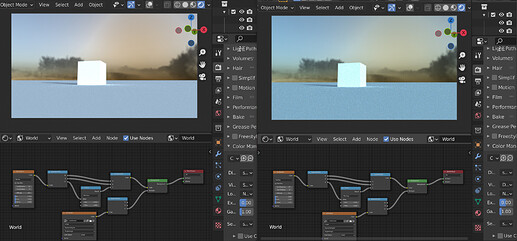

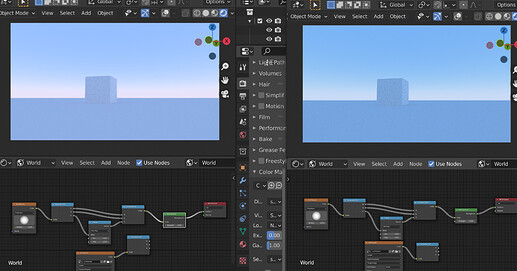

Finding number two: it is not just Nishita; all three sky textures behave the same way. HDRIs, however, do not have this issue. Here is the comparison for the other two textures plus an HDRI:

The HDRI looks almost identical, at least at first glance, while the difference for the other two textures is far more obvious. Therefore I conclude that the cause lies with the Sky Texture node in general rather than with Nishita.

Finding number three: a comparison with Nishita baked into an HDRI. After concluding that HDRIs are not affected, I applied the Nishita texture to a UV Sphere and baked it into an EXR texture. The result is rather surprising.

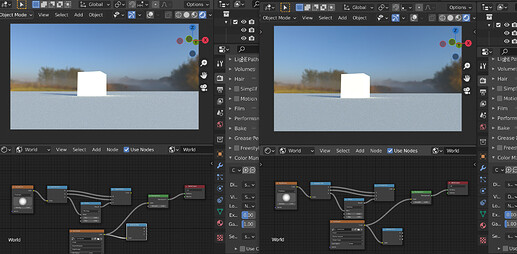

Let's start with the good old Sky Texture:

As expected, there is a difference.

Now the second emission node, which uses the EXR baked in RGB Cycles:

Somewhat surprisingly, the two branches are almost identical.

And the third emission node, which uses the EXR baked in Spectral Cycles:

The two branches are still identical, but… isn’t it greener and bluer than the second one?

I think this is an interesting finding, and I still think something is going on with the Sky Texture node. The EXR connected to the second emission node is basically the data output by the Sky Texture node in RGB Cycles, and the EXR connected to the third emission node is the data output by the Spectral branch's Sky Texture. The two EXRs differ, yet using the same EXR in both branches makes the two branches render identically. I think that is enough to show that something in the Sky Texture node is causing this phenomenon.

I just wanted to share my findings. If you still think this is not an issue, that's fine. You are the experts after all, not me.

And here is the link to the blend file with the baked Nishita EXRs:

I haven't done any extensive testing yet; thanks for reporting this.

You're almost right, but it's not a problem with Nishita or the Sky Texture node specifically. It happens with all nodes that generate their output in the XYZ color space. As far as I understand, the code assumes everywhere that XYZ uses a D65 white point, which is not the case here.

This does not apply to images and RGB sockets, because those values are interpreted as having an IE (Illuminant E) white point throughout the whole color pipeline, and this doesn't cause any issues.

Oh, so it's not the spectral branch's issue? So this is more of an issue in master, caused by assumptions in the code?

So… to solve this problem in the future, some refactoring of the color management around the XYZ space needs to be done? Am I understanding this correctly?

EDIT: Now Brecht's post finally makes some sense to me.

But did he just say the bug was fixed in a commit? And did he just say that XYZ using Illuminant E is an "incorrect" assumption?

I am confused again.

EDIT: I guess it doesn't matter, at least for the initial merge, so I will probably just stop thinking about it, lol.

EDIT:

I think I finally understand the situation fully. In Spectral Cycles, for the displayed output to have a D65 white, the light needs to be Illuminant E (to prevent RGB-defined white from appearing pinkish); using D65 results in a colder color. This can be confirmed with the previous build of Spectral Cycles that had the Spectral Blackbody node. I was curious how other spectral renderers handle this, and one of my friends tells me that Octane's lights are also bluish by default and that you need to set the light to "texture mode" (which I guess means switching to RGB color) to make it white. I guess this is also the case for other renderers, which is why it's not really a problem. For the sky texture, I guess a workaround is to add a Vector Math node after it, set it to Multiply, and use values of x: 1.25, y: 1, z: 1 (probably not accurate) to roughly bring the white point closer to Illuminant E. So I guess it's fine then. TBH, I am kind of glad that it is actually not a problem, so it won't be a blocking issue for merging.
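For reference, the multipliers for that workaround can be estimated from the two white points. The sketch below is only a rough estimate under stated assumptions: it uses plain per-axis XYZ scaling (not a proper chromatic adaptation) and the common CIE 1931 2° D65 white point (0.95047, 1.0, 1.08883); the helper names are hypothetical. Under those assumptions the factors come out at roughly (1.052, 1, 0.918) rather than (1.25, 1, 1).

```python
# Hypothetical helper: per-axis XYZ scaling that maps the D65 white onto the
# equal-energy white E (X = Y = Z = 1). Assumes the CIE 1931 2-degree D65 white.
D65_WHITE = (0.95047, 1.00000, 1.08883)
E_WHITE = (1.0, 1.0, 1.0)


def xyz_scaling_factors(src_white, dst_white):
    """Per-axis multipliers that send src_white onto dst_white (plain XYZ scaling)."""
    return tuple(d / s for s, d in zip(src_white, dst_white))


def apply_scaling(xyz, factors):
    """Component-wise multiply, i.e. what a Vector Math node in Multiply mode does."""
    return tuple(c * f for c, f in zip(xyz, factors))


if __name__ == "__main__":
    factors = xyz_scaling_factors(D65_WHITE, E_WHITE)
    print("multiply by:", tuple(round(f, 4) for f in factors))
    # Sanity check: the D65 white itself should come out as (1, 1, 1).
    print("D65 white ->", apply_scaling(D65_WHITE, factors))
```

Dividing by the same factors would undo the mapping, which is the direction needed for the "Divide" idea discussed next.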

EDIT: I actually have an idea about this. I won't start a discussion about it now because I don't want to distract the people preparing the merge. I will bring it back up after Spectral Cycles is officially merged into master. For now, I will briefly describe it so we have more context when I bring it up again later.

So my idea is basically a checkbox in the Color Management panel that says "Optimize White Point (or White Balance?) for RGB Color Input" or something similar; the exact name can be discussed after the merge. It would be checked by default, and when checked, it would fix the pinkish RGB white problem by converting the rendered image from Illuminant E to D65, which is exactly what the current branch is doing (if I understand correctly). But in the future, when we eventually bring back the spectral nodes and users can define light sources spectrally, it would be awkward for users to define a light as 6500 K (D65) and then see a colder light color on the screen. In that case, they could just uncheck the checkbox so that the spectrally defined light is displayed as expected, and then put a Vector Math node after their image textures, set to Divide by (1.25, 1, 1) or something similar, to make sure RGB colors display normally (I'm not even sure this is necessary; I don't know whether this only affects light sources). I think this option makes sense, because letting users define a D65 light source with the Spectral Blackbody node but not letting them see a D65 color on screen would be extremely weird from the user's side.

Again, this probably doesn't matter for the initial merge, so I am just recording my thoughts here for later reference. I will bring this back up after the merge; for now I don't want to distract the people working on the merge, which is why I am writing this in an edit.

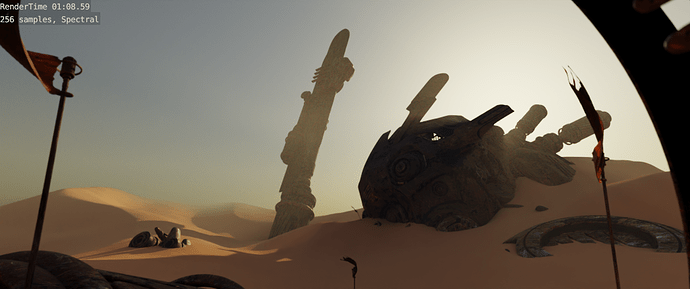

A new build is up on GraphicAll. Changelog:

- Compiling Blender with OSL now works

- Background MIS is now properly implemented and works on both CPU and GPU

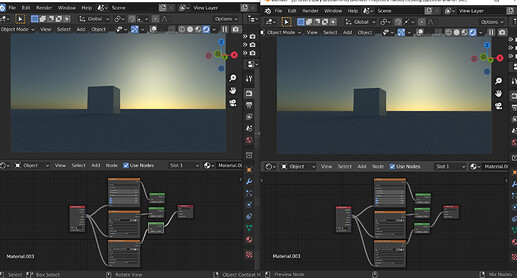

- Added back RGB/Spectral mode switch (switching modes on GPU still requires going out of rendered view)

- Fixed subsurface scattering

- Temporarily added a D65-to-E conversion for nodes that use the XYZ color space, to fix the greenish tint

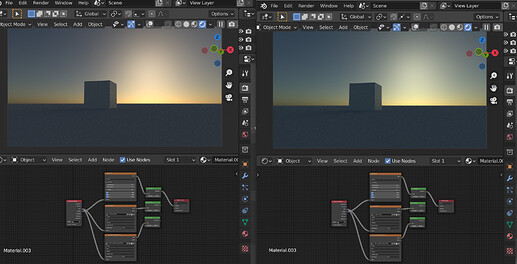

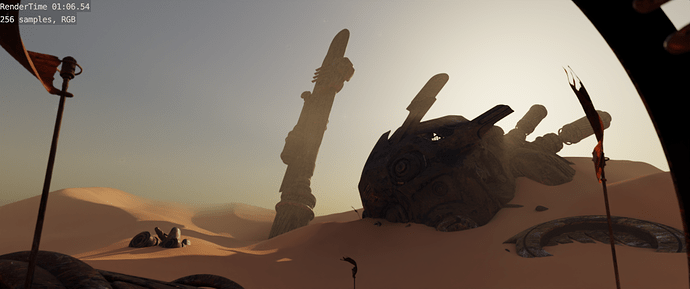

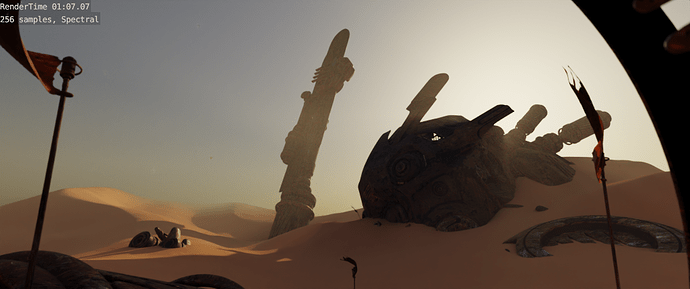

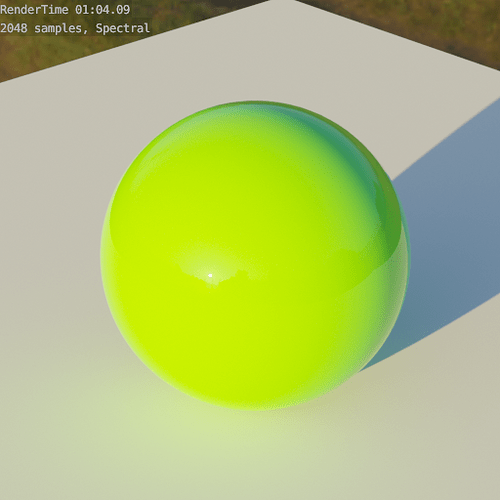

I did some testing, and it turns out there's almost no performance overhead in complex scenes, at least when rendering on GPU. I guess the reason is the change from 8 to 4 wavelengths per ray, since 4 wavelengths fit in the same data type as RGB values. I haven't tested CPU rendering yet.

In simple scenes, the performance hit is more noticeable.

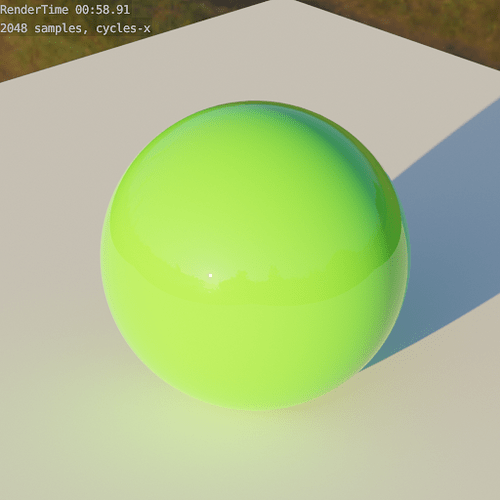

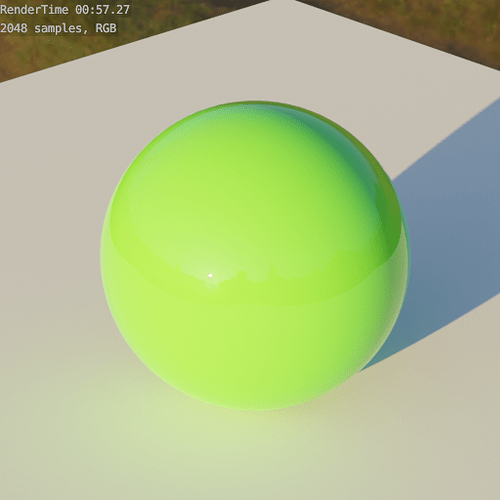

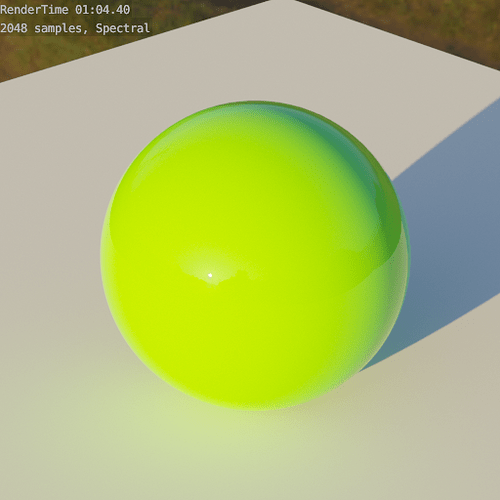

Note that render times with cycles-x are slower because I used an older build. With the XYZ D65-to-E hack, the renders are really close, the only differences being the effects of spectral rendering and a slightly darker image (not sure why). Also, the last test clearly demonstrates how spectral rendering can drastically change the result even in simple cases.

That’s right, everything must be correctly color managed or it will start falling apart at some point.

I was thinking about adding a new xyz_d65 role to the OCIO config so that the nodes can safely generate their output in the XYZ D65 color space and have it properly converted by OCIO later. That's pretty much what I've done in this build, but it's hard-coded for now.
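With a dedicated xyz_d65 role, the D65-to-E step could be a real chromatic adaptation instead of a per-axis scale. As an illustration only, the sketch below builds a D65 → E matrix with the Bradford transform, which is one common choice; whether OCIO or the branch would use Bradford, von Kries, or plain XYZ scaling is an assumption, and all names are hypothetical. It assumes the CIE 1931 2° D65 white point (0.95047, 1.0, 1.08883).

```python
# Sketch: Bradford chromatic adaptation from D65 to Illuminant E in XYZ.
BRADFORD = [
    [0.8951, 0.2664, -0.1614],
    [-0.7502, 1.7135, 0.0367],
    [0.0389, -0.0685, 1.0296],
]
D65_WHITE = [0.95047, 1.0, 1.08883]
E_WHITE = [1.0, 1.0, 1.0]


def matmul(a, b):
    """3x3 matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def matvec(m, v):
    """3x3 matrix times 3-vector."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def inverse3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[adj[r][s] / det for s in range(3)] for r in range(3)]


def bradford_adaptation(src_white, dst_white):
    """XYZ -> XYZ matrix: M^-1 * diag(dst_cone / src_cone) * M."""
    src = matvec(BRADFORD, src_white)  # source white in Bradford "cone" space
    dst = matvec(BRADFORD, dst_white)  # destination white in the same space
    scale = [[dst[r] / src[r] if r == s else 0.0 for s in range(3)] for r in range(3)]
    return matmul(inverse3(BRADFORD), matmul(scale, BRADFORD))


if __name__ == "__main__":
    adapt = bradford_adaptation(D65_WHITE, E_WHITE)
    for row in adapt:
        print(["%.5f" % v for v in row])
    # Sanity check: the D65 white should land on (1, 1, 1).
    print(matvec(adapt, D65_WHITE))
```

Unlike per-axis XYZ scaling, this mixes the X, Y and Z channels, so it cannot be reproduced with a single Multiply node; that is one reason a proper OCIO transform may be preferable to a node-level workaround.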

Sometimes it can get really confusing. It is an incorrect assumption because in this case Cycles uses XYZ with a D65 white point for some nodes. But from the other point of view, those nodes always generate colors in the XYZ D65 color space, which means they assume the XYZ color space always has a D65 white point, which is not always true.


I'm not sure what can be done once the spectral nodes are added. It won't be possible to change the white point of a continuous spectrum, at least not in the same way as is currently done with XYZ values.

I think it’s important to discuss and make all important decisions about color management before the initial merge. It is supposed to be a foundation for all future spectral functionality and changing it later might be more complicated.

Yeah, you are right. By "does not matter" I mostly meant that the spectral nodes are not back yet, so users cannot define a D65 light source and the issue would not be noticeable on the user's end yet.

It is nice that nodes using the XYZ space now output a white point consistent with the rest of the system; I just hope Brecht is OK with this solution.

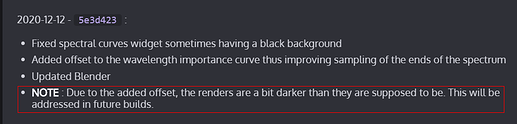

If I remember correctly, wasn't this because of this?

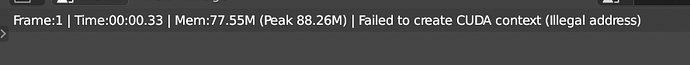

Also, CUDA fails every time I try to use it; I don't know how you got it to work.

Oh thanks, you’re right, I completely forgot about the wavelength importance sampling. I’ve uploaded a new build with a few fixes. Updated tests:

That’s probably the issue described in the feedback thread:

I did my previous tests before that commit. Try disabling adaptive sampling, for me the error appears when it’s enabled.

Nice to see the changes here. Is there more color noise now? Previously, although some was to be expected, it was basically a non-factor, but with the new build it seems more pronounced, at least judging by your examples. Maybe that's due to using only four wavelengths.

To be clear, it's not a terrible amount of noise: about the same level as the RGB version's, except in hue instead of lightness. But it is quite obvious.

I also noticed that in the barbershop scene in particular, even the RGB version shows surprisingly large discrepancies in which caustics are visible. Did you use the same seed? Are the changes expected to affect this? (Clearly, with spectral rendering the RNG will produce different results because you are asking for extra random numbers. But for RGB I'd expect it to be the same.)

It probably doesn't matter either way, but it seems like the non-spectral build you used somehow finds a few more caustic light paths…

You mentioned this 4-wavelength thing. I understand that we get better performance by using 4 instead of 8, but I'm missing what we lose by using fewer wavelengths. Judging from what you wrote here, the downside is probably more noticeable color noise? Also, do the 4 wavelengths include the hero wavelength?

Somehow I can't see that. Could you point out some specific areas I should zoom in on?

Also, speaking of caustics, I have been looking forward to the path guiding feature listed among the new features of Cycles X, but so far there has been little discussion or information about it. I get excited thinking about the possibility of path guiding combined with Spectral Cycles.

Yes. Basically, the Hero Wavelength algorithm picks and importance samples an entire set of wavelengths (as many as you ask for) per pass to figure out what color a pixel has. A naive algorithm only considers a single wavelength at a time, and that causes a TON of color noise. I think if you use Hero Wavelength with only a single wavelength sample, it reduces to that naive algorithm (there is only a single wavelength of light to consider, so importance sampling becomes a non-factor). The more wavelengths you add, the better it can nail the correct color in a single go. In practice, at 8 wavelengths the discrepancy is really tiny, and at (I think) 10 it is basically gone completely. But at 4 it is still quite noticeable.

That said, to be clear, this is just like all other noise. Even with the naive algorithm, the correct color eventually emerges, and with 4 wavelengths it can happen fairly quickly too. But clearly, at 128 samples in the scenes above, it's still very noticeable.
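The rotation scheme from the Hero Wavelength paper (Wilkie et al. 2014) can be sketched roughly as below. The hero is drawn uniformly from the range and the other n - 1 wavelengths are offset by equal fractions of the range, wrapping around, so with n = 1 it degenerates to the single-wavelength case described above. The 380 to 730 nm range and the function name are assumptions for illustration, not taken from the Cycles code.

```python
import random

LAMBDA_MIN = 380.0  # nm, assumed lower end of the sampling range
LAMBDA_MAX = 730.0  # nm, assumed upper end of the sampling range


def hero_wavelengths(u, n, lo=LAMBDA_MIN, hi=LAMBDA_MAX):
    """Return n wavelengths for one path sample.

    Index 0 is the hero, placed at lo + u * (hi - lo) for u in [0, 1).
    The other n - 1 wavelengths are shifted by j * (hi - lo) / n and
    wrapped back into [lo, hi), so all n are evenly spaced around the range.
    """
    span = hi - lo
    hero = lo + u * span
    return [lo + ((hero - lo) + j * span / n) % span for j in range(n)]


if __name__ == "__main__":
    rng = random.Random(1)
    for n in (1, 4, 8):
        print(n, [round(w, 1) for w in hero_wavelengths(rng.random(), n)])
```

Because the offsets wrap around, each of the n wavelengths is on its own uniformly distributed over the range, which is what allows their contributions to be simply averaged.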

I mostly noticed it on shiny surfaces when flicking back and forth. It might really just be a seed/RNG difference. But check out the sheen on the dark cupboard on the far left of the image in the foreground, the caustic on the bluish bottle on the far right side of the image (top of the shelf), and the reflection on the arm of the light right next to it.

Looking at it again, it's also possible that it's quite the opposite and those are things that would go away with more samples. At this point I'm not sure; it could go either way.

That's right. The tradeoff here is between noise (both colour and brightness, but primarily colour) and the cost of each sample. With 4 wavelengths per sample there is more noise per sample, but samples are slightly cheaper to compute, so you can take more of them. With 8, there is very little colour variation, making colour noise almost negligible, but the cost per sample is slightly higher.

Maybe this should become a parameter exposed only in experimental mode. Definitely not something most people want to mess with, but for testing purposes, hiding it behind the Experimental Features checkbox might be a good idea.

It would be fairly straightforward to explain, too. The tooltip could just say "Tradeoff between color noise and speed".

Specifically, the expected colour noise from a single hero wavelength sample is directly tied to the average colour of x evenly spaced wavelengths within the sampling range.

For example, with 1 wavelength sample per path, you can only determine the amount of a single wavelength, the result of this is that each sample has the ‘colour’ of a pure wavelength. If you have the hero plus 1 other wavelength (always ‘180 degrees’ from the hero) then the expected colour variation per sample is based on the variation in the averages of the two. As you get up to 8 or more, it almost doesn’t matter what the hero wavelength is, the average is always going to be almost grey. This is why you don’t see much colour noise with 8 wavelength sampling.
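To put rough numbers on that, the sketch below uses a flat (equal-energy) spectrum, the Wyman, Sloan and Shirley (2013) analytic fit of the CIE 1931 colour matching functions, and an assumed 380 to 730 nm range. For each wavelength count it measures how far the per-sample xy chromaticity wanders as the hero moves through the range. This is only a proxy for colour noise under those assumptions, not a measurement of the branch.

```python
import math

LAMBDA_MIN, LAMBDA_MAX = 380.0, 730.0  # nm, assumed sampling range


def _lobe(lam, mu, s1, s2):
    """Piecewise Gaussian from the Wyman, Sloan & Shirley (2013) CMF fit."""
    s = s1 if lam < mu else s2
    return math.exp(-0.5 * ((lam - mu) / s) ** 2)


def cie_xyz(lam):
    """Analytic approximation of the CIE 1931 2-degree colour matching functions."""
    x = (1.056 * _lobe(lam, 599.8, 37.9, 31.0)
         + 0.362 * _lobe(lam, 442.0, 16.0, 26.7)
         - 0.065 * _lobe(lam, 501.1, 20.4, 26.2))
    y = 0.821 * _lobe(lam, 568.8, 46.9, 40.5) + 0.286 * _lobe(lam, 530.9, 16.3, 31.1)
    z = 1.217 * _lobe(lam, 437.0, 11.8, 36.0) + 0.681 * _lobe(lam, 459.0, 26.0, 13.8)
    return x, y, z


def hero_wavelengths(u, n, lo=LAMBDA_MIN, hi=LAMBDA_MAX):
    """Hero at lo + u * span plus n - 1 companions rotated by span / n (wrapping)."""
    span = hi - lo
    return [lo + (u * span + j * span / n) % span for j in range(n)]


def chromaticity_spread(n, heroes=350):
    """RMS distance in CIE xy between the single-sample colours of a flat
    spectrum and their mean, over evenly spaced hero positions."""
    points = []
    for k in range(heroes):
        X = Y = Z = 0.0
        for lam in hero_wavelengths((k + 0.5) / heroes, n):
            x, y, z = cie_xyz(lam)
            X, Y, Z = X + x, Y + y, Z + z
        total = X + Y + Z
        points.append((X / total, Y / total))
    mx = sum(p[0] for p in points) / heroes
    my = sum(p[1] for p in points) / heroes
    return math.sqrt(sum((px - mx) ** 2 + (py - my) ** 2 for px, py in points) / heroes)


if __name__ == "__main__":
    for n in (1, 2, 4, 8, 16):
        print(f"{n:2d} wavelengths: xy spread ~ {chromaticity_spread(n):.4f}")
```

The spread shrinks as n grows, matching the description above: with more evenly spaced wavelengths, the average is close to grey whatever the hero is.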

Thanks for the clarification; now I understand what it's about.

What I meant by "do the 4 wavelengths include the hero wavelength" was actually whether "hero wavelength plus 1" means 2 wavelengths, i.e. is 4 here 1 hero + 3, or 1 hero + 4? After reading your posts, I guess it is 1 hero + 3?

I noticed these. I assumed they were caused by differences in the sampling used for cycles-x, unrelated to spectral, but made more obvious by the introduction of colour noise. I imagine they have the same ground truth result.

Seeing as how ill-defined/arbitrary hue is, what do you actually mean by that? I'm guessing you just add half the width of the wavelength sampling interval and then clip it back into the range if it goes out of range? Obviously 2 samples is too few and thus not a great idea anyway, but I wonder whether there are, in principle, smarter ways to do it that conform more closely to perception. For example, I'm imagining stretching the sample space according to how strongly we perceive each part, so that moving left/right along the spectrum is perceptually uniform (sort of like histogram equalization), and using that as the uniform sampling instead.

Might be complete nonsense though. I have no idea whether that would mess with the convergence guarantees.
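One way to get that "stretched" spacing is inverse-CDF (importance) sampling: tabulate a weight over the range, turn it into a CDF, do the hero rotation in the uniform u domain, and only then warp each u to a wavelength. Because every rotated u is still uniformly distributed, each wavelength on its own follows the tabulated pdf, so dividing each contribution by that pdf keeps the estimator unbiased as long as the pdf is never zero (hence the floor). The weight used here (x̄ + ȳ + z̄ from the Wyman et al. 2013 fit plus a floor of 0.01), the 380 to 730 nm range and the 1 nm bins are illustrative assumptions; this is not taken from the branch, whose wavelength importance sampling may work differently.

```python
import bisect
import math

LO, HI, BINS = 380.0, 730.0, 350  # assumed range, 1 nm bins
WIDTH = (HI - LO) / BINS


def _lobe(lam, mu, s1, s2):
    s = s1 if lam < mu else s2
    return math.exp(-0.5 * ((lam - mu) / s) ** 2)


def weight(lam):
    """Hypothetical sampling weight: xbar + ybar + zbar (Wyman et al. 2013 fit)
    plus a small floor so the pdf is never zero anywhere in the range."""
    x = (1.056 * _lobe(lam, 599.8, 37.9, 31.0) + 0.362 * _lobe(lam, 442.0, 16.0, 26.7)
         - 0.065 * _lobe(lam, 501.1, 20.4, 26.2))
    y = 0.821 * _lobe(lam, 568.8, 46.9, 40.5) + 0.286 * _lobe(lam, 530.9, 16.3, 31.1)
    z = 1.217 * _lobe(lam, 437.0, 11.8, 36.0) + 0.681 * _lobe(lam, 459.0, 26.0, 13.8)
    return x + y + z + 0.01


# Piecewise-constant pdf over the bins and its piecewise-linear CDF.
WEIGHTS = [weight(LO + (i + 0.5) * WIDTH) for i in range(BINS)]
TOTAL = sum(WEIGHTS)
CDF = [0.0]
for w in WEIGHTS:
    CDF.append(CDF[-1] + w / TOTAL)
CDF[-1] = 1.0  # remove accumulated rounding error


def sample_wavelength(u):
    """Inverse-CDF warp of u in [0, 1) to (wavelength in nm, pdf per nm)."""
    i = min(bisect.bisect_right(CDF, u) - 1, BINS - 1)
    frac = (u - CDF[i]) / (CDF[i + 1] - CDF[i])
    frac = min(max(frac, 0.0), 1.0)
    return LO + (i + frac) * WIDTH, WEIGHTS[i] / (TOTAL * WIDTH)


def hero_wavelengths_warped(u, n):
    """Rotate the hero in the uniform u domain, then warp every point.
    Each rotated u is still uniform, so each wavelength follows the pdf."""
    return [sample_wavelength((u + j / n) % 1.0) for j in range(n)]


if __name__ == "__main__":
    for lam, pdf in hero_wavelengths_warped(0.3, 8):
        # A spectral estimate would weight f(lam) by 1 / pdf, then average over n.
        print(f"{lam:6.1f} nm  pdf={pdf:.5f}  weight={1.0 / pdf:8.1f}")
```

Wavelengths end up denser where the weight is high and sparser near the ends of the range, while the 1 / pdf factor compensates so the expected result does not change.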

Yes, 4 wavelengths means the hero plus 3. The "hero" is simply the first of the n wavelengths and is the one used to determine the path of the ray.