Looks good, I think this is a great scene to test regressions, thanks!

Oh these are great! I love how comprehensive this is!

It’s neat how clearly visible the relative energy loss of the various glossy materials is. Beckmann seems to come pretty close to correct, but, unless I use a color picker to confirm that my eyes aren't playing tricks on me, Multiscatter GGX still comes much closer to the diffuse material’s behavior.

I’m not quite sure yet how to interpret A and B, but if these are refractive spheres, it might be that you are seeing the light’s reflection distorted through the sphere, and that’s what causes the outer ring. If so, the ring would change if you gave them different IORs.

Indeed it does. I will try to understand this one further and maybe add some local context to help others understand it before I finalize it. I will also add more parts this week, like SSS, which I totally forgot. Then I will add proper descriptions inside the file and some annotations. After that, it should be stable.


Try reducing the bounce depth to literally 0 or 1 (whatever the minimum is for these to show up). Does that ring appear at the same time as the bottom, or is it actually one bounce deeper?

Good idea, I will try.

I mentioned earlier that I expected the small spheres to be too small, but I found that the point-lights inside them were actually too large. I have reduced their size to zero which fixed that problem.

Here is a table of linear sRGB values for D65 lighting with 2° observer*, based on your spectral data:

| Square | Name | R | G | B |
|---|---|---|---|---|
| 1 | dark skin | 0.176171074 | 0.078205657 | 0.050325319 |
| 2 | light skin | 0.559418554 | 0.308706903 | 0.222877904 |
| 3 | blue sky | 0.113169101 | 0.199214111 | 0.336230423 |
| 4 | foliage | 0.094584966 | 0.148402603 | 0.049897263 |
| 5 | blue flower | 0.236292868 | 0.225854479 | 0.443939932 |
| 6 | bluish green | 0.133852136 | 0.517016744 | 0.402965831 |
| 7 | orange | 0.701383912 | 0.199180602 | 0.022910712 |
| 8 | purplish blue | 0.068398093 | 0.10599782 | 0.377212371 |
| 9 | moderate red | 0.558101901 | 0.090571004 | 0.122013939 |
| 10 | purple | 0.107792384 | 0.044337033 | 0.146859497 |
| 11 | yellow green | 0.348869354 | 0.501926846 | 0.047738753 |
| 12 | orange yellow | 0.791639614 | 0.364952173 | 0.026771391 |
| 13 | blue | 0.027232697 | 0.047814187 | 0.309225394 |
| 14 | green | 0.060132276 | 0.305583239 | 0.060632055 |
| 15 | red | 0.446096575 | 0.028451723 | 0.042072838 |
| 16 | yellow | 0.851849963 | 0.579526249 | 0.010510814 |
| 17 | magenta | 0.506080816 | 0.088937428 | 0.297279333 |
| 18 | cyan | -0.03336819 | 0.248814954 | 0.385518331 |
| 19 | white | 0.886877719 | 0.888596746 | 0.874818407 |
| 20 | neutral 8 | 0.586376076 | 0.583258989 | 0.58230797 |
| 21 | neutral 6.5 | 0.358274318 | 0.358076466 | 0.358818775 |
| 22 | neutral 5 | 0.20316114 | 0.202968908 | 0.203580283 |
| 23 | neutral 3.5 | 0.091064515 | 0.092873282 | 0.094267457 |
| 24 | black | 0.032665982 | 0.03363732 | 0.03527549 |

@kram1032: I haven’t tried it in Spectral-Cycles yet, but maybe you can verify the values in your test scene?

*) the observer should not matter too much, really.

Note: since the cyan patch comes out negative in R (it lies outside the sRGB gamut), I clipped it.
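The last step behind a table like this, going from CIE XYZ to linear sRGB and clipping out-of-gamut negatives as with the cyan patch, can be sketched as below. This is an illustration, not the exact script used for the table: it assumes the XYZ values were already obtained by integrating reflectance × D65 × the 2° colour-matching functions and normalized so that a perfect white has Y = 1. The matrix is the commonly published XYZ-to-linear-sRGB matrix for a D65 white; the function name is hypothetical.

```python
# Commonly published XYZ (D65 white, Y = 1) -> linear sRGB matrix.
XYZ_TO_LINEAR_SRGB = (
    (3.2404542, -1.5371385, -0.4985314),
    (-0.9692660, 1.8760108, 0.0415560),
    (0.0556434, -0.2040259, 1.0572252),
)


def xyz_to_linear_srgb(xyz, clip_negative=True):
    """Convert one CIE XYZ triple to linear sRGB.

    Colours outside the sRGB gamut (like the cyan patch) produce negative
    components; with clip_negative=True those are clamped to 0.
    """
    rgb = tuple(sum(m * c for m, c in zip(row, xyz)) for row in XYZ_TO_LINEAR_SRGB)
    if clip_negative:
        rgb = tuple(max(0.0, v) for v in rgb)
    return rgb
```

As a sanity check, the D65 white point `(0.95047, 1.0, 1.08883)` should map to approximately `(1, 1, 1)`.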

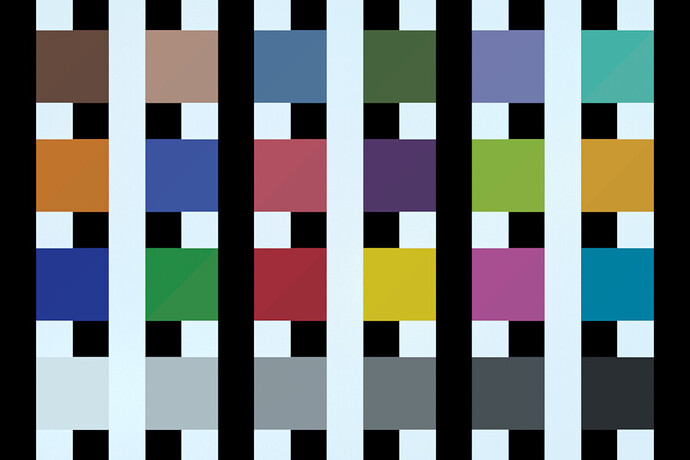

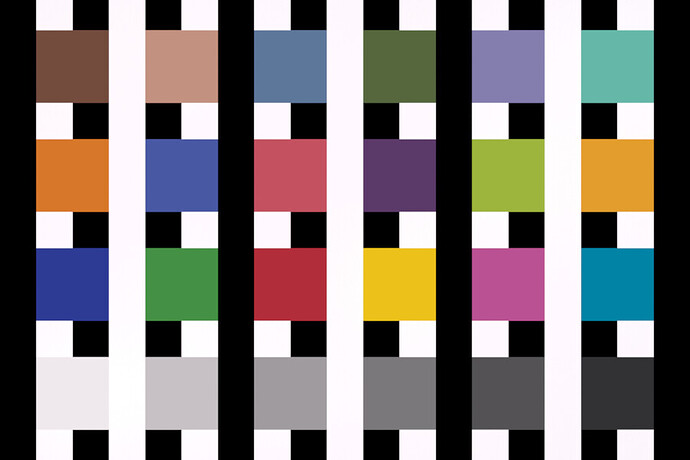

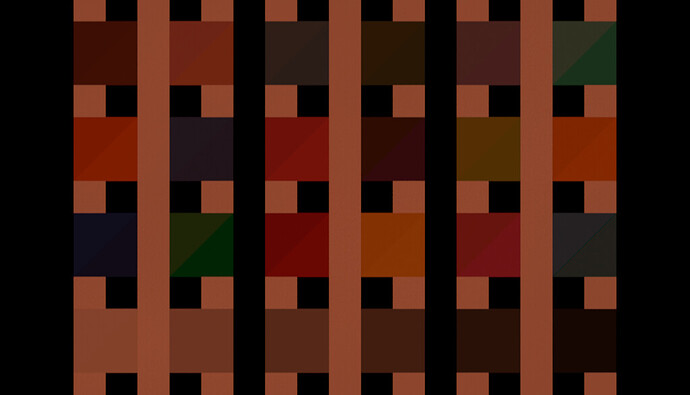

In the spectral and diff versions, the top-left corner of each swatch is spectral and the bottom-right corner is RGB. In the RGB version, the entire swatch is RGB.

Updated MacBeth chart:

RGB version (rendered in regular Cycles, standard view transform for comparability):

Diff (not totally comparable because I used spectral blackbody lighting which RGB Cycles can’t do):

Spectral EXR:

https://www.dropbox.com/s/l49ge96sd34oytm/Spectral%20and%20RGB%20Colors%20white%20stripes%20linear%20sRGB.exr?dl=0

RGB EXR:

https://www.dropbox.com/s/5e0spgymfhvmm5f/RGB%20Colors%20white%20stripes%20linear%20sRGB.exr?dl=0

Updated Blend file:

https://www.dropbox.com/s/ob5q04t9bb2kuz2/split%20MacBeth%20Chart%20alternating%20background.blend?dl=0

Blendfile for RGB rendering:

https://www.dropbox.com/s/kemtbal1dn99sug/MacBeth%20Chart%20alternating%20background.blend?dl=0

@kram1032: I think you accidentally uploaded the spectral EXR twice and forgot the blend file.

But the EXRs don’t look too different, apart from the lighting type.

Interestingly, the pink and red triangles at positions 9 and 15 show a discrepancy between spectral and RGB in the spectral version, which is odd because I computed the RGB values from the same spectra! How accurate is the RGB-to-spectral conversion inside Spectral-Cycles anyway? I’ll try adding red, green, and blue gradient areas to my test scene to see whether that conversion is at least reversible (input RGB → spectral → output RGB should give back the input).
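The reversibility test proposed above boils down to pushing gradients through RGB → spectrum → RGB and measuring how far the output drifts. A minimal sketch, assuming you can wrap the upsampling and the spectrum-to-RGB projection as plain functions; `toy_upsample` and `toy_project` are hypothetical box-shaped stand-ins, not what Spectral Cycles actually does.

```python
from typing import Callable, List, Sequence, Tuple

RGB = Tuple[float, float, float]


def gradient(start: RGB, end: RGB, steps: int) -> List[RGB]:
    """Linear ramp between two linear-RGB colours (steps must be >= 2)."""
    return [
        tuple(a + (b - a) * i / (steps - 1) for a, b in zip(start, end))
        for i in range(steps)
    ]


def round_trip_errors(
    colours: Sequence[RGB],
    upsample: Callable[[RGB], List[float]],
    project: Callable[[List[float]], RGB],
) -> List[float]:
    """Largest per-channel |output - input| for each colour after RGB -> spectrum -> RGB."""
    errors = []
    for rgb in colours:
        out = project(upsample(rgb))
        errors.append(max(abs(o - i) for o, i in zip(out, rgb)))
    return errors


# Toy stand-ins (hypothetical): a 30-sample "spectrum" whose first 10 samples
# carry blue, the next 10 green and the last 10 red.
def toy_upsample(rgb: RGB) -> List[float]:
    r, g, b = rgb
    return [b] * 10 + [g] * 10 + [r] * 10


def toy_project(spectrum: List[float]) -> RGB:
    def mean(xs: List[float]) -> float:
        return sum(xs) / len(xs)

    return (mean(spectrum[20:]), mean(spectrum[10:20]), mean(spectrum[:10]))
```

With the toy pair the errors are zero up to rounding, by construction; swapping in the real upsampling and projection would show how large the red and green discrepancies are along each ramp.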

fixed, thanks!

Yes, I believe that points to the same discrepancy that we see here:

That is, it’s probably a matter of the spectral upsampling being off rather than anything else.

You can see that red is too dark and green is too yellowish.

If you want, you could just copy those two triangles from my scene: a three-way RGB gradient. In fact, for the CMY version, you could use both variants (one mixes RGB colors, the other mixes spectra).

It’d also be possible to extend at least the spectral CMY version beyond its edges to check how the colors behave out of gamut (while still keeping all spectra positive).

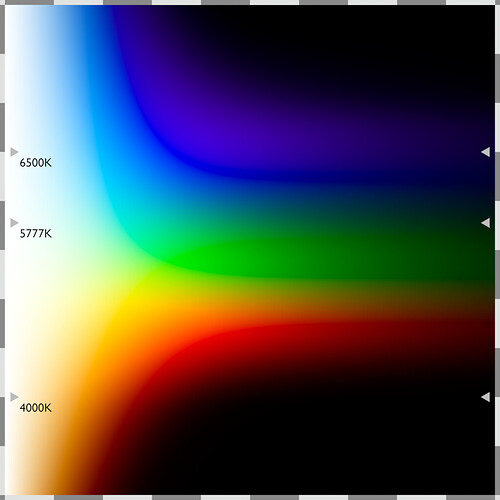

And while you’re adding gradients, it might be worth having two strips of blackbody colors.

The spectral version of that could also be taken through a power transform to show how it approaches a pure spectral color. There are some very early tests I did to this effect:

Blackbody spectra:

EXR:

https://www.dropbox.com/s/f30n469ka71pi2u/Spectral%20Blackbody.exr?dl=0

Blend File:

https://www.dropbox.com/s/2cv91e64gdlex4f/blackbody%20emission%20grid.blend?dl=0

This kind of setup could easily be modified to fit whatever you need.

Also, this test suggests that the blackbody color that looks “whitest” to us is at around 5500K (in the sense that it takes the most bounces off a surface whose reflectance matches that blackbody spectrum before it appears saturated rather than white).
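The power-transform and bounce idea can be sketched numerically with Planck's law: normalize a blackbody spectrum to a peak of 1 so it can serve as a reflectance, then raise it to the n-th power to model n bounces under white light. Higher powers concentrate energy around the peak wavelength, which is why the color drifts toward a pure spectral hue. This is an illustration only; the peak normalization and the function names are assumptions, and the conversion to RGB is left out.

```python
import math

H = 6.62607015e-34   # Planck constant, J*s
C = 299792458.0      # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def planck(wavelength_nm: float, temperature_k: float) -> float:
    """Blackbody spectral radiance B(lambda, T) in W / (sr * m^3)."""
    lam = wavelength_nm * 1e-9
    return (2.0 * H * C ** 2 / lam ** 5) / math.expm1(H * C / (lam * K_B * temperature_k))


def blackbody_reflectance(temperature_k, wavelengths_nm):
    """Blackbody spectrum rescaled so its peak over the sampled range is exactly 1."""
    values = [planck(w, temperature_k) for w in wavelengths_nm]
    peak = max(values)
    return [v / peak for v in values]


def after_bounces(reflectance, bounces):
    """Spectrum left after `bounces` bounces of white light off this reflectance."""
    return [r ** bounces for r in reflectance]
```

For example, `blackbody_reflectance(5500, range(380, 781))` peaks near 527 nm, in line with Wien's displacement law, and `after_bounces(..., 8)` narrows that peak considerably.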

I’ll try to incorporate all of these and more tomorrow. One thing, though: the scene is getting slow to render, so I am thinking of splitting it into multiple scenes in the same blend file. Is that a practical way of working?

I’m afraid so. But that means that all the red-is-somehow-wrong remarks above may be at least partially caused by that effect. So in reality, one cannot compare RGB with spectral color fidelity, only approximate one with the other.

It’s definitely better to have separate renders for separate situations. You could, however, still have it all in one scene but, like, put different cameras for different parts. Or separate by collections or something.

So then, if you wanted to do a full test, you’d use the camera that tests literally everything. Otherwise, you pick a camera aimed at some subset of tests.

Like, the RGB and Blackbody gradients and really also the MacBeth chart are each aimed at testing colors. So you could put them all together into some corner that a single camera can look at. Those pieces also are gonna render extremely quickly.

Missed this bit before. I wouldn’t say that. Rather, it means the current spectral upsampling is simply wrong. It needs fixing to account for the semi-recent changes to the spectral sampling and such. The RGB colors you put in should match the output RGB colors as closely as possible, at least when lit by white light of energy 1 (more precisely, a 6500K spectrum, viewed through the regular sRGB view transform). The current discrepancy, especially in the red channel, is rather massive.

It should really be an easy fix; it probably amounts to replacing one LUT with another. @smilebags already mentioned it’s on his radar, though.

Bear in mind that all of the examples are getting rather mangled by per-channel processing, so analytic comparisons are void from the outset: the output image formation breaks them fundamentally.

It's just worth keeping in mind how fundamentally broken per-channel lookups are.
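The point about per-channel lookups can be shown in a few lines: apply the same nonlinear curve to R, G and B independently, and the channel ratios (the chromaticity) of a bright color change, while greys stay grey. The curve below is the simple x / (1 + x) Reinhard curve, used purely as a stand-in; it is an assumption for illustration and not what Filmic or Standard actually apply.

```python
def reinhard(x: float) -> float:
    """Stand-in per-channel curve x / (1 + x) (not Filmic or Standard)."""
    return x / (1.0 + x)


def per_channel(rgb, curve=reinhard):
    """Apply the same curve to each channel independently."""
    return tuple(curve(c) for c in rgb)


def chromaticity(rgb):
    """Channel ratios r/(r+g+b) and g/(r+g+b); independent of overall intensity."""
    total = sum(rgb)
    return (rgb[0] / total, rgb[1] / total)
```

A bright orange such as `(4.0, 1.0, 0.25)` has a red ratio of about 0.76 before the curve and about 0.53 after it, so its hue skews toward white, whereas `(2.0, 2.0, 2.0)` keeps a ratio of exactly one third.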

Sure, but, like, at the very least: if I pick a color that’s supposed to be sRGB and use a directly visible mesh emitter with that color at energy 1, with EV 0 and the “Standard” view transform, I’d hope to closely recover that color.

Does it have to be exact? No.

Are the differences we have uncovered above reasonable discrepancies?

I honestly feel like they aren’t. We can at the very least do better than that.
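For the emitter test above, the expected display values can be computed directly. A rough sketch, assuming the “Standard” view transform with no look applied amounts to scaling by 2^EV and then applying the piecewise sRGB transfer function with clipping to [0, 1]; that is a simplification of Blender's color management, and the function names are hypothetical.

```python
def srgb_encode(linear: float) -> float:
    """Piecewise sRGB transfer function (IEC 61966-2-1); input clipped to [0, 1]."""
    x = min(max(linear, 0.0), 1.0)
    if x <= 0.0031308:
        return 12.92 * x
    return 1.055 * x ** (1.0 / 2.4) - 0.055


def srgb_decode(encoded: float) -> float:
    """Inverse of srgb_encode for values in [0, 1]."""
    if encoded <= 0.04045:
        return encoded / 12.92
    return ((encoded + 0.055) / 1.055) ** 2.4


def standard_view(rgb, exposure_ev: float = 0.0):
    """Assumed approximation of 'Standard': exposure scale, then sRGB encoding."""
    scale = 2.0 ** exposure_ev
    return tuple(srgb_encode(c * scale) for c in rgb)
```

Under these assumptions, decoding the displayed values of an energy-1 emitter at EV 0 should give back the picked linear color, so any remaining per-channel difference would point at the upsampling rather than the view transform.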

IMO the blue channel’s differences are small enough. Green is quite a bit too yellow and red quite a bit too dark. If we got those down to the level of the difference in blue, that’d be alright with me.

You might be surprised just how wonky the results actually are. Hence anything beyond “looks OK”, such as using charts, is simply not useful.

Even this level of inspection is somewhat undermined by per-channel mangling.

I mean I know there’s a ton of mangling. And I’d honestly expect some differences for some random colors that are far from pure, given how the current spectral upsampling is performed. But pure Red, Green, or Blue spectra should be pretty darn spot on by construction.

IIRC, all Spectral Cycles is doing right now is taking a linear combination of three spectra built to satisfy the following constraints:

1. non-negative

2. at most 1

3. the three spectra, each at a weight of 1, add up to the uniform spectrum

4. each spectrum on its own ends up, after all the conversions of the Standard transform, as pure red, pure green, or pure blue at 100% brightness, respectively.

Right? That’s what we were doing? Or did I misunderstand something there?

Constraints 1 and 2 should ensure energy conservation. Very extreme colors sometimes seem to slightly violate this.

Constraint 3 should make neutral (grey) colors appear neutral. This part appears to work out pretty much perfectly.

Constraint 4 should ensure that pure red, green, and blue match perfectly. Something there seems to fail right now.
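The four constraints can be checked mechanically on sampled basis spectra. A minimal sketch, assuming the three basis spectra share a wavelength grid and that the spectrum-to-RGB path is available as a `project` function; `toy_project` and the box-shaped `RED`, `GREEN`, `BLUE` spectra are hypothetical stand-ins, not the actual Spectral Cycles data.

```python
from typing import Callable, Dict, Sequence, Tuple

RGB = Tuple[float, float, float]


def check_basis(
    red: Sequence[float],
    green: Sequence[float],
    blue: Sequence[float],
    project: Callable[[Sequence[float]], RGB],
    tol: float = 1e-3,
) -> Dict[str, bool]:
    """Check three sampled basis spectra against constraints 1 to 4."""
    basis = (red, green, blue)
    targets = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
    return {
        # 1. non-negative at every sample
        "non_negative": all(v >= -tol for s in basis for v in s),
        # 2. at most 1 at every sample
        "at_most_one": all(v <= 1.0 + tol for s in basis for v in s),
        # 3. red + green + blue is the uniform spectrum (1 everywhere)
        "sums_to_uniform": all(
            abs(r + g + b - 1.0) <= tol for r, g, b in zip(red, green, blue)
        ),
        # 4. each basis spectrum maps to its pure primary
        "primaries_exact": all(
            all(abs(got - want) <= tol for got, want in zip(project(s), t))
            for s, t in zip(basis, targets)
        ),
    }


# Toy projection (hypothetical): mean of each third of a 30-sample spectrum.
def toy_project(spectrum: Sequence[float]) -> RGB:
    def mean(xs: Sequence[float]) -> float:
        return sum(xs) / len(xs)

    return (mean(spectrum[20:30]), mean(spectrum[10:20]), mean(spectrum[0:10]))


BLUE = [1.0] * 10 + [0.0] * 20
GREEN = [0.0] * 10 + [1.0] * 10 + [0.0] * 10
RED = [0.0] * 20 + [1.0] * 10
```

With real data, a failing `primaries_exact` while the other three checks pass would match what the thread observes: greys come out neutral, but pure red and green do not.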

In spectral space, yes, but remember that no display can show them, so the working space ends up as some mixture of RGB lights, and that’s where per-channel processing mangles things: per-channel never delivers the chromaticity of the light mixture in the working space. Ever. On some level, the output is just accidental.

Is there a way to compare working spaces, then? Because I’m pretty sure the result would be that the issue is already present at that level.

If you stick solely to display-linear output with no compression scheme, the results should come out correctly.

That’d be the “Raw” output, right? (It's currently not in Spectral Cycles, but I could use the same trick I use to get Filmic.)

It should also be what you get when you save MultiLayer EXRs As Renders, right? In which case, the diffs I’m taking are diffs of the EXRs, so I have already shown that this is a real effect even in the working space.

(I also deliberately skipped denoising most of the time for the sake of better comparability.)