Hello, I’m not a technical person and I don’t understand what you people are talking about, but I just converted a scene to work with the Standard view transform (not Filmic).


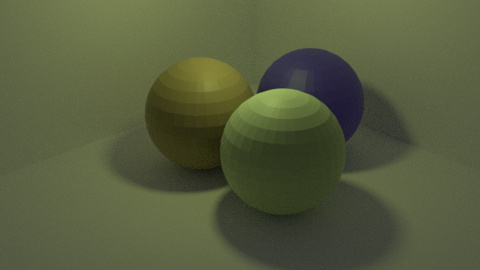

The three spheres are set to 1 in R, G and B respectively.

RGB

Spectral

RGB Filmic


The fact that I can’t tell the difference whatsoever is a testament to the math working out!

Thanks for the great (and beautiful) demo, this shows how little most scenes with very tame lighting will change.

Hopefully we can get something similar to Filmic working again in the spectral version, because then I suspect you might be able to find some more differences with very strongly lit scenes or very saturated lights.

That being said, it’s great to see that spectral rendering isn’t going to completely change the appearance of every scene, and I think that’s a good thing. If and when it gets merged, I think the best outcome is for people to initially just think ‘Oh, I don’t remember Cycles having coloured noise…’ and only later start to see slight differences in bright and saturated scenes; that’s all that should change initially.

Thanks again for the test @NizarAmous, it looks awesome in both engines.

In your builds CUDA doesn’t work and OptiX isn’t even present.

Is this something that will require some sort of port of its own on top of your current work, or is this a build issue? Some of the more difficult scenes are hard to test, since Cycles on CPU isn’t really that fast.

This is the Windows console output when switching to CUDA; Blender reports no CUDA device.

AFAIK this is hard for smilebags to test, because he has access to neither a CUDA device nor one with OptiX?

It’s a branch, so it’s kinda expected that it only works on the hardware the dev working on it has access to, especially this early in the process.

That being said, it is possible to build the CUDA/OptiX kernels without having an NVIDIA card, to at least rule out basic build errors; actual testing won’t happen, though.

If I have some time today I’ll take a quick look, but I make no guarantees that’ll actually happen.

I personally wouldn’t benefit either, but for those who do, that’d be very helpful, so thanks in advance if it happens.

Nice render. If you put the balls closer together or make them larger, you may see greater differences. That is, using coloured lights on those balls, or letting the light bounce between them, will reveal the energy discrepancies much more visibly.
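To make the bounce point concrete, here is a toy C++ sketch (an illustration only, not code from either engine). It assumes a single colour channel made of two spectral bins, with the channel value taken as their plain average; real renderers use colour matching functions. Two balls that each reflect only one of the bins pass no light to each other spectrally, while the RGB version still passes a quarter of the energy.

```cpp
#include <array>

// Toy model, an assumption for illustration only: one colour channel is
// represented by two spectral bins, and its RGB value is the plain average
// of those bins. Real renderers use colour matching functions instead.
using Spectrum2 = std::array<float, 2>;

// Collapse the two spectral bins into a single channel value.
inline float to_channel(const Spectrum2 &s) { return 0.5f * (s[0] + s[1]); }

// Light reflected off a surface: multiply light and albedo bin by bin.
inline Spectrum2 reflect(const Spectrum2 &light, const Spectrum2 &albedo) {
  return {light[0] * albedo[0], light[1] * albedo[1]};
}

// White light bounces off ball A and then ball B; the spectrum is only
// collapsed to a channel value at the very end.
inline float two_bounces_spectral(const Spectrum2 &a, const Spectrum2 &b) {
  const Spectrum2 white = {1.0f, 1.0f};
  return to_channel(reflect(reflect(white, a), b));
}

// The RGB equivalent: every surface is collapsed to a channel value first,
// and the channel values are multiplied together.
inline float two_bounces_rgb(const Spectrum2 &a, const Spectrum2 &b) {
  const Spectrum2 white = {1.0f, 1.0f};
  return to_channel(white) * to_channel(a) * to_channel(b);
}
```

With `a = {1, 0}` and `b = {0, 1}` the spectral path gives 0 and the RGB path gives 0.25. A single bounce of white light off one ball gives the same answer both ways; the difference only appears once light is multiplied by more than one coloured spectrum, which is why inter-reflections and coloured lights show it best.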

Getting to play with actual spectral rendering is key to understanding how everything connects together. To this end, you might find this goofy set of questions I’ve written up insightful here, and it may help with your authoring for spectral rendering.

Again, please keep the demos coming. They are invaluable, especially if you manage to exploit the spectral differences in an illustrative manner.

Am I correct that there are no direct spectral color inputs yet? Things like blackbody and wavelength nodes still translate to RGB as usual and then convert back to spectral? Also, if direct spectral inputs are added, some lamp spectra textures would be nice to accurately simulate poor-CRI bulbs like “cheap dingy bathroom fluorescents”

That is correct. As far as I’m aware, blackbody and wavelength nodes are not yet translated to properly provide spectra.

A pure wavelength node might not be super useful though. From what I’ve read, @smilebags is thinking about doing a gaussian spectrum instead of a perfectly sharp peak (which, I suspect, would not work very well for sampling)
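A rough C++ sketch of why a sharp peak is awkward when wavelengths are sampled randomly. `gaussian_spectrum`, `spike_spectrum` and `fraction_nonzero` are hypothetical helpers, not part of the branch, and evenly spaced wavelengths stand in for the renderer's random samples.

```cpp
#include <cmath>

// Hypothetical helper: a Gaussian-shaped emission spectrum centred on
// `center_nm` with standard deviation `width_nm`, normalised so its peak is 1.
inline float gaussian_spectrum(float wavelength_nm, float center_nm, float width_nm) {
  const float d = (wavelength_nm - center_nm) / width_nm;
  return std::exp(-0.5f * d * d);
}

// A perfectly sharp peak: non-zero only when the sampled wavelength lands
// exactly on the centre, which continuous random samples essentially never do.
inline float spike_spectrum(float wavelength_nm, float center_nm) {
  return wavelength_nm == center_nm ? 1.0f : 0.0f;
}

// Fraction of n evenly spaced wavelengths in [min_nm, max_nm] that receive
// any energy from `spectrum`; a rough stand-in for how often a path
// tracer's sampled wavelengths would "see" the light at all.
template <typename Spectrum>
float fraction_nonzero(Spectrum spectrum, float min_nm, float max_nm, int n) {
  int hits = 0;
  for (int i = 0; i < n; ++i) {
    const float w = min_nm + (max_nm - min_nm) * (i + 0.5f) / n;
    if (spectrum(w) > 1e-4f) ++hits;
  }
  return float(hits) / n;
}
```

Over 380 to 730 nm with 1000 samples, a 10 nm wide Gaussian centred at 550 nm is hit by roughly a quarter of the samples, while a spike at 550.3 nm is hit by none of them.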

I have a feeling implementing those two would actually be quite trivial (you’d literally just provide a normalized function of the spectrum in question instead of doing the conversion to RGB which currently is then pointlessly converted back into a different spectrum) but the priority appears to be to get the basic colors right for now. (Apparently specifically blues need more work still)

Though he has just asked Brecht about a function that would be very useful for implementing these functions so perhaps it’s not that far off. - Those two nodes are certainly among the lowest-hanging fruits of putting in actual spectra.
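For the blackbody case, "providing a normalized function of the spectrum" could look roughly like this C++ sketch of Planck's law. The normalisation (dividing by the value at Wien's peak wavelength) is an assumption chosen for illustration; the branch may well normalise differently, for example by luminance.

```cpp
#include <cmath>

// Planck's law: spectral radiance of a black body in W / (sr * m^3), for a
// wavelength given in nanometres and a temperature in kelvin.
inline double planck_radiance(double wavelength_nm, double temperature_k) {
  const double h = 6.62607015e-34;             // Planck constant, J*s
  const double c = 2.99792458e8;               // speed of light, m/s
  const double k = 1.380649e-23;               // Boltzmann constant, J/K
  const double lambda = wavelength_nm * 1e-9;  // nm -> m
  const double numerator = 2.0 * h * c * c / std::pow(lambda, 5.0);
  return numerator / std::expm1(h * c / (lambda * k * temperature_k));
}

// Shape-only spectrum normalised so its peak (at Wien's displacement
// wavelength) is exactly 1; the light's strength would still set the
// overall brightness. This normalisation choice is an assumption.
inline double blackbody_spectrum(double wavelength_nm, double temperature_k) {
  const double wien_b = 2.897771955e-3;  // Wien's displacement constant, m*K
  const double peak_nm = wien_b / temperature_k * 1e9;
  return planck_radiance(wavelength_nm, temperature_k) /
         planck_radiance(peak_nm, temperature_k);
}
```

At 3000 K the peak sits around 966 nm, in the infrared, so across the visible range the normalised curve rises from blue to red, which is why low-temperature blackbodies look orange.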

More complex spectral input is gonna be more work, possibly requiring new UI to make it workable. There was some talk of that earlier in this thread, but it was basically shelved as not immediately important for this project. We need to get the basics down first, so that we can then take full advantage of them in whatever ways we like.

That’s right. I think it’s a combination of two problems: 1. I don’t have CUDA or OptiX hardware to test on, and 2. this might also impact building. If I can get building set up somewhere with the necessary configuration, we should see the other render options come back.

Yep, that’s right, there’s one more milestone before I can start adding these in, but I do plan to do so eventually.

I’m hoping I can figure out a reasonably straightforward way to input any spectrum you want so this sort of thing should be relatively easy.

It might be possible down the track once I have much better wavelength sampling, but it’ll mean a scene-dependent PDF, which I think might be unrealistic to expect for a while. Indeed, a Gaussian spectrum is looking like it might be an easier task for the mid-term.

I’m tossing up between working on 1. wavelength PDF for better noise performance, 2. making the change which is required for spectral inputs and 3. fixing SSS and volumes.

This will unlock wide-scale testing with existing scenes (because there will barely be any scene it can’t support).

My vote goes to 3.

Just tried out a new build of this repo. Not sure which GitHub repo is the main one being worked on now; there are quite a few…

Old RGB:

[Image: ClassroomRGB]

Spectral:

Still a marked difference in the overall colours of the image but looks pretty close! Not sure this scene would really change much from the spectral changes anyway.

Also, probably just an oddity in Blender with OCIO, but right after opening the file, all the colour space dropdowns for the textures are blank and it passes the textures into the shaders without converting them to linear sRGB. Setting one texture to sRGB Colour fixes them all, and it renders fine.
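For reference, the decode that an "sRGB" colour space setting is meant to apply to a texture is the standard sRGB transfer function (IEC 61966-2-1), sketched below in C++ together with its inverse. In Blender this goes through the OCIO config rather than hand-written code, so this only illustrates the maths.

```cpp
#include <cmath>

// Standard sRGB decode: encoded value in [0, 1] -> linear light.
inline float srgb_to_linear(float c) {
  return c <= 0.04045f ? c / 12.92f : std::pow((c + 0.055f) / 1.055f, 2.4f);
}

// Inverse: linear light in [0, 1] -> sRGB-encoded value.
inline float linear_to_srgb(float l) {
  return l <= 0.0031308f ? l * 12.92f : 1.055f * std::pow(l, 1.0f / 2.4f) - 0.055f;
}
```

Skipping the decode means an encoded mid-grey of 0.5 is used as 0.5 linear instead of about 0.214, roughly 2.3 times too bright, which would typically make textures look washed out.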


I can only post 1 image.

That’s the right place to build from for now.

Your colour space bug is interesting, I’ll see if I can reproduce it later today.

It’s probably because it was saved with a colourspace that is not in the new OCIO configs, similar thing happens when I load in the ACES config.

That sounds about right. The OCIO config is just enough for testing at the moment.

I just tried building with CUDA and it’s not happy… it seems float2, float3 and float4 don’t have an array operator:

kernel/kernel_color.h(45): error : no operator "[]" matches these operands

3> operand types are: float4 [ int ]

And the KernelGlobals object is not passed around (kernel_globals.h L127), so you’ll need to find a way of keeping the wavelength_low_bound variables around:

kernel/kernel_path_state.h(69): error : class "KernelGlobals" has no member "wavelength_low_bound"

I would try to fix it but I have no idea where to start.

From only casually visiting this thread, it’s hard to keep up with where the latest work is being done. I based my work off this commit, which feels like the wrong one, but hey, it’s what I had.

The indexers on float4 are seemingly only meant for host code, so I just replaced them with the field names and all seems well there.
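The change boils down to reading named fields instead of indexing. Below is a self-contained C++ sketch with minimal stand-ins for Cycles' `float3`/`float4`, `make_float3` and `float_lerp` (assumptions, not the real Cycles headers). `float4_component` is a hypothetical index-by-switch helper, `lookup_rgb_at_wavelength` follows the shape of `find_position_in_lookup` but additionally clamps at both ends of the table, and `toy_lookup` holds made-up values rather than the real Rec.709 table.

```cpp
// Minimal stand-ins for Cycles' vector types and float_lerp (assumptions):
// plain structs with named fields, which is what device code can rely on.
struct float3 { float x, y, z; };
struct float4 { float x, y, z, w; };

inline float3 make_float3(float x, float y, float z) { return {x, y, z}; }
inline float float_lerp(float a, float b, float t) { return a + (b - a) * t; }

// Hypothetical helper: index-style access without operator[], via a switch
// on the named fields. Indices 0, 1, 2 give x, y, z; anything else gives w.
inline float float4_component(const float4 &v, int i) {
  switch (i) {
    case 0: return v.x;
    case 1: return v.y;
    case 2: return v.z;
    default: return v.w;
  }
}

// Rows are (wavelength, r, g, b) sorted by wavelength; the result is
// interpolated linearly between the two rows that bracket `wavelength`,
// and clamped to the first/last row outside the table's range.
inline float3 lookup_rgb_at_wavelength(const float4 *lookup, int size, float wavelength) {
  if (wavelength <= lookup[0].x)
    return make_float3(lookup[0].y, lookup[0].z, lookup[0].w);
  if (wavelength >= lookup[size - 1].x)
    return make_float3(lookup[size - 1].y, lookup[size - 1].z, lookup[size - 1].w);
  int upper = 1;
  while (lookup[upper].x < wavelength) ++upper;
  const int lower = upper - 1;
  const float t = (wavelength - lookup[lower].x) / (lookup[upper].x - lookup[lower].x);
  return make_float3(float_lerp(lookup[lower].y, lookup[upper].y, t),
                     float_lerp(lookup[lower].z, lookup[upper].z, t),
                     float_lerp(lookup[lower].w, lookup[upper].w, t));
}

// Spectral intensity of a linear RGB colour at one wavelength: weight the
// interpolated basis values by the colour and sum them, the same pattern
// linear_to_wavelength_intensities uses for each of its wavelengths.
inline float rgb_to_intensity(const float4 *lookup, int size, float3 rgb, float wavelength) {
  const float3 m = lookup_rgb_at_wavelength(lookup, size, wavelength);
  return m.x * rgb.x + m.y * rgb.y + m.z * rgb.z;
}

// Made-up three-row table for illustration only (not the real Rec.709 data).
static const float4 toy_lookup[3] = {
    {400.0f, 0.0f, 0.0f, 1.0f},
    {500.0f, 0.0f, 1.0f, 0.0f},
    {600.0f, 1.0f, 0.0f, 0.0f},
};
```

With this table, 450 nm falls halfway between the first two rows and returns (0, 0.5, 0.5), while 300 nm and 900 nm return the first and last rows instead of reading past the ends of the array.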

diff --git a/intern/cycles/kernel/kernel_color.h b/intern/cycles/kernel/kernel_color.h

index 81aea060293..37f69031958 100644

--- a/intern/cycles/kernel/kernel_color.h

+++ b/intern/cycles/kernel/kernel_color.h

@@ -42,25 +42,25 @@ ccl_device float3 find_position_in_lookup_unit_step(float4 lookup[], float posit

{

if(position_to_find <= start) {

return make_float3(

- lookup[0][1],

- lookup[0][2],

- lookup[0][3]

+ lookup[0].y,

+ lookup[0].z,

+ lookup[0].w

);

}

if(position_to_find >= end) {

return make_float3(

- lookup[end][1],

- lookup[end][2],

- lookup[end][3]

+ lookup[end].y,

+ lookup[end].z,

+ lookup[end].w

);

}

int lower_bound = int(position_to_find) - start;

int upper_bound = lower_bound + 1;

float progress = position_to_find - int(position_to_find);

return make_float3(

- float_lerp(lookup[lower_bound][1], lookup[upper_bound][1], progress),

- float_lerp(lookup[lower_bound][2], lookup[upper_bound][2], progress),

- float_lerp(lookup[lower_bound][3], lookup[upper_bound][3], progress)

+ float_lerp(lookup[lower_bound].y, lookup[upper_bound].y, progress),

+ float_lerp(lookup[lower_bound].z, lookup[upper_bound].z, progress),

+ float_lerp(lookup[lower_bound].w, lookup[upper_bound].w, progress)

);

}

@@ -555,27 +555,27 @@ ccl_device float3 wavelength_intensities_to_linear(KernelGlobals *kg, float3 int

ccl_device float3 find_position_in_lookup(float4 lookup[], float wavelength)

{

int upper_bound = 0;

- while(lookup[upper_bound][0] < wavelength) {

+ while(lookup[upper_bound].x < wavelength) {

upper_bound++;

}

int lower_bound = upper_bound -1;

- float progress = (wavelength - lookup[lower_bound][0]) / (lookup[upper_bound][0] - lookup[lower_bound][0]);

+ float progress = (wavelength - lookup[lower_bound].x) / (lookup[upper_bound].x - lookup[lower_bound].x);

return make_float3(

- float_lerp(lookup[lower_bound][1], lookup[upper_bound][1], progress),

- float_lerp(lookup[lower_bound][2], lookup[upper_bound][2], progress),

- float_lerp(lookup[lower_bound][3], lookup[upper_bound][3], progress)

+ float_lerp(lookup[lower_bound].y, lookup[upper_bound].y, progress),

+ float_lerp(lookup[lower_bound].z, lookup[upper_bound].z, progress),

+ float_lerp(lookup[lower_bound].w, lookup[upper_bound].w, progress)

);

}

ccl_device float find_position_in_lookup_2d(float2 lookup[], float wavelength)

{

int upper_bound = 0;

- while(lookup[upper_bound][0] < wavelength) {

+ while(lookup[upper_bound].x < wavelength) {

upper_bound++;

}

int lower_bound = upper_bound -1;

- float progress = (wavelength - lookup[lower_bound][0]) / (lookup[upper_bound][0] - lookup[lower_bound][0]);

- return float_lerp(lookup[lower_bound][1], lookup[upper_bound][1], progress);

+ float progress = (wavelength - lookup[lower_bound].x) / (lookup[upper_bound].x - lookup[lower_bound].x);

+ return float_lerp(lookup[lower_bound].y, lookup[upper_bound].y, progress);

}

ccl_device float3 linear_to_wavelength_intensities(float3 rgb, float3 wavelengths)

@@ -678,20 +678,20 @@ ccl_device float3 linear_to_wavelength_intensities(float3 rgb, float3 wavelength

make_float4(830.0f, 0.989627951f, 0.000180551f, 0.010106495f)

};

// find position in lookup of first wavelength

- float3 wavelength_one_magnitudes = find_position_in_lookup(rec709_wavelength_lookup, wavelengths[0]);

+ float3 wavelength_one_magnitudes = find_position_in_lookup(rec709_wavelength_lookup, wavelengths.x);

// multiply the lookups by the RGB factors

float3 contributions_one = wavelength_one_magnitudes * rgb;

// add the three components

- float wavelength_one_brightness = contributions_one[0] + contributions_one[1] + contributions_one[2];

+ float wavelength_one_brightness = contributions_one.x + contributions_one.y + contributions_one.z;

// repeat for other two wavelengths

- float3 wavelength_two_magnitudes = find_position_in_lookup(rec709_wavelength_lookup, wavelengths[1]);

+ float3 wavelength_two_magnitudes = find_position_in_lookup(rec709_wavelength_lookup, wavelengths.y);

float3 contributions_two = wavelength_two_magnitudes * rgb;

- float wavelength_two_brightness = contributions_two[0] + contributions_two[1] + contributions_two[2];

+ float wavelength_two_brightness = contributions_two.x + contributions_two.y + contributions_two.z;

- float3 wavelength_three_magnitudes = find_position_in_lookup(rec709_wavelength_lookup, wavelengths[2]);

+ float3 wavelength_three_magnitudes = find_position_in_lookup(rec709_wavelength_lookup, wavelengths.z);

float3 contributions_three = wavelength_three_magnitudes * rgb;

- float wavelength_three_brightness = contributions_three[0] + contributions_three[1] + contributions_three[2];

+ float wavelength_three_brightness = contributions_three.x + contributions_three.y + contributions_three.z;

return make_float3(

wavelength_one_brightness,

Performance-wise it’s about on par with the CPU on my GTX 1660 / i7-3770 (not sure why, but all the previous demos were either spheres or a bathroom, and since I can’t model, spheres it is!)

00:25.22 CPU

00:21.45 GPU

I will apply that diff you generously provided, thank you. I wasn’t aware we couldn’t use index accessors on the GPU; interesting. Thanks for the insight and the test. If the spheres in your image are meant to be red, green and blue, it seems like it’s outputting XYZ. Maybe there’s something funky with the OCIO config.

Have you cloned the branch from my repository or Troy’s? His is a bit more up to date and handles submodules correctly, which mine doesn’t. Either way, thanks for that; I’ll apply it and hopefully be able to support GPU rendering. You said it was possible for me to make a build with the GPU kernel; do you know how that can be done?

The biggest issue with my image is that I’m a compiler and tooling guy. Given that the CPU and GPU gave identical images, whatever is wrong is my fault (although I couldn’t tell you what I did wrong if I wanted to).

Just install the CUDA Toolkit, turn WITH_CYCLES_CUDA_BINARIES on in CMake and build the kernels; there’s not that much to it.

For dev work I’d keep them off (or limit them to a single architecture in CYCLES_CUDA_BINARIES_ARCH), though, since they are somewhat of a time vampire to build.