I suppose it’s worth pointing out that I believe we are talking about two different things as though they were one.

Within the scene colourimetry, we could say that any number of arbitrary colours are adaptable. That’s not the real issue.

The issue is being able to achieve colour constancy on the psychophysical side, based on the output display colourimetry. It literally has nothing to do with the scene at this point. The psychophysical side is a somewhat separate mechanism, and in this case it relates to the output context.

So I believe it’s mixing apples and oranges to a degree, and even if the idea is to adapt spectrally, good luck getting the spectral distribution of a random display’s colourimetry.

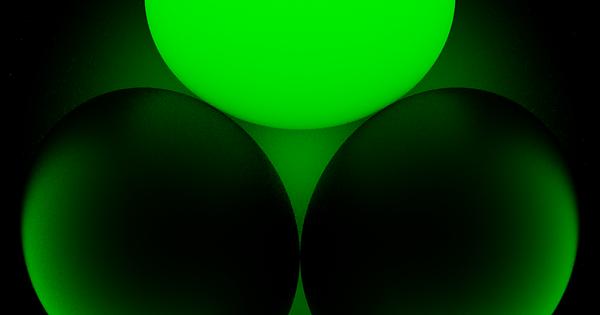

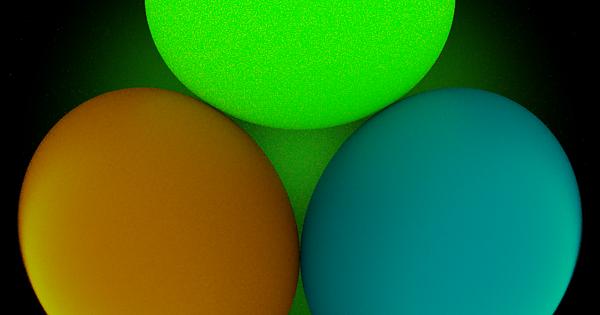

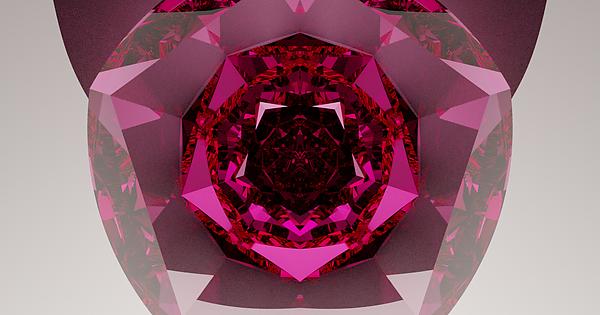

These are excellent tests.

The code is still subject to bugs. That said, in the last blue case you can imagine that the blue spectrum is being hit with a blue light of the same SD. That is, I believe this simulated indirect light will tend to sharpen the spectral distribution. In this case, the result could actually end up outside the source gamut when using the reconstructed primaries. At the very least, the output will have a different spectral composition, and depending on the input, that has a good chance of changing quite dramatically. As we increase saturation, we also get plenty of psychophysical oddities such as the Abney effect, which is very noticeable in blues, so there’s another complicating axis to this mess.
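To make the sharpening concrete, here is a minimal sketch of a single bounce where the reflectance and the light share the same SD. Everything in it is an assumption for illustration: the Gaussian shape, the 460 nm centre, the 20 nm width, and the helper names `gaussian_sd` and `spectral_width` are all made up and are not taken from the code being tested.

```python
import math


def gaussian_sd(wavelengths, centre, width):
    """Hypothetical Gaussian-shaped spectral distribution, peak value 1.0."""
    return [math.exp(-0.5 * ((w - centre) / width) ** 2) for w in wavelengths]


def spectral_width(wavelengths, sd):
    """Energy-weighted standard deviation of an SD, in nm."""
    total = sum(sd)
    mean = sum(w * s for w, s in zip(wavelengths, sd)) / total
    variance = sum(s * (w - mean) ** 2 for w, s in zip(wavelengths, sd)) / total
    return math.sqrt(variance)


# 1 nm sampling over the visible range.
wavelengths = list(range(380, 781))

# Assumed "blue" surface and a light with exactly the same SD.
reflectance = gaussian_sd(wavelengths, 460.0, 20.0)
light = gaussian_sd(wavelengths, 460.0, 20.0)

# One simulated bounce: per-wavelength product of light and reflectance.
bounce = [r * l for r, l in zip(reflectance, light)]

width_before = spectral_width(wavelengths, reflectance)
width_after = spectral_width(wavelengths, bounce)

print(f"width before bounce: {width_before:.2f} nm")
print(f"width after bounce:  {width_after:.2f} nm")
```

For a Gaussian, multiplying the SD by itself narrows the width by a factor of about 1/√2, so every further bounce pushes the stimulus closer to a spectral spike. Real blue SDs are not Gaussian, so treat this only as a picture of the tendency, not of what the renderer actually does.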

And because it’s blue, and the whole pipeline is based on the 1931 CMFs at this point, without the later Judd-Vos corrections etc. that end up in the 2006 CIE CMFs, blue wavelengths are subject to some rather unfortunate twists and turns.

I’m not certain that is exactly what is happening here, but the moment we are in spectral, all sorts of things get trickier. This ends up pulling in gamut mapping and other things. Right now though, there are quite a few larger fish to fry to make sure things are working within the CMS.