I think spectral is a long-term investment. Just as ray tracing was once considered too slow, path tracing too noisy and approximated SSS good enough, spectral rendering is not yet mainstream. Given faster computers, more memory and ever-higher image quality, I think it's plausible that spectral rendering will be standard in production by the end of this decade. Might as well get started on it now.

Agree 100%.

I would be surprised if it isn’t in the majority of renderers by the end of the decade. We will see how that turns out.

Glad to hear you’ve got that opinion.

To anyone outside the Blender Foundation (BF) who has successfully developed features for Blender: what is the best way to collaborate on development? What I mainly lack is C development skill, rather than an understanding of the topic or of software architecture (my day job focuses heavily on writing scalable and maintainable code), so I'm hoping reviews will be relatively straightforward (even if tedious given my skill level in the language).

Side note:

My understanding is that the assumption that spectral is much slower than RGB dates from before hero wavelength sampling existed (or was standard), because from what I can see there are actually opportunities for significant optimisations when rendering spectrally instead of in RGB. In essence, an RGB renderer is just a spectral one that samples only 3 wavelengths (and where your materials behave as though every wavelength is equivalent).
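For anyone unfamiliar with hero wavelength sampling, here is a minimal sketch of the idea: one uniform random number picks a "hero" wavelength, and the remaining wavelengths are placed at equal offsets across the visible range, wrapping around at the top. The 380 to 730 nm range, the name `sample_hero_wavelengths` and the use of three wavelengths (to line up with a `float3`) are assumptions for illustration, not taken from any particular renderer.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Assumed visible range for this sketch; real renderers choose their own bounds.
constexpr float kLambdaMin = 380.0f;
constexpr float kLambdaMax = 730.0f;

// Hero wavelength sampling: a single random number u in [0, 1) selects the hero
// wavelength, and the other N-1 wavelengths are the hero rotated by j/N of the
// range, wrapped back into [kLambdaMin, kLambdaMax).
template <int N>
std::array<float, N> sample_hero_wavelengths(float u)
{
  assert(u >= 0.0f && u < 1.0f);
  const float range = kLambdaMax - kLambdaMin;
  const float hero = kLambdaMin + u * range;
  std::array<float, N> lambdas{};
  for (int j = 0; j < N; j++) {
    // Offset from the bottom of the range, shifted by j/N of the range.
    const float offset = (hero - kLambdaMin) + (range * j) / N;
    lambdas[j] = kLambdaMin + std::fmod(offset, range);
  }
  return lambdas;
}
```

With `u = 0.5` this yields roughly 555, 671.7 and 438.3 nm, so every path still carries several wavelengths that together cover the spectrum evenly. The full technique also weights the wavelengths with spectral MIS when sampling itself depends on wavelength (dispersion, for example); that part is left out here.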

I’ll confidently take the under on that.

There are just too many houses marching headlong that way for it not to have a trickle-down effect.

And frankly, with proper development, everyone can get access to it now, so why not go for it? Everyone wins. If you don't want the spectral upside, restrict yourself to three wavelengths, like the monochromatic primaries of BT.2020. For those who do, expose all the things and cut it free.

Better than being late to the party.

Nailed it.

This is hugely important and critical work, even if a majority can’t see it. Don’t base what you are doing on the majority of eyes. Base it on the minority that might be watching quietly. You never know who is watching. Just believe in the work and keep hammering.

I’m struggling to understand what needs to be done in shader_bsdf_sample to convert the relevant closures to wavelength intensities.

I'm looking at the call to bsdf_sample, one of the functions I'm meant to be watching for. It passes in a pointer to a local variable called eval, which is modified within bsdf_sample as expected, but on return it is only multiplied by sc->weight when passed into bsdf_eval_init, which seems odd. Maybe I'm misunderstanding the purpose of that init function. Should I be converting eval * sc->weight to wavelength intensities before passing it into bsdf_eval_init? If so, could someone describe what the init function actually does?

@brecht and @lukasstockner97 (as the most recent modifiers of this code)

Code for reference

```cpp
// intern\cycles\kernel\kernel_shader.h:778
ccl_device_inline int shader_bsdf_sample(KernelGlobals *kg,
                                         ShaderData *sd,
                                         float randu,
                                         float randv,
                                         BsdfEval *bsdf_eval,
                                         float3 *omega_in,
                                         differential3 *domega_in,
                                         float *pdf,
                                         float3 wavelengths)
{
  PROFILING_INIT(kg, PROFILING_CLOSURE_SAMPLE);

  const ShaderClosure *sc = shader_bsdf_pick(sd, &randu);
  if (sc == NULL) {
    *pdf = 0.0f;
    return LABEL_NONE;
  }

  /* BSSRDF should already have been handled elsewhere. */
  kernel_assert(CLOSURE_IS_BSDF(sc->type));

  int label;
  float3 eval = make_float3(0.0f, 0.0f, 0.0f);

  *pdf = 0.0f;
  label = bsdf_sample(kg, sd, sc, randu, randv, &eval, omega_in, domega_in, pdf);

  if (*pdf != 0.0f) {
    bsdf_eval_init(bsdf_eval, sc->type, eval * sc->weight, kernel_data.film.use_light_pass);

    if (sd->num_closure > 1) {
      float sweight = sc->sample_weight;
      _shader_bsdf_multi_eval(
          kg, sd, *omega_in, pdf, sc, bsdf_eval, *pdf * sweight, sweight, wavelengths);
    }
  }

  return label;
}
```

BsdfEval is the summed result of evaluating one or more BSDFs.

In shader_bsdf_sample, we are sampling one BSDF and then evaluating all other BSDFs for the sampled direction too. The results of the evaluated BSDFs are combined using multiple importance sampling weights. In bsdf_eval_init we initialize the sum with the evaluated result of the sampled BSDF.
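The accumulation described above can be sketched with simplified stand-in types. `ToyClosure`, `ToyBsdfEval` and `toy_bsdf_multi_eval` are hypothetical names, scalar weights stand in for the `float3` closure weights, and the logic is modelled on the call in the code above (starting from `*pdf * sweight` and `sweight`) rather than copied from Cycles.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a closure, already evaluated for one direction.
struct ToyClosure {
  float eval;           // BSDF value for the direction (like `eval` above)
  float pdf;            // PDF of this closure generating that direction
  float weight;         // plays the role of sc->weight
  float sample_weight;  // plays the role of sc->sample_weight
};

// Hypothetical stand-in for BsdfEval: the summed result over closures.
struct ToyBsdfEval {
  float sum;
};

// The sum is initialised from the sampled closure (like bsdf_eval_init), then
// every other closure is evaluated for the same direction and added. The
// returned PDF is sum_i(sample_weight_i * pdf_i) / sum_i(sample_weight_i), the
// mixture PDF, which gives one-sample MIS with the balance heuristic.
float toy_bsdf_multi_eval(const std::vector<ToyClosure> &closures,
                          std::size_t picked,
                          ToyBsdfEval *result)
{
  assert(picked < closures.size());
  const ToyClosure &sc = closures[picked];
  result->sum = sc.eval * sc.weight;
  float sum_pdf = sc.pdf * sc.sample_weight;
  float sum_sample_weight = sc.sample_weight;

  for (std::size_t i = 0; i < closures.size(); i++) {
    if (i == picked) {
      continue;  // already counted above
    }
    const ToyClosure &other = closures[i];
    result->sum += other.eval * other.weight;
    sum_pdf += other.pdf * other.sample_weight;
    sum_sample_weight += other.sample_weight;
  }
  return (sum_sample_weight > 0.0f) ? sum_pdf / sum_sample_weight : 0.0f;
}
```

The point for the spectral branch is that every term added to the sum is an `eval * weight` product, so whatever conversion applies to the sampled closure applies to the others in the same way.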

Converting eval * sc->weight seems correct to me.
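As a sketch of what that conversion step could look like, here is a deliberately crude RGB-to-spectrum uplift using a piecewise-constant (box) basis: blue drives wavelengths below 490 nm, green 490 to 580 nm, and red everything above. `Float3`, `rgb_to_intensity_at` and `rgb_to_wavelength_intensities` are hypothetical names, not functions from Cycles or the branch, and the band boundaries are assumptions. Smoother uplifting methods exist and avoid the hard steps at the band edges.

```cpp
#include <cassert>

// Hypothetical minimal stand-in for Cycles' float3.
struct Float3 {
  float x, y, z;
};

// Box-basis uplift: return the RGB channel whose (assumed) band contains the
// wavelength. x = red, y = green, z = blue, as in an RGB float3.
float rgb_to_intensity_at(Float3 rgb, float lambda_nm)
{
  assert(lambda_nm > 0.0f);
  if (lambda_nm < 490.0f) {
    return rgb.z;
  }
  if (lambda_nm < 580.0f) {
    return rgb.y;
  }
  return rgb.x;
}

// Convert an RGB closure result (for example eval * sc->weight) into
// intensities at the three sampled wavelengths, one per component.
Float3 rgb_to_wavelength_intensities(Float3 rgb, Float3 wavelengths)
{
  return {rgb_to_intensity_at(rgb, wavelengths.x),
          rgb_to_intensity_at(rgb, wavelengths.y),
          rgb_to_intensity_at(rgb, wavelengths.z)};
}
```

Converting the product once, before bsdf_eval_init, keeps everything downstream of it working purely in wavelength intensities.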

@brecht: do you intentionally distinguish between ‘sampling’ and ‘evaluating’ here? AFAIK ‘sampling’ would mean ‘taking a randomized vector from the BSDF’, whereas ‘evaluating’ would mean ‘given the first vector, computing the probability that the second BSDF would yield the same vector’. Am I correct in that assumption?

That’s correct. bsdf_eval evaluates the BSDF. bsdf_sample both samples and evaluates the BSDF, for efficiency.
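To make the distinction concrete, here is a sketch with a Lambertian (diffuse) BSDF in a local frame whose normal is +z. `Vec3`, `eval_lambert` and `sample_lambert` are hypothetical names, not Cycles API. The sketch folds the cosine term into the returned value, so for a sampled direction eval / pdf reduces to the albedo.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 {
  float x, y, z;
};

constexpr float kPi = 3.14159265358979f;

// Evaluate: for a given direction, return the BSDF value (times cosine) and
// the PDF with which sample_lambert would have produced that direction.
float eval_lambert(Vec3 wi, float albedo, float *pdf)
{
  const float cos_theta = wi.z;
  if (cos_theta <= 0.0f) {
    *pdf = 0.0f;  // below the surface: cannot be sampled
    return 0.0f;
  }
  *pdf = cos_theta / kPi;
  return albedo * cos_theta / kPi;
}

// Sample: draw a cosine-weighted direction from two uniform random numbers and,
// for efficiency, return its value and PDF in the same call.
Vec3 sample_lambert(float randu, float randv, float albedo, float *eval, float *pdf)
{
  assert(randu >= 0.0f && randu < 1.0f && randv >= 0.0f && randv < 1.0f);
  const float r = std::sqrt(randu);
  const float phi = 2.0f * kPi * randv;
  const Vec3 wi = {r * std::cos(phi), r * std::sin(phi), std::sqrt(std::fmax(0.0f, 1.0f - randu))};
  *eval = eval_lambert(wi, albedo, pdf);
  return wi;
}
```

Reusing the evaluation inside the sampling routine keeps the two consistent, which is what the MIS combination relies on when the other closures are evaluated for the sampled direction.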

Thanks for that. It seems most things are being converted now, since shader_bsdf_sample covers so many BSDFs.

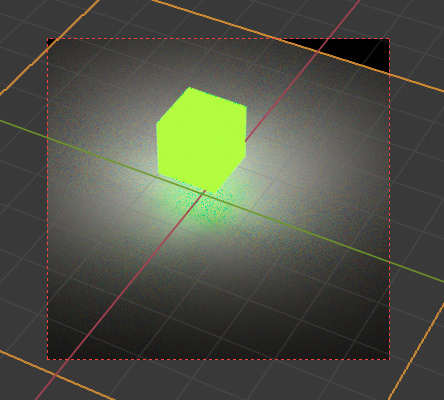

I seem to be missing only a few parts now: the environment background, which I thought would be covered by emission but apparently isn't (EDIT: I found I was converting things in direct_emissive_eval and indirect_background in the wrong place), and an odd issue where some parts are converted (like directly under the emissive cube here) while others that I would expect to come from sampling the BSDF aren't (like near the edges of the white plane).

Edit again: I think I fixed both of these after finding an issue in my conversion of direct_emissive_eval.

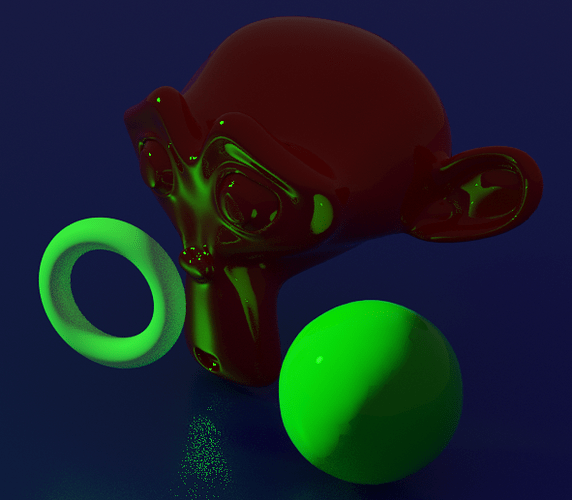

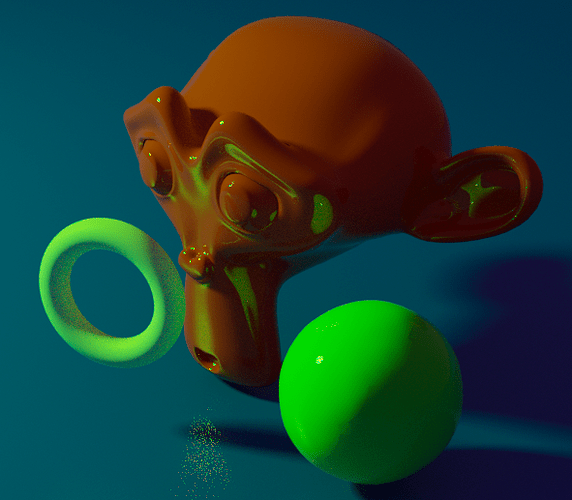

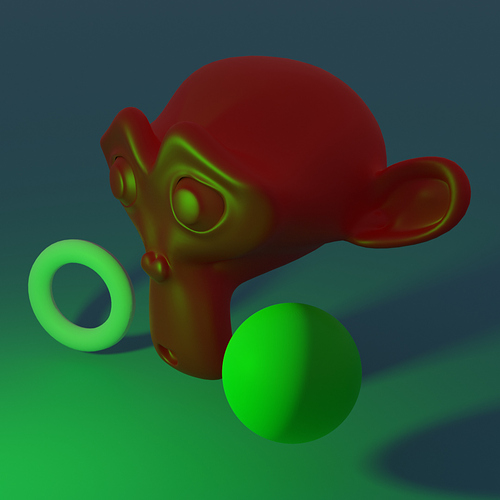

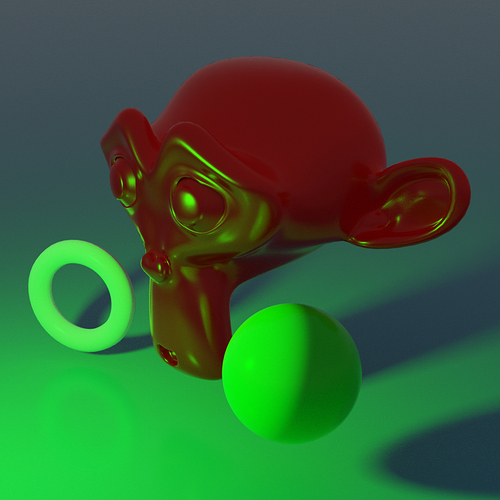

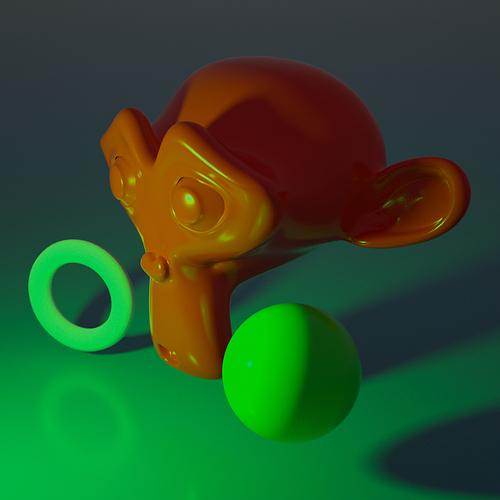

RGB

Spectral

This is the same scene: a 100% saturated red Suzanne, a green sphere, a white torus, a blue floor plane, a green light and a very faint white environment.

Even I’m surprised by the difference in the images.

Branch updated if anyone wants to give it a try. Only the combined pass works, and SSS and volume haven’t been converted yet, but I think everything else should be working now.

Does the branch have separate RGB and spectral renderers, or did you replace the RGB renderer?

This branch replaces the Cycles core with spectral rendering; the comparison was made with 2.82 downloaded from blender.org.

Even though you are using BT.709 primaries, you can exacerbate the luminance issue by using BT.709 blue, especially if you hand-craft a lower-luminance mixture that still falls within the BT.709 gamut.

Even just using a range from indigo to blue to magenta would likely yield the most exaggerated results. You could probably drive an RGB render to zero luminance quickly while the spectral one ends up fine.

Might be quite fun as a test case.

It is very easy to make an RGB render 0: just multiply any coloured light by a material of a different colour (where both are saturated). The diffuse component will be black. You're right, I would imagine there would be even more extreme differences with blue.

I'm thinking of packaging up a build for people to try out soon. If it's easy I might try to get denoising working; otherwise I'll upload a build somewhere as-is for people to try out and give feedback.
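A small sketch of why the two approaches diverge here: in RGB, a saturated red light times a saturated green material is zero in every channel, while with smooth spectra the light and reflectance curves overlap, so the reflected energy is dim but not zero. The Gaussian spectrum shapes, the 610 and 540 nm peaks, the 40 nm width and the function names are all illustrative assumptions, not measured data.

```cpp
#include <cassert>
#include <cmath>

// Toy smooth spectrum: a Gaussian bump centred on peak_nm (values in [0, 1]).
float gaussian_spectrum(float lambda_nm, float peak_nm, float sigma_nm)
{
  assert(sigma_nm > 0.0f);
  const float t = (lambda_nm - peak_nm) / sigma_nm;
  return std::exp(-0.5f * t * t);
}

// RGB path: light colour multiplied by material colour, channel by channel.
void rgb_reflect(const float light[3], const float material[3], float out[3])
{
  for (int c = 0; c < 3; c++) {
    out[c] = light[c] * material[c];
  }
}

// Spectral path: integrate light(lambda) * reflectance(lambda) over 380 to
// 730 nm, approximated by a Riemann sum with a 1 nm step (unnormalised).
float spectral_reflect(float light_peak_nm, float material_peak_nm, float sigma_nm)
{
  float sum = 0.0f;
  for (int nm = 380; nm <= 730; nm++) {
    const float lambda = static_cast<float>(nm);
    sum += gaussian_spectrum(lambda, light_peak_nm, sigma_nm) *
           gaussian_spectrum(lambda, material_peak_nm, sigma_nm);
  }
  return sum;
}
```

With these toy curves, `spectral_reflect(610.0f, 540.0f, 40.0f)` comes out at roughly half of the matched case `spectral_reflect(610.0f, 610.0f, 40.0f)`, whereas the RGB product of (1, 0, 0) and (0, 1, 0) is exactly black.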

Someone in this thread might have the Travis-Fu to add the Travis configuration to your branch and get autobuilds working?

Why is that? Is it because it is positioned far from the spectral locus? I'm trying to understand the underlying difference that would cause such a change.

I hope so… I certainly don't. Setting up build and deploy config is the task I dread the most at work.

It's easier to visualize if we keep a clean firewall between radiometric energy and perceptual energy.

That is, we could have a super high-powered burning laser that carries no perceptual energy at all because it isn't in the visible range. Unsurprisingly, visible light follows that exact same model.

Some wavelengths of light, at equal radiometric energy, carry wildly different quantities of perceptual energy. It just so happens that the maximal perceptual energy in a mixture is anchored around greenish colours, at 555 nm. Everything else is vastly lower, and lopsidedly so as we trend toward shorter, bluish wavelengths.
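The same lopsidedness shows up in the standard BT.709 relative luminance weights, which give how much luminance (Y) each primary contributes per unit of linear RGB. The function name is illustrative, and the sketch assumes linear (not gamma-encoded), in-gamut input.

```cpp
#include <cassert>

// Relative luminance (Y) of linear BT.709 RGB. The weights are the Y values of
// the BT.709 primaries for a D65 white, so they sum to 1 for RGB white.
float bt709_luminance(float r, float g, float b)
{
  assert(r >= 0.0f && g >= 0.0f && b >= 0.0f);  // in-gamut input only
  return 0.2126f * r + 0.7152f * g + 0.0722f * b;
}
```

One unit of the green primary yields about ten times the luminance of one unit of the blue primary (0.7152 versus 0.0722), and a hand-crafted indigo such as (0.1, 0.0, 1.0) comes out at roughly 0.093, which is why blue-to-magenta mixtures are a good stress test.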

RGB not being radiometric is the reason things fall apart much more quickly; the simple math doesn't work properly on the RGB model.

Right, that makes sense. I was thinking it had to do with perceptual energy, but I wasn't sure how the two were related. The same perceptual ‘brightness’ (how bright the material appears under white light) in a blue material and a green one might correspond to vastly different radiometric reflectivity. Is this the core of the difference in the rendering result?