Yes, those are all assumed to be in scene linear color space as well. Probably every float3 color in Cycles now is assumed to be in scene linear color space, unless I’m forgetting some exception.

Yes, that’s correct.

I’ve struggled in the past to come up with a way of generating a PDF (if that’s the correct term) for an arbitrary ‘distribution’. Is there an easy way to do this? Such that samples evenly distributed between 0 and 1 become weighted according to some function.

Good to know. I’ll keep that in mind, thanks.

This is a really solid idea from @brecht here given that we have the XYZ role; the resulting spectral distribution can be directly plopped back into the scene linear rendering space if all of the spectral goodness is juiced out.

The only place where this becomes problematic is gamut mapping, in that the destination scene linear rendering space may be a different volume than the wide-open spectral calculation model. As a result, it can cause collapsing of certain discrete values into identical destination code values, and you end up with posterization.

Granted, it’s a great problem to have, and one that in theory can be resolved via a rendering transform in OpenColorIO I’d think.

import colour

from colour import plotting

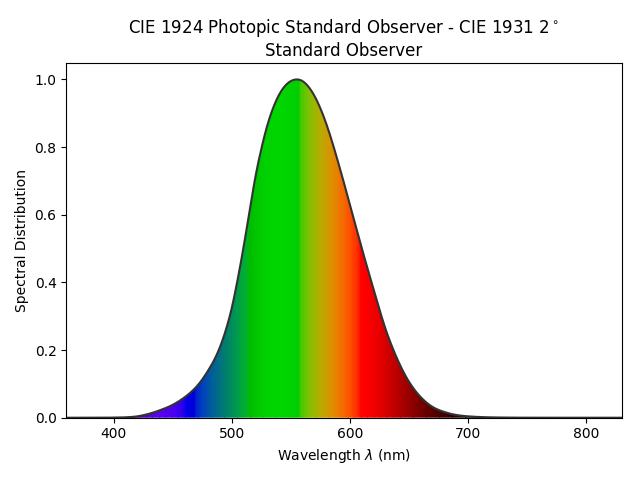

colour.plotting.plot_single_sd(colour.PHOTOPIC_LEFS["CIE 1924 Photopic Standard Observer"])

Just use the luminance weights of the XYZ. That’s your luminous efficiency function baked into XYZ. If you need to operate on the spectral distribution directly, you’ll need the CIE 1924 luminous efficiency function itself. The luma coefficients code I contributed a while back will pull the coefficients from the OpenColorIO configuration, or the newer XYZ role that @lukasstockner97 added recently should suffice.
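To make "luminance weights" concrete, here is a minimal sketch. It assumes the scene linear space uses Rec.709 primaries, in which case the Y row of the RGB to XYZ matrix is (0.2126, 0.7152, 0.0722). In Cycles these weights would be pulled from the OpenColorIO configuration rather than hard-coded, and the function name below is purely illustrative.

```python
# ASSUMPTION: scene linear is Rec.709 / sRGB primaries with a D65 white point.
# These are the Y-row coefficients of the RGB -> XYZ matrix for that space.
REC709_LUMINANCE_WEIGHTS = (0.2126, 0.7152, 0.0722)


def luminance(rgb, weights=REC709_LUMINANCE_WEIGHTS):
    """Return the Y (luminance) component of a scene linear RGB triplet."""
    return sum(channel * weight for channel, weight in zip(rgb, weights))
```

For any other scene linear space the weights differ, which is why reading them from the configuration is the safer route.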

This comes with a caveat, however: some spectral wavelengths could have a rather large impact on the resulting chromaticity, and I don’t believe luminous efficiency is the best metric here. For example, there are inherent metameric issues as you get near the spectral locus, and doubly so as you head toward the upper and lower limits of the wavelength range.

Because I literally just output this, I figured I might as well include it here:

Yep. We just need to rely on a camera response curve to get us from spectral to some camera space, then use the OCIO config to change that to the output space. I think we need this extra step here since the camera response curve takes spectral data into some fixed space, but interpreting that as scene linear (which can change) would result in incorrect colours.

For example, a good default would probably be to use the XYZ CMFs and interpret the result as XYZ, which would always produce the correct result in scene linear space. On the other hand, if we wanted to use a particular camera response curve, it would map not to XYZ but to some arbitrary camera space, so we would then need to transform that to scene linear with OCIO.

As for importance sampling the wavelength, I agree, blindly using the luminance could result in suboptimal sampling for colour noise. It is a trade-off: we can optimise for least brightness noise, least colour noise, or somewhere in between. Considering our eyes handle noise in colour data much better than noise in brightness, I think it makes sense to weigh brightness more heavily than colour for importance sampling.
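One possible way to express that trade-off, purely as a sketch: blend a uniform distribution over the wavelength bins with a luminance-shaped one. The `brightness_bias` parameter and the function name are assumptions for illustration, not anything that exists in Cycles. A uniform distribution treats every wavelength equally (kinder to colour noise), while the luminance-shaped one favours the wavelengths that contribute most to brightness.

```python
def blend_sampling_weights(luminance_weights, brightness_bias):
    """Blend uniform and luminance-shaped bin probabilities.

    brightness_bias = 0.0 gives a uniform distribution,
    brightness_bias = 1.0 gives the normalised luminance weights.
    The returned probabilities sum to 1.
    """
    if not 0.0 <= brightness_bias <= 1.0:
        raise ValueError("brightness_bias must be in [0, 1]")
    total = float(sum(luminance_weights))
    if total <= 0.0:
        raise ValueError("luminance_weights must have a positive sum")
    uniform = 1.0 / len(luminance_weights)
    return [
        (1.0 - brightness_bias) * uniform + brightness_bias * (w / total)
        for w in luminance_weights
    ]
```

Because every bin keeps a non-zero probability whenever `brightness_bias` is below 1, no wavelength is ever starved of samples entirely.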

But even once we find some desired distribution, I’m not sure how to take that and turn it into a function which maps random numbers from 0 to 1 such that they follow said distribution. I can do it with a precomputation step by integrating the discrete values, but this really doesn’t seem optimal. The other way is to hand-craft the PDF to fit as closely as possible, but again, doesn’t seem very optimised. I was wondering whether I’m missing something obvious.
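For what it’s worth, the precomputation approach described above is the standard inverse-CDF (inverse transform) technique for tabulated distributions. A minimal sketch follows, assuming the desired distribution is given as non-negative weights over equal-width wavelength bins. All names here are hypothetical. The cumulative sums are built once, and each sample then costs a binary search.

```python
import bisect


def build_cdf(weights):
    """Return the normalised running sums of non-negative bin weights."""
    total = float(sum(weights))
    if total <= 0.0:
        raise ValueError("weights must have a positive sum")
    cdf = []
    running = 0.0
    for w in weights:
        running += w
        cdf.append(running / total)
    cdf[-1] = 1.0  # guard against floating point drift
    return cdf


def sample_wavelength(u, lambda_min, lambda_max, cdf):
    """Map u in [0, 1) to (wavelength, pdf) for a piecewise-constant density.

    The pdf is returned per unit wavelength so the caller can divide the
    sampled contribution by it, keeping the estimate unbiased.
    """
    n = len(cdf)
    bin_width = (lambda_max - lambda_min) / n
    # First bin whose cumulative value exceeds u; zero-weight bins are skipped.
    i = min(bisect.bisect_right(cdf, u), n - 1)
    cdf_lo = cdf[i - 1] if i > 0 else 0.0
    bin_mass = cdf[i] - cdf_lo
    t = (u - cdf_lo) / bin_mass  # fractional position inside the bin
    wavelength = lambda_min + (i + t) * bin_width
    return wavelength, bin_mass / bin_width
```

With weights `[1, 3]` over 400 to 700 nm, `u = 0.625` lands halfway through the second bin, i.e. at 625 nm. Since the table only needs building once per render, the cost of the precomputation step is negligible next to the per-sample lookup.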

I think you missed Brecht’s point? I believe he suggested going right back to scene linear RGB. XYZ won’t help the math, and will in fact make any pixel manipulations worse.

I believe Brecht was suggesting simply going right back into OCIO immediately once the spectral work is complete.

It’s possible I misunderstood but I feel I am grasping what was intended. My comment was about how to achieve this.

To go from a spectrum to any tristimulus space, you need to undergo some sort of spectrally dependent ‘component summing’ (I’m not sure what the correct word to use here is). Such a thing would be the spectral response curve of a digital sensor, which has an implied destination colour space. I imagine generating these response curves on the fly in order to land in any colour space is unnecessarily complex. Can you explain how you imagine this step happening?

I’m basing this proposed structure on what I’ve read about Manuka’s colour system. Their system is both unachievable and not a suitable fit for Blender, but they’re doing a lot of things right which I think we can learn from.

Skip the digital sensor portion for the time being, as until the whole pipe is spectral, it’s a mental exercise that’s a complete waste of time from what I can see.

The transform from spectral to XYZ is the basis for everything we’ve been chatting about here. That translation table comes in several flavours, but the one most frequently used is the CIE 1931 Colour Matching Functions, or the CMFs as they are frequently referred to. There are later revisions to the original 1931 tables, identified by date; for example, there are 1964 and 2012 sets. The 2° standard observer applies for things like looking at a display.

Having just learned what a “closure” is in the terms of the math of light transport models, I believe Brecht was suggesting to transform immediately back to the three XYZ light based model after you finish the spectral calculations. The tables are literally as simple as:

- Looking up the resultant XYZ based on the spectral composition. The tables are located here, from a reliable source.

- Summing the totals to a final XYZ

- A simple OCIO transform via the XYZ role to get back to the scene linear representation, negative colour encodings and all
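The three steps above can be sketched roughly as follows. This is only an illustration under stated assumptions: the CMF rows are passed in by the caller (no real table values are included here), the spectrum is sampled at a fixed wavelength step, and a hard-coded XYZ to linear Rec.709 matrix stands in for the OCIO transform via the XYZ role. Function names are hypothetical.

```python
# ASSUMPTION: scene linear is Rec.709 / sRGB primaries (D65). In Blender this
# step would be an OpenColorIO transform through the XYZ role instead.
XYZ_TO_LINEAR_REC709 = (
    (3.2406, -1.5372, -0.4986),
    (-0.9689, 1.8758, 0.0415),
    (0.0557, -0.2040, 1.0570),
)


def spectrum_to_xyz(intensities, cmf_rows, step_nm):
    """Sum a sampled spectrum against CMF rows (xbar, ybar, zbar).

    intensities and cmf_rows must be sampled at the same wavelengths,
    spaced step_nm apart. This is a plain Riemann sum.
    """
    x = y = z = 0.0
    for intensity, (xbar, ybar, zbar) in zip(intensities, cmf_rows):
        x += intensity * xbar * step_nm
        y += intensity * ybar * step_nm
        z += intensity * zbar * step_nm
    return (x, y, z)


def xyz_to_scene_linear(xyz, matrix=XYZ_TO_LINEAR_REC709):
    """Apply a 3x3 matrix to XYZ. Negative components are kept, not clipped."""
    return tuple(sum(m * v for m, v in zip(row, xyz)) for row in matrix)
```

Note that saturated spectral colours will often produce negative RGB components in this last step. That is expected, and they should be carried through rather than clamped.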

Including the tables is your first step to properly doing this, and your issue is solved. It’s worth including both the original 1931 transform and the 2012 one. Why? Because the 2012 has rather critical tweaks to the table that will have an impact on synthetic values. It would make it forward friendly. A constant, upgraded to a preferences choice with DNA / RNA later, would work fine.

Sounds like the way to go, this is what I was planning on doing. Once this transform is configurable it would be interesting to see how using camera responses rather than human eye ones differ, but that’s definitely later down the track, once it is user-selectable at runtime.

Would be rather dull. It’s important for trying to simulate how the camera grabs the spectra of the scene for compositing, and why it is included in Manuka, but from an aesthetic or any other purpose, dull and not terribly worthwhile as best as I can tell.

The camera spectral colorimetry response basically removes another layer of artistic massaging. Specific spectral colours would end up at the virtual sensor in the same way that the camera sees them, as opposed to some other manner.

This is essentially the exact same rabbit hole that proper scene referred compositing heads down; try to emulate the light transport as best as you can in radiometric-like models (RGB being crippled here) and then, after all the math and calculations and such are complete, dump the whole mess through a display / camera rendering transform.

If you do traditional After Effects compositing, you are typically interacting with image buffers that are already baked and warped for aesthetic display, and as a result, the radiometric-like RGB ratios can’t be easily unwound. So you end up massaging and blending and smudging and guessing and primping and pulling and bending the images to match.

EG: The classic “My photo is grey in Filmic, halp!!1!1!” and the associated misunderstanding of what sRGB’s specification is.

If done right, it’s all “for free” and the results are an order of magnitude “more correct”, which will likely elevate the work.

Fair call.

I’m certainly fully aware of how much easier things are if data is in an un-smushed form - attempting to backtrack to that point from camera RAW files was a difficult but worthwhile exercise. It makes working so much more intuitive, and honestly, simpler. I imagine the standard observer CMFs to XYZ are going to cover 99.99% of use cases.

On another note, the learning curve to actually implementing this stuff is pretty much a brick wall. Conceptually I get it, but going through swapping float3s with float4s throughout the codebase seems to be getting me nowhere.

Realistically, I think if this is going to happen in a timely manner it is going to need Blender dev time for the initial switch which requires touching so many files. Once the core is in place I’m sure I can contribute meaningfully.

Which leads me to the question - is this something that the Blender devs are actually on-board with? I don’t want to be pouring so much time into this if it’s going to end up as a fork (at best). If it doesn’t align with the goals and plans of Cycles, at least we’ve come to an understanding of what it could be.

Warning, Really Ignorant Question: Do you have your branch up on GitHub?

Not at all ignorant. I don’t, but could do so. That’s a way of making it easier to discuss at least.

I have now pushed my dev branch to GitHub

It doesn’t have the broken changes to the various colour conversions I did since they just put the renderer into an ‘almost working but not really’ state which I didn’t want to commit.

I’ve created a PR as an easy way to view all the changes from master, too.

@brecht I’d appreciate your thoughts on this when you have some time. I understand all of you are extremely busy keeping up with so many areas of development, which is exactly why I’d like to get clarification on whether or not I can expect to see any support on this. (Your contributions to date have been incredibly helpful, thank you).

While I’m doing what I can with the resources I have available, I think it is unreasonable to commit to doing all of this by myself (as much as I’d like to).

If there is the capacity to have someone assist with this, what’s the best way to communicate and get assistance? If not, could you highlight the reasons why not, if it isn’t the obvious ‘we have higher priorities right now’?

Something I think you might have the ability to answer is this question:

Is this the full list of functions returning closures in Cycles? `bsdf_eval`, `bsdf_sample`, `emissive_simple_eval`, `bssrdf_eval` and `volume_shader_sample`.

Extra if you have time

If my understanding is correct, whenever the result of one of these functions is multiplied with some data which looks like RGB (such as sc->weight or some float3 texture lookup), converting the product of that multiplication to wavelength intensities before accumulating should achieve the desired result.

Since it’s a small function showing what needs to happen in every case, can you sanity check this for me? I’ve added two comments outlining my current understanding.

    ccl_device_inline void _shader_bsdf_multi_eval_branched(KernelGlobals *kg,
                                                            ShaderData *sd,
                                                            const float3 omega_in,
                                                            BsdfEval *result_eval,
                                                            float light_pdf,
                                                            bool use_mis,
                                                            float3 wavelengths)
    {
      for (int i = 0; i < sd->num_closure; i++) {
        const ShaderClosure *sc = &sd->closure[i];
        if (CLOSURE_IS_BSDF(sc->type)) {
          float bsdf_pdf = 0.0f;
          float3 eval = bsdf_eval(kg, sd, sc, omega_in, &bsdf_pdf);
          if (bsdf_pdf != 0.0f) {
            // this is converting RGB weights from the closure evaluation to wavelength intensities
            float3 wavelength_intensities = linear_to_wavelength_intensities(
                eval * sc->weight,
                wavelengths);
            float mis_weight = use_mis ? power_heuristic(light_pdf, bsdf_pdf) : 1.0f;
            // here the wavelength intensities are accumulated instead of eval * sc->weight
            // in kernel_write_result, the intensities are then summed back into XYZ and then scene linear
            bsdf_eval_accum(result_eval, sc->type, wavelength_intensities, mis_weight);
          }
        }
      }
    }

FYI anyone interested: I’ve updated the branch on GitHub to contain the latest changes (which results in a mostly working renderer)