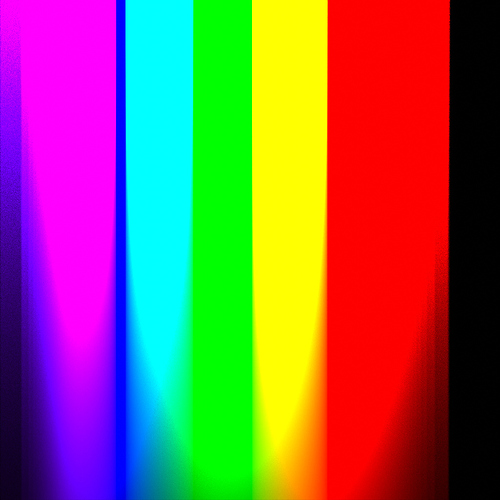

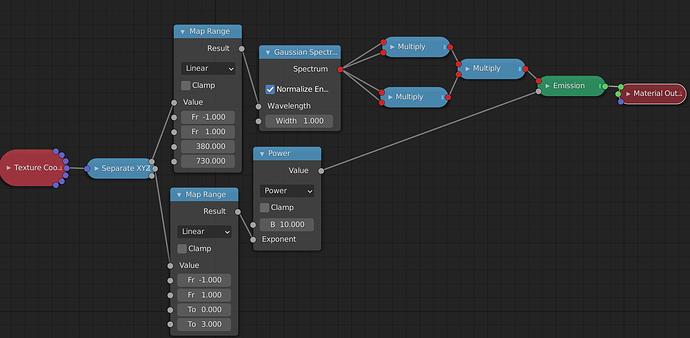

A very similar test, with just the raw spectral colors and no whitening:

https://www.dropbox.com/s/cnm60kkqofslo44/Spectral.exr?dl=0

Not sure if these are of any use, but I'm guessing they demonstrate where some of the problems lie. (By the way, the extreme ends of the spectrum seem to get cut off quite abruptly. Those wavelengths barely contribute to the result at that point, and floating-point error may well be at fault, but assuming it isn't, I suspect this cutoff isn't intended?)
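For reference on the point about the spectrum's extreme ends: the CIE color matching functions themselves taper off smoothly toward 380 nm and 780 nm rather than stopping abruptly. Below is a small sketch that evaluates them using the analytic multi-lobe Gaussian approximation published by Wyman, Sloan and Shirley (2013). This is an approximation of the tabulated CIE 1931 data and an assumption on my part, not necessarily what the renderer uses; the helper names (`cie_xyz`, `as_float32`) are hypothetical. The tail weights come out small but remain far above single precision's smallest normal value (about 1.2e-38), so float32 underflow of the weights alone should not make them vanish.

```python
import math
import struct


def _g(x: float, mu: float, sigma_lo: float, sigma_hi: float) -> float:
    """Piecewise Gaussian with different widths left and right of the peak."""
    t = (x - mu) / (sigma_lo if x < mu else sigma_hi)
    return math.exp(-0.5 * t * t)


def cie_xyz(wl_nm: float) -> tuple[float, float, float]:
    """Approximate CIE 1931 2-degree color matching functions (x̄, ȳ, z̄).

    Assumption: uses the multi-lobe Gaussian fit by Wyman, Sloan & Shirley
    (2013), not the official tabulated CIE data.
    """
    x = (1.056 * _g(wl_nm, 599.8, 37.9, 31.0)
         + 0.362 * _g(wl_nm, 442.0, 16.0, 26.7)
         - 0.065 * _g(wl_nm, 501.1, 20.4, 26.2))
    y = (0.821 * _g(wl_nm, 568.8, 46.9, 40.5)
         + 0.286 * _g(wl_nm, 530.9, 16.3, 31.1))
    z = (1.217 * _g(wl_nm, 437.0, 11.8, 36.0)
         + 0.681 * _g(wl_nm, 459.0, 26.0, 13.8))
    return x, y, z


def as_float32(v: float) -> float:
    """Round-trip a Python float through IEEE 754 single precision."""
    return struct.unpack("f", struct.pack("f", v))[0]


if __name__ == "__main__":
    # Print the weights across the visible range, including the extreme ends,
    # plus the luminance weight after rounding to float32.
    for wl in (380, 400, 450, 555, 650, 700, 750, 780):
        x, y, z = cie_xyz(wl)
        print(f"{wl} nm  x={x:.2e}  y={y:.2e}  z={z:.2e}  "
              f"y(float32)={as_float32(y):.2e}")
```

With this fit, ȳ is close to 1 around 555 nm, roughly 1e-4 at 380 nm and roughly 1e-6 at 780 nm, so the contribution fades gradually instead of dropping to zero at a specific wavelength.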