For this point the answer seems to be in the comments:

“usually when you comp shadows they are below one and reflections are above one. So if you want to reduce the reflections only you just have to reduce the values that are above one”

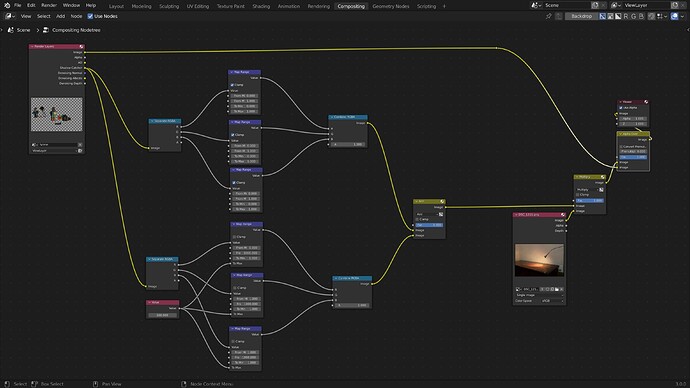

I tried to set this up in the compositor, but my setup adds the 1 at the top of the 0-to-1 range to the 1 at the bottom of the 1-and-above range. The result is a wrong value of 2 where it should remain 1.
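
Written out as plain arithmetic, this is the problem and what I think the fix should look like. It is only a Python sketch, not a node setup: the function names and the `factor` parameter are made up for illustration, and `naive_recombine` is my assumption of what my current setup effectively computes.

```python
def naive_recombine(value, factor):
    """Assumed behaviour of my current setup: both branches keep the 1."""
    low = min(value, 1.0)             # 0-to-1 range, clamped at 1
    high = max(value, 1.0) * factor   # 1-and-above range, still contains the 1
    return low + high                 # the 1 is counted twice


def split_recombine(value, factor):
    """Scale only the part above 1, then add it back onto the clamped part."""
    low = min(value, 1.0)             # shadows and everything up to 1, untouched
    excess = max(value - 1.0, 0.0)    # only the amount above 1, starting at 0
    return low + excess * factor


print(naive_recombine(1.0, 1.0))  # 2.0, the wrong value I am getting
print(split_recombine(1.0, 1.0))  # 1.0, a value of 1 stays 1
print(split_recombine(3.0, 0.5))  # 2.0, the excess of 2 is halved
print(split_recombine(0.4, 0.5))  # 0.4, values below 1 are unchanged
```

If this is right, the key step is subtracting 1 from the upper branch before scaling it, so that it starts at 0 instead of 1. I would guess this maps to Math nodes set to Minimum, Subtract, Maximum, Multiply and Add, but I have not verified that in the compositor.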

Does anyone know how to set this up correctly?