Hi all,

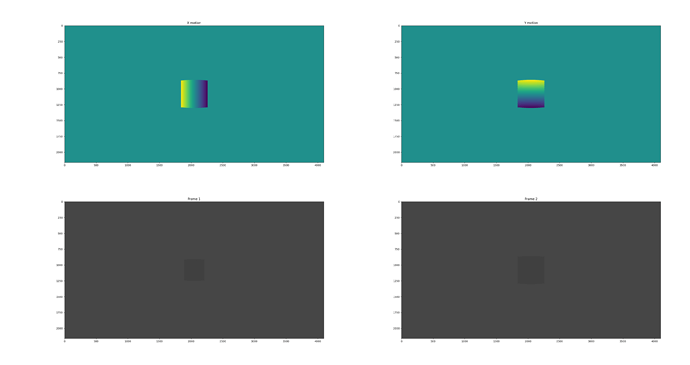

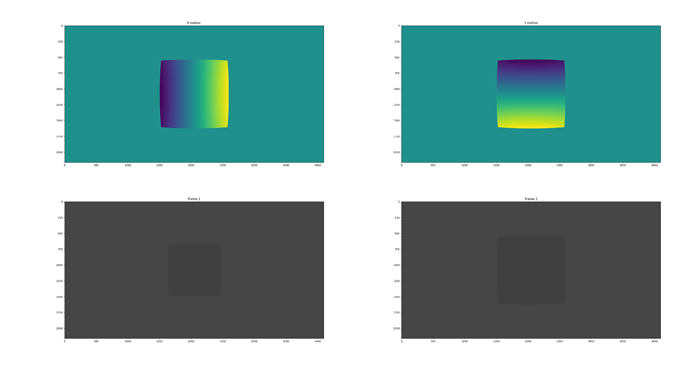

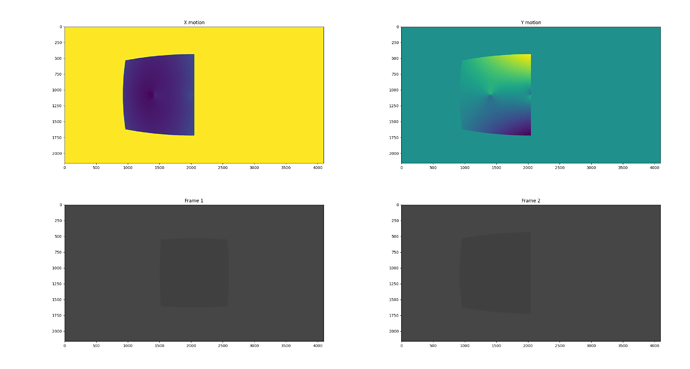

I am using Blender to generate 3D images for my PhD. I would like to output visual images and optical flow.

Blender provides the Vector pass, which works like a charm with the default cameras.

However, I would like to implement my own camera model to better fit the real camera I used in my experiments.

I was able to implement the visual model in Cycles, thanks to a few modifications to the patch in the following repository: rpg_blender_omni_camera/0001-Add-omnidirectional-camera-model-to-Cycles-rendering.patch at master · uzh-rpg/rpg_blender_omni_camera · GitHub. It works like a charm. However, the optical flow output seems to have a scale issue.

I use the equations from: Fisheye Calibration Basics - MATLAB & Simulink - MathWorks France

The function `omni_to_direction` works perfectly. The function I am having trouble with is `direction_to_omni`.
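
For reference, the pixel-to-ray mapping that `omni_to_direction` (listed below) computes can be written out as follows. The notation is only a shorthand introduced here: $W$ and $H$ stand for `imageWidth` and `imageHeight`, $k$ for `invDetAffine`, and $(u, v)$ for the normalized image coordinates passed in.

```latex
\begin{aligned}
u' &= u\,W - c_x, \qquad v' = H\,(1 - v) - c_y \\
\hat{u} &= k\,(k_C\,u' - k_E\,v'), \qquad \hat{v} = k\,(-k_D\,u' + v') \\
\rho &= \sqrt{\hat{u}^2 + \hat{v}^2} \\
z(\rho) &= a_0 + a_1\rho + a_2\rho^2 + a_3\rho^3 + a_4\rho^4 \\
\mathbf{d} &= \frac{\left(z(\rho),\; -\hat{u},\; -\hat{v}\right)}{\sqrt{\hat{u}^2 + \hat{v}^2 + z(\rho)^2}}
\end{aligned}
```

Read in the other direction, recovering $(u, v)$ from $\mathbf{d} = (d_x, d_y, d_z)$ would require finding the $\rho$ that satisfies $z(\rho)/\rho = d_x / \sqrt{d_y^2 + d_z^2}$, then undoing the affine step and the pixel scaling.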

    ccl_device float3 omni_to_direction(float u, float v,
                                        float imageWidth, float imageHeight,
                                        float radiusPixels,
                                        float a0, float a1, float a2, float a3, float a4,
                                        float kC, float kD, float kE,
                                        float cx, float cy,
                                        float invDetAffine)
    {
      // scale coordinates and shift center
      u = u * imageWidth - cx;
      v = imageHeight * (1.f - v) - cy;

      if(radiusPixels > 0.f && u*u + v*v > radiusPixels*radiusPixels)
        return make_float3(0.f, 0.f, 0.f);

      // inverse affine transformation
      const float affine_u = invDetAffine * (kC * u - kE * v);
      const float affine_v = invDetAffine * (-kD * u + v);

      // ray z-direction
      const float rho2 = affine_u * affine_u + affine_v * affine_v;
      const float rho = sqrtf(rho2);
      const float z = a0 + a1*rho + a2*rho2 + a3*rho2*rho + a4*rho2*rho2;
      const float invnorm = 1.f / sqrtf(affine_u*affine_u + affine_v*affine_v + z*z);

      return make_float3(
        invnorm * z, // Turns out we need to remove the minus sign here to look in the right direction
        - invnorm * affine_u,
        - invnorm * affine_v
      );
    }

    ccl_device float2 direction_to_omni(float3 dir,
                                        float imageWidth, float imageHeight,
                                        float kC, float kD, float kE,
                                        float cx, float cy)
    {
      const float y = dir.y;
      const float z = dir.z;

      const float affine_u = kC * y + kD * z;
      const float affine_v = kE * y + z;

      const float u = 0.5f * affine_u + (cx / imageWidth);
      const float v = 0.5f * affine_v + (cy / imageHeight);

      return make_float2(u, v);
    }

All of this happens in `kernel_projection.h`.
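
To make the expected behaviour concrete, here is a minimal standalone C++ sketch (not Cycles code) of a round-trip check: a reference copy of the forward mapping, plus one possible exact inverse that solves for `rho` by bisection. Everything named here (`OmniParams`, `Vec2`, `Vec3`, `omni_poly`, `omni_to_direction_ref`, `direction_to_omni_ref`, `rho_max`) is hypothetical and introduced only for illustration. It assumes that `invDetAffine` equals `1 / (kC - kD * kE)`, that the inverse should return the same normalized `(u, v)` that `omni_to_direction` takes as input, and that the polynomial has a sign change in `[0, rho_max]` for the directions of interest.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical container for the calibration values used in the post.
struct OmniParams {
  float width, height;       // image size in pixels
  float a0, a1, a2, a3, a4;  // polynomial coefficients
  float kC, kD, kE;          // affine terms
  float cx, cy;              // distortion centre in pixels
};

struct Vec2 { float x, y; };
struct Vec3 { float x, y, z; };

// z(rho) = a0 + a1*rho + a2*rho^2 + a3*rho^3 + a4*rho^4 (Horner form).
static float omni_poly(const OmniParams &p, float rho) {
  return p.a0 + rho * (p.a1 + rho * (p.a2 + rho * (p.a3 + rho * p.a4)));
}

// Same maths as omni_to_direction in the post, without the radius test.
// Assumption: invDetAffine = 1 / (kC - kD * kE).
Vec3 omni_to_direction_ref(const OmniParams &p, float u, float v) {
  const float pu = u * p.width - p.cx;
  const float pv = p.height * (1.0f - v) - p.cy;
  const float inv_det = 1.0f / (p.kC - p.kD * p.kE);
  const float au = inv_det * (p.kC * pu - p.kE * pv);
  const float av = inv_det * (-p.kD * pu + pv);
  const float z = omni_poly(p, std::sqrt(au * au + av * av));
  const float inv_norm = 1.0f / std::sqrt(au * au + av * av + z * z);
  return {inv_norm * z, -inv_norm * au, -inv_norm * av};
}

// One possible inverse: find rho with r * z(rho) = dir.x * rho by bisection,
// then undo the affine step and the pixel scaling. Returns (-1, -1) when no
// sign change is found in [0, rho_max].
Vec2 direction_to_omni_ref(const OmniParams &p, Vec3 dir, float rho_max) {
  assert(rho_max > 0.0f);
  const float r = std::sqrt(dir.y * dir.y + dir.z * dir.z);
  float au = 0.0f, av = 0.0f;  // a ray along +x maps to the distortion centre
  if (r > 1e-12f) {
    float lo = 0.0f, hi = rho_max;
    const float f_lo = r * omni_poly(p, lo) - dir.x * lo;
    const float f_hi = r * omni_poly(p, hi) - dir.x * hi;
    if (f_lo * f_hi > 0.0f) return {-1.0f, -1.0f};
    for (int i = 0; i < 48; ++i) {
      const float mid = 0.5f * (lo + hi);
      const float f_mid = r * omni_poly(p, mid) - dir.x * mid;
      if ((f_mid > 0.0f) == (f_lo > 0.0f)) lo = mid; else hi = mid;
    }
    const float rho = 0.5f * (lo + hi);
    au = -dir.y * rho / r;  // signs mirror the forward function
    av = -dir.z * rho / r;
  }
  // Forward affine step: the matrix inverse of the one used above.
  const float pu = au + p.kE * av;
  const float pv = p.kD * au + p.kC * av;
  return {(pu + p.cx) / p.width, 1.0f - (pv + p.cy) / p.height};
}
```

Under those assumptions, calling `direction_to_omni_ref(p, omni_to_direction_ref(p, u, v), rho_max)` should give back `(u, v)` up to floating-point error. Note that `direction_to_omni` as posted above never uses `a0`..`a4` and does not convert from pixels back to normalized coordinates, which may be related to the scale issue. Bisection is used here only because it is simple and robust. If the polynomial has several roots in the interval, it is not guaranteed to find the smallest one.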

Do you have any idea where my implementation fails?