…that’s how keymaps got their quirks…

Great work so far! Is there much chance that this will make it out of experimental and land in 3.4 when it is released? It is a shame that you have to do alternate weeks of bug triaging. I can only imagine the progress you would make if you were on the compositor full time.

I would avoid giving any timeline on moving things out of experimental. That’s because, among other things, we will have to wait for other projects, including the Metal backend for macOS, which have their own timelines.

It would be great to see a clarity / local contrast filter once feature parity has been reached with the old compositor.

Please keep on topic. This thread is for feedback on the amazing work Omar is doing. For feature requests and discussions about compositing in general please use the appropriate channels.

The reason we keep asking this is so that we can focus on the short-term targets and goals, namely feature parity with the full-frame compositor.

https://developer.blender.org/rBb828d453e93c30e81d3cb0d5aa3edc553ea9a333

The filter nodes are in master!

Fantastic. What else is needed to do the keyer node?

Question: there are factors such as a huge number of different images (layers, AOVs, other images) and their resolution. Since the new compositor uses the GPU, a crash may occur with a large number of textures. The old compositor, which uses the CPU, gets around this limitation because it is possible to increase memory (in the extreme case, by enlarging the swap file). The GPU does not have that option, which is especially critical when the video card has little video memory. Will some workaround be created that allows compositing in this case, even if it is slow?

There are two kinds of limitations when it comes to textures on GPUs.

First, the number of textures, which is similar to how EEVEE refuses to render materials with a large number of textures. This is much less of an issue for the realtime compositor because we split the compositor node tree into multiple shaders, so we can always split more granularly to reduce the number of textures used per shader. Moreover, the compositor node tree is scheduled in such a way as to minimize VRAM usage, which in turn also minimizes the number of textures used per shader.
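To make the splitting idea concrete, here is a toy sketch in Python. It is not Blender's scheduler: the function name, the `(name, textures)` node representation, and the greedy strategy are all assumptions made for illustration, and it ignores the intermediate results that would have to be passed between groups.

```python
def split_into_shaders(nodes, max_textures):
    """Greedily group consecutive nodes so that each group samples at most
    `max_textures` distinct textures.

    `nodes` is a list of (name, textures) pairs in evaluation order.
    Hypothetical representation, for illustration only.
    """
    groups = []
    current_nodes, current_textures = [], set()
    for name, textures in nodes:
        textures = set(textures)
        if len(textures) > max_textures:
            raise ValueError(f"node {name!r} alone needs {len(textures)} textures")
        merged = current_textures | textures
        if current_nodes and len(merged) > max_textures:
            # Adding this node would exceed the limit: close the current
            # group and start a new one with this node.
            groups.append((current_nodes, current_textures))
            current_nodes, current_textures = [], set()
            merged = textures
        current_nodes.append(name)
        current_textures = merged
    if current_nodes:
        groups.append((current_nodes, current_textures))
    return groups


tree = [
    ("mix", ["a", "b"]),
    ("blur", ["c"]),
    ("alpha_over", ["d", "e"]),
    ("filter", ["e"]),
]
for node_names, textures in split_into_shaders(tree, max_textures=3):
    print(node_names, sorted(textures))
```

With a lower `max_textures` the same tree simply ends up in more, smaller groups, which is the sense in which a finer split reduces the number of textures per shader.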

Second, the amount of VRAM used by the compositor node tree. As mentioned, the compositor node tree is optimized for minimum VRAM usage. However, even if it exceeds the VRAM in your GPU, your graphics driver will automatically start swapping to host RAM, and even to swap space, at reduced performance. So the GPU still has that opportunity; it is just done at the driver level, not at the Blender level.
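For a rough sense of scale, the memory taken by one uncompressed texture is width × height × channels × bytes per channel. The sketch below just does that arithmetic; the default of four channels at 2 bytes each (half-float RGBA) is an assumption for the example, not a statement about the formats the compositor actually allocates.

```python
def texture_bytes(width, height, channels=4, bytes_per_channel=2):
    """Size in bytes of one uncompressed texture.

    The defaults (RGBA, 16-bit float) are an assumption for illustration.
    """
    return width * height * channels * bytes_per_channel


def to_mib(num_bytes):
    """Convert a byte count to mebibytes."""
    return num_bytes / (1024 * 1024)


one_hd = texture_bytes(1920, 1080)
print(f"{to_mib(one_hd):.1f} MiB per 1920x1080 texture")
print(f"{to_mib(20 * one_hd):.1f} MiB for 20 such textures")
```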

The Keying node makes use of many operations, including morphological operators. So those need to be implemented first, and consequently, this node will take some time to implement.
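For readers unfamiliar with morphological operators, the two basic ones are dilation (each pixel becomes the maximum of its neighbourhood) and erosion (the minimum). The following is a minimal pure-Python sketch on a 2D list with a square neighbourhood; the function names and the clamped border handling are choices made for this example and say nothing about how the compositor implements them on the GPU.

```python
def _morph(mask, radius, reduce_fn):
    """Apply reduce_fn (min or max) over a square window around each pixel.

    Windows are clipped at the image border.
    """
    height, width = len(mask), len(mask[0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            window = [
                mask[ny][nx]
                for ny in range(max(0, y - radius), min(height, y + radius + 1))
                for nx in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            row.append(reduce_fn(window))
        out.append(row)
    return out


def dilate(mask, radius=1):
    """Grow bright regions: each pixel takes the maximum of its window."""
    return _morph(mask, radius, max)


def erode(mask, radius=1):
    """Shrink bright regions: each pixel takes the minimum of its window."""
    return _morph(mask, radius, min)


matte = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
for row in dilate(matte):
    print(row)
```

In a keyer, operations like these are typically used to grow or shrink the matte before it is applied.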

This could offer new ways to work with the back plates and other image references once the scale node is available.

Is there a way, or a node, that could send my composite output to a 3D plane as an image plane?

I mean directly compositing only my 3D image plane from the compositor.

I’m used to using Mr Lan Huber’s method of tracking and keying to place my character in 3D space.

This is unlikely to be supported. But you could do the opposite of this workflow, which would essentially involve positioning your planes and using a node like UV Map to map your character on the UV pass masked by the ID masks of the planes. But passes are not yet supported, so you will have to wait a bit for this.
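The idea behind that reverse workflow can be sketched per pixel: wherever the ID mask of the plane is set, look up the character footage at the coordinates stored in the UV pass; everywhere else, keep the render. The snippet below is only an illustration in plain Python. The function name, nearest-neighbour sampling, and the convention that (0, 0) maps to the first row and column are assumptions, not the behaviour of any Blender node.

```python
def map_uv_masked(source, uv_pass, id_mask, background):
    """Composite `source` onto `background` through a UV pass.

    source:     2D list of pixels (the keyed character footage)
    uv_pass:    2D list of (u, v) pairs in [0, 1], same size as background
    id_mask:    2D list of 0/1 values marking the plane's pixels
    background: 2D list of pixels (the rendered scene)
    All inputs are hypothetical stand-ins for render passes.
    """
    src_h, src_w = len(source), len(source[0])
    result = []
    for y, bg_row in enumerate(background):
        row = []
        for x, bg_pixel in enumerate(bg_row):
            if id_mask[y][x]:
                u, v = uv_pass[y][x]
                # Nearest-neighbour lookup, clamped to the source bounds.
                sx = min(src_w - 1, max(0, int(u * src_w)))
                sy = min(src_h - 1, max(0, int(v * src_h)))
                row.append(source[sy][sx])
            else:
                row.append(bg_pixel)
        result.append(row)
    return result


footage = [["A", "B"], ["C", "D"]]
render = [["-", "-", "-"]]
uvs = [[(0.0, 0.0), (0.75, 0.0), (0.75, 0.75)]]
mask = [[1, 0, 1]]
print(map_uv_masked(footage, uvs, mask, render))
```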

Now that masking has been mentioned: I’ve tried using masks, and they always shift position relative to the viewport shape, even when inside the camera view. Is a change planned to use the camera proportions/resolution instead?

The scale and distort nodes are not yet implemented. I expect that scene scale or camera view will arrive with these nodes.

More generally, the plan is to limit the compositing space to the camera if it has an opaque camera passepartout. The same goes for border rendering when EEVEE supports it.

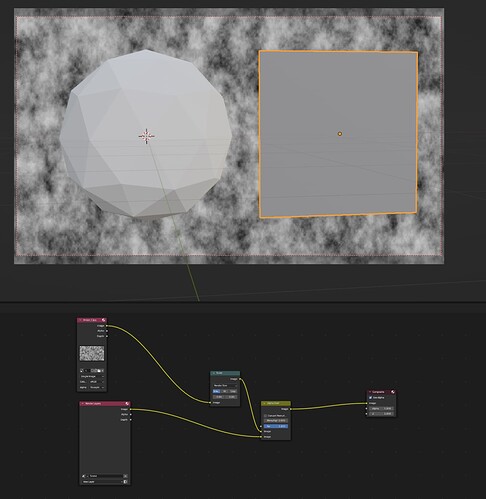

I’m testing the Render Layers – they are shown as complete in the node listing, but maybe the render engines need updates.

I made a simple test scene with a sphere and a cube each in their own View Layer. I set the viewport to rendering in Cycles, enabled the viewport compositor, and set the RenderLayers setting to CubeLayer, but the viewport still displays the SphereLayer that is selected in the View Layer dropdown.

I’m just trying to figure out whether this is the currently expected behavior, and if so, what the task is for updating the render engines to send the correct Render Layer data to the viewport compositor.

Great to see this moving so quickly.

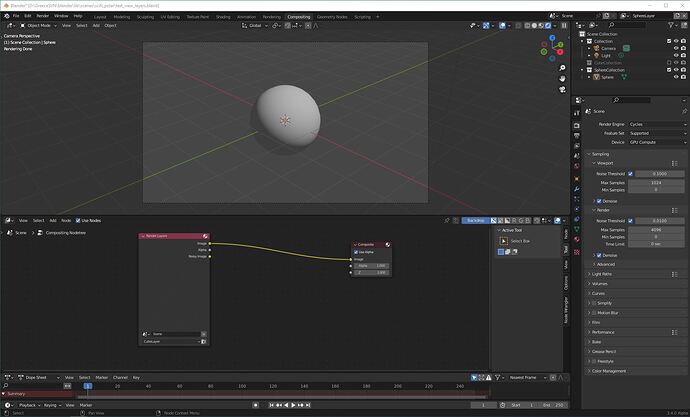

This is expected as multi-layer and multi-pass rendering is not yet supported.

We haven’t started implementing support for that, but I will make sure to let you know when we do.

Is it possible to use the realtime compositor with OpenCV, that is, to stream video and run the realtime compositor over the live input video? Just wondering.

No, the realtime compositor is only used for viewport compositing at the moment.