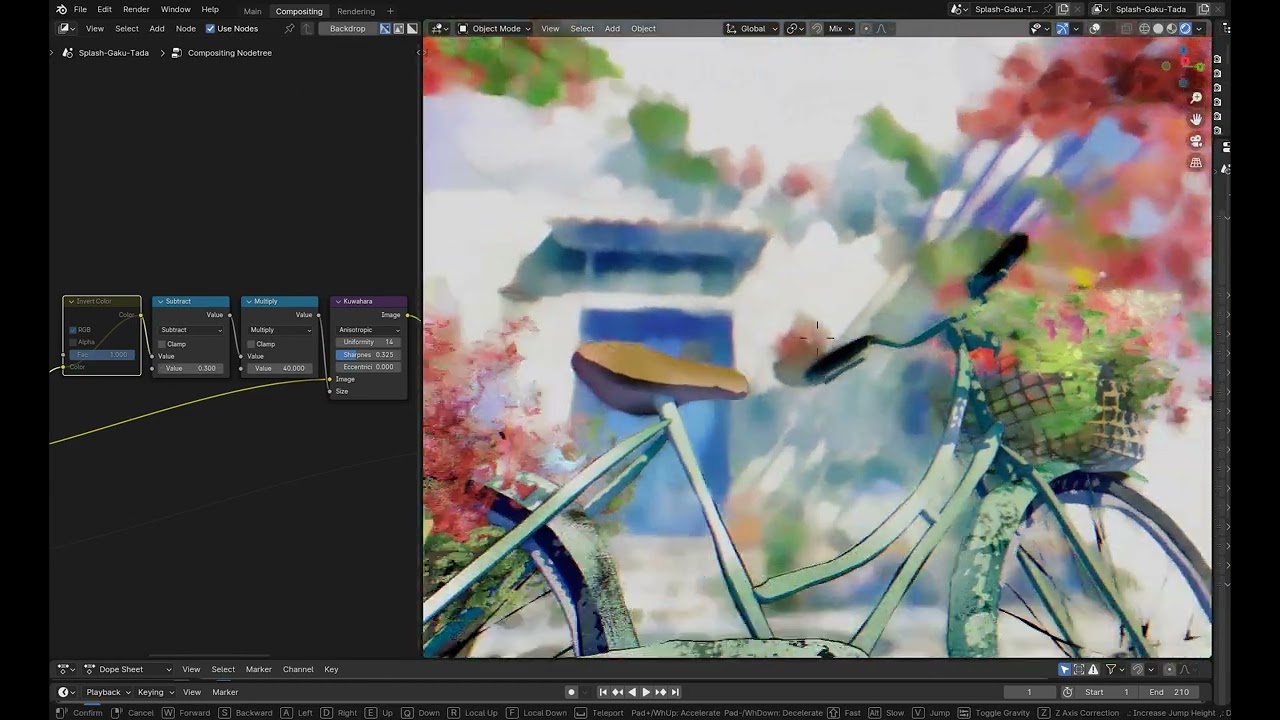

See: #112732 - Compositor: Procedural texturing, Step 1

Thanks.

One other issue I have is that the way image alpha is interpreted seems to differ vastly between these compositors, which becomes a real issue when used with the Alpha Over node. I need to use the “Straight” alpha mode of the reader with the Tiled (or the FullFrame) compositor, but for the GPU compositor I need to use the “Premultiplied” alpha mode to get the correct result out of the “Alpha Over” node. I am just wondering if this is a known issue.

There shouldn’t be such differences. Can you open a bug report?
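For context, here is a minimal sketch of the standard Porter-Duff “over” operator on premultiplied RGBA. It shows why feeding straight-alpha pixels into an operation that expects premultiplied input changes the result. This is generic compositing math, not Blender's Alpha Over code, and the function names are hypothetical.

```python
"""Porter-Duff "over" on premultiplied RGBA tuples (generic math, not Blender code)."""

RGBA = tuple[float, float, float, float]


def straight_to_premultiplied(c: RGBA) -> RGBA:
    """Multiply the color channels by alpha; alpha itself is unchanged."""
    r, g, b, a = c
    return (r * a, g * a, b * a, a)


def over_premultiplied(fg: RGBA, bg: RGBA) -> RGBA:
    """out = fg + (1 - fg.alpha) * bg, applied to all four channels."""
    k = 1.0 - fg[3]
    return tuple(f + k * b for f, b in zip(fg, bg))


if __name__ == "__main__":
    fg_straight = (1.0, 0.0, 0.0, 0.5)  # half-transparent red, straight alpha
    bg = (0.0, 0.0, 1.0, 1.0)           # opaque blue (identical in both conventions)
    correct = over_premultiplied(straight_to_premultiplied(fg_straight), bg)
    wrong = over_premultiplied(fg_straight, bg)  # straight data fed in as-is
    print(correct)  # (0.5, 0.0, 0.5, 1.0)
    print(wrong)    # (1.0, 0.0, 0.5, 1.0): the red comes out twice as strong
```

If one compositor converts the input to premultiplied before the over operation and the other does not, the same file gives the second result in one and the first in the other, which matches the kind of mismatch described above.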

I'm doing some compositing with Blender and would like to reconstruct the Ghosts glare effect using other nodes (blur, transforms, glow, whatever is necessary) so I can have more control over this effect, even at the cost of performance. I was wondering whether the developers could share a breakdown of the operations needed to build this effect from scratch.

Is it possible?

Yes, it is possible, you just need blur, scale, and color mix operations. The ghost glare works as follows:

- Compute a base ghost image composed of two ghosts, generated by blurring the highlights with two different radii and adding both blur results after flipping the larger-radius blur around the origin.

- Keep adding that base ghost image, each time with a different scale and color modulation.
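The two steps above can be sketched roughly as follows, as a mental model for rebuilding the effect with nodes. This is not Blender's implementation: it assumes single-channel images stored as lists of lists, a simple box blur in place of Blender's blur, nearest-neighbour scaling about the image centre (a negative factor flips), a plain threshold to extract the highlights, and made-up radii, scales and gains. For an RGB image, the color modulation would be a separate gain per channel.

```python
"""Rough sketch of a ghost-style glare from blur, scale and add operations.

Assumptions (not Blender's code): single-channel images as lists of lists,
a box blur, nearest-neighbour scaling about the centre, illustrative values.
"""

Image = list[list[float]]


def extract_highlights(img: Image, threshold: float) -> Image:
    """Keep only the energy above the threshold."""
    return [[max(v - threshold, 0.0) for v in row] for row in img]


def box_blur(img: Image, radius: int) -> Image:
    """Mean over a (2r+1)x(2r+1) window, clamping coordinates at the borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    total += img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
            out[y][x] = total / (2 * radius + 1) ** 2
    return out


def scale_about_center(img: Image, factor: float) -> Image:
    """Nearest-neighbour scale about the centre; a negative factor also flips."""
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sy = round(cy + (y - cy) / factor)
            sx = round(cx + (x - cx) / factor)
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = img[sy][sx]
    return out


def add(a: Image, b: Image, gain: float = 1.0) -> Image:
    """Per-pixel a + gain * b."""
    return [[pa + gain * pb for pa, pb in zip(ra, rb)] for ra, rb in zip(a, b)]


def ghost_glare(img: Image, threshold: float = 1.0, small_radius: int = 1,
                big_radius: int = 3, scales=(-0.5, 0.75, -1.5),
                gains=(0.5, 0.3, 0.2)) -> Image:
    highlights = extract_highlights(img, threshold)
    # Step 1: base ghost = small-radius blur + big-radius blur flipped about the centre.
    small = box_blur(highlights, small_radius)
    big_flipped = scale_about_center(box_blur(highlights, big_radius), -1.0)
    base = add(small, big_flipped)
    # Step 2: accumulate copies of the base ghost, each with its own scale and gain.
    glare = [[0.0] * len(base[0]) for _ in base]
    for factor, gain in zip(scales, gains):
        glare = add(glare, scale_about_center(base, factor), gain)
    return glare
```

In a node tree, each blur maps to a Blur node, each `scale_about_center` call to a scale in a Transform node, and each `add` with a gain to a Mix node, which is where the extra control comes from.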

See the code for more details:

I temporarily enabled the Depth pass for the viewport compositor, only for EEVEE, in v4.1. One should enable full precision in the node tree options to be able to use it correctly.
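A minimal sketch of why precision matters for depth, assuming the non-full-precision mode stores values as 16-bit half floats (an assumption; the post only says full precision is needed). Python's `struct` module can round-trip a value through IEEE 754 binary16 with the `e` format, which shows how nearby depth values collapse:

```python
import struct


def to_half(x: float) -> float:
    """Round-trip a float through IEEE 754 half precision (binary16)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


# Two surfaces 0.1 units apart at a depth of about 1000 units:
print(to_half(1000.1), to_half(1000.2))  # 1000.0 1000.0: indistinguishable
# Half floats have a 10-bit fraction, so between 512 and 1024 the step is 0.5.
print(to_half(1000.3))  # 1000.5
```

Depth values also easily exceed the largest finite half value, 65504, which is another reason to switch to full precision for this pass.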

Awesome! We are slowly getting options to make realtime custom bokeh!

Cycles and the Defocus node in the Realtime Compositor will be exciting.

So that means no more “hacky” plane with a bokeh texture in front of the render camera to get a pleasant, photographic look.

Regarding the following issue:

A solution at the same performance level is impossible. So we have two alternatives:

- Add an option to allow the user to use a more accurate method, which is orders of magnitude slower, but still faster than the anisotropic variant.

- We document the fact that those artifacts are expected when you have very high values or high resolution images. The user is then expected to clamp the image or scale it down to reduce the artifacts.

What do you think? And if we add the option, what should be the default?

I think option 1 is better: an arbitrary limit that has to be clamped later seems both unintuitive and unnecessary. Performance hits are to be expected on high-resolution images anyway.

A more literal definition of “orders of magnitude” would be helpful for voting. 2ms vs 20ms, 4 secs vs 2 secs, 2 min vs 20 min?

The execution time of the fast method is not affected by the radius, so a radius of 10 takes the same time as a radius of 100 or 1000. The slow method is quadratic in the radius, so it can be 10x slower for low radii, 100x slower for medium radii, and 1000x slower for huge radii. However, the slow method should be similar in performance to the anisotropic version, so you can compare the classic and the anisotropic methods to get a sense of the speed difference.
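To make the radius dependence concrete, here is a 1D sketch that assumes the fast method is built on prefix sums (a summed-area table in 2D). The thread does not say how either method works, so treat this as an illustration of the general trade-off, not the compositor's code. The direct version sums every sample in the window (quadratic in the radius in 2D); the prefix-sum version does two lookups per sample regardless of radius. The hypothetical `float32` flag rounds the running totals to single precision: with bright values or long rows the totals grow large, and their differences lose precision, which is one plausible source of the artifacts mentioned above.

```python
import struct


def f32(x: float) -> float:
    """Round a float to IEEE 754 single precision."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def box_mean_direct(signal: list[float], radius: int) -> list[float]:
    """Sum every sample in the window: cost grows with the radius."""
    n = len(signal)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        out.append(sum(signal[lo:hi]) / (hi - lo))
    return out


def box_mean_prefix(signal: list[float], radius: int, float32: bool = False) -> list[float]:
    """Two prefix-sum lookups per sample, whatever the radius."""
    prefix = [0.0]
    for v in signal:
        total = prefix[-1] + v
        # Optionally round each running total to single precision.
        prefix.append(f32(total) if float32 else total)
    n = len(signal)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        out.append((prefix[hi] - prefix[lo]) / (hi - lo))
    return out


if __name__ == "__main__":
    signal = [1e6 + 0.1 * (i % 7) for i in range(1000)]  # bright values, long row
    exact = box_mean_direct(signal, 2)
    for flag in (False, True):
        approx = box_mean_prefix(signal, 2, float32=flag)
        err = max(abs(a - b) for a, b in zip(exact, approx))
        print(f"float32={flag}: max error {err:.3g}")
```

Clamping the input or scaling it down keeps the running totals small, which is why option 2 reduces the artifacts.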

Hello

I don’t know where to post my feedback/problem, but the compositor and the File Output node are involved in the process, so maybe someone here can point me to where to look for help.

This is also related to a bug: #115468

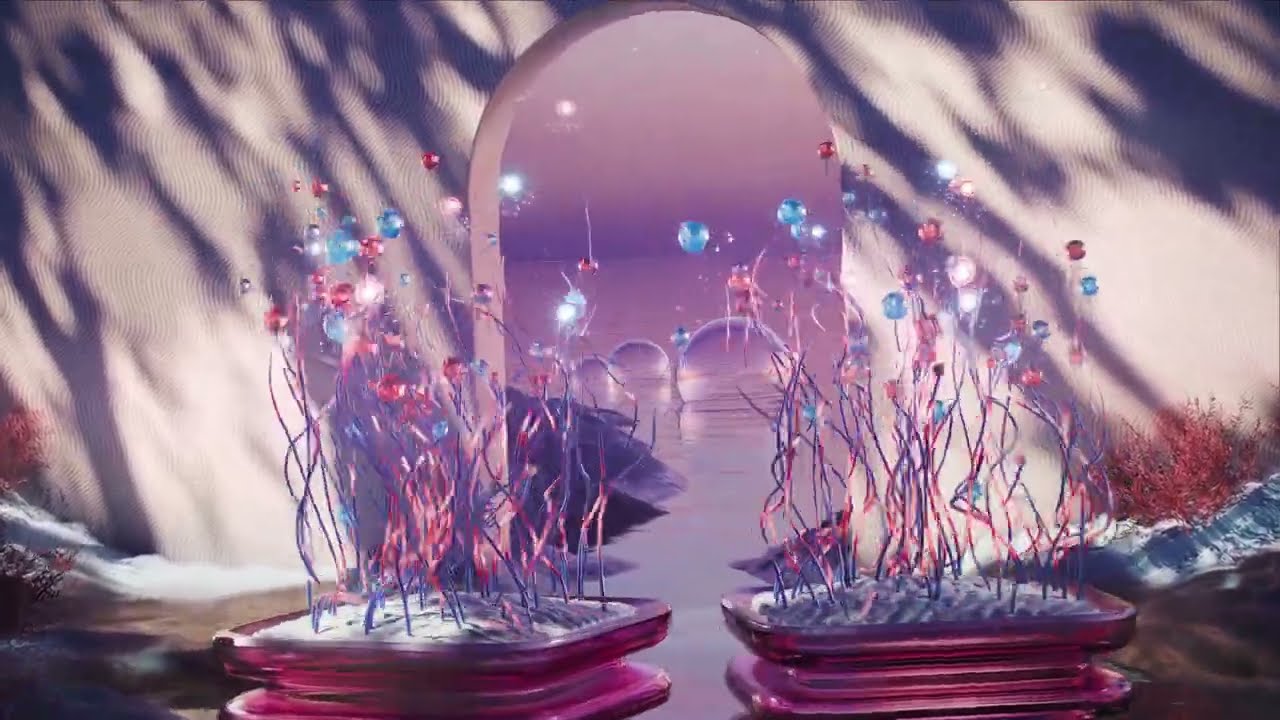

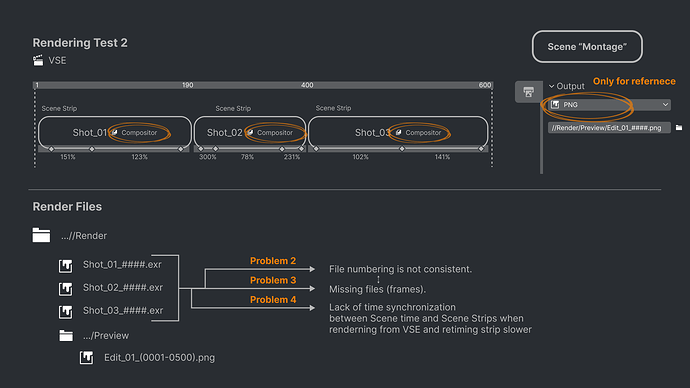

Blender is (as far as I know) the only 3D program that can directly edit scene strips on a timeline and do realtime compositing!

I think this is an under-promoted feature.

This makes it possible not only to plan production well but also to speed up many processes.

Normally, if you want to create several scenes for music/audio, you have to render each scene separately and waste a lot of time and resources rendering frames that may end up unused, sped up, or cut in the final edit.

Blender, however, has this wonderful ability to save time and resources. Thanks to the VSE and retiming, you can render only what is needed in the final animation, without worrying about editing hundreds of f-curves if you need to update the final timeline edit. This is a big deal. This workflow must be supported, not only to make the production process easier but also to avoid wasting energy.

This is my test animation for this workflow:

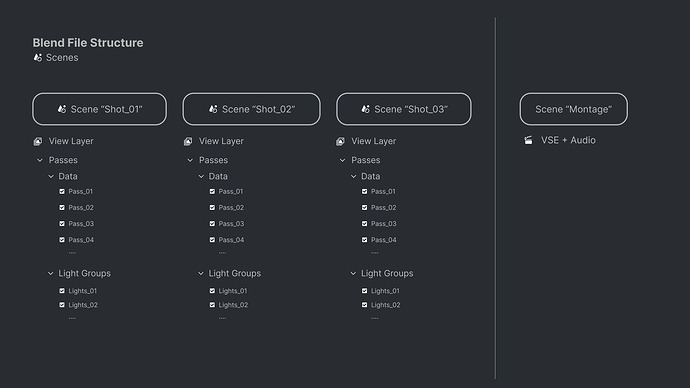

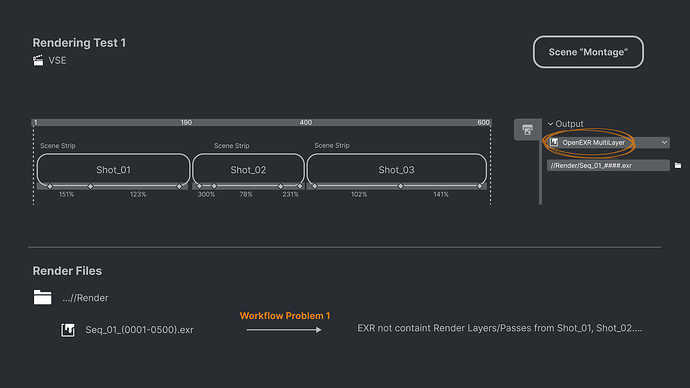

What workflow am I looking for, and where do I see problems?

Let’s dive in:

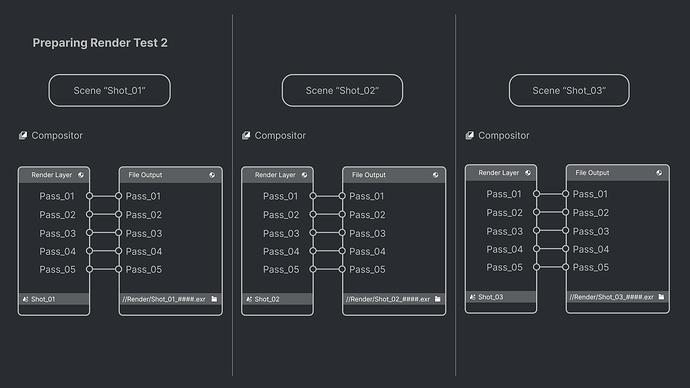

This is a classic setup. Each scene has passes and light groups for compositing later.

This stage is where all the magic happens. The ability to edit animations and to speed up and slow down multiple strips to match the audio is a game-changer.

If I knew how to get around this particular puzzle, everything would be great, but when rendering via the VSE we don’t get render layers from scene strips, which is understandable. Maybe the solution lies at this stage, though; I don’t know.

Preparing a solution for passes and OpenEXR MultiLayer.

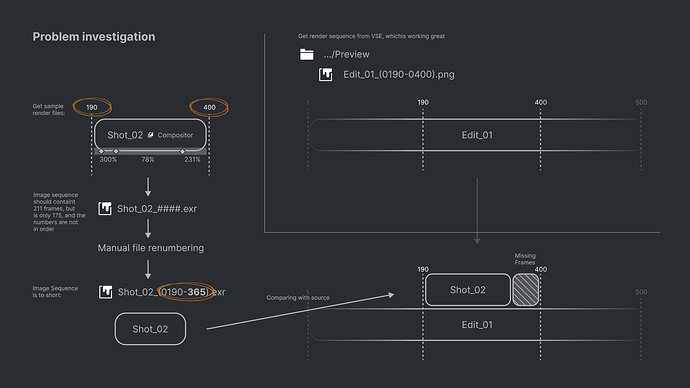

After a long struggle to understand the problem, I finally managed to find the cause/bug.

I think it has something to do with subframes and the File Output node.

What do you think about this and is there any alternative way to achieve this workflow?

Testing out the compositor, I noticed that “Color Ramps” can’t be mapped to the camera. The only way to do it is to create a gradient texture and then map a color ramp as a tint. It’s not intuitive and not exactly the easiest thing to set up.

Not too sure what else to say, except that we need this as compositors.
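As a rough sketch of the workaround described above (a gradient mapped through a color ramp and used as a tint), here is the underlying math in plain Python. It assumes a horizontal screen-space gradient from 0 at the left edge to 1 at the right edge, a ramp with linear interpolation between sorted stops, and a multiply tint. The function names are hypothetical, and Blender's Color Ramp also offers other interpolation modes.

```python
Color = tuple[float, float, float]


def color_ramp(t: float, stops: list[tuple[float, Color]]) -> Color:
    """Linear interpolation between sorted (position, color) stops, clamped at the ends."""
    if t <= stops[0][0]:
        return stops[0][1]
    for (p0, c0), (p1, c1) in zip(stops, stops[1:]):
        if t <= p1:
            f = (t - p0) / (p1 - p0) if p1 > p0 else 0.0
            return tuple(a + f * (b - a) for a, b in zip(c0, c1))
    return stops[-1][1]


def tint_with_screen_gradient(image: list[list[Color]],
                              stops: list[tuple[float, Color]]) -> list[list[Color]]:
    """Multiply each pixel by the ramp color of its horizontal screen position."""
    width = len(image[0])
    out = []
    for row in image:
        new_row = []
        for x, pixel in enumerate(row):
            u = x / (width - 1) if width > 1 else 0.0  # 0 at the left edge, 1 at the right
            ramp = color_ramp(u, stops)
            new_row.append(tuple(p * r for p, r in zip(pixel, ramp)))
        out.append(new_row)
    return out
```

Because the gradient coordinate comes from the pixel position, the ramp stays locked to the frame (the camera view) rather than to any object in the scene.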

There will be a project for procedural texturing in the compositor that should hopefully make this easier.

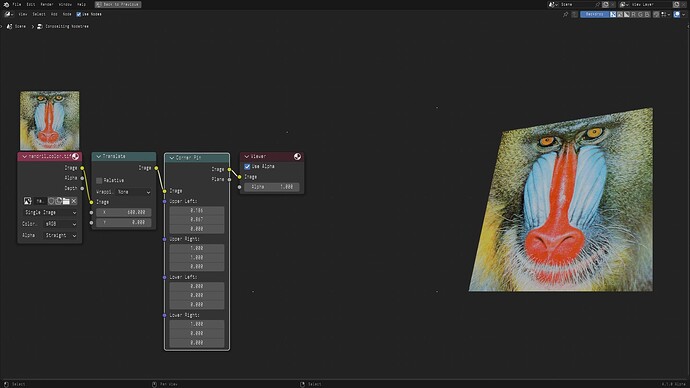

Are there plans to add on-screen controllers to the Transform nodes? If so, is that further down the road in development?

Thanks

Yeah, absolutely correct. Dealing with sliders is a huge pain, way too time-consuming, and leads to more human error because you're left guessing numbers.

There are no immediate plans at the moment. And now that I have looked at the existing widgets, they all seem broken with regard to transformations, as shown here, where the corners are drawn in a different place than the actual image:

So could you open a bug report for those? It should also serve as a reminder for the other transform nodes.
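For reference when filing the report: the corners a widget draws should come from the same transform that is applied to the image. Here is a minimal sketch, assuming the transform scales and rotates about the image centre and then translates. That order is an assumption, not taken from Blender's code, and the function name is hypothetical.

```python
import math

Point = tuple[float, float]


def transformed_corners(width: float, height: float, dx: float, dy: float,
                        angle: float, scale: float) -> list[Point]:
    """Corners of a width x height image centred on the origin, after scaling
    and rotating about the centre and then translating by (dx, dy).
    `angle` is in radians, counter-clockwise."""
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    hw, hh = width / 2, height / 2
    corners = [(-hw, -hh), (hw, -hh), (hw, hh), (-hw, hh)]
    result = []
    for x, y in corners:
        x, y = x * scale, y * scale                       # scale about the centre
        xr, yr = x * cos_a - y * sin_a, x * sin_a + y * cos_a  # rotate about the centre
        result.append((xr + dx, yr + dy))                 # then translate
    return result
```

If the widget applies these steps in a different order, or about a different pivot, than the node does, its corners drift away from the image, which is the symptom described above.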

Is there a way to test the Cryptomatte support that was implemented for the viewport?