I made a post on Right-Click Select a while ago about color grading. Vote it up:

I’m interested too. Waiting for an answer.

It’s already in master, just download the latest alpha and it’s there.

Oh, wow, what a fast response.

Thank you a lot. I highly appreciate it. =)

To double down on the fixed-effect shaders like bloom and other standard techniques from before…

I created my own, somewhat more deliberate bloom effect with the realtime compositor. It requires me to blur the image strongly (400 pixels wide) to get a bloom effect similar to EEVEE’s bloom. The difference on my RTX 3060 is around 50% GPU usage (realtime compositor) just for the Blur node, versus 1 to 2% with EEVEE bloom.
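To put rough numbers on why a very wide blur can be so much more expensive than a dedicated bloom effect, here is a minimal cost-model sketch. It compares reads per output pixel for a naive 2D kernel, a separable Gaussian, and a downsample/upsample pyramid of the kind real-time bloom effects commonly use. These are textbook models under stated assumptions, not a description of how Blender’s Blur node or EEVEE’s bloom are actually implemented; treating the 400 px as a radius and the `taps_per_level` value are hypothetical choices for illustration.

```python
import math


def taps_per_pixel(radius: int, taps_per_level: int = 9) -> dict:
    """Rough reads per output pixel for three blur strategies.

    Textbook cost models only; they do not describe Blender's actual
    Blur node or EEVEE bloom. `taps_per_level` is a hypothetical fixed
    filter size used at every level of the pyramid.
    """
    width = 2 * radius + 1
    # Number of halvings until one texel spans at least `radius` pixels.
    levels = max(1, math.ceil(math.log2(radius)))
    # Each pyramid level has 1/4 of the pixels of the previous one, so the
    # cost is expressed in full-resolution pixel units, counting both the
    # downsample and the upsample passes.
    pyramid = 2 * sum(taps_per_level * 0.25 ** level for level in range(levels))
    return {
        "naive_2d": width * width,  # every pixel in the square window
        "separable": 2 * width,     # one horizontal plus one vertical pass
        "pyramid": pyramid,         # downsample/upsample chain
    }


costs = taps_per_pixel(400)
for name, cost in costs.items():
    print(f"{name:>10}: {cost:,.0f}")
```

Under these assumptions, the naive kernel needs 641,601 reads per pixel, the separable one 1,602, and the pyramid about 24, which is the kind of gap that could explain 50% versus 1 to 2% GPU usage.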

So maybe you could still consider performance-optimized shaders ready for use? And/or a code node?

For the realtime compositor, a really great addition would be showing the computation time needed for each node, just like in Geometry Nodes. That would show us how performant our setups are and where we could improve them if needed.

Yeah, this could be everywhere in Blender, please. Shader nodes too.

I don’t think it’s possible.

At the moment, geometry nodes are the only ones that are executed on the CPU node by node. Even so, when we talk about a node “executing”, it often does not mean that the node itself computed anything.

Even Geometry Nodes has function nodes. They simply add a function reference to the field’s stack. Most shader nodes work the same way: in the simple case, the nodes just copy strings of code.
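A toy sketch of the string-copying point: each node contributes one snippet, and the snippets are concatenated into a single program. Once compiled, there is no per-node boundary left on the GPU to time; only the whole dispatch can be measured. The node list, variable names, and GLSL-like syntax are hypothetical and are not Blender’s GPU code generator.

```python
from typing import List, Tuple

# Hypothetical node tree as (output variable, expression) pairs in
# dependency order. Names and syntax are illustrative only.
NODES: List[Tuple[str, str]] = [
    ("a", "texCoord.x"),
    ("b", "a * 10.0"),
    ("c", "fract(sin(b) * 43758.5453)"),
]


def generate_shader(nodes: List[Tuple[str, str]]) -> str:
    """Concatenate each node's snippet into one GLSL-like function body."""
    body = "\n".join(f"    float {name} = {expr};" for name, expr in nodes)
    return "void node_tree() {\n" + body + "\n}"


source = generate_shader(NODES)
print(source)
```

The result is one string handed to the shader compiler, which is free to reorder or merge the per-node lines, so “time spent in node b” is not something the GPU reports.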

And measuring the speed of a separate part of the code on the GPU… I don’t think that is realistic at all.

And yes, even Geometry Nodes has problems caused by nodes that take a long time to run, for example, Delete Geometry. But the main difficulty lies with the Math nodes, because (so far) math functions are not logged with a reference back to the node they came from.
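A minimal sketch of why per-node timing is misleading for math nodes, assuming a simplified lazy-field model (the `Field` class and node functions below are hypothetical, not Blender’s field API). “Executing” the multiply node only wraps a function; the per-element work happens later, inside the node that evaluates the field, so a timer around each node would charge the cost to Set Position rather than to the Math node.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Field:
    """A lazily evaluated per-element function (toy model of a field)."""
    fn: Callable[[float], float]


calls = {"count": 0}


def position_field() -> Field:
    def fn(x: float) -> float:
        calls["count"] += 1  # count how often an element is actually read
        return x
    return Field(fn)


def multiply_node(field: Field, factor: float) -> Field:
    # "Executing" this node only wraps the function; no element is computed.
    return Field(lambda x: field.fn(x) * factor)


def set_position_node(positions: List[float], offset: Field) -> List[float]:
    # The real per-element work happens here, when the field is evaluated.
    return [p + offset.fn(p) for p in positions]


offset = multiply_node(position_field(), 2.0)
print(calls["count"])  # 0: the multiply node did no per-element work
result = set_position_node([1.0, 2.0, 3.0], offset)
print(result, calls["count"])  # [3.0, 6.0, 9.0] 3
```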

Showing timings only seems realistic for things like saving to a file, the whole compositor… or some specific step like code generation time…

Guys, is it possible, or will it be possible in the future, to mix the Mist pass with the viewport result in real time?

Question 2:

Is it possible in any way to add a LUT baked in DaVinci Resolve to the render, through existing functionality or the realtime compositor?

Thanks a lot for any answer. Blender devs, you are the best, guys!!!

Same here. To get good-looking bloom, I needed 16 Fast Gaussian blurs connected in parallel, with a base radius of 0.001, where each blur’s size is multiplied by 1.6 (adjustable) to get the size of the next one in the stack.
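The stack described above is a geometric series of blur sizes. A minimal sketch that computes them, assuming the size of blur *i* is `0.001 * 1.6**i` in whatever units the Fast Gaussian size uses in that setup; the function name and defaults are hypothetical.

```python
def blur_radii(base: float = 0.001, factor: float = 1.6, count: int = 16) -> list:
    """Sizes for a stack of blurs where each size is the previous one times `factor`."""
    return [base * factor ** i for i in range(count)]


radii = blur_radii()
print(f"first: {radii[0]:.4f}, last: {radii[-1]:.4f}")
```

With 16 blurs, the last one is 1.6^15 ≈ 1152.9 times larger than the first, so the stack covers a very wide range of scales, which is what gives bloom its soft, long falloff.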

Do you have a tutorial I can read?

Is @OmarEmaraDev back to work on the Realtime Compositor? I can’t believe that Blender 3.5 is almost out and we aren’t going to have the Fog Glow node in realtime.

Yes, I am now back again starting this week, though I will only be working part time and will thus only be available every other week.

> Can’t believe that blender 3.5 is almost out and we aren’t going to have the fog glow node in realtime.

Indeed, the 3.5 ship has already sailed, unfortunately. But I am already eyeing an implementation of Fog Glow for 3.6.

Great to hear that you’re back! I read the notes from the 2023-03-13 Eevee/Viewport Module Meeting and am glad that you’re working toward enabling multi-layer workflows.

We just released a beta of our new virtual production system, which uses the Viewport Compositor heavily. There is an iOS app called Jetset (sign up at Lightcraft Jetset if interested) and a desktop counterpart called Autoshot that uses the Jetset-captured data to build a fully 3D-tracked and composited VFX shot in Blender (downloads are free at Lightcraft Jetset).

I already built a workflow for tracking 3D garbage mattes with the Viewport Compositor (Lightcraft Jetset) and then wiring them into the 2D compositor (Lightcraft Jetset), but it would be amazing to have it all wired into the Viewport Compositor in real time.

It’s exciting to see all the viewport & EEVEE-Next work flying along!

Hey @OmarEmaraDev!

When you get around to implementing the ID mask node for the real-time compositor, could you also update it to account for ID masks of objects that are out of focus?

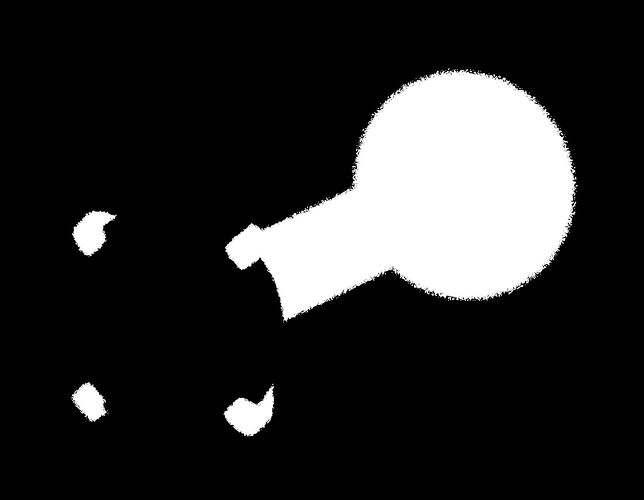

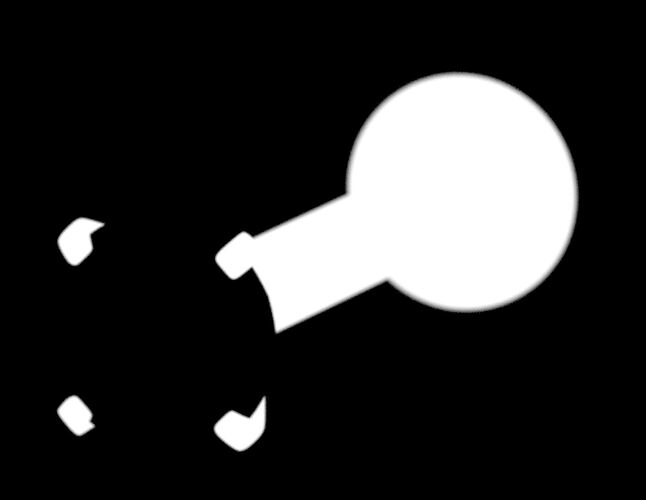

Currently, the ID Mask node produces jagged edges for objects that are out of focus, as shown in the images.

The Cryptomatte node produces the correct mask for out-of-focus objects, but it cannot be used inside node groups.

Thanks for all your hard work!

-Tim

As far as I know, this is inherent to the ID pass itself, not to the node implementation. That’s one of the reasons why we have a Cryptomatte node. So what you are asking is not possible from the compositor’s point of view.

That’s unfortunate to hear. Who is responsible for maintaining the ID pass?

On a side note, is there a way to make the cryptomatte work inside a node group instead?

The ID pass generation is the responsibility of whatever engine produces it, but it is standard at this point. I wouldn’t rely on it changing somehow.

I am not sure about Cryptomatte to be honest, I haven’t looked into it yet.

It “just” looks like it’s sampled only once, when it would need at least something like 16 or 32 samples to be somewhat smooth. At least, these jagged edges have the signature look of low Monte Carlo sampling.
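A minimal sketch that ties the sampling argument to the point about the ID pass. It uses a hypothetical pixel where object 1 covers the left 30% and object 2 the rest, sampled on a regular grid. It assumes an ID-style pass keeps a single ID per pixel (here the most frequent one; a real engine may choose differently). A Cryptomatte-style pass instead keeps the fraction of samples per ID. More samples make the coverage approach the true 0.3, but a single stored ID still yields a hard 0 or 1 mask.

```python
from collections import Counter
from typing import Dict, List, Tuple


def object_at(x: float, y: float) -> int:
    """Hypothetical scene: object 1 covers x < 0.3 of the pixel, object 2 the rest."""
    return 1 if x < 0.3 else 2


def sample_points(n: int) -> List[Tuple[float, float]]:
    """Regular n x n grid of sample positions inside a unit pixel."""
    return [((i + 0.5) / n, (j + 0.5) / n) for i in range(n) for j in range(n)]


def coverage(n: int) -> Dict[int, float]:
    """Fraction of samples that hit each object (what a soft mask needs)."""
    hits = Counter(object_at(x, y) for x, y in sample_points(n))
    total = n * n
    return {obj: count / total for obj, count in hits.items()}


def single_id(n: int) -> int:
    """One ID per pixel: keep only the most frequent ID among the samples."""
    return max(coverage(n).items(), key=lambda item: item[1])[0]


for n in (1, 4):
    print(n * n, "samples:", coverage(n), "single ID:", single_id(n))
```

With 1 sample, object 1 is missed entirely; with 16 samples its coverage is 0.25, close to the true 0.3. The single stored ID is 2 in both cases, though, so a mask built from it stays 0 for object 1 however many samples are taken. That is why extra samples help a coverage-based pass but not an ID pass.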