Sure. Here’s a 1920x1080 image being routed through a Scale node set to Absolute, and resized to 1280x720. However, the image still extends off the viewport edges.

That seems expected. What you probably need is to set the scale mode to Render Size with Fit sizing.
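For reference, a Fit-style scale keeps the aspect ratio and picks the largest uniform factor at which the whole image still fits inside the render size. Below is a minimal sketch of that arithmetic. It assumes that definition of Fit, and `fit_scale` is a hypothetical helper, not Blender API.

```python
def fit_scale(image_w, image_h, render_w, render_h):
    """Return (factor, (width, height)) for Fit-style scaling.

    Assumes Fit means: preserve aspect ratio and make the image as large
    as possible while it still fits entirely inside the render size.
    """
    factor = min(render_w / image_w, render_h / image_h)
    return factor, (round(image_w * factor), round(image_h * factor))


# A 1920x1080 image fitted into a 1280x720 render size.
factor, size = fit_scale(1920, 1080, 1280, 720)
```

With a matching 16:9 aspect ratio, both axes give the same factor (2/3). With a different aspect ratio, the smaller factor wins and one axis ends up shorter than the render size.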

Hmm. Doesn’t seem to affect the output image size.

It seems to work for me. Can you open a bug report?

OK, figured it out. The bug reproduces in the universal-scene-description build but not in the mainline 4.1 alpha build, where the Scale node works as expected.

The USD build must be a few commits behind. I can use the mainline build for compositing in that case.

What’s next? As you can guess, I’m looking forward to the Keyer node...

Hi everyone,

For the last couple of weeks, I have been working on implementing the Glare node, and as I mentioned before, the process has been difficult for a number of reasons. So I just wanted to give a semi-technical update on that.

The Glare node has four modes of operation, each of which is covered in one of the following sections.

Ghosts

The Ghosts operation works by blurring the highlights multiple times, then summing those blurred highlights with some transformations and color modulations applied. The blur radius is at most 16 pixels, yet the blur is done using a recursive filter.

So I went ahead and implemented the recursive blur using a fourth-order Deriche filter. But I couldn’t use it because it turned out to be numerically unstable for high sigma values, and we can’t use doubles like the existing implementation does, due to limited support or much worse performance on GPUs. Many of the tricks I tried to work around this issue didn’t help, including decoupling the causal and anti-causal sequences, dividing the images into blocks, and computing the coefficients using doubles on the CPU.
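To make the "decoupled causal and anti-causal sequences" idea concrete, here is a sketch of a recursive (IIR) smoothing filter in that parallel form. It deliberately uses a simple first-order symmetric exponential kernel instead of the fourth-order Deriche approximation of a Gaussian, and it runs on plain Python floats (which are doubles), so it does not reproduce the single-precision instability. The function name and the `a` parameter are illustrative assumptions.

```python
def recursive_smooth(x, a):
    """Symmetric first-order recursive filter with kernel proportional to a**|k|.

    The causal pass produces the k >= 0 half of the kernel and the
    anti-causal pass the k < 0 half. The two passes are independent
    (decoupled) and summed at the end. Requires 0 < a < 1; samples
    outside the signal are treated as zero.
    """
    n = len(x)
    causal = [0.0] * n
    anti = [0.0] * n
    # Causal pass, left to right: causal[i] = x[i] + a * causal[i - 1]
    for i in range(n):
        causal[i] = x[i] + (a * causal[i - 1] if i > 0 else 0.0)
    # Anti-causal pass, right to left: anti[i] = a * (x[i + 1] + anti[i + 1])
    for i in range(n - 2, -1, -1):
        anti[i] = a * (x[i + 1] + anti[i + 1])
    # Normalize so the infinite kernel sums to 1: sum of a**|k| = (1 + a) / (1 - a).
    k = (1.0 - a) / (1.0 + a)
    return [k * (c + d) for c, d in zip(causal, anti)]


impulse = [0.0] * 41
impulse[20] = 1.0
response = recursive_smooth(impulse, 0.5)
```

Each pass costs a constant amount of work per sample regardless of how wide the kernel is, which is what makes recursive filters attractive for large sigma. The catch is that every output depends on the previous one, so precision errors can accumulate along the row.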

As far as I can tell, the only solution is to lower the order of the filter or decompose it into two lower-order filters. Since this is only significant for high sigma values, two parallel second-order Van Vliet filters could be used, because Van Vliet is more accurate for high sigma values. This is something I haven’t figured out yet.
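The parallel decomposition is the standard partial-fraction view of an IIR filter. Assume the denominator of a fourth-order recursive filter factors into two real second-order polynomials $A_1$ and $A_2$ in $z^{-1}$ with no common roots, and the numerator $B$ has degree at most 3. The filter then splits into two second-order sections whose outputs are summed:

$$
H(z) = \frac{B(z)}{A_1(z)\,A_2(z)} = \frac{B_1(z)}{A_1(z)} + \frac{B_2(z)}{A_2(z)},
\qquad A_i(z) = 1 + a_{i,1} z^{-1} + a_{i,2} z^{-2},
\qquad B_i(z) = b_{i,0} + b_{i,1} z^{-1}
$$

Each section has only two feedback taps. Low-order sections are generally less sensitive to coefficient rounding than a single high-order recursion, although whether that is enough in single precision at high sigma is still the open question.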

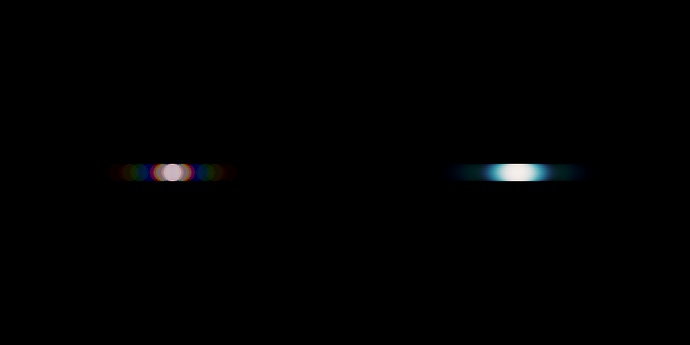

As a compromise, we could use a direct separable convolution, which is faster anyway because we have a maximum blur radius of 16 pixels. The problem is that it gives a different result, as shown in the following figure. But that difference might be unnoticeable in most cases, since the result will be scaled. Left is recursive, right is convolution.
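Here is a sketch of the direct separable convolution compromise. It assumes a Gaussian kernel truncated at the maximum radius of 16 pixels and clamp-to-edge boundaries. Both are assumptions, and the existing node's exact weights may differ. Each pass reads at most 2 × 16 + 1 = 33 samples per pixel, so the cost is bounded by the radius.

```python
import math


def gaussian_kernel(radius, sigma):
    """Normalized 1D Gaussian weights for offsets -radius..radius."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def convolve_row(row, kernel):
    """Direct 1D convolution with clamp-to-edge boundary handling."""
    radius = len(kernel) // 2
    n = len(row)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)  # clamp the sample index into the row
            acc += w * row[j]
        out.append(acc)
    return out


def separable_blur(image, radius, sigma):
    """Blur a 2D list of floats: a horizontal pass, then a vertical pass."""
    kernel = gaussian_kernel(radius, sigma)
    horizontal = [convolve_row(row, kernel) for row in image]
    # Transpose, blur each column as a row, then transpose back.
    columns = [convolve_row(list(col), kernel) for col in zip(*horizontal)]
    return [list(row) for row in zip(*columns)]


image = [[0.0] * 41 for _ in range(41)]
image[20][20] = 1.0
blurred = separable_blur(image, radius=16, sigma=5.0)
```

Because the Gaussian is separable, two 33-tap passes replace one 33 × 33 = 1089-tap 2D pass. Every output pixel is also independent of its neighbours, which maps well to GPU threads.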

Note that the recursive implementation is the less accurate one here. And since the recursive implementation we end up writing will likely be more accurate than the existing one, that difference might be intrinsic to the CPU compositor versus the realtime compositor anyway.

Simple Star and Streaks

The Simple Star is mostly a special case of the Streaks operation. Both operations work by blurring the highlights in multiple directions and adding all of the blur results. But the blur is implemented as a recursive filter, with the fade as a parameter, that is applied up to 5 times for each streak. This has serious performance implications due to the extensive use of global memory, the interdependence between pixels along rows and columns, and the many shader dispatches. So I decided that it can’t be implemented in its current form, as it would perform poorly.

One possible solution is to measure, on the CPU, the impulse response of the operation to a delta function whose value is the maximum highlight value, then perform a direct convolution. But this would also be slow when the image contains very high highlight values. Another solution is to somehow derive a recursive filter from the fade value using a closed-form expression. But I am not sure this is possible, and I am not currently knowledgeable enough to do it.
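Here is a 1D sketch of the impulse-response idea. It assumes the operation is linear and shift-invariant. `fade_streak` is a hypothetical stand-in, not the actual Streaks code: it applies the one-sided recursion `y[i] = x[i] + fade * y[i - 1]` a few times. The kernel is measured once by feeding a delta whose height is the peak highlight value. Taps are kept until the response drops below a visibility threshold, and the resulting kernel is reused for direct convolution.

```python
def fade_streak(x, fade, passes):
    """Hypothetical stand-in for a fade-based streak: the one-sided recursion
    y[i] = x[i] + fade * y[i - 1], applied `passes` times."""
    y = list(x)
    for _ in range(passes):
        out = []
        prev = 0.0
        for v in y:
            prev = v + fade * prev
            out.append(prev)
        y = out
    return y


def measure_kernel(op, peak, threshold, max_len):
    """Feed a delta of height `peak` through `op` and keep taps until the
    response drops below `threshold`. Returns weights for a unit impulse."""
    delta = [0.0] * max_len
    delta[0] = peak
    taps = []
    for v in op(delta):
        if v < threshold:
            break
        taps.append(v / peak)
    return taps


def convolve_causal(x, taps):
    """Direct one-sided convolution: y[i] = sum over k of taps[k] * x[i - k]."""
    return [
        sum(t * x[i - k] for k, t in enumerate(taps) if i - k >= 0)
        for i in range(len(x))
    ]


def streak_op(signal):
    return fade_streak(signal, fade=0.9, passes=2)


taps = measure_kernel(streak_op, peak=10.0, threshold=1e-4, max_len=512)
signal = [0.0] * 64
signal[10] = 10.0
direct = convolve_causal(signal, taps)
```

The kernel decays roughly like a power of the fade. A brighter peak therefore stays above the threshold for more taps, so the convolution gets longer as the highlight values grow, which is the slowdown described above.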

A compromise would be to try to replicate the result of the operation using another technique, like the Deriche filter mentioned before, which I investigated and started to implement. But I realized that this would not be possible for certain configurations, for instance when color modulation is in play. I thought that would just mean different blur radii for different channels, but it turned out to be rather different and more discrete, as shown in the following figure. Left is the existing color modulation, right is the color modulation I implemented.

So that compromise might not be feasible after all.

Fog Glow

The Fog Glow operation is very simple in principle: the highlights are just convolved with a simple filter. As far as I can tell, the origin of that particular filter is unknown, and it seems to have been chosen by visual judgement. The filter can be up to 512 pixels in size and is inseparable, so direct convolution is computationally infeasible, and it can’t be approximated reliably using a recursive filter.
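To put a number on that, assume a 1920x1080 image and the maximum 512x512 kernel. An inseparable kernel needs one multiply-add per kernel tap per pixel:

$$
\underbrace{1920 \times 1080}_{2\,073\,600\ \text{pixels}} \times \underbrace{512 \times 512}_{262\,144\ \text{taps}} \approx 5.4 \times 10^{11}\ \text{multiply-adds per frame}
$$

A separable kernel of the same size would need only $2\,073\,600 \times (512 + 512) \approx 2.1 \times 10^{9}$, which is why inseparability is the deciding factor here.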

So the only feasible way to implement it is through frequency-domain multiplication using an FFT/IFFT implementation. I still haven’t looked into this in detail, so not much can be said about it, except that it will take some time, as an FFT implementation is hard to get right. Perhaps the best option is to port a library like vkFFT to our GPU module, but this hasn’t been considered yet.
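Here is a minimal 1D sketch of convolution by frequency-domain multiplication, using a textbook recursive radix-2 Cooley-Tukey FFT in pure Python. It is not vkFFT or any GPU code. For a 2D image, the same transform would be applied along the rows and then the columns. Each transform costs O(N log N) regardless of the kernel size, which is what makes a 512-pixel kernel tractable.

```python
import cmath


def fft(values, invert=False):
    """Recursive radix-2 Cooley-Tukey FFT; len(values) must be a power of two.
    With invert=True it computes the inverse transform without the 1/n factor."""
    n = len(values)
    if n == 1:
        return list(values)
    even = fft(values[0::2], invert)
    odd = fft(values[1::2], invert)
    sign = 1 if invert else -1
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def fft_convolve(signal, kernel):
    """Linear convolution via pointwise multiplication of spectra.
    Inputs are zero-padded to a power of two >= len(signal) + len(kernel) - 1,
    so the circular convolution computed by the FFT does not wrap around."""
    size = len(signal) + len(kernel) - 1
    n = 1
    while n < size:
        n *= 2
    a = fft([complex(v) for v in signal] + [0j] * (n - len(signal)))
    b = fft([complex(v) for v in kernel] + [0j] * (n - len(kernel)))
    product = [x * y for x, y in zip(a, b)]
    result = fft(product, invert=True)
    return [(v / n).real for v in result[:size]]


def direct_convolve(signal, kernel):
    """Reference O(N * M) linear convolution for comparison."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, s in enumerate(signal):
        for j, k in enumerate(kernel):
            out[i + j] += s * k
    return out
```

The zero padding matters: without it, the tail of the kernel wraps around and bleeds onto the opposite edge of the image.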

It’s really nice to get such insights, thanks for the update. I understand that you’re aiming for faithful backwards compatibility, but if this proves too challenging, as a user I wouldn’t care if these effects were re-imagined. They’re pretty arbitrary on an artistic level anyway, so maybe try changing the design altogether. Cheers,

Hadrien

Couldn’t agree more, keeping the old ones is important but adding “new” effects gives new opportunities.

I’d go as far as saying many of the old implementations are not that great to begin with. So if changing them to something potentially better breaks backwards compatibility, frankly, I’m all for it!

Have you considered doing the convolutions in FFT space instead?

We commonly use FFT multiplication instead of a regular spatial convolution filter when applying lens kernels in comp (Nuke) to simulate lens effects more accurately. It’s orders of magnitude faster doing it in Fourier space than in the image domain.

The last paragraph should answer this.

Right-o! I completely failed to read the entire post. As soon as someone starts talking about large convolution kernels, I preach FFT.

Just discovered the real-time compositor, and I was wondering: would this be a good, cheap way to create a distance fog effect in the 3D viewport by using the Mist or ZDepth pass? And is there any ETA on when those passes will become available?

I can only underline every word here, as well as the two posts above.

Go on, make new better and faster glares! You have my blessing

(For context, I already started working with a DIY fog glow filter made by packing a few Gaussian blur filters into a node group, and it’s already easier on the eyes than the regular Glare node solution…)

So far I love it, but I need the Z depth pass and AOVs to really rock it.

The Pixelate node does not work properly.

A simple setup that pixelates the image (e.g. for pixel art workflows) results in:

Whereas the rendered result looks fine.

Should be fixed in rB1a9480cf25d18f15f154d4ac3ec9720ca9dfb2f7.

The situation is not ideal, because the last Scale node is the one that should dictate the interpolation, but unlike the Transform node it has no option for that. So I changed the defaults around to make it work for the common use cases. But the ideal solution would be to add an interpolation option to the Scale node, much like the one the Transform node has.
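Here is a 1D sketch of why the interpolation of the final upscale matters for pixelation. It assumes pixelation means downscaling by block averaging and then scaling back up. The helper names are hypothetical, not compositor API. Nearest-neighbour upscaling reproduces hard-edged blocks, while linear interpolation turns each block edge into a ramp.

```python
def downscale(row, factor):
    """Average each block of `factor` samples into one sample.
    Assumes len(row) is a multiple of factor."""
    return [sum(row[i:i + factor]) / factor for i in range(0, len(row), factor)]


def upscale_nearest(row, factor):
    """Repeat each sample `factor` times (nearest-neighbour)."""
    return [v for v in row for _ in range(factor)]


def upscale_linear(row, factor):
    """Linearly interpolate between sample centers, clamping at the ends."""
    n = len(row)
    out = []
    for i in range(n * factor):
        # Center of output pixel i expressed in input sample coordinates.
        x = (i + 0.5) / factor - 0.5
        x = min(max(x, 0.0), n - 1.0)
        left = int(x)
        right = min(left + 1, n - 1)
        t = x - left
        out.append(row[left] * (1.0 - t) + row[right] * t)
    return out


row = [0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0]
small = downscale(row, 4)              # [0.0, 1.0]
nearest = upscale_nearest(small, 4)    # [0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0]
linear = upscale_linear(small, 4)      # [0.0, 0.0, 0.125, 0.375, 0.625, 0.875, 1.0, 1.0]
```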

The Mist pass will probably not be supported any time soon, as this requires bigger changes to the render engines and the draw manager. But I am looking into supporting the Z pass, at least in a limited capacity.

Ok, could you briefly explain z path or link to any example articles? Thanks.

Basically, just looking for an artistic way of getting the distance to appear atmospheric. A cheap alternative to volumes.

Path was a typo, I just meant the ZDepth pass. Corrected.
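For the distance-fog idea, a common approach once a Z pass is available is an exponential fog factor per pixel, used to mix the image color toward a fog color. Here is a sketch that assumes the Z pass holds linear camera-space depth. `density` and `fog_color` are hypothetical user-chosen parameters. In a node setup, this would roughly correspond to a Math node chain driving a Mix node's factor.

```python
import math


def fog_factor(depth, density):
    """Exponential fog: 0 at the camera, approaching 1 with distance."""
    return 1.0 - math.exp(-density * depth)


def apply_fog(color, depth, fog_color, density):
    """Mix an RGB color toward fog_color by the depth-based fog factor."""
    f = fog_factor(depth, density)
    return tuple(c * (1.0 - f) + fc * f for c, fc in zip(color, fog_color))


pixel = (0.8, 0.3, 0.1)
fog = (0.6, 0.7, 0.8)
near = apply_fog(pixel, depth=1.0, fog_color=fog, density=0.05)
far = apply_fog(pixel, depth=200.0, fog_color=fog, density=0.05)
```

Raising `density` pulls the fog closer to the camera. Because the factor never quite reaches 1, distant objects keep a faint trace of their own color, which often reads as more atmospheric than a hard cutoff.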