Ideally, I think the node tree that creates the displacement output should be completely separate from the node tree that creates the surface shading output, for several reasons:

- The input nodes for the displacement node tree should be limited to types that make sense in that setting, such as position, normal, UV and true normal. Other inputs, like Light Path or Ambient Occlusion, don’t make much sense in a displacement node tree.

- It would separate what, for example, the “position” input means: for the displacement node tree it is the original, undisplaced position, while for the shading node tree it is the actual (displaced) position. The shading node tree could also expose both the original and the displaced variants as inputs (for example for the normal), with the displaced ones as the default.

- It would make the user interface easier to understand by making it clear that the displacement tree is applied first (separately) and that the shading tree is then executed on the displaced geometry.
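
A minimal sketch of the proposed two-stage evaluation, written in Python for readability. All names here (`DisplacementInputs`, `ShadingInputs`, `evaluate_material`) are hypothetical and not part of Blender's API. Node trees are modeled as plain functions, and, as a simplifying assumption, the displaced normal is not recomputed (that would need neighboring samples), so only the position is exposed in both its original and displaced variants.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]
Vec2 = Tuple[float, float]


@dataclass(frozen=True)
class DisplacementInputs:
    # Only geometry inputs that make sense before displacement;
    # there is deliberately no light path or ambient occlusion field.
    position: Vec3  # original, undisplaced position
    normal: Vec3    # original normal
    uv: Vec2


@dataclass(frozen=True)
class ShadingInputs:
    position: Vec3           # displaced position (the default)
    original_position: Vec3  # optional extra input for the shading tree
    normal: Vec3             # assumption: not recomputed after displacement here
    uv: Vec2


DisplacementTree = Callable[[DisplacementInputs], float]
ShadingTree = Callable[[ShadingInputs], Vec3]


def evaluate_material(position: Vec3, normal: Vec3, uv: Vec2,
                      displacement_tree: DisplacementTree,
                      shading_tree: ShadingTree) -> Vec3:
    # Stage 1: the displacement tree runs first, on the undisplaced geometry.
    height = displacement_tree(DisplacementInputs(position, normal, uv))
    displaced = tuple(p + height * n for p, n in zip(position, normal))
    # Stage 2: the shading tree runs on the displaced geometry.
    return shading_tree(ShadingInputs(displaced, position, normal, uv))
```

Because each tree gets its own input type, the restriction of displacement inputs is enforced by construction, and the order (displacement first, then shading on the result) is explicit in the code.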

An example where the current mixture of both node trees (shading and displacement) gets really confusing:

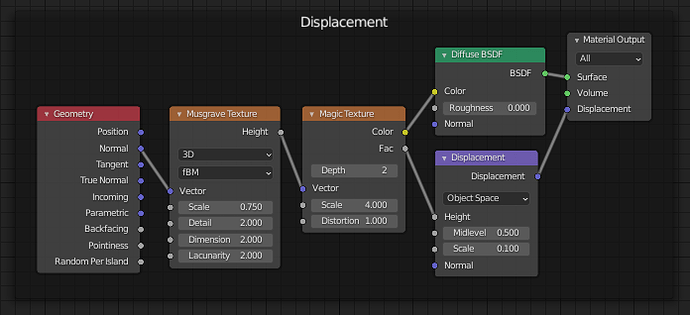

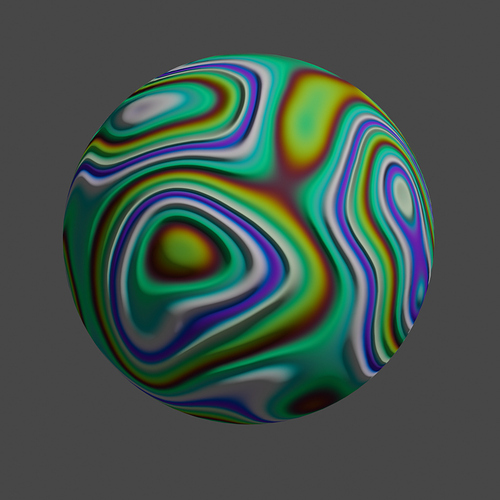

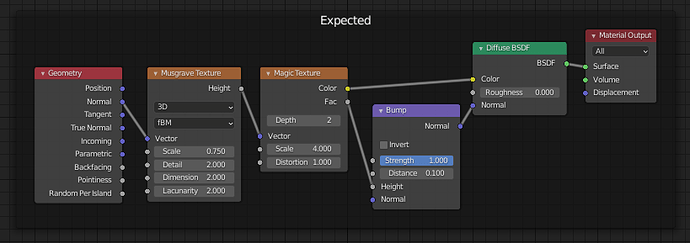

This is a simple setup that creates color and displacement waves based on the current normal of the geometry. Because Color and Height come out of the same Magic Texture instance, one would intuitively expect the bumps and the color bands to be aligned, giving a result like this:

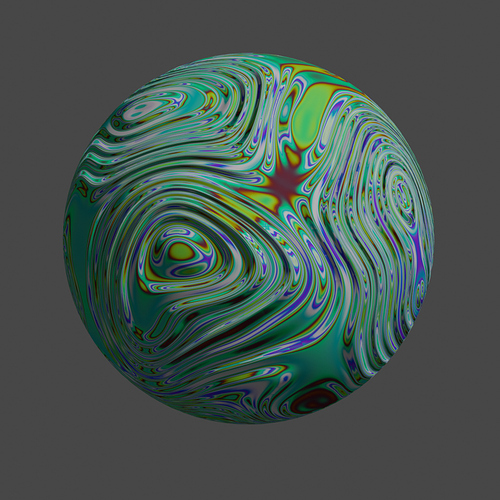

But what you actually get from the above node tree is the following:

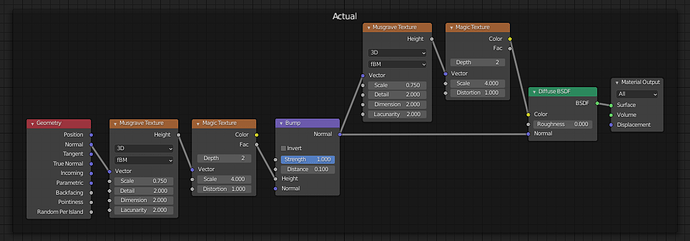

Internally the above node tree gets converted to something like

which produces the same rendered image as the displacement node setup (in Eevee). Note that there are now suddenly two instances of the Magic Texture node with different inputs: one creates the Color and the other the Height for the bump mapping. This also explains why the color bands have a different frequency than the bump bands.
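
The effect can be reproduced with a toy 1D model. Assumptions: a unit circle where the normal is described by its angle, a simple sine wave standing in for the Magic Texture (its real formula is different), and bump mapping modeled as tilting the normal against the height gradient. The height is evaluated on the original normal, while the color is evaluated on the bumped normal, mirroring the two texture instances in the converted tree. The function names are hypothetical.

```python
import math


def toy_texture(angle: float, frequency: float = 8.0) -> float:
    # Stand-in for the Magic Texture: a periodic function of the normal direction.
    return 0.5 + 0.5 * math.sin(frequency * angle)


def bumped_angle(angle: float, strength: float, eps: float = 1e-4) -> float:
    # Bump mapping tilts the normal against the height gradient.
    # The gradient is taken by central differences on the *original* normal.
    slope = (toy_texture(angle + eps) - toy_texture(angle - eps)) / (2.0 * eps)
    return angle - math.atan(strength * slope)


def shade(angle: float, strength: float = 0.1) -> tuple:
    height = toy_texture(angle)                         # instance 1: original normal
    color = toy_texture(bumped_angle(angle, strength))  # instance 2: bumped normal
    return height, color
```

Except at the crests and troughs, where the slope is zero, the color sampled through the bumped normal differs from the height at the same point, so the two sets of bands no longer line up.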

The first rendered image above (what I would have expected as output) was generated with the following (manually created) node tree:

Here the bumps and the color bands are generated from a single instance of the Magic Texture node, so they are aligned.

With the automatic bump mapping, this problem occurs when the (default) normal input is used in the displacement node tree. If automatic parallax occlusion mapping were also generated from the displacement node tree, I would expect things to get even more confusing, for example when the position input is used in both the displacement node tree and the shading node tree, which is not uncommon for procedural materials. In the shading node tree, one might want the displaced position for some operations (for example when checking light visibility and similar things), but for others (procedural coloring) one might want the shifted original position (POM-shifted but not displaced), so that the colors are aligned with the displacements generated procedurally from the original position.

Is there perhaps a good reason for combining both node trees (displacement and shading) into one that I’m missing?

Still, I think that an implementation of parallax occlusion mapping based on a displacement node tree has some advantages. It would allow the height map to be specified with a (more complex) node tree instead of a single texture. This could also be achieved with a user-exposed POM node type together with a (still missing) system of function-typed node sockets/connections and a way to define such functions with a node tree. Besides this, a solution based on the displacement node tree (which limits POM effects to a single instance) might also help when adding support for shell mapping (as in “Interactive Smooth and Curved Shell Mapping”). Shell mapping requires generating shell geometry, which might be simpler to integrate into the user interface as a per-material setup rather than a per-node one. Obviously, you can’t generate shell geometry for every POM node used in a material.

I have looked into compiling the displacement node tree into a separate GLSL function inside the Eevee codegen (which the “auto-magical” solution would require), but without much success so far. There are obviously ways to do it, but I would prefer a solution that does not require rewriting large parts of the existing GLSL code generator.
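
One generic way to do this is to collect only the nodes reachable from the Displacement output and emit them, dependencies first, into their own function. The sketch below does that for a hypothetical miniature graph: `Node`, `reachable_in_order`, `emit_function` and the GLSL-like output are illustrative assumptions, not Eevee's code generator, and every value is a float to keep it short.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(eq=False)
class Node:
    name: str
    expr: str  # GLSL-like expression; {0}, {1}, ... are replaced by input names
    inputs: List["Node"] = field(default_factory=list)


def reachable_in_order(output: Node) -> List[Node]:
    # Depth-first post-order: each node appears once, after all of its inputs.
    order: List[Node] = []
    seen = set()

    def visit(node: Node) -> None:
        if id(node) in seen:
            return
        seen.add(id(node))
        for dep in node.inputs:
            visit(dep)
        order.append(node)

    visit(output)
    return order


def emit_function(fn_name: str, output: Node) -> str:
    # Emit the displacement subgraph as a standalone GLSL-like function.
    lines = [f"float {fn_name}(vec3 position, vec3 normal, vec2 uv)", "{"]
    for node in reachable_in_order(output):
        args = [dep.name for dep in node.inputs]
        lines.append(f"  float {node.name} = {node.expr.format(*args)};")
    lines.append(f"  return {output.name};")
    lines.append("}")
    return "\n".join(lines)
```

A node used only by the shading tree (for example a color branch hanging off the same texture) is never visited, and a node shared by several consumers is emitted only once.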

Regarding the missing parts for a soft implementation of POM as a node tree, it would require the following:

- A new ddx/ddy node, or alternatively some kind of node that generates a tangent basis on the fly (basically outputting the relevant parts that the POM code generates using ddx/ddy). A ddx/ddy node has limitations that come from how these values are calculated (per 2x2 pixel block; the derivative approximation is non-symmetric and alternating). Supporting it in Cycles (on arbitrary input) requires duplicating the node tree, which can be expensive (but the current Cycles POM code has the same problem). Exposing (and supporting long term) such low-level operations might go against Blender's node tree design principles. Chained use of ddx/ddy (for higher-order derivatives) might not work in GLSL, or at least produce unexpected results.

- Pixel depth offset support, to actually modify the depth of the fragment that gets written to and compared against the depth buffer. As outlined in the first post, this might also have other applications (blending), but it can’t really be supported in Cycles. It would also need some integration into the BSDF nodes in order to affect lighting.

- Some kind of iteration support in the node tree. Currently, implementing POM in a node tree basically requires completely unrolling the iteration loop. For higher iteration counts this can produce long shader code (slow to compile and possibly not ideal for execution). Ideally, iteration support would also allow aborting the iteration conditionally (for performance reasons).

- Some way to control the mipmap level used when sampling the height map texture. The automatic ddx/ddy-based mipmap selection does not work very well when the UV is iterated in POM. As a result, a mipmap level with too high a resolution gets sampled during the iteration, and performance becomes really bad (for higher-resolution height maps).

- Ideally, some way to change the height map in a POM node setup (provide it as a parameter) without having to open the node group manually and replace the right part inside it.
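
For reference, the core of the POM step that such a node setup would have to express can be sketched as follows. This is the common linear-search-plus-interpolation variant, not necessarily the exact algorithm used in the Blender implementation discussed here. Under these assumptions, the height source is passed in as a function (the kind of function-typed input discussed above), the mipmap level is computed once from the original UV derivatives instead of per iteration, and the loop exits as soon as the ray drops below the surface. `window_depth` shows the standard OpenGL depth mapping that a pixel depth offset would need once the hit point has been transformed to view space (that transform is not shown). All names are hypothetical.

```python
import math
from typing import Callable, Tuple

# Hypothetical height source: (u, v, lod) -> height in [0, 1].
HeightFn = Callable[[float, float, float], float]


def mip_level(duv_dx: Tuple[float, float], duv_dy: Tuple[float, float],
              tex_size: Tuple[int, int]) -> float:
    # Isotropic LOD from the screen-space UV footprint, measured in texels.
    fx = math.hypot(duv_dx[0] * tex_size[0], duv_dx[1] * tex_size[1])
    fy = math.hypot(duv_dy[0] * tex_size[0], duv_dy[1] * tex_size[1])
    return max(0.0, math.log2(max(fx, fy, 1e-12)))


def pom_trace(height: HeightFn, uv: Tuple[float, float],
              view_ts: Tuple[float, float, float], depth_scale: float,
              lod: float, max_steps: int = 32):
    """Return (shifted_uv, hit_depth, steps_taken).

    view_ts points from the surface toward the eye in tangent space (z > 0).
    Depth is 1 - height, so height 1 is the top surface.
    """
    vx, vy, vz = view_ts
    layer = 1.0 / max_steps
    du = vx / vz * depth_scale * layer
    dv = vy / vz * depth_scale * layer
    u, v = uv
    ray_depth = 0.0
    surf_depth = 1.0 - height(u, v, lod)
    steps = 0
    # Linear search with early exit once the ray is below the surface.
    while ray_depth < surf_depth and steps < max_steps:
        u, v = u - du, v - dv
        ray_depth += layer
        surf_depth = 1.0 - height(u, v, lod)
        steps += 1
    if steps == 0:
        return (u, v), 0.0, 0
    # Interpolate between the last sample above and the first below the surface.
    pu, pv = u + du, v + dv
    after = surf_depth - ray_depth
    before = (1.0 - height(pu, pv, lod)) - (ray_depth - layer)
    denom = after - before
    w = after / denom if denom != 0.0 else 0.0
    hit_uv = (pu * w + u * (1.0 - w), pv * w + v * (1.0 - w))
    hit_depth = (ray_depth - layer) * w + ray_depth * (1.0 - w)
    return hit_uv, hit_depth, steps


def window_depth(view_z: float, near: float, far: float) -> float:
    # OpenGL perspective depth in [0, 1] for a view-space z (negative in front).
    z_ndc = (far + near) / (far - near) + 2.0 * far * near / ((far - near) * view_z)
    return 0.5 * z_ndc + 0.5
```

In an unrolled node tree, all `max_steps` iterations are always evaluated; the `while` condition is exactly the early abort that node trees currently cannot express, and the fixed `lod` argument is the kind of explicit mipmap control mentioned in the list above.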

If you only use (scaled) world coordinates as UV, you can get away without the ddx/ddy parts. If a UV map is used where U and V are (mostly) orthogonal, you can get good enough tangent vectors from the Normal Map node (with constant colors as input), so in this case you can also get away without ddx/ddy, at least in Eevee. In Cycles, the Normal Map node produces “corrected” normals, which are basically just wrong and therefore not suitable for generating tangents. I started by creating a node tree setup for POM, but the limitations are annoying. That’s why I wrote the code to try and implement it directly in Blender.
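
The two tangent sources mentioned here can be sketched in a few lines of Python with plain tuples; all function names are hypothetical. `quad_ddx` mimics how a fine `dFdx` is formed within a 2x2 pixel quad: both pixels of a pair get the same difference, so it is a forward difference for one pixel and a backward difference for the other. `cotangent_frame` is a widely used construction of a tangent basis from screen-space derivatives of position and UV; it is one common way POM code derives tangents without precomputed ones, not necessarily what the Blender patch does. `normal_map` is the generic tangent-space decode, showing why a constant color of (1, 0.5, 0.5) yields the tangent itself; Blender's Normal Map node additionally applies its strength and, in Cycles, the correction mentioned above.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Vec2 = Tuple[float, float]


def quad_ddx(row: List[float], x: int) -> float:
    # Fine dFdx within a 2x2 quad: pixels 2k and 2k+1 share one difference.
    left = 2 * (x // 2)
    return row[left + 1] - row[left]


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def cotangent_frame(n: Vec3, dp_dx: Vec3, dp_dy: Vec3,
                    duv_dx: Vec2, duv_dy: Vec2) -> Tuple[Vec3, Vec3]:
    # Vectors along the surface gradients of u and v; for orthogonal UVs
    # these point along the usual tangent and bitangent.
    dp_dy_perp = cross(dp_dy, n)
    dp_dx_perp = cross(n, dp_dx)
    t = add(scale(dp_dy_perp, duv_dx[0]), scale(dp_dx_perp, duv_dy[0]))
    b = add(scale(dp_dy_perp, duv_dx[1]), scale(dp_dx_perp, duv_dy[1]))
    # Scale both by the same factor so their relative lengths are kept.
    inv = 1.0 / math.sqrt(max(dot(t, t), dot(b, b)))
    return scale(t, inv), scale(b, inv)


def normal_map(color: Vec3, t: Vec3, b: Vec3, n: Vec3) -> Vec3:
    # Generic tangent-space decode: [0, 1] color -> [-1, 1] vector -> world space.
    x, y, z = (2.0 * c - 1.0 for c in color)
    w = add(add(scale(t, x), scale(b, y)), scale(n, z))
    return scale(w, 1.0 / math.sqrt(dot(w, w)))
```

For f(x) = x² sampled at x = 0..3, the exact derivatives are 0, 2, 4 and 6, while both pixels of each pair get 1 and 5 respectively, which is the asymmetric, alternating behavior described above.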