There has been interest in supporting parallax occlusion mapping (POM) in Blender for some time. See, for example, https://developer.blender.org/T68477, the discussion on Right-Click Select (Blender Community), and the forum thread “Parralax occlusion Mapping in development?”.

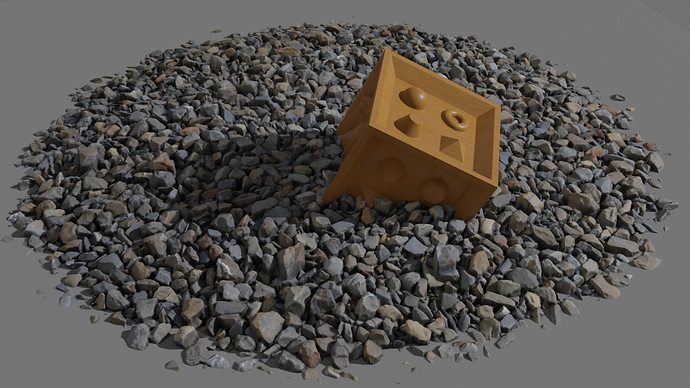

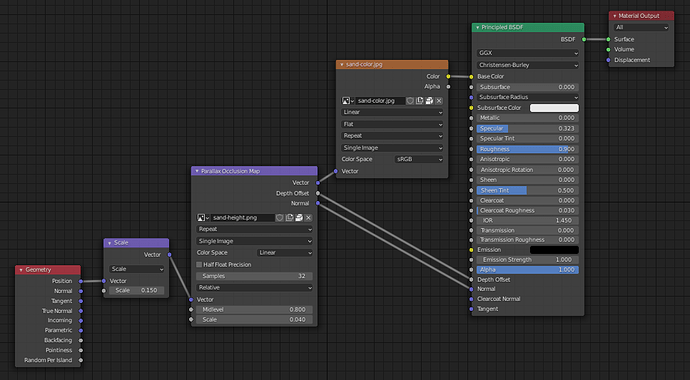

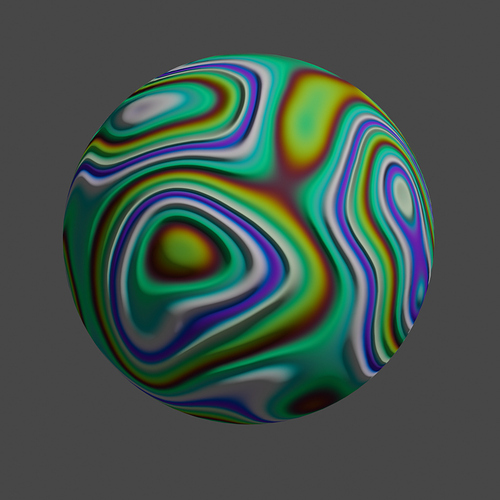

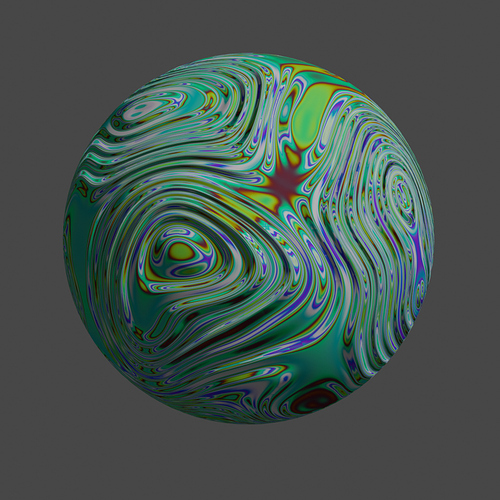

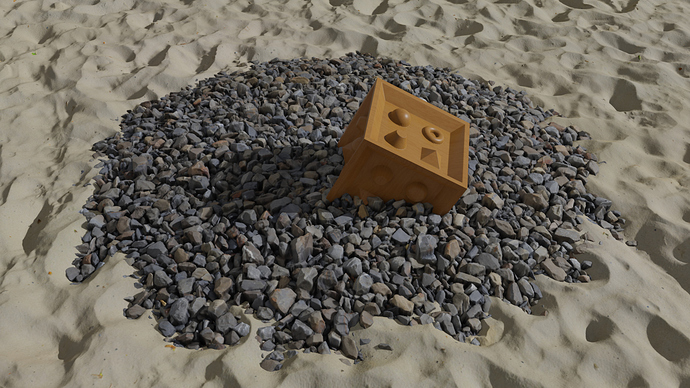

I have developed code that adds a new shader node type “Parallax Occlusion Map”, a new “Depth Offset” input socket on the Principled BSDF shader node, and a new shader node type “Depth Offset”. The Depth Offset shader node and input socket implement Pixel Depth Offset (PDO) support in Eevee. The images above show examples rendered with this implementation.

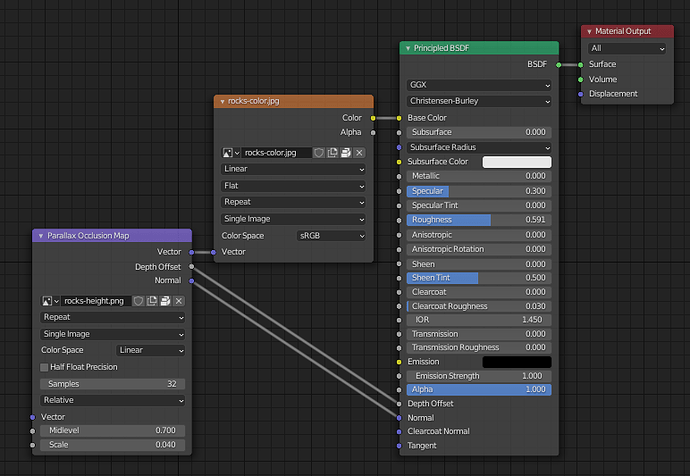

Parallax Occlusion Map shader node

The Parallax Occlusion Map shader node maps input UV texture coordinates to coordinates corrected for parallax occlusion by the height map. The node is implemented for Eevee and Cycles (SVM and OSL).

The node references an image that is used as the height map. Some of the common image properties, such as the extension mode and color space, are exposed. For 16-bit height maps, there is also an option to disable the half-float optimization, which otherwise limits the precision to effectively 10 bits.
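To put the half-float limitation into numbers, the following Python sketch rounds every level of a 16-bit height map (normalized to [0, 1]) through IEEE 754 half precision using the standard `struct` module's `'e'` format. It only illustrates the precision loss in general and assumes the height values are handled as normalized floats; it does not reproduce Blender's image loading code.

```python
import struct

def to_half(x: float) -> float:
    """Round a float to IEEE 754 half precision and back (struct format 'e')."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

# All levels of a 16-bit height map, normalized to [0, 1].
values = [i / 65535 for i in range(65536)]
halves = [to_half(v) for v in values]

# How many distinct levels survive, and the worst rounding error.
distinct = len(set(halves))
max_err = max(abs(h - v) for h, v in zip(halves, values))

print(f"distinct half-float levels: {distinct} of {len(values)}")
print(f"max rounding error: {max_err:.3e} (about {max_err * 65535:.1f} 16-bit steps)")
```

A half float has a 10-bit mantissa, so values just below 1.0 are spaced 2^-11 apart and the rounding error there can reach 2^-12, roughly 16 steps of the original 16-bit data.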

The “Samples” property defines the number of linear search samples taken along the view ray from the top of the height map to the bottom. Internally, an additional fixed 8 binary search steps refine the intersection with the height map.
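As a rough illustration of this linear-plus-binary search, here is a minimal Python sketch. It assumes the ray has already been transformed into UV/height space: it enters the volume at `uv_top` at height 1 and has accumulated a UV offset of `ray_uv` by the time it reaches height 0 (for a tangent-space view direction `d`, that offset would be about `d.xy / -d.z` times the height scale in UV units). The function and parameter names are hypothetical and not taken from the patch.

```python
from typing import Callable, Tuple

HeightFn = Callable[[float, float], float]  # (u, v) -> height in [0, 1]

def pom_search(height: HeightFn, uv_top: Tuple[float, float],
               ray_uv: Tuple[float, float], samples: int,
               binary_steps: int = 8) -> Tuple[float, float, float]:
    """Find where a view ray enters the height field.

    The ray starts at uv_top at height 1 (top of the volume) and reaches
    uv_top + ray_uv at height 0 (bottom); t in [0, 1] runs along it.
    Returns (u, v, h) at the intersection.
    """
    if samples < 1:
        raise ValueError("samples must be at least 1")

    def ray_at(t: float) -> Tuple[float, float, float]:
        return (uv_top[0] + t * ray_uv[0], uv_top[1] + t * ray_uv[1], 1.0 - t)

    def below_surface(t: float) -> bool:
        u, v, ray_h = ray_at(t)
        return ray_h <= height(u, v)

    # Linear search from the top (t = 0) to the bottom (t = 1) in equal steps.
    # The last sample (ray height 0) always counts as a hit for heights >= 0.
    t_above, t_below = 0.0, 1.0
    for i in range(samples + 1):
        t = i / samples
        if below_surface(t):
            t_below = t
            break
        t_above = t

    # Binary search: halve the bracketing interval a fixed number of times.
    for _ in range(binary_steps):
        t_mid = 0.5 * (t_above + t_below)
        if below_surface(t_mid):
            t_below = t_mid
        else:
            t_above = t_mid

    return ray_at(t_below)
```

For example, `pom_search(lambda u, v: u, (0.0, 0.0), (1.0, 0.0), samples=3)` brackets the crossing at t = 0.5 between the second and third linear samples, and the 8 binary steps narrow that bracket to 1/768 of the ray.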

A property selects whether the “Scale” of the height map is given in absolute world units (“Absolute” option) or relative to the dimensions of the texture (“Relative” option).

The “Vector” input socket defines the UV coordinates used to map the height map onto the actual geometry. If the socket is left unconnected, it automatically picks up the default UV coordinates (like the Image Texture shader node).

In the “Absolute” scale mode, the “Midlevel” and “Scale” input sockets behave the same way as on the “Displacement” shader node. “Midlevel” is the height (as a value between 0 and 1) that is placed at the location of the original surface.

The “Vector” output socket provides the UV coordinates where the view ray intersects the height map (the first two components) and the height (as a value between 0 and 1) at which it is intersected (the third component).

The “Depth Offset” output socket provides the distance from the surface of the original geometry to the point where the height map is intersected, projected onto the view direction vector.
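If the hit point is taken to lie on the view ray, the Depth Offset value follows from the intersection height alone. The Python sketch below assumes the “Absolute” scale mode, the Displacement-node convention `(height - midlevel) * scale` for the signed distance from the original surface, and a positive result meaning “further from the camera”; the sign convention and the function name are assumptions for illustration, not taken from the patch.

```python
from typing import Sequence

def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))

def depth_offset(normal: Sequence[float], view_dir: Sequence[float],
                 height: float, midlevel: float, scale: float) -> float:
    """Distance along the view ray from the original surface point to the hit.

    normal:   unit normal of the original geometry
    view_dir: unit vector from the camera towards the shaded point
    height:   height map value at the intersection, in [0, 1]
    """
    # Signed distance of the hit above (+) or below (-) the original surface.
    displacement = (height - midlevel) * scale
    # The hit is P + t * view_dir with dot(normal, t * view_dir) == displacement.
    n_dot_v = dot(normal, view_dir)  # negative for front-facing surfaces
    t = displacement / n_dot_v
    # view_dir has unit length, so projecting (hit - P) onto it gives t again.
    return t
```

At grazing angles `n_dot_v` approaches zero, so the same height difference produces a much larger depth offset than at normal incidence.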

The “Normal” output socket provides a new world-space normal that is perpendicular to the height map at the intersection point.
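A normal to the height field can be estimated from the height map gradient at the intersection point. This minimal Python sketch works in tangent space, assumes an orthonormal tangent frame in which one UV unit equals one world unit, and uses central differences with a hypothetical step `eps`; the world-space normal would then follow by transforming the result with the tangent frame (T, B, N).

```python
from typing import Callable, Tuple

def heightmap_normal(height: Callable[[float, float], float], u: float, v: float,
                     scale: float, eps: float = 1e-3) -> Tuple[float, float, float]:
    """Tangent-space unit normal of the height field z = scale * height(u, v).

    Assumes an orthonormal tangent frame where one UV unit equals one world unit.
    """
    # Central differences approximate the height map gradient.
    dh_du = (height(u + eps, v) - height(u - eps, v)) / (2.0 * eps)
    dh_dv = (height(u, v + eps) - height(u, v - eps)) / (2.0 * eps)
    # The gradient of F(u, v, z) = z - scale * height(u, v) is normal to F = 0.
    nx, ny, nz = -scale * dh_du, -scale * dh_dv, 1.0
    length = (nx * nx + ny * ny + nz * nz) ** 0.5
    return (nx / length, ny / length, nz / length)
```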

The POM node accepts an arbitrary UV parameterization of the surface as input; the required tangent vectors are generated on the fly internally using derivatives (dFdx/dFdy in Eevee and node tree duplication in Cycles). The implementation is based on the Surface Gradient Based Bump Mapping Framework.
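To see why screen-space derivatives are enough to obtain tangent vectors for an arbitrary UV parameterization: per pixel, the changes of position and UV in x and y are related by the 2x2 Jacobian of the UV mapping, which can be inverted to give dP/du and dP/dv. The Python sketch below shows that classic derivative solve with hypothetical names; it is not the surface gradient formulation the patch is based on, and in a shader the inputs would come from dFdx/dFdy.

```python
from typing import Sequence, Tuple

def tangents_from_derivatives(dp_dx: Sequence[float], dp_dy: Sequence[float],
                              duv_dx: Sequence[float], duv_dy: Sequence[float]
                              ) -> Tuple[Tuple[float, ...], Tuple[float, ...]]:
    """Solve for dP/du and dP/dv from per-pixel screen-space derivatives.

    dp_dx, dp_dy:   change of world position per pixel step in x and y
    duv_dx, duv_dy: change of (u, v) per pixel step in x and y
    Based on dp_dx = dP/du * du/dx + dP/dv * dv/dx (and likewise for y).
    """
    du_dx, dv_dx = duv_dx
    du_dy, dv_dy = duv_dy
    det = du_dx * dv_dy - dv_dx * du_dy
    if abs(det) < 1e-12:
        raise ValueError("degenerate UV mapping at this pixel")
    inv = 1.0 / det
    # Apply the inverse of the Jacobian [[du_dx, dv_dx], [du_dy, dv_dy]].
    dp_du = tuple((dv_dy * a - dv_dx * b) * inv for a, b in zip(dp_dx, dp_dy))
    dp_dv = tuple((du_dx * b - du_dy * a) * inv for a, b in zip(dp_dx, dp_dy))
    return dp_du, dp_dv
```

For a plane with P = (2u, 3v, 0) and UVs rotated 45 degrees relative to the screen, the solve recovers dP/du = (2, 0, 0) and dP/dv = (0, 3, 0) regardless of the rotation.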

The linear search for the height map intersection point uses randomization to achieve a dithered blend for parts of the geometry that cannot be reliably resolved with the given number of samples (“Samples” property). The number of samples is not adapted to the view direction (as it is in some implementations of POM), to avoid a strong view dependency of search/sampling artifacts (moving layers).
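The exact randomization is not spelled out here. One common way to get a dithered result is to shift all linear-search samples by the same per-pixel random fraction of one step, so neighboring pixels probe different depths. The Python sketch below does this with interleaved gradient noise (Jimenez, 2014) as the per-pixel random source; the choice of noise function and the helper names are assumptions for illustration.

```python
import math
from typing import List

def interleaved_gradient_noise(x: int, y: int) -> float:
    """Per-pixel pseudo-random value in [0, 1) (Jimenez's interleaved gradient noise)."""
    f = 0.06711056 * x + 0.00583715 * y
    f -= math.floor(f)
    g = 52.9829189 * f
    return g - math.floor(g)

def jittered_search_positions(samples: int, jitter: float) -> List[float]:
    """Ray parameters t for the linear search, shifted by a sub-step jitter.

    jitter in [0, 1) moves every sample by the same fraction of one step;
    the bottom of the volume (t = 1) is always tested last.
    """
    return [(i + jitter) / samples for i in range(samples)] + [1.0]
```

A pixel at (px, py) would use `jittered_search_positions(8, interleaved_gradient_noise(px, py))`. Because the step count stays fixed, the noise pattern does not change character with the view angle, which matches the stated goal of avoiding view-dependent layering.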

The height map image is linked in the node itself because Blender does not support sockets/links of type texture or function (mapping a vector to a vector, for example). The POM algorithm needs to sample the height map (function) many times, so duplicating an input node tree for every sample, as the Bump node does, does not seem practical (the Bump node only requires two additional clones of its input node tree). Unfortunately, the internally linked image prevents the use of procedural approaches for generating the height map.

The usual limitations of parallax occlusion mapping apply here as well: the mapping is correct only for flat geometry. For curved geometry, it still “attaches” a flat volume containing the height map at each point, and the visible intersection point is traced through this non-curved volume.

The code currently does not implement other algorithms, such as Prism Parallax Occlusion Mapping or Interactive Smooth and Curved Shell Mapping, that require generating shell geometry.

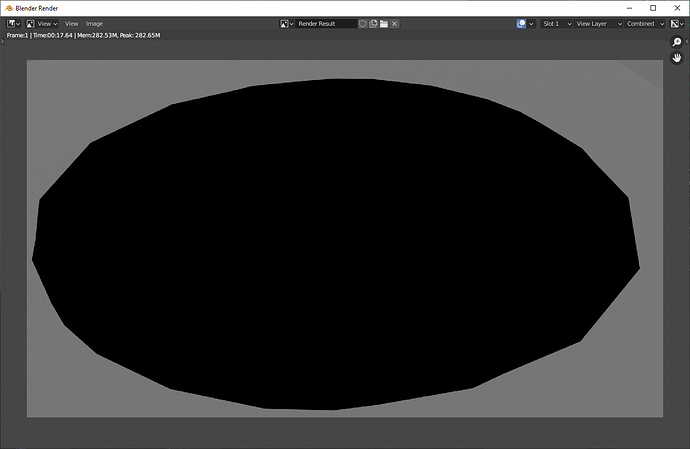

Depth Offset input socket of the Principled BSDF node

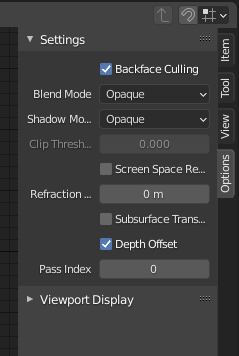

The value provided to this input is used to offset the position of the shaded point along the view ray before light visibility is evaluated. It is also used to offset the final depth buffer value (geometry visibility). The value is the offset vector projected onto the view direction, so it can be connected directly to the Depth Offset output of the POM node. This input only has an effect in Eevee, and only when the “Depth Offset” material option is enabled.
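How an offset along the view ray changes the stored depth value can be sketched with a standard OpenGL-style perspective projection. The Python function below is hypothetical and assumes a view space looking down -z and the default [0, 1] depth range; Eevee's actual depth handling may differ in detail.

```python
from typing import Sequence

def offset_window_depth(view_pos: Sequence[float], view_dir: Sequence[float],
                        offset: float, near: float, far: float) -> float:
    """Window-space depth in [0, 1] after moving a point along the view ray.

    view_pos: view-space position (camera looks down -z)
    view_dir: unit view-space direction from the camera towards the point
    offset:   depth offset along view_dir (positive = further away)
    """
    # Only the view-space z of the offset point matters for the depth value.
    z_eye = view_pos[2] + offset * view_dir[2]
    # OpenGL perspective depth: z_eye = -near maps to -1, z_eye = -far to +1.
    z_ndc = (far + near) / (far - near) + 2.0 * far * near / ((far - near) * z_eye)
    # Default depth range mapping from NDC [-1, 1] to window depth [0, 1].
    return 0.5 * z_ndc + 0.5
```

The same offset position would also be the one used for the light visibility (shadow) lookups, so shadows and depth stay consistent with the parallax-shifted surface.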

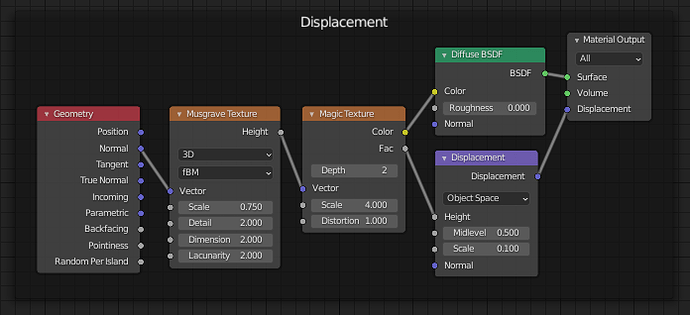

Depth Offset shader node

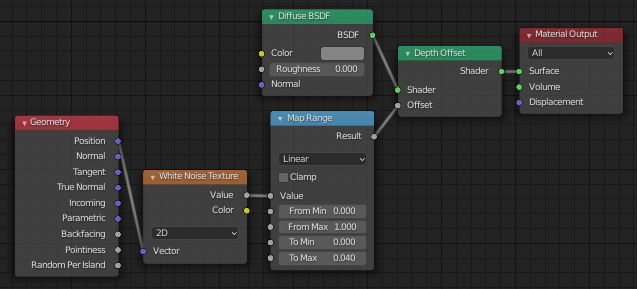

The Depth Offset shader node can be used to apply only a pixel depth offset (PDO), without affecting the shading of a fragment. It is implemented in Eevee only; in Cycles it simply passes the BSDF signal through.

The value for the “Offset” input socket is the same as for the POM node Depth Offset output socket and the Principled BSDF node Depth Offset input socket.

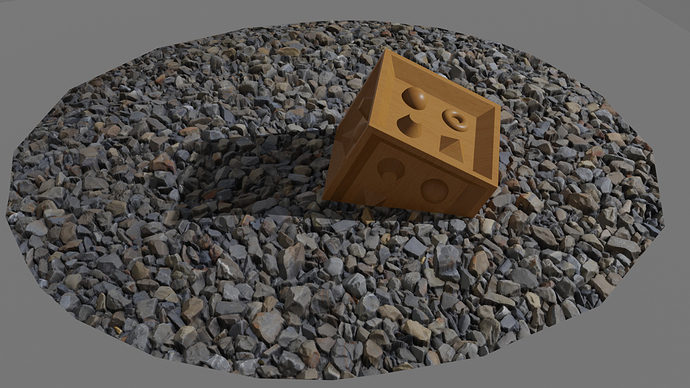

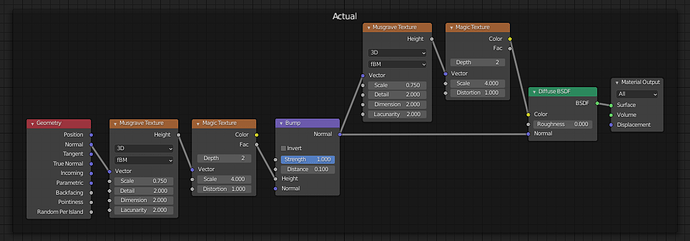

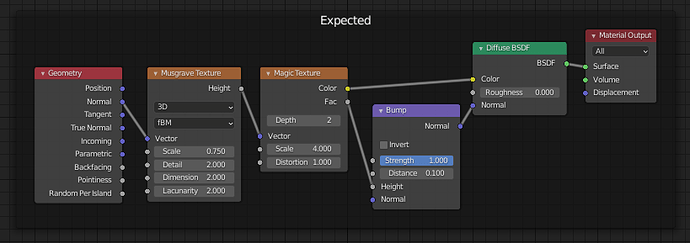

Besides its use in combination with POM, the Depth Offset node can also be used for simple depth-buffer-based geometry blending (using dithering). A sample setup using a white noise texture is shown in the image above. The following image uses POM with Depth Offset on the sand (a flat quad as geometry) and a randomized Depth Offset node on the rock to blend between the sand and the rock geometry.
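Why a noisy depth offset produces a dithered blend can be seen with a small calculation: each pixel pushes the rock back by a random amount, so near the intersection the rock wins the depth test in only a fraction of the pixels, and that fraction ramps from 1 to 0 as the rock's base depth passes through the sand surface. The Python sketch below is hypothetical; it assumes the offset pushes the rock away from the camera (larger depth) and replaces the white noise texture with evenly spaced noise values so the result is deterministic.

```python
def rock_coverage(rock_depth: float, sand_depth: float, amplitude: float,
                  pixels: int = 1000) -> float:
    """Fraction of pixels where the rock wins the depth test against the sand.

    Each pixel pushes the rock back by noise * amplitude, with noise in [0, 1).
    Evenly spaced noise values stand in for a white noise texture.
    """
    visible = 0
    for i in range(pixels):
        noise = (i + 0.5) / pixels
        if rock_depth + noise * amplitude < sand_depth:  # smaller depth = closer
            visible += 1
    return visible / pixels
```

With `rock_depth=0.9`, `sand_depth=1.0` and `amplitude=0.4`, the rock stays visible only where the noise is below 0.25, so about a quarter of the pixels show rock; the noise amplitude therefore sets the width of the blend zone.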

I have uploaded the diff file of the code based on Blender master commit e5a572157d8cd5947a0b4b7420e728d57d6965ff here: https://developer.blender.org/D9198

That page also provides an example .blend file that shows how the new nodes can be used.

A build for Windows can be found on GraphicAll (Blender Community).

It would be great if something like this could be integrated into an official Blender release. To that end, I’m open to any change requests.