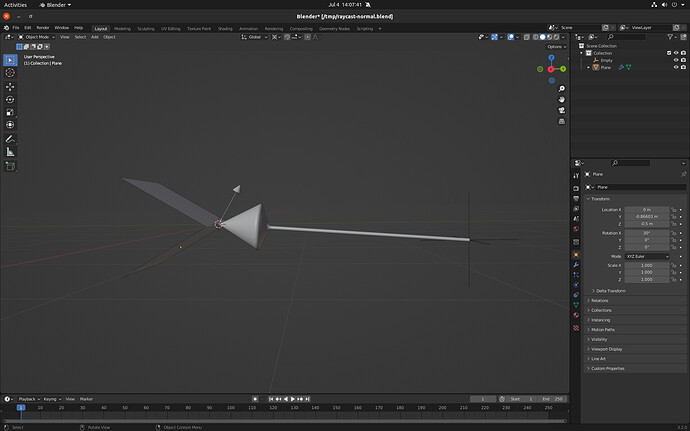

When you trace a ray exactly onto a vertex or edge, the normal returned is the normal of one of the bordering faces, and which one seems more or less random. While this is not really a bug, it does sometimes lead to unexpected results.

Example:

I have a .blend file that generates the picture above to play with, but I first need to upload it somewhere because you can’t attach .blend files here.

If you move the empty up or down, the normal flips randomly between the normal of the top face and the bottom face.

Turning on smooth shading on the mesh sadly does not help. I had hoped this would cause the normals to be interpolated, but apparently the raycast node always returns the true normal.
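To make the difference concrete, here is a small Python sketch (plain standard library, nothing Blender-specific) of two triangles meeting at a right-angled edge, like the top and side of a box. It compares the true (flat) normal of each triangle with a smooth normal obtained by barycentric interpolation of per-vertex normals. The helper names are hypothetical, and the vertex normals are assumed to be a plain unweighted average of the adjacent face normals; Blender's own smooth normals may be weighted differently.

```python
import math


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a):
    length = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    return (a[0] / length, a[1] / length, a[2] / length)


def face_normal(p0, p1, p2):
    """True (flat) normal of a triangle: cross product of two edges, normalized."""
    return normalize(cross(sub(p1, p0), sub(p2, p0)))


def vertex_normal(*adjacent_face_normals):
    """Assumed scheme: unweighted average of the normals of the faces sharing a vertex."""
    summed = tuple(sum(components) for components in zip(*adjacent_face_normals))
    return normalize(summed)


def smooth_normal(n0, n1, n2, u, v):
    """Interpolate vertex normals with barycentric weights (1 - u - v, u, v)."""
    w = 1.0 - u - v
    blended = tuple(w * a + u * b + v * c for a, b, c in zip(n0, n1, n2))
    return normalize(blended)


# Two triangles sharing the edge A-B at a right angle, like the top and side of a box.
A, B, C, D = (0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (-1.0, 0.0, 0.0), (0.0, 0.0, -1.0)
top, side = (A, B, C), (A, D, B)

n_top = face_normal(*top)     # points up (+Z)
n_side = face_normal(*side)   # points sideways (+X)

# A and B lie on both triangles; C and D belong to one triangle each.
nA = nB = vertex_normal(n_top, n_side)
nC, nD = n_top, n_side

# The midpoint of edge A-B, expressed in each triangle's barycentric coordinates.
from_top = smooth_normal(nA, nB, nC, u=0.5, v=0.0)
from_side = smooth_normal(nA, nD, nB, u=0.0, v=0.5)
```

A hit exactly on the shared edge belongs to both triangles: the flat normal is either +Z or +X depending on which triangle the intersection test happens to report, while the interpolated normal is the same diagonal vector from either side, so it would not flip.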

I was wondering how hard it would be to return interpolated normals here. I’ve been stepping through the code a bit and I do see some places where a change might be possible, though I don’t really understand the code yet.

Would this be an interesting thing to research, or is the general feeling here that this is expected behaviour for the raycast node?

Yeah, that’s not the problem ;-).

The problem exists only if you trace exactly at the edge.

edit: but in your case it does seem to do interpolation… hm, need to check.

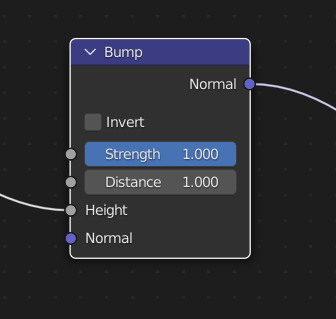

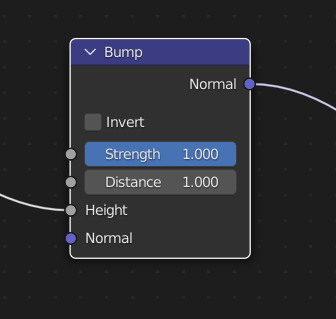

I did try plugging the normal into the Attribute input, and that didn’t work. But maybe the Capture Attribute node makes it work…

edit2: I can’t seem to make it work. Can you show the part of your node tree on the right? I don’t really understand what you do there with all those capture/transfer nodes.

IMHO it would be nice if a straightforward Raycast node, without any trickery, would (also?) return the interpolated/smooth normal instead of the true normal.

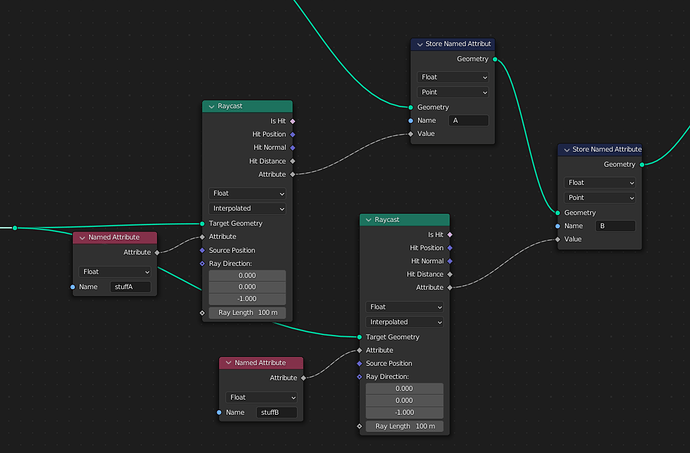

Combining Set Shade Smooth with a Capture Attribute node on the point domain, and then feeding that captured attribute into the Raycast node, yields the correct smoothed normal.

I’d still like it to be a bit more explicit which ‘normal’ in geonodes you get from various sources. The one on faces? Face corners? Vertices? It’s rather a shot in the dark sometimes.

In this case it’s even worse: the normal probably comes from the triangle that the ray hit. We don’t really expose those triangles anywhere in geometry nodes, since they’re derived data. I think this is an ugly part of our design, but I’m not sure how to solve it.
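To illustrate why the hit triangle matters, here is a minimal Python sketch: a non-planar quad is split into two triangles, assuming a simple fan split along the 0-2 diagonal (Blender's own triangulation may pick a different diagonal). The helper names are hypothetical. The two triangle normals differ, so rays hitting different halves of the same quad can report different "true" normals even though it is a single face.

```python
import math


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a):
    length = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    return (a[0] / length, a[1] / length, a[2] / length)


def triangle_normal(p0, p1, p2):
    """Flat normal of one triangle, as a ray hit on that triangle would see it."""
    return normalize(cross(sub(p1, p0), sub(p2, p0)))


def fan_triangulate(face):
    """Assumed split: fan from the first corner, e.g. (0, 1, 2, 3) -> (0, 1, 2), (0, 2, 3)."""
    return [(face[0], face[i], face[i + 1]) for i in range(1, len(face) - 1)]


# One quad with corner 2 lifted out of the plane, so the face is not flat.
positions = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.5), (0.0, 1.0, 0.0)]
quad = (0, 1, 2, 3)

tri_normals = [triangle_normal(*(positions[i] for i in tri))
               for tri in fan_triangulate(quad)]
for tri, n in zip(fan_triangulate(quad), tri_normals):
    print(tri, tuple(round(c, 3) for c in n))
```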

> I’d still like it to be a bit more explicit which ‘normal’ in geonodes you get from various sources

I guess with so many options for normals, it should perhaps be treated like the optional “Attribute” input, where you get out the value of the field at the hit point. This would be like the “Normal” input in some shader nodes, which defaults to the standard normal but can be overridden.

When I first made the Raycast node, there was some discussion about how to allow an arbitrary set of attributes to be interpolated simultaneously, but no actual implementation exists yet. Currently you have to use multiple Raycast nodes if you want to interpolate multiple attributes, which is suboptimal.

It’s optimal. You have no overhead with this node.

Maybe I’m missing something here? The Raycast node has been adapted to fields since my original version, but AFAICT it still performs all the raycasts twice if you have two Raycast nodes.

`node_geo_raycast.cc`

If you need two different attributes from the raycast mesh, you need two Raycast nodes because each supports only one attribute at a time, even though the interpolator could be applied as many times as needed once the intersection is found. You can also feed the hit position into Transfer Attribute; that’s a smaller node, but it still builds a BVH of the entire target mesh, so it doesn’t improve performance.
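The idea of finding the intersection once and then applying the interpolator to many attributes can be sketched in Python. This is a hypothetical, brute-force stand-in rather than the code in `node_geo_raycast.cc`: it loops over all triangles with the Möller-Trumbore test instead of querying a BVH, and it only handles per-point attributes stored as tuples. The function names `raycast` and `interpolate_point_attributes` are made up for illustration.

```python
def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def intersect_triangle(origin, direction, p0, p1, p2, eps=1e-9):
    """Moller-Trumbore test: (t, u, v) for a hit in front of the origin, else None."""
    e1, e2 = sub(p1, p0), sub(p2, p0)
    h = cross(direction, e2)
    det = dot(e1, h)
    if abs(det) < eps:  # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = sub(origin, p0)
    u = dot(s, h) * inv_det
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv_det
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det
    return (t, u, v) if t > eps else None


def raycast(origin, direction, positions, triangles):
    """Brute-force stand-in for a BVH query: nearest hit as (triangle index, u, v)."""
    best = None
    for index, (i0, i1, i2) in enumerate(triangles):
        hit = intersect_triangle(origin, direction,
                                 positions[i0], positions[i1], positions[i2])
        if hit is not None and (best is None or hit[0] < best[0]):
            best = (hit[0], index, hit[1], hit[2])
    return None if best is None else best[1:]


def interpolate_point_attributes(hit, triangles, attributes):
    """Reuse one set of barycentric weights for every per-point attribute."""
    index, u, v = hit
    i0, i1, i2 = triangles[index]
    w = 1.0 - u - v
    return {name: tuple(w * a + u * b + v * c
                        for a, b, c in zip(values[i0], values[i1], values[i2]))
            for name, values in attributes.items()}


# A unit quad in the XY plane, split into two triangles.
positions = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
triangles = [(0, 1, 2), (0, 2, 3)]
attributes = {
    "uv": [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)],
    "color": [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0), (1.0, 1.0, 1.0)],
}

# One intersection search, then any number of attributes from the same hit.
hit = raycast((0.25, 0.75, 1.0), (0.0, 0.0, -1.0), positions, triangles)
values = interpolate_point_attributes(hit, triangles, attributes)
```

In this sketch the intersection search is paid once per ray, and each extra attribute only costs a weighted sum of three values.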

Wrap your mesh in a UV map, get the UV at the hit with the raycast, and use Transfer By Nearest(UV, RayCastAttrOutUV); then you can transfer as many attributes as you like.

Or, if you don’t need interpolation, get the index.


I don’t want to derail this thread any further. What you suggest requires a) a UV map, which may not be easy to create if the mesh is procedural, and b) a transfer, which also builds a BVH tree and so adds comparable overhead.

In terms of overhead, this is nearly identical to what the (not yet implemented) multiple attribute sockets would have.

Building a UV map only costs memory. If precision is not important, Position can serve as the analog.

At the moment, there is not even a design for handling multiple attributes simultaneously, so you can think about it.