Hi everybody,

I have worked on a better integration of the RigNet addon.

Here’s what is new in version 0.1 alpha:

- Removed dependencies: binvox, open3d, trimesh

- Auto install pytorch

- Left/Right names for bones

- Post Generation utilities: merge bones, extract metarig

- Samples control

- Progress Bar

- Can skip bone weights prediction

This tool covers the task of assigning deformation bones to a character. Traditionally, this has to be done by hand: it is different every time, yet after a while it feels like doing the same thing over and over.

This description fits a class of problems that are hard to translate into conventional algorithms, but for which, given enough data, a reasonably accurate statistical model can be built. Tools that use statistics to automate a procedure fall under the field of Machine Learning, which is becoming more and more widespread as computing power increases.

RigNet is a Machine Learning solution that can assign a skeleton to a new character, based on extrapolations from a set of examples. It is licensed under the General Public License Version 3 (GPLv3), or under a commercial license.

When I saw skeletal characters coming out in their presentation, I knew that I wanted something like that in blender. So, when the code was made public, I wrote a RigNet addon.

With python as a programming language, making it “speak” with blender was no big deal, but the need for third-party modules made it difficult for everyone to use.

Dependency Diet

The first version of the addon resorted to trimesh and open3d to handle 3d operations, which is redundant inside a full-fledged 3d app.

Binvox, a standalone tool used to extract volumetric representations, was problematic too, as a binary, non-open source part of the bundle.

When the blender foundation backed the project, my first concern was to eliminate all 3d dependencies. The script still needs pytorch and pytorch-geometric, but their licenses (modified BSD and MIT, respectively) allow their inclusion in a free software project.

As an additional constraint, pytorch must match the CUDA version installed in the system. In the end, I have added an auto-install button to download the missing modules. It uses the Package Installer for Python (aka pip) and virtualenv behind the scenes.
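As a rough sketch of what such an install step can look like, the snippet below only builds a pip command line that installs packages into a chosen folder. The function name `build_pip_command` and the use of pip's `--target` option are my assumptions for illustration, not necessarily what the addon does internally.

```python
import sys
from pathlib import Path


def build_pip_command(packages, target_dir, python_exe=sys.executable):
    """Return the argument list that installs `packages` into `target_dir`.

    Hypothetical helper: it relies on pip's `--target` option, which places
    the installed packages in the given directory.
    """
    return [
        str(python_exe), "-m", "pip", "install",
        "--target", str(Path(target_dir)),
        *packages,
    ]


if __name__ == "__main__":
    cmd = build_pip_command(["torch"], "_additional_modules")
    print(" ".join(cmd))
    # To actually run the install: subprocess.run(cmd, check=True)
```

Running pip through the interpreter (`python -m pip`) rather than a bare `pip` executable makes sure the packages are resolved for the same python that will import them.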

Installation

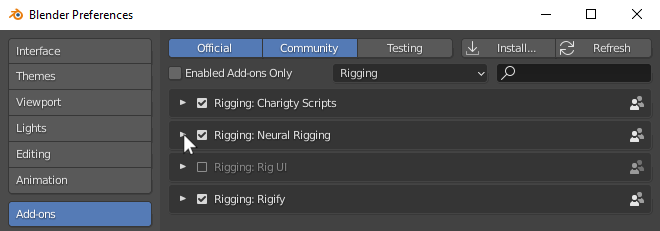

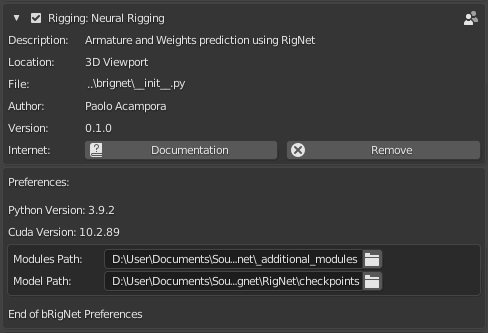

Install the archive: Neural Rigging is listed in the Rigging section.

Installing pytorch can be tricky. It is usually done at the beginning of a coding project, with tools like virtualenv (python itself ships the similar venv module) or conda, a separate package and environment manager. Addons of a bigger application are a different matter.

CUDA is a requirement. This could change in the future, as the torch-geometric library has recently added cpu support. At present prebuilt packages support CUDA 10.1, 10.2 and 11.1.
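The check against the supported versions can be sketched as below. The version list comes from the paragraph above; the function name `cuda_wheel_tag` is hypothetical, and the `cuXYZ` tag format is an assumption based on the suffix convention of prebuilt pytorch packages.

```python
# CUDA versions with prebuilt packages, as listed in the text above.
SUPPORTED_CUDA = ("10.1", "10.2", "11.1")


def cuda_wheel_tag(cuda_version):
    """Map a CUDA version string to a 'cuXYZ' tag, or None if unsupported.

    Only major and minor are considered, so '11.1.105' counts as '11.1'.
    """
    major_minor = ".".join(cuda_version.split(".")[:2])
    if major_minor not in SUPPORTED_CUDA:
        return None
    return "cu" + major_minor.replace(".", "")


if __name__ == "__main__":
    for version in ("10.2", "11.1.105", "9.0"):
        print(version, "->", cuda_wheel_tag(version))
```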

Owners of NVIDIA hardware can install the CUDA toolkit from the vendor’s page.

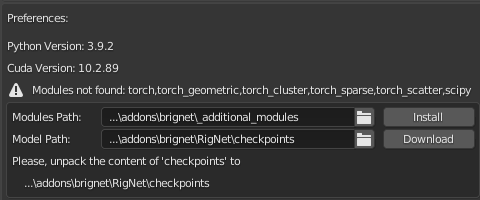

Once expanded, the preferences display the system info and the missing required packages.

If CUDA is found in the system, the Install button can be used to download pytorch to the designated location. By default, the subdirectory _additional_modules in the addon path is used.

It may take time because it has to download the whole pytorch library (2 GB). Some users may want to run it with the console window open, to follow the progress.
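Modules installed in a separate folder are only importable if that folder is on the interpreter's search path. A minimal sketch of that step follows; the helper name `enable_additional_modules` is hypothetical, and only the `_additional_modules` folder name is taken from the text.

```python
import sys
from pathlib import Path


def enable_additional_modules(addon_dir, subdir="_additional_modules"):
    """Append <addon_dir>/<subdir> to sys.path once and return the path."""
    modules_dir = Path(addon_dir) / subdir
    path_str = str(modules_dir)
    if path_str not in sys.path:
        # Appending (rather than inserting first) keeps the modules bundled
        # with the host application ahead of the downloaded ones.
        sys.path.append(path_str)
    return modules_dir


if __name__ == "__main__":
    print(enable_additional_modules(Path(__file__).parent))
```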

Trained Model

A Machine Learning tool needs the data from a training session, or it won’t be able to run. The Model Path is the folder where the results of the training are stored.

The developers of RigNet have shared their trained model at a public link, which can be opened by clicking the Download button.

The default location is the RigNet/checkpoints subfolder of our addon directory, but we can choose another path if we wish.

This model has been trained on about 2700 rigged characters that can be downloaded here. RigNet’s GPL-3 license and the optional commercial license apply to the trained model too.

Once all the requirements are fulfilled, the addon preferences should look like the picture below and display no warnings.

Remesh, Simplify, Rig

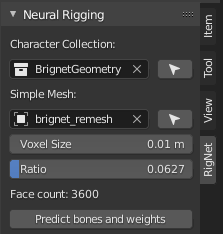

Characters do not always consist of one single mesh, so the addon asks for a collection as input. If we want to create a new collection, the utility button next to the property will do that from the selected objects.

Then we need a single, closed, reduced mesh for our computations. We can generate one using the button next to the mesh field.

The Voxel Size and Face Ratio can be tweaked, but the result should not exceed five thousand triangles. The addon panel displays the current face count, and a warning when there are too many.
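The triangle budget can be checked from the number of vertices of each face, since a polygon with n vertices splits into n - 2 triangles. This is only a sketch of the arithmetic: the function names are hypothetical, and the addon may count faces differently.

```python
MAX_TRIANGLES = 5000  # limit mentioned in the text above


def triangle_count(polygon_sizes):
    """Total triangles for faces given as a list of vertex counts."""
    return sum(max(n - 2, 0) for n in polygon_sizes)


def exceeds_limit(polygon_sizes, limit=MAX_TRIANGLES):
    """True when the triangulated mesh would go over the budget."""
    return triangle_count(polygon_sizes) > limit


if __name__ == "__main__":
    quads = [4] * 3000  # 3000 quads become 6000 triangles
    print(triangle_count(quads), exceeds_limit(quads))
```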

Parameters

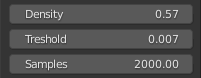

The original RigNet exposes a bandwidth parameter, which delivers more bones at lower values. I have inverted it into Density, which adds more joints at greater values and hopefully makes more sense to the user. Denser rigs require more GPU memory, so more powerful hardware is needed to generate rigs with more bones.

Joints with a weighted influence lower than the Threshold value will be ignored.
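In its simplest form, the Threshold behaves like a filter over the candidate joints. The sketch below assumes each candidate is a (name, influence) pair, which is my simplification for illustration and not RigNet's actual data layout.

```python
def filter_joints(joints, threshold):
    """Keep the joints whose influence is not lower than `threshold`.

    `joints` is assumed to be an iterable of (name, influence) pairs.
    """
    return [(name, weight) for name, weight in joints if weight >= threshold]


if __name__ == "__main__":
    candidates = [("spine", 0.9), ("tail_tip", 0.02), ("knee", 0.4)]
    print(filter_joints(candidates, threshold=0.1))
```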

While RigNet processes the geometry using 4000 samples, I have added a Samples parameter, as fewer samples sometimes deliver faster and better results.
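To give an idea of what a samples count controls, here is a generic way to pick points on a triangulated surface: triangles are chosen in proportion to their area, then a random point is taken inside each one. This is a common technique shown under my own assumptions, not the sampling code used by RigNet or by the addon.

```python
import math
import random


def triangle_area(a, b, c):
    """Area of a 3d triangle, from the cross product of two edges."""
    ab = [b[i] - a[i] for i in range(3)]
    ac = [c[i] - a[i] for i in range(3)]
    cross = (
        ab[1] * ac[2] - ab[2] * ac[1],
        ab[2] * ac[0] - ab[0] * ac[2],
        ab[0] * ac[1] - ab[1] * ac[0],
    )
    return 0.5 * math.sqrt(sum(x * x for x in cross))


def sample_surface(triangles, samples, seed=0):
    """Return `samples` points spread over the triangles, weighted by area."""
    rng = random.Random(seed)
    areas = [triangle_area(*tri) for tri in triangles]
    points = []
    for a, b, c in rng.choices(triangles, weights=areas, k=samples):
        u, v = rng.random(), rng.random()
        if u + v > 1.0:
            # Reflect the point back inside the triangle.
            u, v = 1.0 - u, 1.0 - v
        points.append(tuple(
            a[i] + u * (b[i] - a[i]) + v * (c[i] - a[i]) for i in range(3)
        ))
    return points


if __name__ == "__main__":
    tris = [((0, 0, 0), (1, 0, 0), (0, 1, 0))]
    print(len(sample_surface(tris, samples=1000)))
```

The cost of everything that follows grows with the number of points, which is why a lower count can be noticeably faster.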

Animation controls

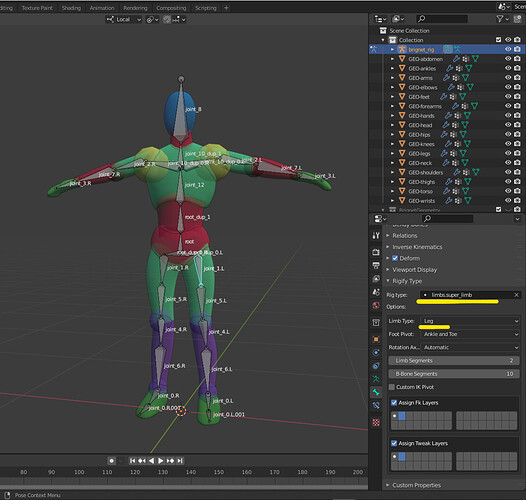

Animation rigs usually have additional controls besides the deformation bones. Rigify is the control generator included with blender. The usual workflow for auto-rig systems is “bone first, bind later”, but we have a bound skeleton already, so we are going to do the inverse.

We can adapt our bone layout to the one expected by rigify, add rigify attributes to the bones of our rig, and use a converter included with the neural rigging addon that creates a rigify base. From there, we follow the standard rigify workflow, as if we were rebuilding a rigify character.

This reverse workflow, and the tools involved, would be better discussed with the Rigify developers, and hopefully included in Rigify.

Is blender ready for Machine Learning and AI?

Of course it is: the addon system is very flexible, and many AI/Machine Learning projects are written in python, making it reasonably easy to bridge the two worlds.

If anything can be improved, making some of the internal voxelization and sampling functions available to the addons would make the job easier, and the execution faster.

An official solution for expanding the interpreter would be nice as well: something like a virtualenv for the addons.

Last, operators that take much time might benefit from a progress bar or some other way to inform the user about the current stage.

Is Neural Rigging ready for blender?

It’s starting. Though not a new field at all, widespread application and diffusion of Machine Learning is relatively new. At present, rig prediction helps skip the most obvious steps in a character setup, but the delivered result usually needs polishing.

The requirement of 2 Gigabytes of third-party libraries is quite unusual, and hopefully it can be reduced. The trained model plays a key role too, in that the actual activity of the tool sits in the data rather than in the code itself.

The original RigNet is released under a dual license, which implies that it can be freed from the restrictions of free software if bought from an authorized vendor.

The blender add-on is GPL only, but contains RigNet as a component. This condition is new to me: technically everything should be fine as long as the GPL is honoured, but it’s better to contact the original authors (tto[at]umass[dot]edu) if commercial implications arise.

Is Neural Rigging going to improve?

Adding more examples to the training dataset could be the first step for improvement. Also, torchscript could help make the addon faster and more portable.

It would be very interesting to add fingers to the dataset. Or we could remove the constraint of symmetry and treat the hands like characters: after all, fingers are human tentacles!

The progress of ongoing research may bring novelties as well, so we’d better stay tuned.

Please give the addon a try if you wish: I would love to have feedback and improve the alpha. Also, I could not test the addon on linux yet, so every report of a linux experience is welcome, especially about the install.

Thanks for reading all this,

Cheers,

Paolo