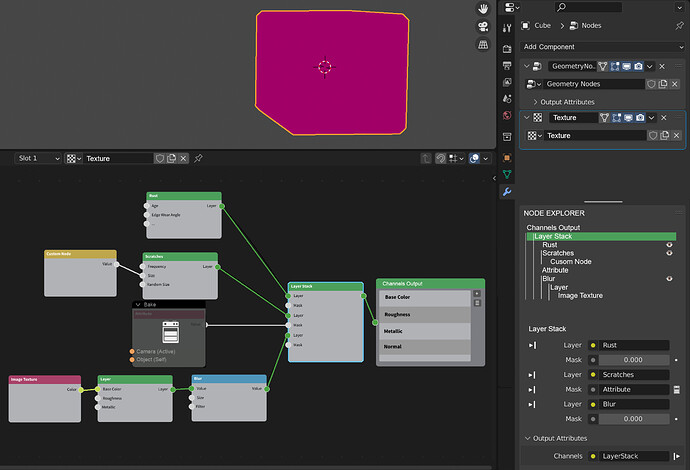

Assuming Geometry Nodes, Materials and Textures all shared the same Datablock, I have tried to organize things so that editing them is accessible, comfortable and visually unified from the Attribute Editor.

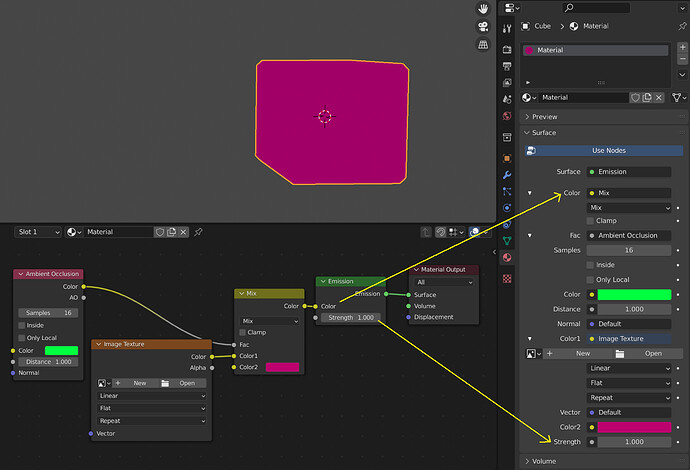

As the first screenshot shows, in its current state it is almost impossible to understand even a simple material node tree in the Attribute Editor. It also has no relation to Geometry Nodes, and I do not see how it relates to the proposed Texture Datablock.

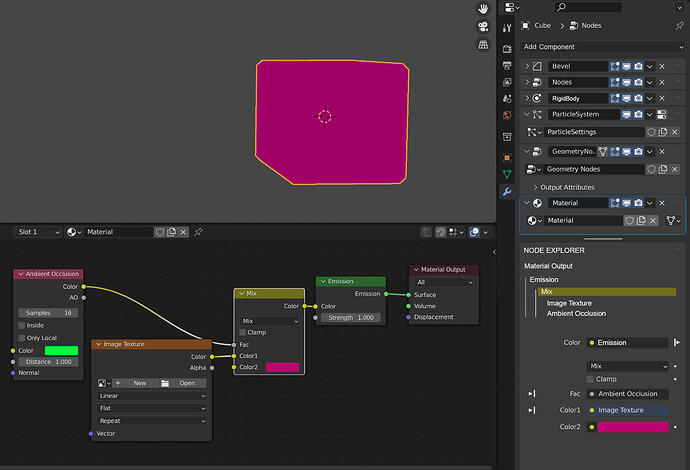

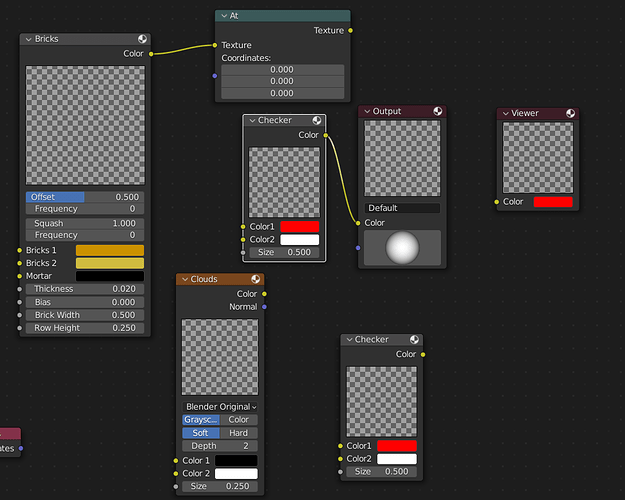

In the second image, the proposal is that Geometry Nodes, Constraints, Physics, Hair, Particles, Materials and Textures would use the same Datablock and the same nodes; the Output would mark the difference between one category and another.

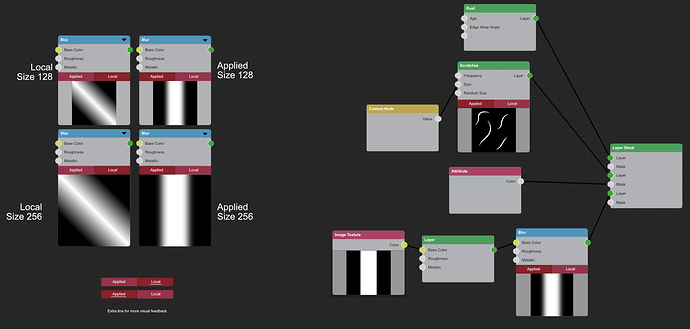

Although the mockup shows a material, and there are surely a thousand gaps, I believe it can be extrapolated to any node tree. In the case of textures, it could show the Layer Stack as a starting point.

The NODE EXPLORER, located below the list of components in the Attribute Editor, would list the nodes evaluated by the Output in a format similar to the Outliner, and would show the attributes of the selected node only. From the displayed node attributes it should also be possible to navigate to the node's Inputs and Outputs, using arrows to the left and right of them respectively. Since an Output can be connected to more than one Input, clicking an Output's arrow should bring up a floating menu listing all the nodes connected to it.
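
To make the idea a bit more concrete, here is a rough sketch in plain Python of the two things the Node Explorer would need: the Outliner-like list of nodes feeding an Output, and the list of nodes connected to a given node's output (the content of the floating menu). It does not use the Blender API; `Node`, `explorer_rows` and `downstream_nodes` are hypothetical names made up for this illustration, and it assumes the tree has no cycles.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical stand-in for a node; not a Blender type."""
    name: str
    attributes: dict = field(default_factory=dict)
    # Input socket name -> the upstream Node plugged into it.
    inputs: dict = field(default_factory=dict)


def explorer_rows(output, depth=0):
    """Return (depth, name) rows for every node feeding `output`.

    A node shared by several inputs appears once per connection,
    the way a linked item can appear several times in an outline.
    """
    rows = [(depth, output.name)]
    for upstream in output.inputs.values():
        rows.extend(explorer_rows(upstream, depth + 1))
    return rows


def downstream_nodes(root, node):
    """Return names of nodes that have an input connected to `node`.

    This is what the floating menu would list when the arrow next to
    an output of `node` is clicked.
    """
    found = []
    seen = set()

    def visit(current):
        if id(current) in seen:
            return
        seen.add(id(current))
        for upstream in current.inputs.values():
            if upstream is node and current.name not in found:
                found.append(current.name)
            visit(upstream)

    visit(root)
    return found


# Example tree: one noise texture drives both the color and the bump.
noise = Node("Noise Texture", {"Scale": 5.0})
bump = Node("Bump", {"Strength": 1.0}, {"Height": noise})
bsdf = Node("Principled BSDF", {}, {"Base Color": noise, "Normal": bump})
material_output = Node("Material Output", {}, {"Surface": bsdf})

for depth, name in explorer_rows(material_output):
    print("  " * depth + name)

print(downstream_nodes(material_output, noise))
```

Only nodes reachable from the Output are listed, which matches the idea that the explorer shows what the Output actually evaluates; the attributes panel would then simply display `attributes` of whichever row is selected.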

I hope this is not nonsense.