I don’t understand the fuss. There’s been a pretty healthy back and forth so far, and whenever there were concerns with contributions/designs, it seems to me they were outlined clearly.

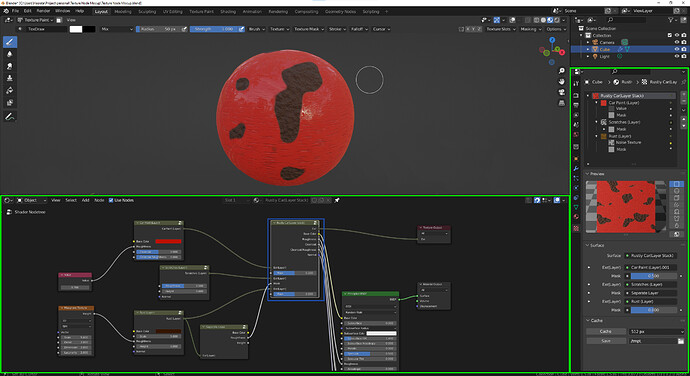

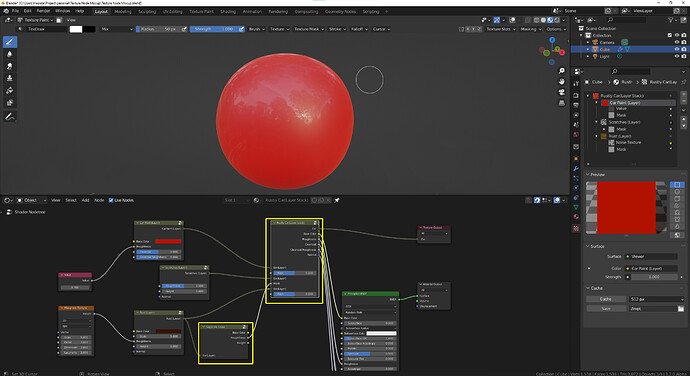

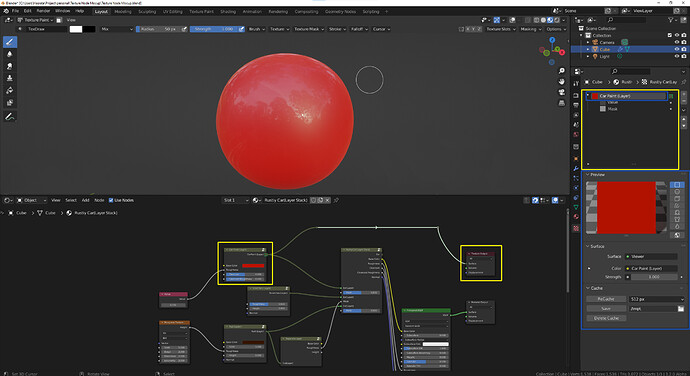

Hi, regarding the design, I have just finished going through all of Brecht’s posts in this thread, and then I made a mockup.

Although it is similar to others on this topic, I think my design adds some additional value to the general discussion.

Nodes and Layers.

There are three things that the current Shader nodes lack and that are crucial for texture editing:

- Multi-channel data (layers) or workflow (layer stack, layer combine, etc.).

- Support for nodes that are hard to execute at render time (blur node, filter, painting on texture, etc.).

- High-level UI for layer-painting.

IMHO we can add those three in the Shader editor in a way that wouldn’t clutter the UI too much and would be easier UX-wise.

-

Adding multi-channel data is pretty straightforward: add a new color for the socket, a combined channel node, and a layer stack node.

Even the conversion between data types is relatively simple.

I imagine the Asset Layer would be a node group.

-

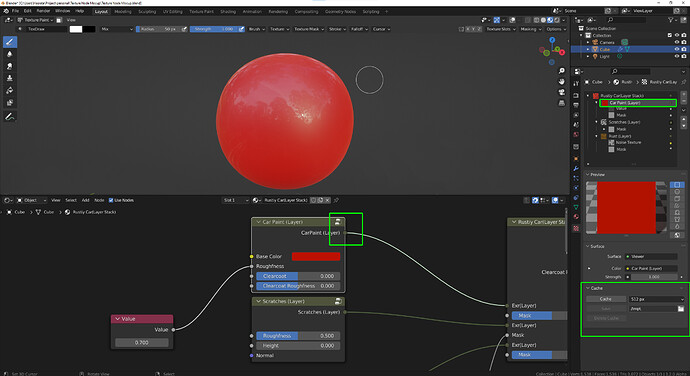

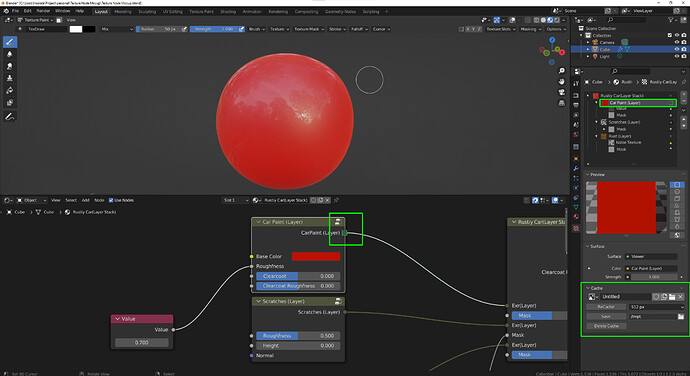

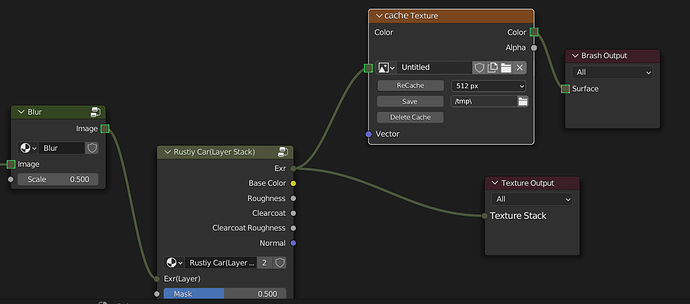

For the second need, I suggest a new kind of data - Cached data.

It’s similar to the way Field differs from regular data.

Every type of data (float, vector, color) except shaders could be cached.

Most nodes would work on both kinds of data, but some nodes would only work on cached data.

This caching serves painting, special filters (such as blur), and optimization.

-

For the high-level UI, I suggest a texture-output node similar to the material-output node.

The output would be presented in the Properties editor under the Texture tab.

The list of layers would be presented with an Outliner-style UI. Internally, I imagine the stack would behave the way materials are currently presented in the Properties editor, where you can expand any connected input to edit a lower-level layer/node.

Baking:

I’ll be using the proposal’s use cases for the different kinds of baking:

1. Exporting PBR textures to a game engine.

2. For textures with many layers, baking is important for efficient rendering in Cycles and Eevee.

3. Some nodes like Blur, Filter, and others require baking to work at all, as they cannot be implemented efficiently or at all in renderers.

4. Baking procedural texture layers into an image texture or color attribute to continue hand painting.

5. Baking multiple materials onto one mesh.

6. Baking to attributes.

7. Baking from different objects (Normal/Displacement detail from higher-res models).

8. Baking render data such as shadow, direct/indirect, etc.

I think some of those bakings are fundamentally different from others.

IMHO there should be 3 or 4 kinds of baking in Blender, each of them in a different place in the UI.

- The per-node caching system in my proposal solves use cases 1, 2, 3, and 4.

- A bake button in the Material/Data properties editor should solve 5 and 6.

- More complex baking in the Scene properties editor should solve use cases 7 and 8. Maybe this kind of bake should live in a dedicated baking graph.

Additional notes:

- Painting would only work on a cached layer.

- The cached/regular state of a node would be presented in many places.

- I am not sure if it’s possible to use the same texture stack for brushes/geometry, as the textures are tied closely to the material they originate from, although the same material can easily reside on different meshes. Maybe a new Brush Output which only accepts cached data.

- A specific Cache node may be useful.

I hope I’ve been clear enough.

To make things a bit more concrete, here’s what the baking UI could look like. This would be accessible from the Texture Channels node in the proposal, with most of these settings stored on the Image datablock (mycharacter.png in this example) to be shared across multiple materials and meshes.

[ Update Geo Cache ] [ Bake ]

[▼] mycharacter.png [ ][x]

File Path //textures/mycharacter_<CHANNEL>_<UDIM>.png

Bake Res 2048px

Preview Res 512px

| Channel | Type | Bake | Token | Pack |
| --- | --- | --- | --- | --- |
| Base Color | Color | [x] | base_color | RGBA ▼ |
| Roughness | Float | [x] | roughmetalao | R ▼ |
| Metallic | Float | [x] | roughmetalao | G ▼ |
| Normal | Vector | [x] | normal_map | XYZ ▼ |
| --- | --- | --- | --- | --- |
| AO | Float | [x] | roughmetalao | B ▼ |
| Curvature | Float | [ ] | | |
| Cache | Color | [ ] | | |
| Cache.001 | Float | [ ] | | |

This would list all image textures associated with the texture datablock, including input, intermediate and output textures. I think having this type of centralized settings and UI would be easier to understand and manage compared to various bake nodes, as well as being able to do automatic channel unpacking. It works best when everything is inside a Texture datablock, since that gives a clear context to pull together these various textures into a single UI.
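As a rough illustration of how the Token and Pack columns could drive packing, here is a minimal Python sketch. It is not Blender code: the `CHANNELS` table, `expand_path`, and `plan_bake` are hypothetical names made up for this example, and the UDIM tile number 1001 is only used as a sample value. Channels that share a bake token end up in the same file, each in its own components.

```python
from collections import defaultdict

# Hypothetical channel table mirroring the mockup:
# (channel name, type, bake enabled, bake token, packed components)
CHANNELS = [
    ("Base Color", "Color", True, "base_color", "RGBA"),
    ("Roughness", "Float", True, "roughmetalao", "R"),
    ("Metallic", "Float", True, "roughmetalao", "G"),
    ("Normal", "Vector", True, "normal_map", "XYZ"),
    ("AO", "Float", True, "roughmetalao", "B"),
    ("Curvature", "Float", False, "", ""),
]


def expand_path(pattern, token, udim):
    """Substitute the <CHANNEL> and <UDIM> placeholders in a file path pattern."""
    return pattern.replace("<CHANNEL>", token).replace("<UDIM>", str(udim))


def plan_bake(channels, pattern, udim=1001):
    """Group enabled channels by bake token.

    Returns {file path: {components: channel name}} and rejects two channels
    that claim the same component of the same file.
    """
    plan = defaultdict(dict)
    for name, _type, bake, token, pack in channels:
        if not bake:
            continue  # unchecked channels are not baked
        slots = plan[expand_path(pattern, token, udim)]
        for used in slots:
            if set(used) & set(pack):
                raise ValueError(f"{name} overlaps {slots[used]} in components {used}")
        slots[pack] = name
    return dict(plan)


if __name__ == "__main__":
    pattern = "//textures/mycharacter_<CHANNEL>_<UDIM>.png"
    for path, slots in plan_bake(CHANNELS, pattern).items():
        print(path, slots)
```

With the table from the mockup, this yields three files: one for `base_color`, one for `normal_map`, and one `roughmetalao` file holding Roughness, Metallic and AO in R, G and B.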

Is this a response to my post or the posts before me?

Because your baking mockup can somewhat coexist within my proposal…

A loosely related question since nodes are involved quite a bit in the workflow - the node editor can become quite laggy the bigger scenes get, to a point where even moving or connecting nodes is actually a tedium (even if they’re not supposed to be evaluated).

Is that the type of known issue which realistically won’t be resolved anytime soon?

All things considered, I really like the proposal and am looking forward to using it in action.

Is that still a problem in 3.1? That sort of thing has improved a lot recently in many cases.

Yes, it’s still a problem. In a scene with about 50M faces (subdivided cubes for the sake of simplicity, 1.5M faces each) it looks like this -

This is on an i9-9900K with 64 GB of RAM and a 2080.

Since scenes of this size or bigger are very common in production I can see this becoming a general issue for a lot of people with Blender going more node based.

It’s due to the undo system (item 4 in T60695, Optimized per-datablock global undo). But that’s really off topic here.

It’s not a reply to any specific post, just clarifying things.

Well, kind of off topic. When coming up with a design heavily reliant on nodes, its usability is not irrelevant.

If ironing out these issues would take years for example, I’d vote for alternative solutions in the UI that don’t require visual grouping or moving of nodes - something which currently would take a lot of time that otherwise wouldn’t.

Just mentioning this for consideration: in my workflow of having multiple objects in one texture map, ‘Material Groups’ felt like the right term to use.

That is pretty cool… maybe nitpicking at this stage, but I would suggest being able to choose the height and width of the texture separately instead of sticking to squares. Then maybe Preview Res could be a division of the bake resolution, like 1/2, 1/4, 1/8?

edit: also, from personal experience .tga keeps the alpha map better separated, and as far as I know not a lot of artists in the game industry use .png, except maybe for UI. For example, exporting a PSD without a properly authored alpha channel to PNG can cause quite a lot of issues, because it can produce artifacts in the color channels where the alpha resides. This is especially apparent when creating textures for premultiplied alpha effects. But I might be mistaken, and this may not be the case when exporting from Blender. Maybe it’s just that whenever I see “.png” it makes the hairs on the back of my neck stand on end because of the headaches it caused during production.

Not a friend of PNG either. But it looks like PNG was just used as an example. I do not see anything that would limit us to PNG.

For technical reasons, end-use textures (in game engines such as Unreal) have to be square powers of two, and given that UVs are square, there’s little reason I can see to do anything else. If you have non-integral-size individual textures, then pack them into an atlas. If different sizes are allowed though (why not), then please have a ‘lock chain’ button to simply lock them together, ideally with integral power-of-two steps.

Rectangular textures (512x1024, etc.) are less common these days, but they can still be used in old game engines. Plus, Blender already supports them in other places (texture painting, etc.), so I don’t see a reason to exclude them.

That’s not really true for modern GPUs and APIs, but it was the case in the past and can still be for legacy reasons/HW.

Unreal requires square powers of two. I don’t know the specifics, but in tech talks they’ve cited technical reasons for this. I don’t know about other engines; regardless, you still have the square UVs.

ad. Power of two or not

Power-of-two textures are still relevant because of texture sampling and mipmapping.

When the GPU wants to sample a texture during rendering, it needs to load it, usually from VRAM where it sits. But believe it or not, VRAM is slow compared to the GPU cores: it takes a few dozen GPU cycles to get the requested data from VRAM, so instead of fetching it piece by piece, the GPU loads chunks of the texture into its caches (L3/2/1) to have near-instant access to that data.

It is as relevant as it was in the past.

When an object has a huge texture (like 4K), is small on screen, and there are no mipmaps, loading a small chunk of the texture won’t help much, because the next pixel the GPU works on requires a totally different section of the texture that is not in the cache, and the GPU has to wait until it arrives from VRAM (a cache miss).

But when there are mipmaps, the GPU will load a lower-resolution LOD of the texture, where a loaded chunk also contains the neighboring texels, so they are already in the cache and rendering is faster.

Textures with mipmaps should have power-of-two dimensions, but they do not have to be square. If you have non-power-of-two dimensions and want to use mips, you will lose data or waste memory.
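To make the point about mips and non-power-of-two sizes concrete, here is a small Python sketch. It assumes the common convention that each mip level halves both dimensions, rounds down, and clamps at 1; `mip_chain` and `halves_exactly` are names made up for this example.

```python
def mip_chain(width, height):
    """Return all mip levels as (width, height), halving with floor down to 1x1."""
    levels = [(width, height)]
    while width > 1 or height > 1:
        width = max(1, width // 2)
        height = max(1, height // 2)
        levels.append((width, height))
    return levels


def halves_exactly(width, height):
    """True if no level has an odd dimension larger than 1.

    An odd dimension cannot be split into 2x2 texel blocks evenly, so the
    next level has to drop or blend a leftover row or column.
    """
    return all(
        (w == 1 or w % 2 == 0) and (h == 1 or h % 2 == 0)
        for w, h in mip_chain(width, height)
    )


if __name__ == "__main__":
    for size in [(2048, 1024), (512, 1024), (1000, 600)]:
        chain = mip_chain(*size)
        print(size, "levels:", len(chain), "halves exactly:", halves_exactly(*size))
```

A rectangular power-of-two size such as 2048x1024 halves cleanly all the way down, while 1000x600 reaches 125x75 after three steps and no longer divides evenly.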

Exactly, Unity supports the use of 512x1024 textures, for example. They fit nicely in memory, with no data loss while mipmapping. I have made some textures for a 2.5D game background that mainly consisted of wide background elements. It benefited us there to be able to use a 2048x1024 texture resolution to fit everything for one level background. But as @DrM is suggesting, a lock chain would be great.

@DrM As for UVing using texture aspect ratio is something I used in Maya. Internally of course it’s just squares, but visually you can UV using texture aspect ratio.

That’s the first I hear that POT textures aren’t relevant any more. Every game engine I’ve been using is still scaling any texture to the next power of two if it’s anything else. Even for my non-realtime stuff I try to stick to power of two textures as far as possible just in case I ever need to adapt it to anything realtime (and out of habit).

Do you have a reference showing that it’s becoming obsolete in game engines nowadays? I’d really love to see this if true.

Or do you specifically mean rectangular power of two?

In which case I’d still say it’s not really legacy at this point, either. Trim Sheets for example. Certainly don’t need to be square all the time.
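For reference, rounding a texture up to the next power of two per dimension, as described above, can be sketched like this in Python. The function names are made up for this example, and the overhead figure only counts texels; it says nothing about how a particular engine actually resamples or stores the texture.

```python
def next_power_of_two(n):
    """Smallest power of two that is >= n (n must be a positive integer)."""
    if n < 1:
        raise ValueError("size must be at least 1")
    return 1 << (n - 1).bit_length()


def pot_size(width, height):
    """Round each dimension up independently, so rectangular sizes stay rectangular."""
    return next_power_of_two(width), next_power_of_two(height)


def texel_overhead(width, height):
    """Factor by which the texel count grows after rounding up to powers of two."""
    pot_w, pot_h = pot_size(width, height)
    return (pot_w * pot_h) / (width * height)


if __name__ == "__main__":
    for size in [(512, 2048), (1000, 600), (1025, 1025)]:
        print(size, "->", pot_size(*size), f"x{texel_overhead(*size):.2f} texels")
```

A rectangular power-of-two size such as a 512x2048 trim sheet passes through unchanged, whereas 1000x600 becomes 1024x1024.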