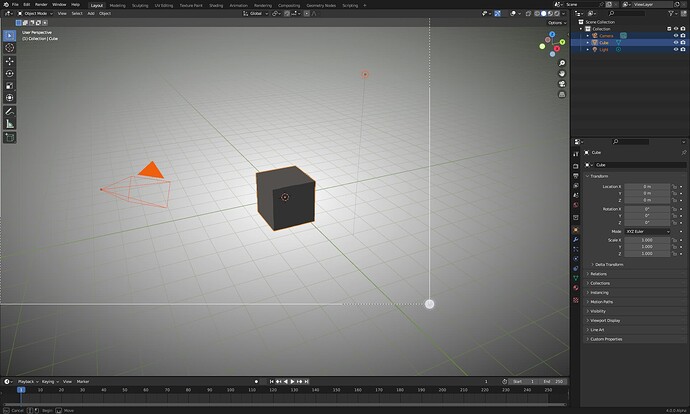

Hi everyone, I recently ported Blender 4.0.0 to a Samsung Android tablet. I have made some progress: as the screenshot above shows, I can switch between Blender's different modules.

- At present, the problem I most urgently need to solve is how to handle touch-screen actions on devices such as Android tablets (press, release, swipe, double-tap, etc.) so that they fit into Blender's current event framework.

Over the past few days I have browsed the code, analyzed the event flow, and experimented, and I have run into several problems. I hope you can share some ideas.

a) After the program starts, clicking a button or drop-down box on the splash screen has no effect. It only closes the splash screen (see the picture above).

b) In the Layout workspace, when I press, drag, and release to make a box selection, the selection rectangle always starts at the upper-left corner of the screen, no matter where I pressed (see the picture above).

c) In all modules, clicking a button only shows its tooltip. The button's action, such as switching modes, opening the corresponding properties tab, or opening a dialog, is never triggered (see the picture above).

- I suspect these problems all share one underlying cause, but I have no idea what it is, and I don't know which part of the code would show the relevant information. For touch screens such as Android tablets, this is how I currently handle press, release, and swipe actions:

```cpp
// Global function.
// Handles Android touch events and converts them into GHOST events.
// app:   the Android environment (android_native_app_glue).
// event: the touch event (AInputEvent).
// Returns true if the event was consumed.
static bool processButtonEvent(struct android_app *app, AInputEvent *event)
{
  // Only motion events (touch, mouse, stylus) carry AMotionEvent data;
  // key events such as the Back button are left to the system.
  if (AInputEvent_getType(event) != AINPUT_EVENT_TYPE_MOTION) {
    return false;
  }

  GHOST_SystemAndroid *system = (GHOST_SystemAndroid *)GHOST_ISystem::getSystem();
  GHOST_WindowNULL *window = (GHOST_WindowNULL *)
      system->getWindowManager()->getWindowAssociatedWithEglWindow(app->window);
  if (!window) {
    window = (GHOST_WindowNULL *)system->getWindowManager()->getActiveWindow();
  }
  if (!window) {
    return false;
  }

  // The low byte is the action; for ACTION_POINTER_DOWN/UP the next byte
  // holds the pointer index, so the value must be masked before comparing.
  const int32_t action = AMotionEvent_getAction(event) & AMOTION_EVENT_ACTION_MASK;

  const GHOST_TButton mask = GHOST_kButtonMaskLeft;
  const int32_t x = int32_t(AMotionEvent_getX(event, 0));  // Pixel coordinates.
  const int32_t y = int32_t(AMotionEvent_getY(event, 0));

  // Debug log.
  CLOG_ERROR(&LOG, "motion action=%d x=%d y=%d", action, x, y);

  // Must be initialized: a default-initialized GHOST_TabletData holds
  // indeterminate mode/pressure/tilt values.
  const GHOST_TabletData td = GHOST_TABLET_DATA_NONE;
  const uint64_t currentTime = system->getMilliSeconds();

  switch (action) {
    case AMOTION_EVENT_ACTION_DOWN:
      // Press: move the cursor to the touch point first, then press.
      system->pushEvent(
          new GHOST_EventCursor(currentTime, GHOST_kEventCursorMove, window, x, y, td));
      system->pushEvent(
          new GHOST_EventButton(currentTime, GHOST_kEventButtonDown, window, mask, td));
      return true;

    case AMOTION_EVENT_ACTION_MOVE:
      // Swipe/drag: cursor motion while the finger is down.
      system->pushEvent(
          new GHOST_EventCursor(currentTime, GHOST_kEventCursorMove, window, x, y, td));
      return true;

    case AMOTION_EVENT_ACTION_UP:
    case AMOTION_EVENT_ACTION_CANCEL:
      // Release (also on cancel, so the button is never left pressed):
      // report the final position, then release.
      system->pushEvent(
          new GHOST_EventCursor(currentTime, GHOST_kEventCursorMove, window, x, y, td));
      system->pushEvent(
          new GHOST_EventButton(currentTime, GHOST_kEventButtonUp, window, mask, td));
      return true;

    default:
      return false;
  }
}

// Global function.
// Input callback installed on android_app::onInputEvent; processButtonEvent()
// above does the actual work. Returning 1 tells the glue code the event was
// consumed; returning 0 lets Android apply its default handling.
static int32_t engine_handle_input(struct android_app *app, AInputEvent *event)
{
  return processButtonEvent(app, event) ? 1 : 0;
}
```
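The handler above only ever reads pointer index 0, so once a second finger touches the screen (or the first finger lifts while another stays down), the cursor can jump between fingers. Below is a minimal, self-contained sketch of one way to gate this: the first finger that goes down acts as the left mouse button, and all other fingers are ignored. The constants copy values from the NDK header `<android/input.h>`. `Pointer`, `SynthEvent`, and `PrimaryTouchTracker` are hypothetical names. `Pointer` stands in for what `AMotionEvent_getPointerCount`, `AMotionEvent_getPointerId`, and `AMotionEvent_getX/Y` report, and `SynthEvent` stands in for the GHOST events pushed above.

```cpp
#include <cstdint>
#include <vector>

// Values copied from the Android NDK header <android/input.h>.
constexpr int32_t kActionMask = 0xff;          // AMOTION_EVENT_ACTION_MASK
constexpr int32_t kPointerIndexMask = 0xff00;  // AMOTION_EVENT_ACTION_POINTER_INDEX_MASK
constexpr int32_t kPointerIndexShift = 8;      // AMOTION_EVENT_ACTION_POINTER_INDEX_SHIFT
constexpr int32_t kActionDown = 0;             // AMOTION_EVENT_ACTION_DOWN
constexpr int32_t kActionUp = 1;               // AMOTION_EVENT_ACTION_UP
constexpr int32_t kActionMove = 2;             // AMOTION_EVENT_ACTION_MOVE
constexpr int32_t kActionCancel = 3;           // AMOTION_EVENT_ACTION_CANCEL
constexpr int32_t kActionPointerDown = 5;      // AMOTION_EVENT_ACTION_POINTER_DOWN
constexpr int32_t kActionPointerUp = 6;        // AMOTION_EVENT_ACTION_POINTER_UP

// Index of the pointer an ACTION_POINTER_DOWN/UP refers to.
constexpr int32_t pointerIndex(int32_t rawAction)
{
  return (rawAction & kPointerIndexMask) >> kPointerIndexShift;
}

// Hypothetical: one pointer of a motion event (id and pixel position).
struct Pointer {
  int32_t id;
  int32_t x;
  int32_t y;
};

// Hypothetical stand-ins for the GHOST events the backend would push.
enum class SynthKind { CursorMove, ButtonDown, ButtonUp };
struct SynthEvent {
  SynthKind kind;
  int32_t x;
  int32_t y;
};

// Emulates the left mouse button with the first finger that touches the
// screen; fingers added later never move the cursor or press the button.
class PrimaryTouchTracker {
 public:
  std::vector<SynthEvent> translate(int32_t rawAction, const std::vector<Pointer> &pointers)
  {
    std::vector<SynthEvent> out;
    if (pointers.empty()) {
      return out;
    }
    switch (rawAction & kActionMask) {
      case kActionDown:  // First finger down: it becomes the primary pointer.
        primaryId_ = pointers[0].id;
        emitButton(out, pointers[0], SynthKind::ButtonDown);
        break;
      case kActionMove:  // Only the primary finger drives the cursor.
        if (const Pointer *p = findPrimary(pointers)) {
          out.push_back({SynthKind::CursorMove, p->x, p->y});
        }
        break;
      case kActionPointerUp: {  // A finger lifted while others remain down.
        const int32_t index = pointerIndex(rawAction);
        if (index < int32_t(pointers.size()) && pointers[index].id == primaryId_) {
          emitButton(out, pointers[index], SynthKind::ButtonUp);
          primaryId_ = -1;
        }
        break;
      }
      case kActionUp:      // Last finger lifted.
      case kActionCancel:  // Gesture aborted; released so no button stays stuck.
        if (const Pointer *p = findPrimary(pointers)) {
          emitButton(out, *p, SynthKind::ButtonUp);
        }
        primaryId_ = -1;
        break;
      default:  // kActionPointerDown and other actions: ignored.
        break;
    }
    return out;
  }

 private:
  const Pointer *findPrimary(const std::vector<Pointer> &pointers) const
  {
    if (primaryId_ < 0) {
      return nullptr;
    }
    for (const Pointer &p : pointers) {
      if (p.id == primaryId_) {
        return &p;
      }
    }
    return nullptr;
  }

  // A cursor move to the pointer position, followed by the button event.
  static void emitButton(std::vector<SynthEvent> &out, const Pointer &p, SynthKind button)
  {
    out.push_back({SynthKind::CursorMove, p.x, p.y});
    out.push_back({button, p.x, p.y});
  }

  int32_t primaryId_ = -1;  // Android pointer ids are non-negative.
};
```

Each returned `SynthEvent` would map one-to-one onto a `GHOST_EventCursor` or `GHOST_EventButton` in `processButtonEvent()`. Keeping the translation free of NDK and GHOST types makes it testable off-device.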

```cpp
// GHOST_SystemAndroid: Android system.
// Constructor: receives the Android environment object (android_app) from
// outside, records the start time, and installs the input callback.
GHOST_SystemAndroid::GHOST_SystemAndroid(void *nativeWindow) : GHOST_System()
{
  timeval tv;
  if (gettimeofday(&tv, nullptr) == -1) {
    GHOST_ASSERT(false, "Could not instantiate timer!");
  }
  m_start_time = uint64_t(tv.tv_sec) * 1000 + tv.tv_usec / 1000;

  m_nativeWindow = nativeWindow;
  struct android_app *app = (struct android_app *)m_nativeWindow;
  app->onInputEvent = engine_handle_input;
}
```
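A `GHOST_EventButton` carries no coordinates. The window manager takes the press position from the preceding cursor events, and some window-manager code also asks GHOST directly where the cursor is through `GHOST_GetCursorPosition()`, which ends up in the system's `getCursorPosition()` override. A touch screen has no hardware cursor, so one option is to remember the last touch position and report that. If the Android system's `getCursorPosition()` currently fails or leaves its outputs untouched, callers would see (0, 0), which is a window corner. That may be worth ruling out in connection with problem (b). `TouchCursorTracker` is a hypothetical helper, not an existing GHOST class:

```cpp
#include <cstdint>

// Hypothetical helper: remembers the last touch position, in the same
// coordinates that are passed to GHOST_EventCursor, so that a
// getCursorPosition() override has something meaningful to return.
class TouchCursorTracker {
 public:
  // Call wherever a cursor-move event is pushed.
  void update(int32_t x, int32_t y)
  {
    x_ = x;
    y_ = y;
    valid_ = true;
  }

  // Same shape as a GHOST cursor query (out-parameters plus a success flag).
  // Returns false, leaving x/y untouched, until the first touch is seen.
  bool get(int32_t &x, int32_t &y) const
  {
    if (!valid_) {
      return false;
    }
    x = x_;
    y = y_;
    return true;
  }

 private:
  int32_t x_ = 0;
  int32_t y_ = 0;
  bool valid_ = false;
};
```

In `GHOST_SystemAndroid`, `update()` would be called next to every `GHOST_EventCursor` push. `getCursorPosition()` would return `GHOST_kSuccess` with the stored values, and `setCursorPosition()` (used when Blender warps the cursor) could simply store the new position.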

```cpp
// GHOST_SystemAndroid: Android system.
// Milliseconds elapsed since the system was created.
uint64_t GHOST_SystemAndroid::getMilliSeconds() const
{
  timeval tv;
  if (gettimeofday(&tv, nullptr) == -1) {
    GHOST_ASSERT(false, "Could not compute time!");
  }
  /* Taking care not to overflow the tv.tv_sec * 1000 */
  return uint64_t(tv.tv_sec) * 1000 + tv.tv_usec / 1000 - m_start_time;
}
```
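`getMilliSeconds()` is based on `gettimeofday()`, which measures wall-clock time. If the system clock is set backwards while Blender runs, `now - m_start_time` underflows to a huge unsigned value. Timestamps matter for touch input. As far as I can tell, Blender's window manager detects double-clicks itself from consecutive press/release events and their timing, so the backend should only need to deliver presses and releases with consistent timestamps rather than synthesize double-taps. Below is a minimal sketch using the monotonic `std::chrono::steady_clock`. `MonotonicMillis` and `nsToRelativeMs` are hypothetical names. The helper assumes that event times such as `AMotionEvent_getEventTime()` (documented in the `System.nanoTime()` time base) are compared against a start time taken on that same clock:

```cpp
#include <chrono>
#include <cstdint>

// Milliseconds elapsed since construction, measured on a monotonic clock.
// steady_clock never runs backwards, so the difference cannot underflow.
class MonotonicMillis {
 public:
  MonotonicMillis() : start_(std::chrono::steady_clock::now()) {}

  uint64_t elapsed() const
  {
    const auto d = std::chrono::steady_clock::now() - start_;
    return uint64_t(std::chrono::duration_cast<std::chrono::milliseconds>(d).count());
  }

 private:
  std::chrono::steady_clock::time_point start_;
};

// Converts a nanosecond timestamp to milliseconds relative to a start
// timestamp on the same clock. Timestamps before the start clamp to 0.
constexpr uint64_t nsToRelativeMs(int64_t eventNs, int64_t startNs)
{
  return eventNs <= startNs ? 0 : uint64_t(eventNs - startNs) / 1000000u;
}
```

Using the event's own timestamp instead of the time at which the callback runs keeps press/release intervals accurate even when input is delivered in batches.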

```cpp
// GHOST_SystemAndroid: Android system.
// Event processing entry point, called by the window manager.
bool GHOST_SystemAndroid::processEvents(bool waitForEvent)
{
  bool hasEventHandled = false;

  /* Process all the events waiting for us. */
  struct android_app *app = (struct android_app *)m_nativeWindow;
  int ident;
  int events;
  struct android_poll_source *source;

  // Loop until all pending events are read, then return so the next frame
  // can be drawn. A timeout of 0 never blocks, so waitForEvent is ignored.
  while ((ident = ALooper_pollAll(0, nullptr, &events, (void **)&source)) >= 0) {
    // Dispatch the event; for input events this calls engine_handle_input().
    if (source != nullptr) {
      source->process(app, source);
      hasEventHandled = true;
    }

    // Sensor data (ident == LOOPER_ID_USER) would be processed here.

    // Check if we are exiting.
    if (app->destroyRequested != 0) {
      return hasEventHandled;
    }
  }

  hasEventHandled |= m_eventManager->getNumEvents() > 0;
  return hasEventHandled;
}
```
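`processEvents()` always polls with a timeout of 0, so `waitForEvent` has no effect. For comparison, GHOST's X11 backend sleeps until the next GHOST timer is due, or indefinitely if none is installed, and then calls `getTimerManager()->fireTimers(getMilliSeconds())`. Below is a minimal sketch of how the `ALooper_pollAll()` timeout could be derived in the same way. `kFireTimeNever` is a stand-in assumed to match `GHOST_kFireTimeNever`. The inputs would come from `getTimerManager()->nextFireTime()` and `getMilliSeconds()`:

```cpp
#include <cstdint>
#include <limits>

// Hypothetical stand-in for GHOST_kFireTimeNever ("no timer installed").
constexpr uint64_t kFireTimeNever = std::numeric_limits<uint64_t>::max();

// Timeout in milliseconds for the first ALooper_pollAll() call:
// 0 polls without blocking, -1 blocks until an event arrives,
// otherwise wait until the next timer is due.
int pollTimeoutMs(bool waitForEvent, uint64_t nextFireTime, uint64_t now)
{
  if (!waitForEvent) {
    return 0;
  }
  if (nextFireTime == kFireTimeNever) {
    return -1;
  }
  if (nextFireTime <= now) {
    return 0;  // A timer is already due.
  }
  const uint64_t wait = nextFireTime - now;
  const uint64_t cap = uint64_t(std::numeric_limits<int>::max());
  return int(wait < cap ? wait : cap);
}
```

Only the first poll of the loop should use this timeout. Later iterations would keep using 0 so that draining the queue never blocks.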