Exciting news!!! This will unlock MANY many doors.

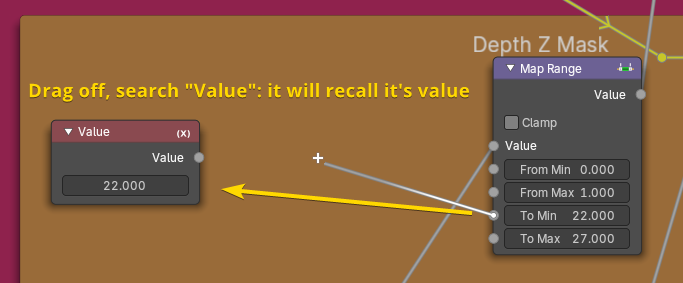

To clarify the first point, here is an image.

With shader and geometry nodes, you drag off a value, then search for the “Value” node and it will “recall” its value. Same with colors.

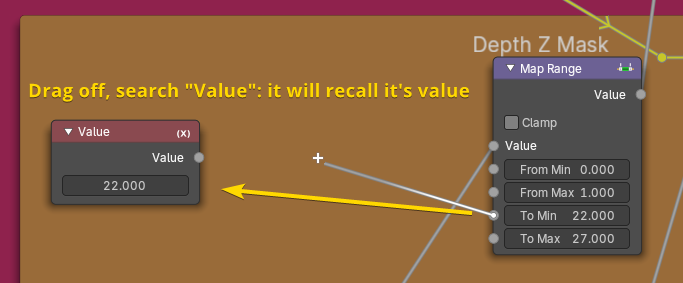

We recently started working on improving the glare node for a better user experience and improved flexibility, as per the Future of Glare node task. You can check what changed in the release notes. But I shall provide a summary in this post.

If you have any feedback on the changes, please let us know.

All node options are now single value inputs that you can link to and use inside node groups. They are “single value”, so they can’t vary per pixel.

Inputs are now organized into three panels, grouped by their function. One panel is for highlights extraction, one panel is for adjusting the generated glare, and one panel is for generating the glare itself.

Two new outputs were added, Glare and Highlights. The Glare output gives the generated glare without the input, and is useful when the user wants to adjust the glare before adding it to the image. The Highlights output gives the areas that are considered highlights when computing the glare, and is useful if the user wants to temporarily check the highlights while doing adjustments or wants to use those as a base for creating a custom glare setup.

A new Strength input replaces the Mix option. It has a soft range of [0, 1] and can be boosted beyond 1 for stronger glare.
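To illustrate how the separate Glare output and the Strength input relate, here is a minimal sketch. It assumes the final image is simply the input plus the glare scaled by Strength; that additive model and the function name `combine_glare` are assumptions for illustration, not the node's actual implementation.

```python
def combine_glare(image, glare, strength=1.0):
    """Add the generated glare onto the image, scaled by strength.

    Both arguments are lists of (r, g, b) tuples of equal length.
    The additive combination is an assumption made for illustration.
    """
    return [
        tuple(c + strength * g for c, g in zip(pixel, glare_pixel))
        for pixel, glare_pixel in zip(image, glare)
    ]


image = [(0.5, 0.5, 0.5)]
glare = [(0.25, 0.5, 1.0)]
print(combine_glare(image, glare, strength=0.0))  # glare disabled
print(combine_glare(image, glare, strength=2.0))  # boosted beyond 1
```

In this model, anything done to the Glare output before adding it back (blurring, tinting, masking) only affects the glare and leaves the original image untouched.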

New Saturation and Tint inputs were added to allow tinting the generated glare.

The Size input for Fog Glow and Bloom is now in the linear [0, 1] range and is relative to the image size. So 1 means it covers the entire image, 0.5 means it covers half the image, 0.25 means it covers a quarter of the image, and so on.

Additionally, for Fog Glow, the range is now continuous and can take any value, not just the 9 discrete values that were previously possible. Bloom still has this limitation though, and we are still looking into improving it.
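A small sketch of what a relative Size means in practice: the same value gives the same coverage at any resolution. Which image dimension the node measures against is not stated here, so using the larger of width and height is an assumption, as is the helper name `glare_extent_in_pixels`.

```python
def glare_extent_in_pixels(size, width, height):
    """Convert a relative Size in [0, 1] to an extent in pixels.

    Assumption: the extent is measured against the larger image dimension.
    """
    if not 0.0 <= size <= 1.0:
        raise ValueError("size must be in the [0, 1] range")
    return size * max(width, height)


# The same relative size scales with the render resolution.
print(glare_extent_in_pixels(0.5, 1920, 1080))
print(glare_extent_in_pixels(0.5, 3840, 2160))
```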

A new Smoothness input controls the smoothness of the highlights. This is similar to the Knee parameter in old EEVEE bloom.

A new Maximum input suppresses very bright highlights, so that no highlight is brighter than the input value. It is disabled when zero. It is similar to the Clamp option in old EEVEE bloom, but is also smoothed by the Smoothness input.
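For readers unfamiliar with knee-style thresholds, the sketch below shows the general idea. The exact formula the node uses is not given in this post, so this uses the quadratic soft-knee curve commonly used for bloom thresholds. The parameter names `threshold`, `knee`, and `maximum` are hypothetical, and the maximum is applied as a hard clamp here, whereas the node also smooths it.

```python
def extract_highlight(brightness, threshold, knee, maximum=0.0):
    """Return the highlight brightness extracted from a pixel.

    A quadratic ramp blends smoothly from zero to the linear response
    over the interval [threshold - knee, threshold + knee].
    """
    if knee <= 0.0:
        # No smoothing: a hard threshold.
        value = max(brightness - threshold, 0.0)
    else:
        ramp = min(max(brightness - threshold + knee, 0.0), 2.0 * knee)
        soft = ramp * ramp / (4.0 * knee)
        value = max(soft, brightness - threshold)
    # A maximum of zero means the clamp is disabled.
    if maximum > 0.0:
        value = min(value, maximum)
    return value


for b in (0.5, 1.0, 1.5, 3.0):
    print(b, extract_highlight(b, threshold=1.0, knee=0.5))
```

With a knee of zero, pixels just above and just below the threshold are treated very differently, which tends to produce flickering and hard-edged highlights; the ramp avoids that.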

The bloom output now conserves energy better and has a more reasonable range, but can still be boosted using the Strength input.

The options that were turned into inputs are now deprecated and will be removed in a future version. We versioned most of those changes, but the versioning could not be 1:1, so expect some differences in the Size and Strength inputs for Bloom and Fog Glow in some files.

Great work on this! I can’t help but think that having a panel for just one parameter (the glare socket) seems like overkill. Couldn’t this be put at the top of the node or even in the adjust panel?

I also think with all these new changes, it would be a good time to finally rename this as the “glow” node. Glare was always a horrible name for what any other compositing software would call glow. A hangover from older Blender naming conventions such as “lamp” instead of light. Using “value” nodes instead of “float” is another example of something that should be retired…maybe for 5.0?

It is true that the Fog Glow and Bloom options have a single option. But other modes have much more, especially streaks. So having a panel is very useful in those cases. And for consistency, we also use panels for all modes.

I think typically Glow is a bit limited in functionality to something similar to Bloom, while Glare is more general, which is what we want since we have many options. And Glare is also still used in the industry. So I don’t personally find this name problematic enough to warrant a rename.

Hello, we are looking for feedback on one of the features we are working on, which will essentially make it possible to repeat images.

When a node has multiple inputs and you connect images that have different sizes or different transformations, the images will need to be Realized such that they all have the same size and transformation so that the node can process them.

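There are two things that can be controlled when doing this realization: the interpolation used to resample the image, and the boundary extension, that is, how the empty areas outside the image are filled.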

Currently, interpolation can be controlled by the transform nodes like Transform, Rotate, and so on. But boundary extension can’t be controlled at the moment. So your images will always have their empty areas filled with a transparent color. And we essentially want to allow the user to change that by specifying that the image should repeat or extend instead.

Our solution is to introduce a new node called Set Realization Options, which allows you to control the two aforementioned options. An initial implementation of that is available in this patch.

As an example, if I am overlaying a logo on an image, by default, Blender will assume areas outside of the logo will be transparent, but if you insert the proposed node before the overlaying happens and enable repeating, you essentially tell Blender that any future realizations of this image should repeat instead of getting transparent areas.
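To make the boundary extension idea concrete, here is a minimal sketch of realizing a small image onto a larger canvas. The mode names `"zero"`, `"repeat"`, and `"extend"` and the functions `sample` and `realize` are hypothetical and only illustrate the concept; they are not the compositor's API.

```python
def sample(image, x, y, extension="zero"):
    """Read pixel (x, y) from a 2D list, handling out-of-bounds coordinates."""
    height, width = len(image), len(image[0])
    if extension == "repeat":
        # Wrap coordinates around so the image tiles infinitely.
        x, y = x % width, y % height
    elif extension == "extend":
        # Clamp coordinates so edge pixels are stretched outwards.
        x = min(max(x, 0), width - 1)
        y = min(max(y, 0), height - 1)
    elif extension == "zero":
        # Current behavior: areas outside the image are transparent.
        if not (0 <= x < width and 0 <= y < height):
            return 0
    else:
        raise ValueError(f"unknown extension mode: {extension}")
    return image[y][x]


def realize(image, out_width, out_height, extension="zero"):
    """Realize the image on a canvas of the given size."""
    return [
        [sample(image, x, y, extension) for x in range(out_width)]
        for y in range(out_height)
    ]


logo = [[1, 2],
        [3, 4]]
for mode in ("zero", "repeat", "extend"):
    print(mode, realize(logo, 4, 3, mode))
```

Note that the repetition happens at lookup time: no larger tiled copy of the logo is ever built, only the final canvas.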

We are asking for feedback because we are still not sure about the design or naming or any of that. We just know that we need to give the user this functionality. Things to consider:

Build for testing can be downloaded here. (Might take some minutes before they are available)

On first thought - no, the concept isn’t quite clear in terms of “this is in the stream, but is not affecting the stream - until some arbitrary future point”.

Perhaps documentation itself can solve that. The user needs to know what nodes/combinations WILL result in the node having an effect.

Perhaps something more directly descriptive of what it’s doing, and less about the math.

In other words, the node is either tiling the input, or not. The fact that resampling is done is a (required) side effect. So, I think the node should be named something relating to tiling / filling, not sampling (and certainly NOT “realizing”.)

That might be a good idea, yes. Although I’ll keep my thought in the previous line, regarding the name. So also not “Set Boundary Extension”.

From a user POV, it all sounds more complex than it should be, I’d say it’s better to have just one node called “Repeat”, “Tiling”, or something like that, that groups all the options related to that functionality.

Also, having a node that doesn’t have any effect until you use other nodes after it sounds like it could cause a lot of confusion and frustration.

So in the screenshot you included, plugging the “Set Realization Options” output directly to the Viewer would act the same as muting that node?

If so, what’s preventing Blender from applying the realization “before” the output node of the compositor pipeline? This way, it wouldn’t feel special to the user anymore (even if technically the application of the effect is delayed until needed)…

What about “Sample Image” or “Resample Image”? Or is there concern that it’s technically not what the node does, thus being a misnomer?

I’m wondering if the user must understand this implementation detail, or if it can just be part of the documentation but otherwise transparent, since it’ll “just work”.

Is it really necessary? I would expect overlaying any image would fill the empty space with transparent pixels, and if you want that image to repeat instead, that’s something you should do upstream, explicitly, with a dedicated node.

It is not an arbitrary future point, it is any node except transform nodes. And in practical cases, it is the node that immediately follows.

We are actually going to add a separate node that tiles/repeats the image. But this node is more than that. It will allow other modes than repeating in the future; this patch is just an initial version. For instance, extended boundary will be possible, like we have in shader image nodes.

If the image is not transformed, then yes. If it is transformed, then the node will have an effect: it will control its interpolation and how empty areas outside of rotated images will be filled.

I am not sure why you specifically mention output nodes. The realization is not delayed until the output, it is delayed until the next non-transform node.

As mentioned, we will add a dedicated node for repeating. But this is nice to have nonetheless, because if you use a repeat node, you need to specify how many repetitions are needed, or use some more complicated node where you can say “repeat until you cover this reference image”. Furthermore, a repeat node will be much less efficient, as you will be creating large images, which take up memory and require processing time. Doing repetition during realization, on the other hand, is free: it does not consume any memory or processing time.

And you can’t really fill the space upstream. Think about it using the above example and you will see that filling with non-transparent areas is not really simple.

Well, that was unexpected. In that case…

Ok then I don’t understand the purpose of this new node at all… I don’t think I can give proper feedback about it

Let us say that we don’t have the node in question, and instead we added a new node Repeat that takes two integers that define the amount of repetition in X and Y. How would you create a watermark node group like the example above? You will find that the node tree will be quite involved for that simple task, and this is why the proposed node is useful.

It is true that it is more tailored for the advanced user, but that’s why I am asking for feedback on how to make it clearer to the average user.

But why would it be complicated? I would expect a repeat node to repeat, with four parameters- X offset, Y offset, X count, and Y count. No node tree, complicated or otherwise, needed.

What @josephhansen said, that’s what I would expect as an average user. I have no advanced knowledge about the technical details of the implementation and, again, as an average user I shouldn’t have to understand any of that to be able to use a repeat/tiling node. It is not an advanced task after all, and it’s pretty simple to achieve in other software. That’s what I don’t get about the current proposal: why the need to have several nodes to do one “simple” operation?

Again, this is just talking from the UI/UX point of view and clearly ignoring the technical aspects, but the simpler you can make the functionality the better. One node for repeat/tiling, that groups all the needed options for it should be the goal IMO.

The type of repetition in the Set Realization Options node is most useful for texture patterns (e.g. lens dirt, camera noise, background pattern). For those you want to fill up all available space with repetition or mirroring.

The other use case is when you have some element that you want to repeat a specific number of times. For that the planned Repeat node makes more sense. It’s just different use cases.

Personally I still think putting these realization options on the Transform nodes wouldn’t be so bad. Usually the empty space to fill in is the direct consequence of a transform, or at least related to positioning two different size images relative to each other.

This sounds like it could be at least more intuitive, but I still can’t understand (literally) why the need to have 2 separate nodes that might confuse the users. Even if the use cases are slightly different, wouldn’t it make more sense to just create one node to cover both cases? It sounds pretty similar to me from the user POV…

There could be a Repeat node with two modes, fill available space and fixed number. The resulting image size and how it combines with other images would be quite different depending on the mode. Might be confusing in its own way, but not sure, maybe that would be clear enough.

Where to put the choice of extend edges vs. fill transparent would still need to be made somewhere as well, and would need to be mutually exclusive with infinite repetition.

Hi @OmarEmaraDev ! This looks amazing! I only had one thought though:

Concerning the single value input sockets: has any thought been given to them having a different appearance than the variable float input sockets? I’m thinking of something similar to how geometry nodes have the hollow and filled socket types. It sure would remove some confusion when starting to expose more options as single value sockets.

Thanks for the awesome work! The bloom itself looks very nice!

As per the realization conversation, I also think this proposal feels too technical of a way for average users to work. I also understand the motivation behind the conversation - automatically realizing the transformation after every transform operation can lead to serious detail loss. Alternatively allow me to suggest:

Other compositing software packages have the concept of concatenating transform nodes.

Basically, there would be nodes that need realized data and nodes that don’t. For example, if I were to do two transformations in a row, the second transform node wouldn’t need the realized data as it could add the transformation matrix to the previous one. The same could also be true for a hypothetical “repeat/tiling” node (as suggested by @JulianPerez ). On the other hand, most nodes do need the realized data.

The solution these other software packages have, which I think is quite clever, is to keep the data unrealized until you reach a node that needs it. When the chain of concatenated transform nodes reaches a node that needs the data realized, it does so automatically using the interpolation method specified in the last transform operation.

For example (assuming the color correct node would need the data realized):

A chain of scale(50%) - cc - scale(200%) nodes would result in detail loss.

A chain of scale(50%) - scale(200%) - cc nodes would not.

A chain of scale(50%,bilinear) - scale(200%,nearest) - cc would be realized with nearest interpolation.
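The first two chains can be sketched on a 1D signal. This is only an illustration under simplifying assumptions: transforms are plain scale factors, realization uses nearest-neighbour lookup, and the function names are made up for the example.

```python
import math


def resample_nearest(signal, scale):
    """Realize a 1D signal at a new scale using nearest-neighbour lookup."""
    out_length = max(1, round(len(signal) * scale))
    return [
        signal[min(int(i / scale), len(signal) - 1)]
        for i in range(out_length)
    ]


def realize_each_step(signal, scales):
    """Realize after every transform, as if a node sat between each pair."""
    for scale in scales:
        signal = resample_nearest(signal, scale)
    return signal


def realize_concatenated(signal, scales):
    """Concatenate all transforms first, then realize only once."""
    return resample_nearest(signal, math.prod(scales))


signal = [1, 2, 3, 4, 5, 6, 7, 8]
print(realize_each_step(signal, [0.5, 2.0]))     # every other sample is lost
print(realize_concatenated(signal, [0.5, 2.0]))  # combined scale is 1.0
```

Realizing at each step throws away half the samples at the 50% scale and can only duplicate what is left at the 200% scale, while the concatenated chain has a combined scale of 1 and returns the signal unchanged.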

I think something like that could be the best solution for the balance between control vs UX.

I hope I’m making myself clear. Otherwise just ask! Thanks for the cool work!