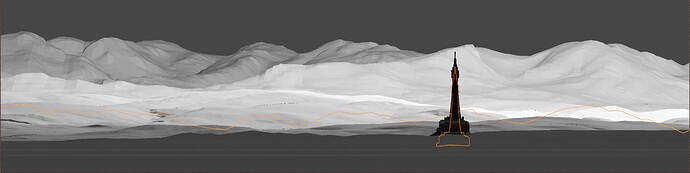

I often need to render images as they would appear under the influence of atmospheric refraction. This effect makes distant objects such as mountains appear higher than they really are. It is caused by the vertical variation of the refractive index of air, which bends light rays down towards the earth. Being able to simulate such an effect in Blender is a useful technical tool, although I concede it won’t be that useful for artists.

I’m writing this post to outline what I want to implement for myself, while staying open to input so that the design aligns better with the goals of Cycles. That way the functionality could be merged and benefit others besides myself, if that’s relevant at all.

I have already made a basic raytracer/shader for this, written in Python for Blender. It works okay, albeit very slowly. To improve the speed, I’m interested in implementing it in a faster engine such as Cycles, which uses OptiX/CUDA.

To trace the ray in the atmosphere, one needs to solve two coupled ordinary differential equations.
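A sketch, assuming the two equations are the standard ray equation of geometric optics, $\frac{d}{ds}\left(n\,\frac{d\mathbf{r}}{ds}\right) = \nabla n$, split into a first-order system with $\mathbf{T} = n\,\frac{d\mathbf{r}}{ds}$ and $s$ the arc length along the ray:

$$
\frac{d\mathbf{r}}{ds} = \frac{\mathbf{T}}{n(\mathbf{r})}, \qquad
\frac{d\mathbf{T}}{ds} = \nabla n(\mathbf{r}), \qquad
\mathbf{r}(0) = \mathbf{r}_{\text{cam}}, \quad
\mathbf{T}(0) = n(\mathbf{r}_{\text{cam}})\,\hat{\mathbf{d}}
$$

Here $\hat{\mathbf{d}}$ is the unit view direction of the camera ray. With these initial conditions $|\mathbf{T}| = n$ along the whole path, so $s$ stays the arc length.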

The integration method I’ve implemented is a fourth-order Runge-Kutta integrator. Instead of shooting a single ray from the camera, it casts a sequence of short straight ray segments that together trace out a curved path. When the path intersects scene objects, the ordinary shading operations of the raytracer take over.
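A minimal sketch of that stepping scheme in plain Python, assuming the first-order system $d\mathbf{r}/ds = \mathbf{T}/n$, $d\mathbf{T}/ds = \nabla n$ with $\mathbf{T} = n\,d\mathbf{r}/ds$. The names `rk4_step`, `trace_curved_ray`, `hit_ground_plane` and the `intersect(p0, p1)` callback are hypothetical; in a real renderer `intersect` would be the ordinary ray/scene intersection test run on each straight segment.

```python
import math
from typing import Callable, Optional, Tuple

Vec = Tuple[float, float, float]


def axpy(a: Vec, b: Vec, s: float) -> Vec:
    """Return a + s * b."""
    return (a[0] + s * b[0], a[1] + s * b[1], a[2] + s * b[2])


def rk4_step(r: Vec, T: Vec, h: float,
             n: Callable[[Vec], float],
             grad_n: Callable[[Vec], Vec]) -> Tuple[Vec, Vec]:
    """One classical RK4 step of dr/ds = T/n(r), dT/ds = grad n(r)."""
    def f(r_: Vec, T_: Vec) -> Tuple[Vec, Vec]:
        inv_n = 1.0 / n(r_)
        return (T_[0] * inv_n, T_[1] * inv_n, T_[2] * inv_n), grad_n(r_)

    k1r, k1T = f(r, T)
    k2r, k2T = f(axpy(r, k1r, h / 2), axpy(T, k1T, h / 2))
    k3r, k3T = f(axpy(r, k2r, h / 2), axpy(T, k2T, h / 2))
    k4r, k4T = f(axpy(r, k3r, h), axpy(T, k3T, h))
    r_new = tuple(r[i] + h / 6 * (k1r[i] + 2 * k2r[i] + 2 * k3r[i] + k4r[i]) for i in range(3))
    T_new = tuple(T[i] + h / 6 * (k1T[i] + 2 * k2T[i] + 2 * k3T[i] + k4T[i]) for i in range(3))
    return r_new, T_new


def trace_curved_ray(origin: Vec, direction: Vec,
                     n: Callable[[Vec], float],
                     grad_n: Callable[[Vec], Vec],
                     intersect: Callable[[Vec, Vec], Optional[Vec]],
                     step: float = 10.0,
                     max_distance: float = 1.0e5) -> Optional[Vec]:
    """March a curved ray as straight segments; return the first hit point or None."""
    length = math.sqrt(sum(c * c for c in direction))
    d = tuple(c / length for c in direction)
    r = origin
    T = tuple(c * n(origin) for c in d)  # |T| = n keeps s equal to arc length
    travelled = 0.0
    while travelled < max_distance:
        h = min(step, max_distance - travelled)
        r_next, T_next = rk4_step(r, T, h, n, grad_n)
        hit = intersect(r, r_next)  # ordinary straight-segment intersection test
        if hit is not None:
            return hit
        r, T = r_next, T_next
        travelled += h
    return None


def hit_ground_plane(p0: Vec, p1: Vec) -> Optional[Vec]:
    """Example intersect callback: the plane z = 0, hit when a segment crosses it downward."""
    if p0[2] > 0.0 >= p1[2]:
        t = p0[2] / (p0[2] - p1[2])
        return tuple(p0[i] + t * (p1[i] - p0[i]) for i in range(3))
    return None
```

With a constant index (`n = 1`, zero gradient) a horizontal ray never reaches the ground, while with an exaggerated index that falls with height, such as `n(p) = 1.0003 - 1e-5 * p[2]`, the same ray bends down and hits the plane roughly 1.4 km away. The `step` and `max_distance` arguments play the role of the step size and maximum ray distance settings.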

The quantities that determine the curvature are the refractive index (a scalar) and its gradient (a vector). These in turn depend on factors like temperature and pressure, both of which are determined by altitude. Since this effect is only substantial in the atmosphere, it makes sense to me to implement it in the renderer itself instead of tying it to data attached to Blender objects. To do this, the renderer must be configured with some notion of which direction is “up”, and at what level the atmospheric gradient starts.
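A minimal sketch of such renderer-level settings, assuming a linear profile $n(h) = n_0 + \frac{dn}{dh}\,h$ above a base level, so that the gradient is simply $\frac{dn}{dh}\,\hat{\mathbf{u}}$ for the unit “up” vector $\hat{\mathbf{u}}$. The `Atmosphere` class and its fields are hypothetical, not an existing Cycles or Blender API. Holding $n$ constant below the base level is only one possible reading of where “the gradient starts”, a linear profile is only reasonable in the lower atmosphere, and the default values are illustrative.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec = Tuple[float, float, float]


@dataclass
class Atmosphere:
    """Hypothetical render-level atmosphere settings (not a Cycles API)."""
    up: Vec = (0.0, 0.0, 1.0)   # world-space "up"; normalized in __post_init__
    base_level: float = 0.0     # altitude along `up` where the gradient starts
    n0: float = 1.000293        # refractive index at base_level (illustrative)
    dn_dh: float = -2.6e-8      # vertical gradient of n per metre (illustrative)

    def __post_init__(self) -> None:
        length = math.sqrt(sum(c * c for c in self.up))
        self.up = tuple(c / length for c in self.up)

    def altitude(self, p: Vec) -> float:
        """Height of point p above the base level, measured along `up`."""
        return sum(p[i] * self.up[i] for i in range(3)) - self.base_level

    def n(self, p: Vec) -> float:
        """Refractive index at p; constant below the base level."""
        h = max(self.altitude(p), 0.0)
        return self.n0 + self.dn_dh * h

    def grad_n(self, p: Vec) -> Vec:
        """Gradient of n at p; zero below the base level, dn_dh * up above it."""
        if self.altitude(p) < 0.0:
            return (0.0, 0.0, 0.0)
        return tuple(self.dn_dh * c for c in self.up)
```

Because the configuration only needs a direction and a level, a scene where altitude is not along world z just sets `up` and `base_level` accordingly.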

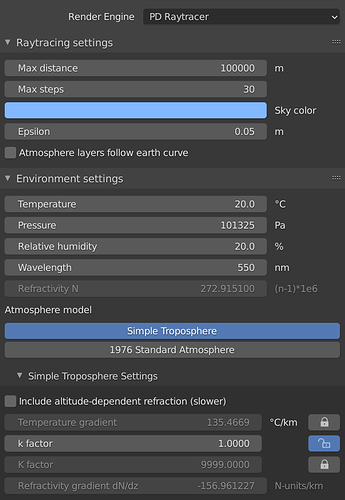

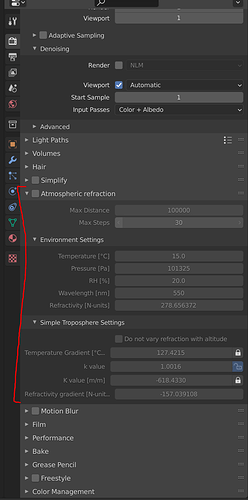

Here is the control panel for my Python prototype renderer. (It assumes the atmosphere starts at z=0 and that z represents altitude.) The user can choose the integration step size and the maximum ray distance. A sub-panel contains the atmosphere settings. These are essentially a calculator that takes surface temperature, pressure, humidity, wavelength, temperature gradient (i.e. lapse rate) and some other factors, and calculates the refractive index and its gradient used for rendering.
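A much-simplified version of that calculator, assuming the approximation $n - 1 \approx c\,P/T$ for visible light in dry air (with $c \approx 7.9\times10^{-5}$ K/hPa), hydrostatic balance and the ideal gas law. Differentiating with respect to height $h$ gives $\frac{dn}{dh} = -c\,\frac{P}{T^2}\left(\frac{g}{R_d} + \frac{dT}{dh}\right)$. Unlike the panel described above, this sketch ignores humidity and wavelength; the names `SurfaceConditions`, `refractive_index`, `refractive_gradient` and `refraction_coefficient` are hypothetical.

```python
from dataclasses import dataclass

G = 9.80665             # standard gravity, m/s^2
R_DRY = 287.05          # specific gas constant of dry air, J/(kg K)
EARTH_RADIUS = 6.371e6  # mean Earth radius, m
C_OPT = 7.9e-5          # assumed optical refractivity coefficient, K/hPa


@dataclass
class SurfaceConditions:
    temperature_c: float = 15.0    # surface temperature, deg C
    pressure_hpa: float = 1013.25  # surface pressure, hPa
    lapse_rate: float = -0.0065    # dT/dh in K/m (negative = cooling with height)


def refractive_index(cond: SurfaceConditions) -> float:
    """n at the surface from n - 1 ~= C_OPT * P / T (dry air, visible light)."""
    T = cond.temperature_c + 273.15
    return 1.0 + C_OPT * cond.pressure_hpa / T


def refractive_gradient(cond: SurfaceConditions) -> float:
    """dn/dh at the surface, using hydrostatic balance dP/dh = -P g / (R_DRY T)."""
    T = cond.temperature_c + 273.15
    return -C_OPT * cond.pressure_hpa / T ** 2 * (G / R_DRY + cond.lapse_rate)


def refraction_coefficient(cond: SurfaceConditions) -> float:
    """k = -R_earth * dn/dh: ray curvature relative to the earth's curvature."""
    return -EARTH_RADIUS * refractive_gradient(cond)
```

For the defaults (15 °C, 1013.25 hPa, -6.5 K/km) the refraction coefficient comes out at roughly 0.17. It grows quickly under temperature inversions (positive `lapse_rate`), which is when distant mountains appear most lifted, and it vanishes when the lapse rate equals $-g/R_d \approx -34$ K/km.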

I have gotten Blender to build with CUDA support, and I will try to get OptiX to build as well. Next, I’ll start digging into the codebase to find the raytracing code path and where it makes sense to put this.

Good tips and ideas are appreciated!