Hi, with the new EEVEE realtime viewport rendering system, a lot of new things are obviously “possible”, but I really, REALLY want / need a realtime rendering feature. This is totally possible and might barely need any real change to the C++ (I have only changed a little so far). So far I’m able to get the 3D viewport image, save it to a file, and get the data URI for websocket use, and the files save for me IN REAL TIME as I play the timeline. Here is my code:

```python
import base64, io, os, bgl, gpu, bpy, threading, time, sys
import numpy as np
import multiprocessing.pool as mpool
from gpu_extras.presets import draw_texture_2d
from PIL import Image
from queue import Queue

myQ = Queue()
finalPath = bpy.context.scene.render.filepath + "hithere.png"
WIDTH = bpy.context.scene.render.resolution_x
HEIGHT = bpy.context.scene.render.resolution_y
offscreen = gpu.types.GPUOffScreen(WIDTH, HEIGHT)


def draw2():
    global finalPath
    global WIDTH
    global HEIGHT
    context = bpy.context
    scene = context.scene
    render = scene.render
    camera = scene.camera
    scaleFactor = 1
    view_matrix = scene.camera.matrix_world.inverted()
    projection_matrix = scene.camera.calc_matrix_camera(
        context.depsgraph, x=WIDTH * scaleFactor, y=HEIGHT * scaleFactor,
        scale_x=scaleFactor, scale_y=scaleFactor)
    offscreen.draw_view3d(
        scene,
        context.view_layer,
        context.space_data,
        context.region,
        view_matrix,
        projection_matrix)
    bgl.glDisable(bgl.GL_DEPTH_TEST)
    draw_texture_2d(offscreen.color_texture, (0, 0), WIDTH * scaleFactor, HEIGHT * scaleFactor)
    buffer = bgl.Buffer(bgl.GL_BYTE, WIDTH * HEIGHT * 4 * scaleFactor * scaleFactor)
    bgl.glReadBuffer(bgl.GL_BACK)
    w = WIDTH * scaleFactor
    h = HEIGHT * scaleFactor
    bgl.glReadPixels(0, 0, w, h, bgl.GL_RGBA, bgl.GL_UNSIGNED_BYTE, buffer)
    print("starting thread for pic:" + finalPath)
    needle = threading.Thread(target=saveIt, args=[buffer, finalPath, WIDTH, HEIGHT])
    needle.daemon = True
    needle.start()
    print("finished starting thread for" + finalPath)


def coby(scene):
    frame = scene.frame_current
    folder = scene.render.filepath
    myFormat = "png"  # scene.render.image_settings.renderformat.lower()
    outputPath = os.path.join(folder, "%05d.%s" % (frame, myFormat))
    global finalPath
    finalPath = outputPath


h = bpy.types.SpaceView3D.draw_handler_add(draw2, (), 'WINDOW', 'POST_PIXEL')
bpy.app.handlers.frame_change_pre.clear()
bpy.app.handlers.frame_change_pre.append(coby)


def saveIt(buffer, path, width, height):
    print("now I'm in the actual thread! SO exciting (for picture: " + path + ")")
    array = np.asarray(buffer, dtype=np.uint8)
    myBytes = array.tobytes()
    im = Image.frombytes("RGBA", (width, height), myBytes)
    rawBytes = io.BytesIO()
    im.save(rawBytes, "PNG")
    rawBytes.seek(0)
    base64Encoded = base64.b64encode(rawBytes.read())
    txt = "data:image/png;base64," + base64Encoded.decode()
    filebytes = base64.decodebytes(base64Encoded)
    myQ.put(filebytes)
    f = open(path, "wb")
    f.write(filebytes)
    while(myQ.qsize()):
        f.write(myQ.get())
    f.close()
    print("gotmeThis time for picture:" + path)
```
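As a side note, the PNG encoding and data URI part of `saveIt` might not strictly need PIL. Below is a minimal sketch using only the standard library (`zlib`, `struct`, `base64`). The names `rgba_to_png` and `png_data_uri` are placeholders I made up, not Blender or PIL functions, and I'm assuming the pixel data is tightly packed 8-bit RGBA with the bottom row first, which is the order `glReadPixels` returns. I have only tried this on tiny test data, not inside Blender.

```python
import base64
import struct
import zlib


def _png_chunk(tag, data):
    # A PNG chunk is: payload length, 4-byte tag, payload, CRC32 over tag + payload.
    crc = zlib.crc32(tag + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + tag + data + struct.pack(">I", crc)


def rgba_to_png(pixels, width, height, flip_rows=True):
    """Encode tightly packed 8-bit RGBA bytes as a PNG byte string (hypothetical helper)."""
    stride = width * 4
    if len(pixels) != stride * height:
        raise ValueError("pixel data does not match width * height * 4")
    rows = [pixels[y * stride:(y + 1) * stride] for y in range(height)]
    if flip_rows:
        # Assumption: input is bottom row first (OpenGL order); PNG wants top row first.
        rows.reverse()
    # Every scanline is prefixed with filter type 0 ("None").
    raw = b"".join(b"\x00" + bytes(row) for row in rows)
    # Bit depth 8, color type 6 (RGBA), default compression/filter, no interlace.
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 6, 0, 0, 0)
    return (b"\x89PNG\r\n\x1a\n"
            + _png_chunk(b"IHDR", ihdr)
            + _png_chunk(b"IDAT", zlib.compress(raw))
            + _png_chunk(b"IEND", b""))


def png_data_uri(png_bytes):
    """Wrap PNG bytes in a data URI, e.g. for sending over a websocket."""
    return "data:image/png;base64," + base64.b64encode(png_bytes).decode("ascii")


# Tiny 2x2 example, bottom row first.
pixels = bytes([
    0, 0, 255, 255,   0, 0, 0, 0,       # bottom row: blue, fully transparent
    255, 0, 0, 255,   0, 255, 0, 255,   # top row: red, green
])
png = rgba_to_png(pixels, 2, 2)
uri = png_data_uri(png)
```

The same `png` bytes could be written straight to the frame's file and the `uri` string sent over the websocket, so the base64 encode / decode round trip would not be needed. I have not measured whether this is fast enough at full resolution.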

It’s not ideal for many reasons. First, I had to change some C++ code as described in this post (look at the bottom of the “EDIT 4” section): [Python: get “image” of 3D view for streaming / realtime EEVEE rendering](https://blender.stackexchange.com/questions/128174/python-get-image-of-3d-view-for-streaming-realtime-eevee-rendering)

Basically, just add this to bgl.c:

```c
static int itemsize_by_buffer_type(int buffer_type)
{
  if (buffer_type == GL_BYTE) return sizeof(GLbyte);
  if (buffer_type == GL_SHORT) return sizeof(GLshort);
  if (buffer_type == GL_INT) return sizeof(GLint);
  if (buffer_type == GL_FLOAT) return sizeof(GLfloat);
  return -1; /* should never happen */
}

static const char *bp_format_from_buffer_type(int type)
{
  if (type == GL_BYTE) return "b";
  if (type == GL_SHORT) return "h";
  if (type == GL_INT) return "i";
  if (type == GL_FLOAT) return "f";
  return NULL;
}

static int BPy_Buffer_getbuffer(Buffer *self, Py_buffer *view, int flags)
{
  void* buffer = self->buf.asvoid;
  int itemsize = itemsize_by_buffer_type(self->type);
  // Number of entries in the buffer
  const unsigned long n = *self->dimensions;
  unsigned long length = itemsize * n;
  if (PyBuffer_FillInfo(view, (PyObject *)self, buffer, length, false, flags) == -1) {
    return -1;
  }
  view->itemsize = itemsize;
  view->format = (char*)bp_format_from_buffer_type(self->type);
  Py_ssize_t *shape = MEM_mallocN(sizeof(Py_ssize_t), __func__);
  shape[0] = n;
  view->shape = shape;
  return 0;
}

static void BPy_Buffer_releasebuffer(Buffer *UNUSED(self), Py_buffer *view)
{
  MEM_freeN(view->shape);
}

static PyBufferProcs BPy_Buffer_Buffer = {
  (getbufferproc)BPy_Buffer_getbuffer,
  (releasebufferproc)BPy_Buffer_releasebuffer,
};
```

and then change this:

```c
/* Functions to access object as input/output buffer */
NULL, /* PyBufferProcs *tp_as_buffer; */
```

to this:

```c
/* Functions to access object as input/output buffer */
&BPy_Buffer_Buffer, /* PyBufferProcs *tp_as_buffer; */
```

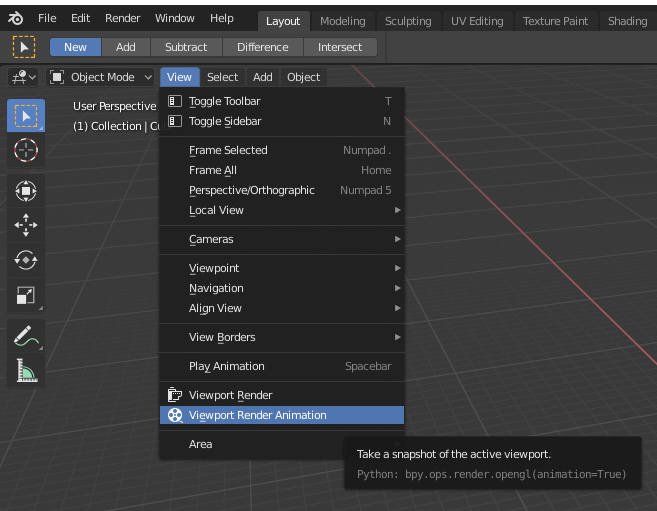

There's probably another way to convert the Blender buffer to bytes. I ALSO had to install the Python module PIL manually to save the image, though there's probably another way to do that too. Anyway, if you do those two things, you should be able to test my code. I made a simple animation of 600+ frames with the camera moving, and when I start from frame 0 and simply hit the play button, I can watch my /tmp folder instantly fill up with images. The problem: the image only captures what is actually SHOWN in the viewport, so if the 3D viewport is partly covered by another window (which it almost always is), then the picture comes out looking like this:

Notice that the top part of the picture looks somewhat normal, but the entire bottom is smeared. That is because the entire bottom section is covered by another window (the Python editor).
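Coming back to the bgl.c patch for a moment: one way to avoid it might be to go through a plain Python list instead of the buffer protocol. A `GL_BYTE` buffer hands its values back as signed numbers (-128..127), so they have to be reinterpreted as raw bytes. Here is a minimal sketch of just that conversion. The helper name `signed_bytes_to_raw` is my own placeholder, and feeding it `buffer.to_list()` inside Blender is an untested assumption that is likely too slow for realtime at full resolution.

```python
from array import array


def signed_bytes_to_raw(values):
    """Reinterpret signed 8-bit ints (-128..127) as the matching raw bytes."""
    # array('b') stores signed chars; tobytes() returns the same memory as raw bytes.
    return array("b", values).tobytes()


# Inside Blender this would hypothetically be: signed_bytes_to_raw(buffer.to_list())
sample = [-1, 0, 127, -128]
raw = signed_bytes_to_raw(sample)
```

The resulting bytes could then go to `Image.frombytes` in place of the `np.asarray(buffer, dtype=np.uint8)` step.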

So what I need to get this working is actually VERY SIMPLE: a way to "resize the window" behind the scenes, preferably so the user never even sees a new window open (though if I have to open an actual window, that would be OK too). Then I need to be able to grab the 3D image of that window, similar to how the render function works.

I made a post on the Blender Stack Exchange (linked above), but I didn't really get the answer I was looking for. The main answer just said to use the **`gi`** library for screenshots, but first of all it's almost impossible to install on my computer, and I don't know how to get an EXACT resolution (like 1920x1080) from it. Also, IDK if the screenshot would have an alpha background, whereas this solution, so far, does have alpha in the picture.

The bulk of this approach is basically from the Blender docs (linked in the above Stack Exchange post, at the beginning of "_**EDIT 3**_").

I really need someone who understands offscreen rendering properly. Is there a way to make the camera view actually capture at 1920x1080, rather than just scaling / pixelating the OpenGL output? And besides the pixelation, there is still the problem of the other windows overlaying the viewport.

So: how can I (either by editing the C++ more or by expanding this Python script) get the 3D camera-view image of the viewport at 1920x1080, without any windows blocking it? Preferably I'd like to build on the method I have so far, and not use some other third-party screenshot addon, since I also want to get the data URI for use with a websocket. But the main thing is:

**_How can I get a 1920x1080 resolution image of the camera view of the rendered scene?_**