Hi!

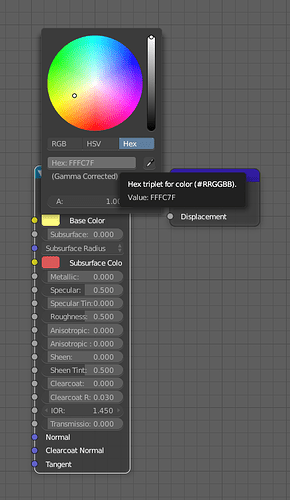

I would like to know how to get the Hex (Gamma Corrected) value of a color in Python.

By reading the default_value of the input I can get the floating-point color, but I couldn't find a way to get the gamma-corrected hex value, or at least the gamma-corrected floating-point color.

I don’t think there is an API function for it. But it’s basically this, converting linear to sRGB and then to hex:

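    import math

    def to_hex(c):
        # Convert one linear channel value to sRGB, then to a hex string such as '0x7f'.
        if c < 0.0031308:
            # Linear segment of the sRGB curve; negative input clamps to 0.
            srgb = 0.0 if c < 0.0 else c * 12.92
        else:
            srgb = 1.055 * math.pow(c, 1.0 / 2.4) - 0.055
        # Scale to 0-255, round to nearest, and clamp before formatting.
        return hex(max(min(int(srgb * 255 + 0.5), 255), 0))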

This worked perfectly.

Thanks!

Thanks a lot for this! Do you also have the from_hex version? I feel like these should be documented somewhere or available through API.
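For reference, a minimal sketch of what a from_hex could look like, assuming the standard sRGB decoding curve (the inverse of the formula above) and a six-digit string such as "7F7F7F" with an optional leading "#". The names `srgb_to_linear` and `from_hex` are made up for this example and are not part of Blender's API.

```python
def srgb_to_linear(s):
    # Inverse of the sRGB encoding: s is an sRGB channel value in 0.0-1.0.
    if s <= 0.04045:
        return s / 12.92
    return ((s + 0.055) / 1.055) ** 2.4


def from_hex(hex_string):
    # Hypothetical helper: "#RRGGBB" or "RRGGBB" -> linear (r, g, b) floats.
    digits = hex_string.strip().lstrip("#")
    if len(digits) != 6:
        raise ValueError("expected six hex digits, e.g. '7F7F7F'")
    # Read two hex digits per channel, normalise to 0.0-1.0, then linearise.
    return tuple(
        srgb_to_linear(int(digits[i:i + 2], 16) / 255.0) for i in (0, 2, 4)
    )
```

With this sketch, `from_hex("7F7F7F")` gives roughly 0.212 per channel, which matches what the color picker shows for that hex value.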

No, they should not be available through the UI as hex codes are meaningless.

They are also from a bygone era.

As someone that has had to explain colour to smaller teams, I can state anecdotally that hex codes will cause more confusion than aid. They should be avoided at all costs, doubly so if someone is thinking about coding them into an API.

Why the strong feeling against hex codes for colors?

I think they have one very handy attribute: they can be copied and pasted, more or less independently of the application.

Looking at this function with … various magic numbers … I would prefer to have it somewhere in the API as well.

About 15+ years of correcting what I am about to correct in your post.

This is unequivocally false.

You have to remember that a display referred value is a light ratio. It communicates something about the ratio between the other channels. What doesn’t it communicate?

- The colour of the lights in question.

- The intensity mapping that the ratios represent.

That is, with no additional information, they are pure garbage values. Worse, they are in an archaic hexadecimal format which makes them seem magical, as opposed to just the really dumb idea they are.

So the problem, specifically with regard to imaging applications such as Blender, is that they are legacy notions tied to sRGB. If you were to input the precise values into, say, an Apple product from 2016 onwards, the light ratios are applied to a completely different set of lights, and the resulting colour is bogus. In Blender, with wider gamut rendering becoming more and more common, they are equally useless.

So TL;DR: They are junk. Don’t use them. Don’t refer to them. Don’t help spread more confusion among the poor people that deserve better.

I am sorry, but that is not entirely true.

- They are not garbage values, they are just three integer values written one after another in hexadecimal format, e.g. FFFFFF being 255, 255, 255 (pure white color).

- Hexadecimal format is not archaic. It is the industry-standard format for displaying binary data, because a byte, with values ranging from 0 to 255, can be represented with just two hexadecimal digits, e.g. 7F for 127. So, hexadecimal is used a lot by programmers.

What you are trying to say has nothing to do with hex; it relates to different color space problems. Blender, as far as I understand, uses a linear color space for its color pickers. The output hex value is the color converted from the linear color space to the sRGB color space. The hex string, thus, is useful for quickly copying and exchanging the color between applications, because most of them expect an sRGB value.
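To illustrate the two points above, here is a small sketch using only the Python standard library. It shows that a hex colour string is just the channel bytes written in base 16; it says nothing about which colour space those bytes are meant for. The function names are invented for this example.

```python
def hex_to_channels(hex_string):
    # "FFFFFF" -> (255, 255, 255); each pair of hex digits is one byte.
    return tuple(bytes.fromhex(hex_string.lstrip("#")))


def channels_to_hex(channels):
    # (127, 127, 127) -> "7F7F7F"; each byte becomes two uppercase hex digits.
    return "".join(format(value, "02X") for value in channels)
```

The same helpers work unchanged for four-channel RGBA strings such as FFFFFFFF.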

The reason why it should be exposed in the API is that, for example, when writing an importer/exporter for a game format, one might need to import some colors. And… Blender expects them to be in linear color space, so they have to be converted before setting them on properties, using the reverse of Brecht's formula from the post above. Additionally, when trying to map values from GLSL or other shaders to Blender's nodes, the input colors have to be converted back to the sRGB color space, since the math from the shaders is meant to work in that space, not linear.

In fact, when writing my own addon to support a custom game format, I had to deal with these issues a lot, as they are not clearly documented anywhere. There is no information specifying the color space used by color pickers (e.g. the color picker from vertex paint somehow uses sRGB, but the rest, including custom ones, seem to be using linear).

Linear isn’t a colour space, and sRGB has very little basis in many other spaces. I assure you it is garbage having hex codes around from sRGB when you are working in ACES or some other space.

False on several levels. You did not understand what I stated.

Do you mean like Unity or Unreal where the reference space is set to ACES frequently and the codes have no meaning?

Linear is not a colour space, and again, sRGB doesn’t have much bearing on contemporary game engines.

If you are serious about your work, learn more about this domain, as it is vastly important.

I am not here to engage in a discussion over terminology, but it is often referred to as “linear color space”. Gamma and Linear Space - What They Are and How They Differ — KinematicSoup Technologies Inc.

It does not change anything in what I previously said, anyway.

The raw bytes for RGBA color white (255, 255, 255, 255) would be literally FFFFFFFF when written into a binary file and displayed in a hexadecimal representation.

The hexadecimal representation itself has nothing to do with the color space, color model or whatever else is used. It is just a way of presenting integers in a different numeral system. The reason hexadecimal strings are used is that they provide an easy way to copy and paste a color between different apps as a single string, as opposed to copying channel values individually. This is used a lot by graphic editors, 3D editors and much other software. It is also widely used on the web.

There are also other game engines besides these two. GLSL shaders by default at least operate on regular RGB colors, for example. That means that if I divide 255 by, say, 2, I am gonna get half the channel: 127, or 0.5 in float representation. In Blender's case, color pickers operate in a different space, and the value for RGBA (0.5, 0.5, 0.5, 1.0) will be (0.212, 0.212, 0.212, 1.0). The hex output there is gamma corrected and appears, as expected, as 7F7F7F.

That is why it is important to have this exposed in the Python API. When you are exporting the color to other software, a binary file format or anything else, it is very likely that you need this representation, be it hex or decimal, not the other one, as gamma handling may differ between applications and implementations.
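The numbers above can be checked with a short sketch. It assumes the same linear-to-sRGB formula posted earlier in the thread and applies it to all three channels at once; `linear_to_srgb` and `linear_color_to_hex` are names made up for this example, not Blender API calls.

```python
def linear_to_srgb(c):
    # Standard sRGB encoding of one linear channel value; negatives clamp to 0.
    if c < 0.0031308:
        return 0.0 if c < 0.0 else c * 12.92
    return 1.055 * c ** (1.0 / 2.4) - 0.055


def linear_color_to_hex(rgb):
    # Hypothetical helper: linear (r, g, b) floats -> "RRGGBB" string.
    parts = []
    for channel in rgb:
        # Scale to 0-255, round to nearest, and clamp to the byte range.
        value = max(min(int(linear_to_srgb(channel) * 255 + 0.5), 255), 0)
        parts.append(format(value, "02X"))
    return "".join(parts)


print(linear_color_to_hex((0.212, 0.212, 0.212)))  # 7F7F7F
print(linear_color_to_hex((0.5, 0.5, 0.5)))        # BCBCBC
```

So a picker value of 0.212 corresponds to the hex 7F7F7F, while a linear 0.5 is a noticeably lighter BCBCBC.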

Do some homework first then we can discuss things.

Yes, the best way to end an argument is definitely to vaguely claim someone does not know things while providing no real arguments.

Apologies. In this instance, there is no other means to communicate it.

I can easily step you through the core concepts, but that’s up to you.

What does a value from an RGB triplet represent?

It represents the intensity of the red, green, or blue component.

HEX values are still widely used in corporate style sheets and websites, so it is useful to know how to set up Blender so that the colours in a rendered logo from Cycles or Eevee match elements that are specified in HEX values.

Also, to quote the manual for Unity HDR Picker and some perspective: Unity - Manual: HDR color picker

Whenever you close the HDR Color window and reopen it, the window derives the color channel and intensity values from the color you are editing. Because of this, you might see slightly different values for the color channels in HSV and RGB 0–255 mode or for the Intensity slider, even though the color channel values in RGB 0–1.0 mode are the same as the last time you edited the color.

Yeah good luck with that.

Maybe try writing them down and then taking a photo of them?