Right, a boolean attribute could exist on any domain (vertex, edge, face), so there can be selections of any of these types of elements. Any node that supports more than one of these domains would try to use the domain of the input attribute. If the node only supports one domain, the attribute can be interpolated or converted to the correct domain.
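To make the domain conversion a bit more concrete, here is a minimal pure-Python sketch of how a boolean selection might be moved between the vertex and face domains. The function names and the "all vertices" / "any face" rules are assumptions for illustration only, not necessarily what the geometry nodes code does.

```python
from typing import List, Sequence


def point_to_face_selection(point_sel: Sequence[bool],
                            faces: Sequence[Sequence[int]]) -> List[bool]:
    """Assumed rule: a face is selected only if all of its vertices are."""
    return [all(point_sel[v] for v in face) for face in faces]


def face_to_point_selection(face_sel: Sequence[bool],
                            faces: Sequence[Sequence[int]],
                            point_count: int) -> List[bool]:
    """Assumed rule: a vertex is selected if any face using it is selected."""
    result = [False] * point_count
    for selected, face in zip(face_sel, faces):
        if selected:
            for v in face:
                result[v] = True
    return result


# Two quads sharing an edge, vertices numbered 0..5.
faces = [(0, 1, 4, 3), (1, 2, 5, 4)]
point_sel = [True, True, False, True, True, False]

face_sel = point_to_face_selection(point_sel, faces)   # [True, False]
back = face_to_point_selection(face_sel, faces, 6)
```

A node that only works on faces could run the first conversion on its input, while a node that supports both domains would simply use the selection as it arrives.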

The attribute input sockets are just string sockets for the attribute names. They’re exposed just for the ease of plugging in the same name to multiple positions and like @ManuelGrad said, to expose the names to the modifier.

I do hope that we can add some sort of static analysis on the node tree to provide a drop down of the attributes available at a certain point in the tree. For now we’ll just have to type them in manually or use copy and paste though.

A full-blown script node might not even be necessary, just an expression node might well do. The whole node tree in a sense is a script/program of a sort already.
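As a rough idea of what an expression node could do, here is a small sketch that evaluates an arithmetic expression once per element, with attribute names bound as variables. Everything here (`evaluate_expression`, the attribute names `scale` and `offset`) is hypothetical, and it uses Python's `ast` module only to illustrate the concept; an actual node would presumably not go through Python at all.

```python
import ast
import operator

# Only plain arithmetic is allowed; anything else is rejected.
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}
_UNARY_OPS = {ast.USub: operator.neg, ast.UAdd: operator.pos}


def _eval(node, variables):
    """Recursively evaluate a restricted expression tree."""
    if isinstance(node, ast.Expression):
        return _eval(node.body, variables)
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.Name):
        return variables[node.id]  # KeyError for unknown attribute names
    if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
        return _BIN_OPS[type(node.op)](_eval(node.left, variables),
                                       _eval(node.right, variables))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
        return _UNARY_OPS[type(node.op)](_eval(node.operand, variables))
    raise ValueError(f"unsupported expression element: {type(node).__name__}")


def evaluate_expression(expression, attributes):
    """Evaluate `expression` per element over equally sized attribute lists."""
    tree = ast.parse(expression, mode="eval")
    count = len(next(iter(attributes.values())))
    return [
        _eval(tree, {name: values[i] for name, values in attributes.items()})
        for i in range(count)
    ]


result = evaluate_expression(
    "scale * 2 + offset",
    {"scale": [1.0, 2.0, 3.0], "offset": [0.5, 0.5, 0.5]},
)
# result == [2.5, 4.5, 6.5]
```

One line of text like this would replace a small chain of math nodes, which is where the simplification of node trees would come from.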

OK, so I was completely misled! Not sure if it’s because I missed something important. I’m also not sure if I’m the only person who will fall into the trap of thinking that way. Might be a thing to consider in UI design.

Why are the sockets for floats a bright greyish yellow instead of a pure grey like in the shader editor?

What’s the reason for this?

The script node in both Sverchok and Animation Nodes is extremely useful so I would definitely want to see one in GNodes too! To be able to run some custom script mid-tree is so valuable for procedural modelling

A full-blown script node might not even be necessary, just an expression node might well do.

This would be so useful to simplify node trees, also in the material shaders.

There definitely needs to be the ability to run some sort of user defined code over geometry… The problem is that the only scripting language Blender really has for working with geometry is Python… Which can be extremely slow, a bit of a blunt instrument feature wise, and not very self contained for just working with simple operations over geometry.

So maybe script nodes should be encouraged only to be used for special use cases like working with files/external code while node based visual scripting can tackle daily tasks like attribute logic.

Question here:

Right now, using a transform node after instancing objects onto something rotates the objects as if “only locations” were on. These cones are being instanced from a plane; seeing as there is currently no option for the instances to inherit the target mesh’s normals, this is the behavior I would expect if I were to manually rotate the plane. When it comes to the transform node, however, I think I would expect it to treat whatever is plugged into it as a single object with an origin point in the middle, which I think should allow it to simply be aligned to world space.

I know that might open up a whole can of worms for how orientation is dealt with, but I was curious if this is currently intended behavior, or something to look over later as the design continues.

I know a lot of things in this thread will inevitably be things you already know and are planning on, so we all appreciate you putting up with us.

I ran the same test as the other day, but this time with today’s code. I also changed the node tree a bit to avoid generating the planar grid, and wow… it has improved. I’m not sure if you did something or if the geometry nodes branch had something that was killing performance, but before it was unusable; right now it’s slow… but “usable”.

We are talking about the distribution of 1,000,000 spheres with 1,920 triangles each. The other day it was awful; today we are talking about 1.3 / 1.4 fps. Not shiny, but at least you can move around between those 1,920,000,000 triangles. I hope it can be further improved, but it’s a good step in the right direction.

I also tested the same simple tree from the other day, and it has improved to the same speed too, so clearly you did something.

I also have to say that I tested it on a TR-2990wx instead of the i7-5960X, BUT I only see one thread being used, so the i7-5960X should be faster in the end.

I’ll repeat the test tomorrow on the i7-5960X.

Regarding the colors: is there something planned that might use the different socket shapes that are possible besides circle?

While I generally don’t really care, I find it odd that shader sockets are now red so that geometry sockets can be green.

I think it’s more thematically consistent; object data properties are green, after all. Makes sense for geometry in that context, I think! And the material icon color is red too, so it makes sense that the shader output/input would be red.

I agree. Orientation of points is currently what is preventing users from really using geometry nodes for serious stuff.

Most of the time, we need oriented instances.

If that is not possible, we have no other choice than to continue using particles or dupliverts and duplifaces.

A random rotation or instances pointing to the sky does not cover regular use cases.

Regular use cases are instances following the instancer’s global orientation or the normals of the instancer’s surfaces.

A random rotation or instances pointing to the sky does not cover regular use cases.

Regular use cases are instances following the instancer’s global orientation or the normals of the instancer’s surfaces.

Let’s agree that both ways are “regular”.

Particles following the emitter normal seems too basic not to be implemented. I mean, it’s a basic necessity; I’m sure it’s at the top of the devs’ TODO list.

Particles following the emitter’s *local orientation is already possible, see below.

*(you used global emitter, but I suppose you meant local space emitter?)

But logically, the rotation seems to be constantly recalculated for each particle if you are rotating the terrain, for example? That might be a slow method. It might be best to use another one that transforms before distribution or after instancing.

Or it might be best to just set the point cloud object as the terrain child? This is also working.

But this will have an impact on the particles… it’s another workflow possibility, with a bunch of major consequences for particle behavior.

For example, it will be harder to get particles back to global orientation. That could also be resolved with the same sync technique used above (if we multiply the emitter object’s orientation vector by -1 to cancel out the rotation, see gif below).

Basically, changing the point cloud location/rotation/scale (by making it a child of the terrain) will also influence particle density, rotation and scale. I’m not sure yet if it’s the best way to scatter. It might be best to keep all particle settings global, and always lock the point cloud object transforms to location(0,0,0), rotation(0,0,0) and scale(1,1,1)? Playing with point cloud transforms adds a ton of confusion…

Here I created a simple animated .blend, handy for testing normal/local/global rotation.

The trickiest thing is to add two rotations together, let’s say the rotation synced with the local object rotation (like the first example gif) + a random rotation in XY. How to do that?

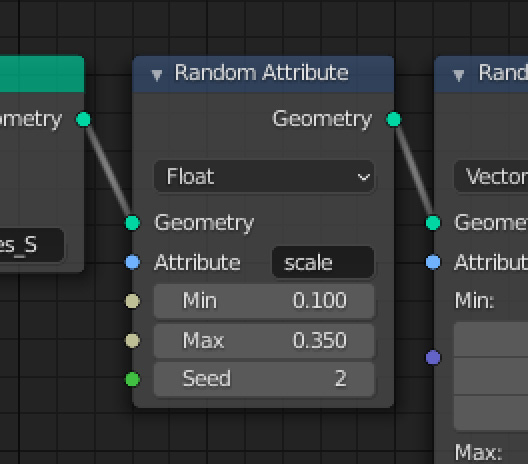
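On the question of adding two rotations together: one common approach (not something the current nodes necessarily expose) is to compose the rotations as matrices instead of summing Euler angles. Below is a minimal pure-Python sketch under that assumption. The helper names are made up, and the 90° angles are just example values standing in for the object rotation and a random per-point tilt.

```python
import math


def rot_x(angle):
    """3x3 matrix for a rotation of `angle` radians around the X axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_z(angle):
    """3x3 matrix for a rotation of `angle` radians around the Z axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a, b):
    """Matrix product a @ b for 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transpose(m):
    """Transpose; for a pure rotation matrix this is also its inverse."""
    return [[m[j][i] for j in range(3)] for i in range(3)]


def apply(m, v):
    """Rotate the 3D vector v by the matrix m."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


object_rot = rot_z(math.radians(90))   # rotation synced with the emitter object
random_tilt = rot_x(math.radians(90))  # stand-in for a random per-point tilt

# The tilt is applied first (in the instance's local space), then the object rotation.
combined = matmul(object_rot, random_tilt)
up = apply(combined, [0.0, 0.0, 1.0])  # approximately [1, 0, 0]

# Cancelling the object rotation again means multiplying by its inverse.
cancelled = matmul(transpose(object_rot), combined)  # approximately random_tilt
```

The order matters here, and cancelling a rotation is done with its inverse; negating Euler angles only gives the inverse in simple cases such as a rotation around a single axis.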

Funny that you’re using a random attribute node with the same minimum and maximum to set a constant attribute value. We should probably add a “Fill Attribute” node!

I did a bit more testing with a vertex-group masking workflow.

This is a classic camera-clipping vertex-group density setup that uses dynamic paint, nothing fancy.

Now that we have the new particle density system, it could potentially work with animated cameras, greatly optimizing the viewport for huge scenes.

But unfortunately, as soon as we add random rotation and scale attributes, the technique is suddenly not usable anymore. I wonder what the trick is, then. Anyone have ideas?

Here’s a .blend if anyone is interested in the setup, and to potentially resolve the random rotation and scale attribute seeding problem.

*(Note that I use a handler script to follow active-camera movement.)

Not much detail in this task, but it’s for solving this problem: https://developer.blender.org/T83108

We were discussing methods of solving this but I don’t think we reached a conclusion today. Personally I like the idea of evaluating 3D white noise based on the position attribute. That might need to be a new node though, not sure.
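To illustrate the white noise idea, here is a small sketch that derives a pseudo-random value from a point's position rather than from its index, so a point keeps its value even when other points are masked out. This is only my reading of the idea: the function name `white_noise_3d`, the SHA-256 hash and the rounding precision are all assumptions, not what the node would actually use.

```python
import hashlib
import struct


def white_noise_3d(position, seed=0, precision=4):
    """Return a value in [0, 1) that depends only on position and seed."""
    # Quantize so tiny floating-point differences do not change the result;
    # adding 0.0 turns -0.0 into 0.0 so both hash the same way.
    key = [round(c, precision) + 0.0 for c in position]
    data = struct.pack("<3di", key[0], key[1], key[2], seed)
    digest = hashlib.sha256(data).digest()
    # Keep 53 bits so the result is an exact float strictly below 1.0.
    return (int.from_bytes(digest[:8], "little") >> 11) / 2**53


points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 2.5, 0.0)]
visible = [points[0], points[2]]  # pretend the camera mask removed one point

full = {p: white_noise_3d(p) for p in points}
culled = {p: white_noise_3d(p) for p in visible}
# Surviving points get identical values in both runs.
```

With index-based randomness, removing a point would shift the values of every point after it; here the values only change if a point itself moves.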

Correct.

I wanted to express the fact that people more rarely choose to make things random.

*(you used global emitter, but I suppose you meant local space emitter?)

Yes. I was thinking about the whole, which can currently be transformed by object properties but cannot have any kind of delta transform from nodes.

And I wanted to distinguish that from the individual particle normal. For example, a little planet project where each building and each tree points in a unique direction that corresponds to the surface normal of the sphere that is the emitter.

They only have 2 weeks before end of Bcon1.

I am wondering if it would be possible to use the Camera Culling margin from the Simplify panel of the Cycles Render properties to obtain an EEVEE equivalent.

They only have 2 weeks before end of Bcon1.

Screwing up/rushing important features / future foundation of everything-node to get everything done in time for an imaginary deadline?

I sure hope not. I heard it was done in the past, so I guess you have your reasons to believe that.