The guarantee right now is only that elements with the same id will get the same object.

If users have no way to know for sure that N id == X object, then we are possibly missing the following opportunities:

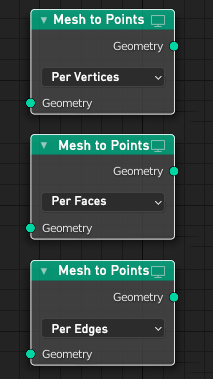

Control the instance spawn rate (%) by id

Control which instances spawn using color values

Control which instances spawn from the point scale attribute (important for realism)

Control which instances spawn using texture color values (very important for green walls, flower patterns, etc.)

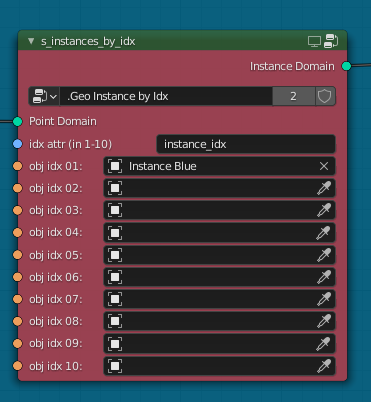

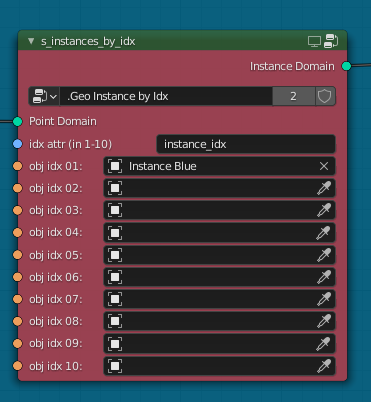

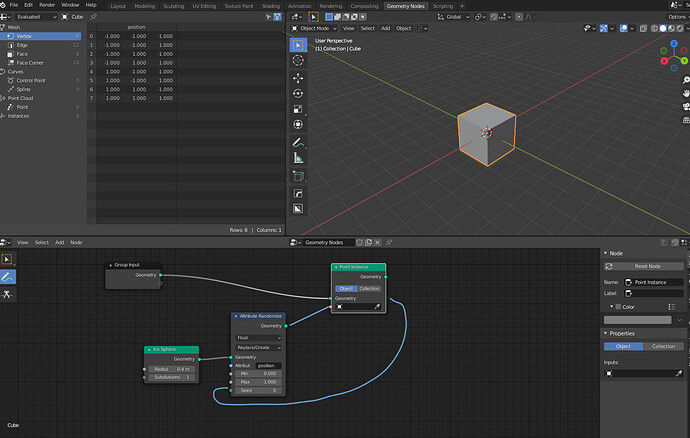

All of the above is possible because I naively implemented “instancing by index” with the node group below (from 2.93), with the help of the Point Separate node.

But this was only possible because the user knows for sure that N id == X asset.

If you want to instance specific objects on specific points, you should currently split up the points with a Point Separate node first.

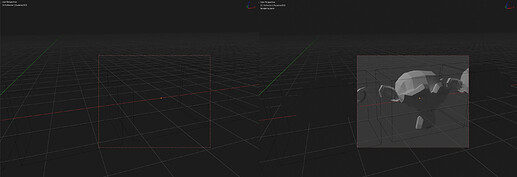

Note that running multiple Point Separate nodes in a row, as suggested, had a severe impact on performance (this setup is 50% slower than random collection instancing), and it also adds a lot of unnecessary complexity to the node tree.

So making this node not pick instances randomly would open up a lot of opportunities. For example, if there are 6 elements in the collection, the int values 0, 1, 2, 3, 4, 5 would correspond to the object order, and perhaps every value above that should repeat or be random. Picking at random, on the other hand, does not really add any advantage in this point instance node, afaik?
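
To make the idea concrete, here is a rough sketch in plain Python of the "instancing by index" behaviour I have in mind. It is not Blender code and not how the node is implemented: `pick_by_index` and the object names are made up for illustration, and I assume the "repeat" option for ids above the collection size (wrap around with a modulo).

```python
def pick_by_index(point_ids, objects):
    """Map each integer point id to the object at that position in the collection.

    Hypothetical helper: ids beyond the last object wrap around (the "repeat"
    option), so the mapping stays predictable for the user.
    """
    if not objects:
        raise ValueError("the collection has no objects to instance")
    # id 0 -> first object, id 1 -> second object, and so on, wrapping at the end.
    return [objects[pid % len(objects)] for pid in point_ids]


# A collection with 6 elements, in a known order (names are placeholders).
collection = ["grass", "fern", "moss", "ivy", "daisy", "tulip"]

# Ids 0..5 select objects directly; 6 and 13 wrap around to 0 and 1.
picked = pick_by_index([0, 5, 6, 13], collection)
print(picked)  # ['grass', 'tulip', 'grass', 'fern']
```

With a mapping like this, the user knows which id gives which asset, so the id attribute can be driven by a texture, a color, or the point scale without chaining Point Separate nodes.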

I hope my feedback was useful.

this issue as well.
