Hello, all. This is my first post on Devtalk, though I’ve been using Blender since 2.49b.

I’ve been working a lot with Geometry Nodes for some architectural modeling, and they’re fantastic so far. The one limitation that has seriously gotten in my way is that the point nodes require input objects. That forces the node graph to depend on an external reference, and when a graph is shared across projects or with team members, the more self-contained it is, the less fragile it is.

There’s been a lot written about the valid technical reasons why Geometry Nodes need to be attached to an Object, and why they can’t just internally generate more Objects, but I think the discussions so far are missing an opportunity: this could be done without breaking Blender’s assumptions about the Modifier stack.

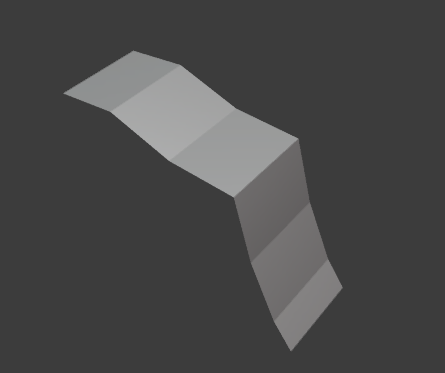

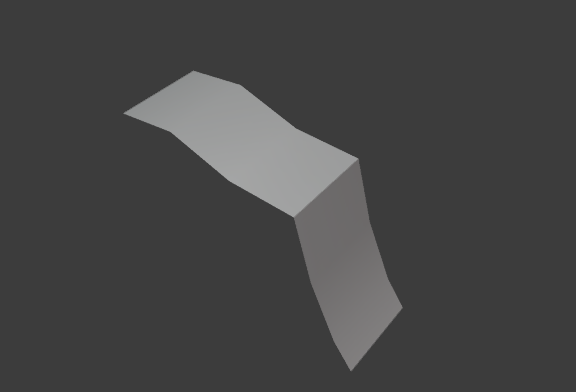

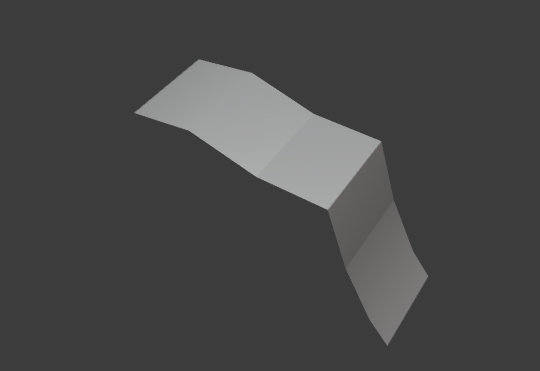

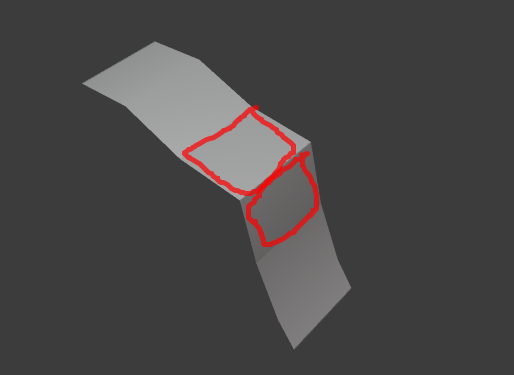

Here’s an example use-case: I want to create a Grid primitive in Geometry Nodes, then place one of the mesh primitives such as a sphere at every point. I do not need those to be objects, just new vertices, edges, and faces that are part of the same mesh.

Now, I could easily make a Geometry Nodes graph that generates a sphere with the new primitives node. Then I could pass that to multiple Transform nodes, combine their outputs, and have exactly the same thing – just really inefficiently.

My question is, what are the technical reasons why the point nodes couldn’t take geometry input ports and replicate those vertices and faces as needed, with an implicit Join within the point nodes, rather than replicating Object instances? I am aware of the ramifications for GPU instancing if there is one large mesh instead of multiple smaller meshes that can be instanced; obviously what I propose would (like any other tool) need to be used appropriately.
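
To make the question concrete, here is a rough sketch in plain Python of the behavior I have in mind. It does not use Blender's API or reflect how the point nodes are implemented; `replicate_at_points` and `grid_points` are made-up names for illustration, and a single triangle stands in for the sphere primitive.

```python
# Plain-Python sketch, not Blender API: all names here are hypothetical.

def replicate_at_points(points, verts, faces):
    """Copy a mesh (verts, faces) to every point and join the copies into one mesh."""
    out_verts = []
    out_faces = []
    for px, py, pz in points:
        offset = len(out_verts)  # index of the first vertex of this copy
        # Translate each source vertex to the target point.
        out_verts.extend((x + px, y + py, z + pz) for x, y, z in verts)
        # Re-index the faces so they refer to this copy's vertices.
        out_faces.extend(tuple(i + offset for i in face) for face in faces)
    return out_verts, out_faces


def grid_points(nx, ny, spacing=1.0):
    """Points of an nx-by-ny grid in the XY plane."""
    return [(ix * spacing, iy * spacing, 0.0) for iy in range(ny) for ix in range(nx)]


# A single triangle stands in for the sphere primitive.
tri_verts = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (0.0, 0.5, 0.0)]
tri_faces = [(0, 1, 2)]

verts, faces = replicate_at_points(grid_points(3, 2, spacing=2.0), tri_verts, tri_faces)
print(len(verts), len(faces))  # 18 vertices, 6 faces
```

The "implicit Join" is just the index offset applied to each copy's faces, so the result is one mesh whose size grows with the number of points, which is exactly the trade-off against instancing mentioned above.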

Another thing that might be useful is to allow Geometry Nodes to be attached as a modifier to an Empty object, for those cases where one would otherwise sever the connection from the input object and generate the geometry to the Group Output.

After posting this, a third option occurred to me: Is it feasible to have Blender offer static (immutable) instances of some existing primitives, at unit dimensions, that could be retrieved as predefined (and shared) Object inputs to Geometry Nodes? If the Geometry Node graph needs a default cube as an input, could this be an implicit system-wide resource that can’t be altered but doesn’t have to exist in each Scene?
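
As a loose illustration of what I mean by a shared, immutable primitive, here is a plain-Python sketch. It says nothing about how Blender stores data; `unit_cube` is a hypothetical name, and I'm assuming that nested tuples plus a cache are a fair stand-in for "one read-only copy for everyone".

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def unit_cube():
    """Return one shared, read-only unit cube as (verts, faces).

    Tuples cannot be modified in place, and the cache hands every
    caller the same object instead of building a new one.
    """
    # Vertex index is 4*xi + 2*yi + zi for xi, yi, zi in {0, 1}.
    verts = tuple(
        (x, y, z)
        for x in (-0.5, 0.5)
        for y in (-0.5, 0.5)
        for z in (-0.5, 0.5)
    )
    # One quad per side of the cube; winding direction is not checked here.
    faces = (
        (0, 1, 3, 2),  # x = -0.5
        (4, 6, 7, 5),  # x = +0.5
        (0, 4, 5, 1),  # y = -0.5
        (2, 3, 7, 6),  # y = +0.5
        (0, 2, 6, 4),  # z = -0.5
        (1, 5, 7, 3),  # z = +0.5
    )
    return verts, faces


a = unit_cube()
b = unit_cube()
print(a is b)  # both callers share the same object
```

Any graph that wanted a modified cube would have to copy the data first, which is the "can't be altered" part of the idea.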

I’m considering taking a dive into the code and mocking up one or more of these ideas, but before I do that I wanted to ask about the concept here to see if I’m overlooking something obvious. I had no trouble reading the source for some existing Geo Nodes, but I realize I lack the deeper understanding of the application context and the assumptions that drive the API contracts between modules.

Thanks for listening.