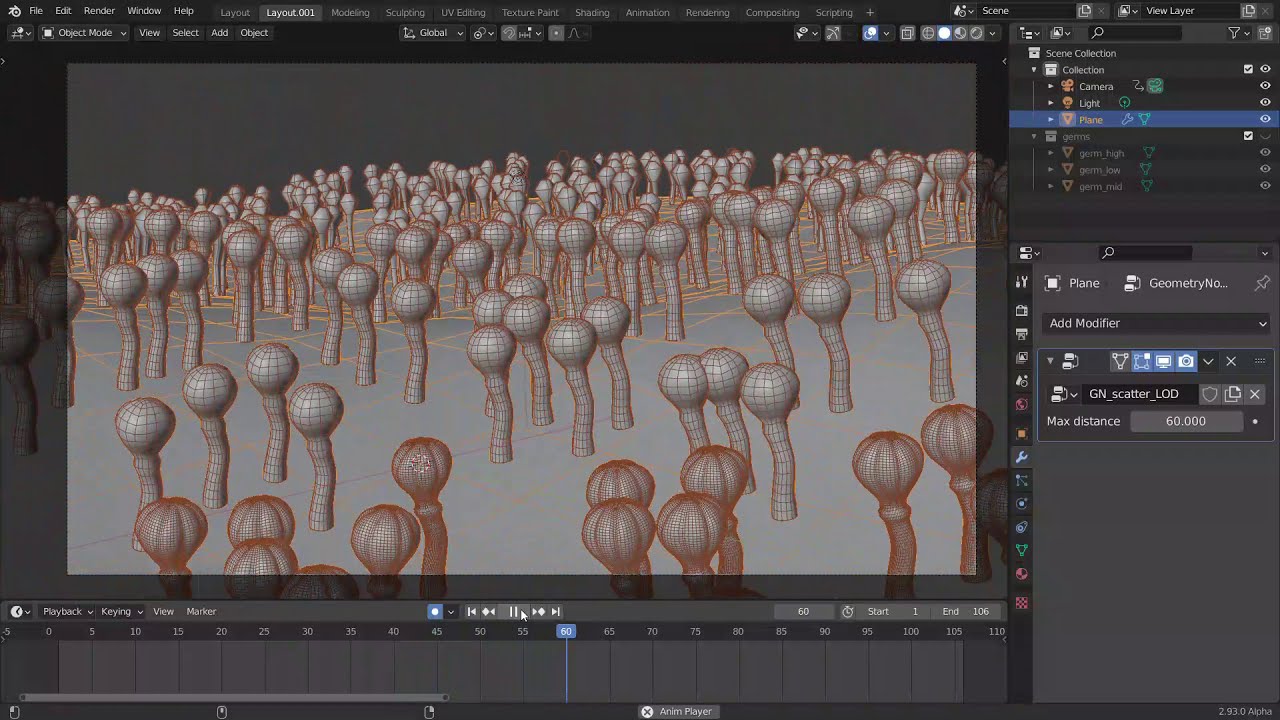

As you say, it’s as simple as distributing different models at different distances. Of course, without loops we can only hardcode the number of levels; here I made three (a dynamic number of levels is something a dedicated node could handle, I guess). There’s also frustum culling, which I honestly wouldn’t know how to do. I haven’t thought about it.
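For anyone following along, the per-instance logic behind such a setup boils down to comparing each instance's distance to the camera against a fixed list of cutoffs. The sketch below shows that in plain Python rather than nodes; the cutoff values and the name `pick_lod` are hypothetical, chosen only to illustrate three hardcoded levels.

```python
import math

# Hypothetical distance cutoffs (scene units) for three hardcoded LOD levels:
# closer than 10 -> LOD 0, closer than 50 -> LOD 1, anything farther -> LOD 2.
LOD_CUTOFFS = (10.0, 50.0)

def pick_lod(instance_pos, camera_pos, cutoffs=LOD_CUTOFFS):
    """Return the LOD index for one instance based on its distance to the camera."""
    d = math.dist(instance_pos, camera_pos)
    for level, cutoff in enumerate(cutoffs):
        if d < cutoff:
            return level
    return len(cutoffs)  # beyond the last cutoff: the farthest (cheapest) level

camera = (0.0, 0.0, 0.0)
points = [(5.0, 0.0, 0.0), (30.0, 0.0, 0.0), (100.0, 0.0, 0.0)]
print([pick_lod(p, camera) for p in points])  # [0, 1, 2]
```

A node setup would presumably do the same comparison per point and use the result as the selection for each model, which is why the level count stays fixed without loops.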

Calculating a decimated object for LODs is the death of performance. Decimate sometimes needs several minutes to process a complex mesh.

LODs could be a list of meshes. Alongside the mesh the object already has, we could add 2-3 optional meshes for LOD versions. And if we want to use them in geometry nodes, they could be exposed as a parameter.
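To make the "list of meshes" idea a bit more concrete, here is a rough sketch in plain Python of an object carrying optional LOD slots. `Mesh`, `LODObject` and `mesh_for_level` are invented for illustration and are not Blender's API; the fallback rule (an empty slot reuses the nearest more detailed mesh) is just one possible design choice.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class Mesh:
    """Stand-in for a mesh datablock; only a name and face count here."""
    name: str
    face_count: int

@dataclass
class LODObject:
    """Hypothetical object holding a base mesh plus optional LOD meshes."""
    base: Mesh
    # Up to three optional slots; None means "no LOD authored for this level".
    lods: List[Optional[Mesh]] = field(default_factory=lambda: [None, None, None])

    def mesh_for_level(self, level: int) -> Mesh:
        """Level 0 is the base mesh, level n uses slot n-1. A missing slot
        falls back to the nearest more detailed mesh that exists."""
        for i in range(min(level, len(self.lods)), 0, -1):
            candidate = self.lods[i - 1]
            if candidate is not None:
                return candidate
        return self.base

rock = LODObject(Mesh("rock", 20000),
                 [Mesh("rock_lod1", 5000), None, Mesh("rock_lod3", 300)])
print([rock.mesh_for_level(lvl).name for lvl in range(4)])
# ['rock', 'rock_lod1', 'rock_lod1', 'rock_lod3']
```

Because the slots live on the object rather than inside a node tree, the same data could in principle serve instancing or collections as well.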

That would have the advantage that LODs are not only a geometry nodes option; they could also be used for instanced geometry or collections.

Not really, though.

If nodes are cached, that shouldn’t be a problem? Decimate once, scatter everywhere. I’m just hypothesizing.

Is there no longer a Global/Local projection technique for procedural texture scattering?

That only works with finished models and closed steps, not with a non-destructive workflow. Other software that we cannot refer to has the same problems, and decimation is a pain. In Blender it’s worse because Decimate is not multithreaded.

As I said, it’s easier to give the user the option to select other meshes as LODs, and only use Decimate if they want. More options for users, more possibilities.

Also, don’t forget that we have been asking for LODs for a lot of things over the last few years. They are not only useful with geometry nodes.

He might have been referring to the LOD system used by UE4, not UE4 as a whole:

https://graphics.pixar.com/library/StochasticSimplification/paper.pdf

Neither manually created LODs nor decimated, distance-based ones should be an issue in a node-based setup, I guess.

Ah, that makes sense. Well, that can already be done. What would be the key features of a LOD system that we can’t replicate right now in geometry nodes?

I’m sure there was a reason for the rules, probably legal concerns. When it comes to node-based stuff, though, it’s pretty hard not to reference big H in every other sentence.

As long as everyone’s aware what can be achieved and how high the bar is we should be fine.

If you’re referring to the paper, then that’s still not open source but rather public domain? So it’s still different.

It’s an IP issue. If the cited papers are publicly available, however, I don’t think there is a problem: you can cite those publications here too. But since compiled proprietary software can be patented, the foundation could be sued for breaking patent law, or on some other equally dumb charge (can you tell I don’t like patents?).

@dfelinto, isn’t there a sticky summarizing this, so somebody can just link to it whenever it comes up?

It is actually open source: there are no constraints on accessing the source, and you can create and use your own version of UE4. What it’s not is FREE open source, but it is actually open source.

So I would expect the rule could be waived for UE4.

EDIT: WRONG! It seems it is not “Open Source” as per the terms explained in The Open Source Definition (Open Source Initiative) mentioned by @megalomaniak.

However, I don’t think explaining things by naming other packages, open or not, is useful. Usually that only shows the “surface” of the feature, not the important things and specifics. Using other software as an example also expects the dev to check how the other package works, which is not useful: it makes the dev lose time, and it’s a potential source of copyright problems and accusations.

So it’s not “censoring” per se; it’s more like a safeguard that makes the development process better and safer for Blender and its users.

It also does one more thing: when we propose a feature, it forces us to think it through properly, and most of the time we end up improving on what we already know. So it’s more work to propose a feature, but it’s also a better way of proposing that will probably lead to a better outcome.

OK, I grant you the “open source” part, but then what would you call the way UE4 provides its source?

Anyway, don’t answer; you have a point here, and there’s no need to derail the thread. The most important part of my previous post is the “Why should we avoid naming/showing other packages to explain a proposed feature”.

Proprietary¹ software developed under an open (as in issue tracking, source and commits visible) development model, not to be confused with open source, which has a rather specific meaning. Simple as that.

¹ Licensed under a proprietary custom EULA reserving all rights over the work to the private entity (in this case Epic Games).

Don’t get me wrong, I appreciate what Epic Games is doing; it’s the closest thing to going open source they can afford to do without actually going open source (which is something they sadly just can’t afford to do).

The posts can be separated out into another discussion topic.

FYI, Jacques Lucke basically says LOD won’t give you much of a performance boost right now.

Currently, our main bottleneck when it comes to instancing seems to be not so much the complexity of individual objects but the number of objects…

Distance culling / FOV clipping would, though.

Isn’t that something you can do in the viewport already by playing with far clipping plane?

If you are talking about the native clipping sliders, those clip everything; what we need is a clipping slider for particles only. The vista preview shouldn’t be lost, of course; only the massive numbers of particles need to be clipped.
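A particle-only distance cull like the one requested could be as simple as the sketch below, assuming scattered points are available as plain (x, y, z) tuples. `cull_by_distance` and `max_distance` are hypothetical names; the key difference from the viewport's far clip plane is that only the scattered points are filtered, while terrain and vista stay visible.

```python
def cull_by_distance(points, camera_pos, max_distance):
    """Keep only scattered points within max_distance of the camera.
    Only the instance points are filtered; the rest of the scene is untouched.
    Squared distances are compared to avoid a square root per point."""
    max_sq = max_distance * max_distance
    return [
        p for p in points
        if sum((a - b) ** 2 for a, b in zip(p, camera_pos)) <= max_sq
    ]

# Points every 10 units along X; with a 35-unit cutoff only the first four survive.
row = [(float(x), 0.0, 0.0) for x in range(0, 101, 10)]
print(len(cull_by_distance(row, (0.0, 0.0, 0.0), 35.0)))  # 4
```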

If you are talking about a vertex group setup, for example with Vertex Weight Proximity plus a Dynamic Paint setup that clips with a camera FOV cone mesh, then yes, that can already be done.

In fact, I’m working on an add-on that makes this easier (Scatter5, I’ll share it when it’s done), but a simple culling distance slider and a native option to enable camera clipping for the active camera would be so handy.

So far, the pebble-scattering and flower-scattering scenes were tested only on small areas, which is not really a real-world scenario; users are likely to create particles on extremely large planes (yes, really). I hope the devs will start testing scattering on large areas, so that optimizations like the technique above can be tackled.

IMO it’s quite important that the workflow is tested on huge areas…

Only working with a tiny plane is kinda cheating?

How do you do that? Is it a simple camera matrix multiplication?
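Roughly, yes: multiply each point by the camera's projection and view matrices to get clip-space coordinates, then keep it only if -w ≤ x, y, z ≤ w. The sketch below assumes an OpenGL-style perspective matrix and a camera at the origin looking down -Z (so the view matrix is the identity); `perspective`, `mat_vec` and `in_frustum` are illustrative helpers, not Blender functions.

```python
import math

def perspective(fov_y_deg, aspect, near, far):
    """OpenGL-style perspective projection matrix (row-major, column vectors)."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    return [
        [f / aspect, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ]

def mat_vec(m, v):
    """Multiply a 4x4 matrix by a 4-component column vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]

def in_frustum(point, view_proj):
    """Transform a world-space point to clip space and test it against the
    six frustum planes: inside iff w > 0 and -w <= x, y, z <= w."""
    x, y, z, w = mat_vec(view_proj, [point[0], point[1], point[2], 1.0])
    return w > 0 and -w <= x <= w and -w <= y <= w and -w <= z <= w

# Camera at the origin looking down -Z, so view_proj is just the projection.
proj = perspective(60.0, 1.0, 0.1, 100.0)
print(in_frustum((0.0, 0.0, -10.0), proj))   # True: straight ahead
print(in_frustum((0.0, 0.0, 10.0), proj))    # False: behind the camera
print(in_frustum((50.0, 0.0, -10.0), proj))  # False: outside the 60 degree FOV
```

For a real camera the view matrix would be the inverse of the camera's world matrix, and for whole objects you would test a bounding sphere or box rather than a single point, so instances straddling the frustum edge are not culled too early.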