Hey, I did some mockups and have a few questions about the workflow design:

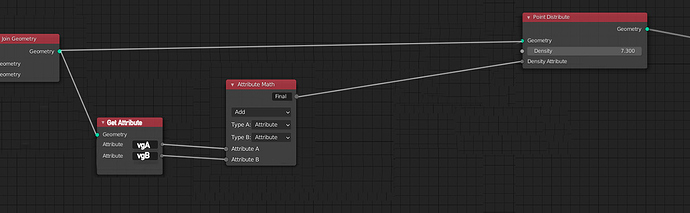

In @dfelinto's designs, the workflow mainly passes geometry data around, and attribute data is only available as inputs.

If we want a flexible workflow, shouldn't we be able to separate attributes from geometry and manipulate attributes directly, based on their types?

Aren't attributes mostly just floats or vectors? (Even point positions and normals?)
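To make the idea concrete, here is a rough Python sketch, not how Blender actually stores data. It assumes geometry is just a bag of named attribute arrays (floats or 3D vectors, one entry per point). `Geometry`, `separate_attribute` and `join_attribute` are hypothetical names, made up to show attributes being pulled out, edited as plain arrays, and put back.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple, Union

# Hypothetical value types: an attribute is a flat array of floats
# or 3D vectors, one entry per element (e.g. per point).
Vector = Tuple[float, float, float]
AttrValues = Union[List[float], List[Vector]]


@dataclass
class Geometry:
    # Hypothetical container: named attributes stored as plain arrays.
    attributes: Dict[str, AttrValues] = field(default_factory=dict)


def separate_attribute(geo: Geometry, name: str) -> AttrValues:
    """Pull an attribute out of the geometry as a standalone array (a copy)."""
    return list(geo.attributes[name])


def join_attribute(geo: Geometry, name: str, values: AttrValues) -> Geometry:
    """Return a new geometry with attribute `name` set to `values`."""
    if geo.attributes:
        # Every attribute must have one value per element.
        count = len(next(iter(geo.attributes.values())))
        if len(values) != count:
            raise ValueError("attribute length must match element count")
    new_attrs = dict(geo.attributes)
    new_attrs[name] = list(values)
    return Geometry(new_attrs)


# Usage: edit the "weight" attribute as a plain float array.
geo = Geometry({
    "position": [(0.0, 0.0, 0.0), (1.0, 2.0, 3.0)],
    "weight": [0.5, 1.0],
})
weights = separate_attribute(geo, "weight")
doubled = [w * 2.0 for w in weights]
geo2 = join_attribute(geo, "weight", doubled)
```

The original `geo` is left untouched here, which loosely mirrors how node outputs feed new data downstream instead of mutating their inputs.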

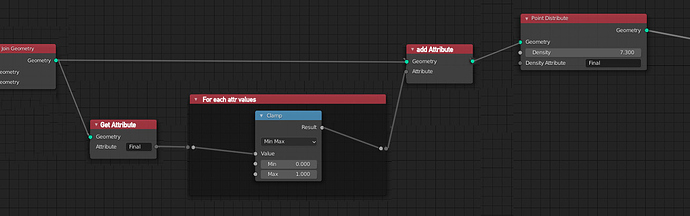

@HooglyBoogly, even if the math nodes are inside a special for_each box over attribute values?

From the user's perspective it would be visually the same experience, even if the nodes' code needs to change once they are inside the box.

I believe introducing loops to users is quite important, even basic, if we are working with arrays of values.
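A minimal Python sketch of what I mean by the for_each box, assuming math nodes are just functions on a single value. `for_each`, `multiply`, `add` and `scale_vector` are hypothetical stand-ins, not existing Blender nodes or APIs. The point is that the nodes inside the box stay exactly the same as outside; only the box knows it is iterating over an array.

```python
from typing import Callable, List, Sequence, Tuple

Vector = Tuple[float, float, float]


# Hypothetical "math nodes": plain functions acting on one value,
# exactly as they would outside any loop.
def multiply(a: float, b: float) -> float:
    return a * b


def add(a: float, b: float) -> float:
    return a + b


def scale_vector(v: Vector, s: float) -> Vector:
    return (v[0] * s, v[1] * s, v[2] * s)


def for_each(values: Sequence, body: Callable) -> List:
    """Hypothetical for_each box: runs `body` once per attribute value.

    The nodes inside `body` only ever see one element, so they look the
    same to the user; the box is what iterates over the array.
    """
    return [body(v) for v in values]


# Inside the box: weight * 2 + 1, built from per-element math nodes.
weights = [0.5, 1.0, 2.0]
new_weights = for_each(weights, lambda w: add(multiply(w, 2.0), 1.0))

# Same box, vector attribute: flip every normal.
normals = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)]
flipped = for_each(normals, lambda n: scale_vector(n, -1.0))
```

In a real implementation the box would probably be vectorized instead of looping in Python, but the user-facing graph could look the same either way.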

*Assuming, of course, that the workflow allows separating attributes from geometry.