I may have missed something, but are there no plans to share assets (Geonode, Tools, Collections, etc.)?

Those style guide pages are meant to be applied to the addons that are currently shipped directly with Blender, which understandably need to have higher code quality than other addons.

AFAIK, that doesn’t apply to extensions, they can be formatted as horribly as you want as long as they work.

I believe it to be the opposite, as it’s clearly stated in the Extensions Policies on Blender Extensions:

Acceptable code practices

- Extension code must be reviewable: no obfuscated code or byte code is allowed.

- Extension must be self-contained and not load remote code for execution.

- Extension must not send data to any remote locations without authorization from the user.

- Extension should avoid including redundant code or files.

- Extension must not negatively impact performance or stability of Blender.

- Add-on’s code must be compliant with the guidelines.

As the point of this system seems to be that extensions are third-party add-ons “shipped” on demand through automatic download if uploaded here, they would consequently need to comply with the standards for built-in add-ons.

Fair point, I didn’t see that on the extensions page.

I don’t really see how they can enforce code quality, though. Some clarification is definitely needed.

What happens if someone takes my GitHub repo and publishes it on the extension platform as if they developed it? I think that discouraging such abuses should be part of the platform publishing guidelines.

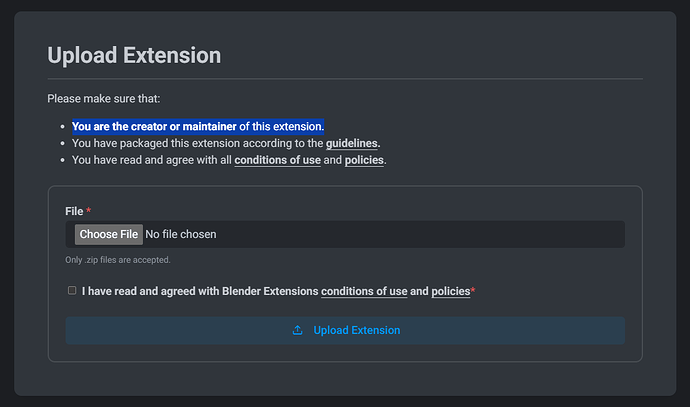

It’s literally the first thing that appears when you are about to upload an extension, it’s even in bold:

“Please make sure that you are the creator or maintainer of this extension”

Other than that we can’t really check every single extension, that’s why we rely on the community to help to review and report such abuse through the following ways:

1. During Review

The review process involves the community. We call it the Approval Queue, a public listing of all extensions waiting to be published. Everyone is welcome to comment there. Only moderators can do the final approval. At the moment there are only a few moderators but we plan to expand the team (help welcome!) in the coming months.

2. Report Abuse

On every extension there is a “Report Abuse” button at the bottom of the page.

This website is a community effort. We should all try to help by testing the extensions, leaving reviews, reporting any issues, unfair use, and so on for it to become a healthy place we can all share.

What is the policy on distributing forks on platform? If I have my own fork of CC-0 add-on created by somebody else, can I share it? Are there some follow-up rules?

Thanks for the clarification. I did not do any submission yet, so I was not aware of the text.

Hello ! I think to facilitate the review process a link to the code repo should be mandatory on the extension page, or a way to directly review the code online based on the zipped archive.

I think it is risky to download random potentially malicious and unreviewed code on my computer.

It seems to me like the status in the Approval queue — Blender Extensions is not up to date with the actual status of an addon. I can see multiple cases of someone changing the status to Awaiting Changes while in the queue it says Awaiting Review.

Hello, I’m trying to give feedback on an add-on in the approval queue, but when I click on comment it says “500 Server error”.

Hi all,

In reference to #119681, I was prompted to add my voice wrt. dependency handling in this feedback thread. Having worked professionally with supporting first-year-uni-student Python environments, I must admit skepticism at the vendored-wheel approach - both for addon devs (young and old), for user-support-entropy, and for ecosystem health. I hope these perspectives are welcome, and I’d potentially be happy to help out with contributions if desired.

I’ve supplemented with examples from my own experimental in-dev scientific addon with heavy PyPi deps, whenever I’ve tackled a problem I’m describing.

Vendoring Kicks the wheel to the Build System

At some point, some tool is going to need to wrangle dependencies in order to build wheels at all. The tooling for this is very diverse, patently non-interoperable, and very hard to help addon devs with in the general case - not to mention wheels can be an extremely iffy kind of thing to analyze after creation.

Consider, however, how tools like rye make requirements.lock files (example) which resolvers (ex. the beautifully fast uv) guarantee to work perfectly together. For better or worse, from poetry lock to pip freeze, such files are as much of a “just works rosetta stone” as we might ever get with Python packaging.

When imagining a Blender-extension-distribution paradigm based on only such lockfiles, a lot of very “smelly” (as in code-smell) questions maybe get easier:

- Parsing/Validating: It’s fast and easy to parse and validate `requirements.lock`, including whether all entries are installed correctly, with only Python’s stdlib (example).

- Distribution: Not only can wheels get huge but their implicit dependency (also transitively) on ex. `glibc` can be extremely nuanced and hard to declare (especially for minimal VFX-reference type workstations). In contrast, it’s exceptionally lightweight and easy to pack up (examples) a pre-made `requirements.lock` when zipping up an addon.

- Isolation/Cross-Platform: `pip` can install to isolated addon-specific target dirs (example), and generally `pip install` takes distribution nuances into account decently (see Problems further down).
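To make the Parsing/Validating point concrete, here is a minimal stdlib-only sketch. It assumes the lockfile uses plain `name==version` pins (as `pip freeze` style files do); `parse_lock` and `check_installed` are hypothetical helper names, not part of Blender or rye, and versions are compared as plain strings.

```python
import re
from importlib import metadata

# Matches simple "name==version" pins; other requirement forms are ignored here.
PIN = re.compile(r"^([A-Za-z0-9][A-Za-z0-9._-]*)==([^\s;#\\]+)")


def normalize(name):
    """Normalize a project name the way PEP 503 describes."""
    return re.sub(r"[-_.]+", "-", name).lower()


def parse_lock(text):
    """Return {normalized_name: version} for every 'name==version' line."""
    pins = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "-")):
            continue  # skip blanks, comments, and option lines such as "-e ."
        match = PIN.match(line)
        if match:
            pins[normalize(match.group(1))] = match.group(2)
    return pins


def check_installed(pins):
    """Return {name: problem} for pins that are missing or at another version."""
    problems = {}
    for name, wanted in pins.items():
        try:
            found = metadata.version(name)
        except metadata.PackageNotFoundError:
            problems[name] = "not installed"
            continue
        if found != wanted:  # plain string comparison, so "1.0" != "1.0.0"
            problems[name] = f"installed {found}, locked {wanted}"
    return problems
```

An empty result from `check_installed(parse_lock(text))` would mean every pin is present at the locked version in the current environment.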

pip is already bundled with Blender, and by “just” building on that in this paradigm, there are very real things you gain the ability to do:

- Supply-Chain Security: You’re shipping arbitrary user-uploaded code. Mandating `requirements.lock` makes security-scanning of all Blender extension dependencies near-effortless; arbitrary wheels, not so much. It’s also far easier to, say, “add your extension from `git`” (maybe with GitHub / Codeberg login), to ease the risks of dealing with directly-uploaded arbitrary code!

- Reproducibility for CI and Devs: Since `requirements.lock` is checked into `git`, reproducibility becomes a lot easier to guarantee. Very real headaches start with “dependency x broke my niche undocumented use of y in v.v.2, not v.v.1”; with this paradigm, that’s infinitely easier to catch (hey, Blender could even sell an addon-build-service that reports this kind of thing).

- Sane Addon-Specific Dependencies: `with`-wrapped `sys.path` (example) allow installing addon-specific python dependencies in a fully cross-platform, addon-specific, and non-polluting way.

  - So far, it seems robust in my own addon - but `sys.modules` is such a janky thing, that I’d want to test more to be sure. I’m generally confident that it can be done in a way that’s clean for the user, though.

  - Ex. Blender could wrap the call to each addon’s `register` in such a `with`, so addon developers never feel the distinction.

and more.
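For readers who can’t reach the linked example, the `with`-wrapped `sys.path` idea can be sketched roughly as follows. This is not the code from the linked addon; `addon_deps` is a hypothetical name, and the cleanup policy (forgetting modules that were loaded from the addon’s own directory) is an assumption.

```python
import sys
from contextlib import contextmanager


@contextmanager
def addon_deps(deps_dir):
    """Temporarily put deps_dir first on sys.path, then undo the changes."""
    root = str(deps_dir)
    saved_path = list(sys.path)
    known = set(sys.modules)
    sys.path.insert(0, root)
    try:
        yield
    finally:
        sys.path[:] = saved_path
        # Forget modules that were first imported inside the block and that
        # came from deps_dir, so they do not leak to other addons.
        for name, module in list(sys.modules.items()):
            origin = getattr(module, "__file__", None) or ""
            if name not in known and origin.startswith(root):
                del sys.modules[name]
```

Imports made inside `with addon_deps(path):` resolve against `path` first; anything the addon still holds a reference to keeps working after the block, but a later `import` of the same name goes through the normal lookup again.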

Native Code as Python Packages

“What about things that need to be artifacts, like compiled C/Rust modules?”

Why not just make them python modules too? Say I’m making an addon, but need a fast Rust function. In a modern tool like rye, just do:

1. `rye init fast-func --build-system maturin` and code!

2. `git push https://github.com/me/fast-func`

3. `rye add fast-func --git=https://github.com/me/fast-func` to add as dependency in the addon.

As a bonus, Blender-the-extension-platform can now:

- Excise Unknown Binary Code w/Less Manual Review: The `requirements.lock` has a direct reference to a HEAD in `https://github.com/me/fast-func`, making it infinitely easier for Blender-the-extension-platform to understand what is coming from where, and why (and boot projects whose references disappear). A key foundation for many security policies, among other things. Human time is valuable, limited, and imperfect!

- Minimize Special-Casing Extensions: “If you want it, it needs to be a Python package” is perhaps a healthy approach. One removes all need for addons to depend on each other (they can depend on a common Python lib); one can easily correlate issues ex. `glibc` issue with a particular `pip` package, across many extensions; and platform-side, one can entirely eliminate special `wheel` handling by having such a relatively compact Blender-local `pip install` logic.

It may seem harder, but in my humble opinion, language integration is fundamentally difficult, and the approach shouldn’t hide that (even as it simplifies working with it). In my continuing opinion, pure wheel building tends to be too good at hiding how and why which C compiler needed a turn at something.

Another package is more complexity, sure, but greatly discourages the easy mistakes that lead to thorough jank, and makes it easier to reason about the difficulty of extension modules in an isolated way (including pre-bootstrapped ex. rye init my-rust-func --build-system maturin).

Problems

There are many, and while many also apply to wheels, oh boy do they all bite!

- `pip install` can have hidden dependencies on C libs, headers, etc.

  - It may sound a bit excessive, but Blender could quite easily fuzz PyPi for such issues and only allow addons to use packages that pass this check (per-platform). Perhaps a talk with the PyPA would be helpful? In any case, such a thing would be damn near a public service at this point…

- Blender would have to physically block part of `register()` before dependencies match, which demands careful UX design to 1. Drive users to install dependencies and 2. Try to avoid “why does my file look broken” type issues arising only from missing dependencies of an addon.

  - I’ve hacked it in my own addon by having two rounds of `register()` (here). The first is only enough to install deps; the moment deps are installed, the real `register()` happens and the user is happy. But it’s not ideal. A new extension approach could be much less hacky.

- Blender, as an application, networking with PyPi repositories is something that would need to be made peace with, and the security implications of this aren’t trivial.
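The “two rounds of `register()`” workaround mentioned above can be sketched without any Blender API. This is only an illustration of the pattern, not the linked addon’s code: the two callbacks stand in for whatever `bpy` registration an addon would really do, and `REQUIRED_IMPORTS` is an example list.

```python
import importlib.util

# Example top-level import names only; a real addon would list its own.
REQUIRED_IMPORTS = ("scipy", "pydantic")


def missing_imports(names=REQUIRED_IMPORTS):
    """Return the top-level import names that cannot currently be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def register(register_installer_ui, register_full, names=REQUIRED_IMPORTS):
    """Register only an installer UI until every dependency is importable."""
    missing = missing_imports(names)
    if missing:
        # Round one: just enough UI for the user to install dependencies.
        register_installer_ui(missing)
        return "installer-only"
    # Round two: dependencies are present, so the real registration can run.
    register_full()
    return "full"
```

After the installer step succeeds, the addon would call `register` again so that the second branch runs.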

And so on. Still, to me, it seems like these span a more definable and solvable problem space than straight-up shipping wheels, while on the whole, providing excessive benefits to addon devs:

- Allowing addons to easily access `pip` packages is an excessive productivity boost; not just because of easy access to libraries like `jax`, `sympy`, `pydantic`, `scipy`, `colour` and `graphviz`, but because it makes it far less tempting to reimplement common functionality per-addon by making it easier to factor out even `bpy`-dependent functionality (plus, helping quell common discussions about “can I have my Python library ship with Blender, pretty please?”)

- Treating Blender addons as specially-packed `python` packages brings it closer to all the good tooling that managed to sneak into the mess that is the Python package ecosystem. It’d bring me infinite joy to see an extension ecosystem where `ruff` (also `ruff fmt`), `pytest`, `beartype`, `mypy`, etc. are standard fare when working with Blender addons, and the more “you need to build a custom wheel and put it here” stuff there is, the more friction there is between good tooling and developing Blender addons.

Thanks for reading; again, I hope the perspectives are warranted, and maybe even useful.

IIUC, you are proposing that extensions bundle a lockfile containing dependency declarations, and when an extension is enabled (before register is called), Blender automatically ensures dependencies are satisfied by using pip install or equivalent?

My first thought is about the consequences for “self-contained extensions” and offline installation of extensions.

Precisely; and I agree with the self-contained being important (it seems “static vs dynamic linking” has made it to the thread!). To clarify, I’d propose something like:

- Extensions bundle only a lockfile declaring dependencies (with some constraints), for which various scans/checks are performed by extensions.blender.org.

  - “Self-contained” shouldn’t be the default in my opinion, but there’s no reason why one couldn’t have it as an option to let the platform ship pre-`pip install --target`ed content as part of the `zip` (mind the OS though).

  - Here, I’m presuming that Blender’s platform takes responsibility for bundling “self-contained” addons with its own `pip install --target` into another `zip` (a little caching and version policies a la “no 10yo scipy thanks” would render this a relatively inexpensive feature, presuming a matrix of OS-specific build servers are already available).

  - Of course, extensions that don’t use Python packages would be de-facto “self-contained” with no further info. As a side note, it’s not always too obvious to new addon devs how much is already actually installed!

- Blender checks, on addon install / before `register()`, that the dependencies are somehow satisfied, possibly with user input, ex. through:

  - Running `pip install --target`, which effectively dumps dependencies in the addon-specific python dependency directory.

  - IF the addon is “self-contained” (aka. the zip comes with a folder where someone else has already run `pip install --target`, and the bundled versions exactly match the lockfile, and the builder-OS matches the user OS with deeper checks than just “Mac”/“Windows”), copy the files over to the same addon-specific python dependency directory - same effect as `pip install --target`.

  - Variants of dependency management, all opt-in, that some more niche users might need (ex. custom PyPi repo for internal studio tools, etc.)

- Speaking of addon install, that lovely popup on drag-and-drop could include these dependency installation options.
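As a rough sketch of the `pip install --target` step in the list above: the snippet below builds and runs the pip command for one addon. It assumes `sys.executable` points at a Python interpreter that has pip available; the function names, the directory layout, and the choice of `--no-deps` (on the assumption that the lockfile already lists the fully resolved set) are mine, not an existing Blender mechanism.

```python
import subprocess
import sys
from pathlib import Path


def pip_target_command(lockfile, target_dir, index_url=None, python=sys.executable):
    """Build the pip command that installs a lockfile into one addon's dir."""
    cmd = [
        python, "-m", "pip", "install",
        "--requirement", str(lockfile),
        "--target", str(target_dir),
        "--no-deps",  # assumption: the lockfile is already fully resolved
        "--disable-pip-version-check",
    ]
    if index_url:
        # Opt-in variant, ex. a studio-internal package index.
        cmd += ["--index-url", index_url]
    return cmd


def install_deps(lockfile, target_dir, **kwargs):
    """Create the addon-specific directory and run pip; raises on failure."""
    Path(target_dir).mkdir(parents=True, exist_ok=True)
    return subprocess.run(pip_target_command(lockfile, target_dir, **kwargs), check=True)
```

Keeping the command construction separate from the `subprocess.run` call makes it easy to show the user exactly what would be executed before asking for permission.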

This proposal (as I guess it ended up being?) presumes that the user typically drag-and-drop downloaded the extension from the internet, indicating that they probably have some kind of internet. In this case, there is a ton of saved bandwidth via the pip caching for the user, and for the platform by not shipping `.so` files (they can get very, very big; see pytorch with all the CUDA kernels and such, which is not a very far-out thing to want).

…BUT you’re totally right, self-contained extensions are really important for ex. institutional / render farm / “not so good internet here’s a USB” use. Luckily, pip comes with perfectly usable mechanisms for local installs that don’t require venv (though, at some point, they’re gonna have to make it from pypi.org), and there’s no reason there can’t be “self-contained” zip versions leveraging that for users that need that kind of thing.

Side note, it occurs to me that Rust’s focus on static linking is a really good fit for extensions. Building static C libraries can be a headache. I would add that extensions maybe shouldn’t be relying on dynamic libraries spuriously (platform-enforced), or at the very least that dynamic dependencies should be clearly stated. The nice thing about starting an ecosystem is this kind of thing is easier to implement.

One other question regarding paid vendors utilizing extension repos. How does Blender plan to handle authentication for checking and downloading updates? With BlenderMarket, for example, users will need some form of credentials (or login prompt) to ensure that they are a legitimate customer.

They could just give each customer a unique repository URI

This is a security concern for sure. Especially since there might be different expectations from Blender-hosted add-ons than from add-ons downloaded from other arbitrary websites.

This I don’t agree with. Since add-on code can already easily do os.removedirs('/*') (and obscure that code), there is no such thing as “near-effortless” security scanning of add-ons. Just knowing the SHA-sum of a wheel doesn’t mean it’s vetted to be secure.

pip can install to isolated addon-specific target dirs

I think this is only part of the picture – the other part is how to ensure that each add-on only sees its own dependencies, and not those of other add-ons. You describe SysPath, but unfortunately that URL gives me a 500 Internal Server Error.

If sys.path and sys.modules are manipulated at import time to get this isolation (like I did in the Flamenco add-on), it also has an impact on how the dependencies perform their own imports. If those imports are delayed (for example only importing a heavy dependency when it gets actually used, instead of at import time) they may fail when the addon dependencies are no longer available on the global sys.path.
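The delayed-import problem described above can be reproduced in a few lines. This is a self-contained demonstration with made-up module names (`demo_lazy_lib`, `demo_lazy_heavy`); it is not the Flamenco add-on’s code, and it only manipulates `sys.path`.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def demo_delayed_import():
    """Return the error raised when a lazy import runs after isolation ends."""
    with tempfile.TemporaryDirectory() as tmp:
        # A made-up dependency that imports its heavy part only when used.
        Path(tmp, "demo_lazy_lib.py").write_text(
            "def use_heavy():\n"
            "    import demo_lazy_heavy  # delayed until the first call\n"
            "    return demo_lazy_heavy.ANSWER\n"
        )
        Path(tmp, "demo_lazy_heavy.py").write_text("ANSWER = 42\n")

        sys.path.insert(0, tmp)
        try:
            importlib.invalidate_caches()
            lib = importlib.import_module("demo_lazy_lib")
        finally:
            sys.path.remove(tmp)  # the add-on's directory is no longer visible

        try:
            lib.use_heavy()  # the delayed import is attempted only now
        except ModuleNotFoundError as exc:
            return str(exc)
        finally:
            sys.modules.pop("demo_lazy_lib", None)
    return None
```

The top-level import succeeds, but the call fails later because `demo_lazy_heavy` can only be found while the add-on’s directory is on `sys.path`.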

IMHO this question of per-addon dependency isolation is the more important problem to solve first. Once we have a solution for that, we can look at tooling that gets those dependencies in a way that’s compatible. As I describe above, certain approaches to isolation will create limitations to what those dependencies can do, and thus rule out certain packages. Maybe that also simplifies their installation, I don’t know.

Hi @sybren,

Thanks for your answer! Generally agree a lot.

I think this is only part of the picture – the other part is how to ensure that each add-on only sees its own dependencies, and not those of other add-ons. You describe SysPath, but unfortunately that URL gives me a 500 Internal Server Error.

Indeed; apologies, my own server seems to have a hiccup. I replaced the links with links to the GH mirror, but on further testing, I agree that sys.path + sys.modules mangling isn’t good enough for the many-addons-incompatible-pydeps use case.

I believe Flamenco also does something like this (I just consolidated the SysPath link into a gist for clarity): A context manager that allows for imports unique to within the block, so that code in the with block can use its very own version of whatever.

Some caveats to this method that I’m noticing:

- As demonstrated in the gist, any function that relies on an implicitly cached module variable (like callbacks), which is later re-imported, will use the newly imported module (see the Python docs entry). It’s a tricky one; there’s only a problem if addons re-import the same dependencies, with the same name, but an incompatible version. Rare-ish, maybe, and can be dealt with using decent policies, buuuuut it isn’t clean and will absolutely bite back, hard.

- I’d borderline suggest that Blender could replace (more like, say, augment) the builtin `__import__` function as described here. Essentially, one could add logic to ex. cache addon pydep module names with an addon-specific prefix when importing from an addon, so that each addon would be truly looking up its own name in `sys.modules` without even knowing it. Still within the framework of this context manager.

So, yeah - ironically, I think maybe slotting in an almost-identical __import__ Blender-wide (and using, oh who knows, some kind of introspective black magic to detect which addon an import-calling function is in?) could be a strangely clean approach. One that’s compatible with all the random little callbacks swimming around bpy.props update fields (__file__ doesn’t tend to change, right?).

Alternatively, I’m also finding sys.meta_path and sys.path_hooks, which if I’m understanding correctly, allow adding custom methods of finding modules to import and ways of loading them? If so, maybe that’s a bit less dunder-y way to get to the goal of ‘addon-namespaced sys.modules entries which addons don’t know are addon-specific’. See this… Damn, I do NOT enjoy Python’s module system…
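To illustrate what “addon-namespaced `sys.modules` entries” could look like, here is a deliberately simplified sketch. It does not override `__import__` or install a `sys.meta_path` finder; it uses the plain `importlib.util` recipe for loading a file under a chosen name, only handles single-file modules, and does not rewrite imports made inside the dependency itself. `load_namespaced` and the `addon__module` key format are hypothetical.

```python
import importlib.util
import sys
from pathlib import Path


def load_namespaced(addon_name, module_name, deps_dir):
    """Load deps_dir/module_name.py under an addon-specific sys.modules key."""
    key = f"{addon_name}__{module_name}"
    if key in sys.modules:
        return sys.modules[key]
    spec = importlib.util.spec_from_file_location(
        key, Path(deps_dir, f"{module_name}.py")
    )
    module = importlib.util.module_from_spec(spec)
    sys.modules[key] = module
    try:
        spec.loader.exec_module(module)
    except BaseException:
        # Do not leave a half-initialized module behind.
        del sys.modules[key]
        raise
    return module
```

With this, two addons can each hold their own copy of a same-named dependency, since neither copy is ever stored under the bare name.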

Since add-on code can already easily do os.removedirs('/*') (and obscure that code), there is no such thing as “near-effortless” security scanning of add-ons. Just knowing the SHA-sum of a wheel doesn’t mean it’s vetted to be secure.

You’re right; “near-effortless” is a bit strong and broad. I’ll perhaps constrain my opinion by saying that, if the threat model is a malicious addon author, then, well, good luck.

I’m more worried about / referring to the supply-chain threat model. These aren’t hypothetical; a quick search for ‘pypi supply chain attack’ finds dozens immediately (like this one), and it’s not unique to PyPi (I’m almost scared to try searching npm). Without meaning to, an addon author will most likely one day inadvertently pull in malicious code - and the worst part is, it might not even be anybody’s fault.

As I understand, these are mainly:

- Vulnerable Versions: It’s not uncommon for ex. `urllib3` to suddenly get a nasty CVE attached. The platform-level `requirements.lock` scanning (like GitHub now enforces as opt-out) allows the final-release version of an extension platform the ability to, for example:

  - Notify users who opt-in to update checks, that there’s a vulnerability.

  - Notify addon devs that they need to bump the version.

  - If it’s serious enough, temporarily pull the addon and notify users that they should disable it.

- Namespace Attacks: By knowing which packages Blender addons tend to use, an attacker can target Blender users by squatting on ex. common misspellings of packages that Blender addons tend to use, providing almost the same package, with the little extra addition of, say, `time.sleep(3600); os.system('rm -rf /*')`

  - It’s a nasty one, especially because it’s easy to miss the difference between `xarray` and `xqrray`, especially if the malicious variant installs a wheel with the name `xarray`.

  - The more niche the package, the bigger the problem - and it’s really hard to prevent, is the bad news (attackers can just focus on packages that are niche in the PyPi sense, but popular in the Blender Addon sense, and maybe coast by).

  - The good news is, security researchers (and PyPi itself) spend a lot of time proactively looking for these kinds of things. But the only really scalable way of staying up to date on what they find, is if the platform scans everybody’s ‘requirements.lock’ files.

- Real Compromised Upstream: I imagine `scipy` has hardware-backed PGP signatures and such, but does everything? Do they all stay up to date with other’s vulnerabilities? It happens, and more even than other kinds of issues, I’d imagine one would want to get the patched version installed pretty fast.
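A platform-side scan of pinned dependencies could start out as simple as the sketch below. Everything here is illustrative: the advisory data would have to come from a real vulnerability feed, the function names are made up, and the look-alike check via `difflib` is just one cheap heuristic for the `xarray` / `xqrray` kind of typo, not a complete defence.

```python
import difflib


def find_vulnerable(pins, advisories):
    """Return pinned names whose exact version appears in the advisories.

    pins: {name: version}; advisories: {name: set of known-bad versions}.
    """
    return sorted(
        name for name, version in pins.items()
        if version in advisories.get(name, ())
    )


def find_lookalikes(pins, well_known, cutoff=0.8):
    """Flag pinned names that are close to, but not equal to, a known name."""
    suspects = {}
    for name in pins:
        if name in well_known:
            continue  # an exact match is the real package name
        close = difflib.get_close_matches(name, well_known, n=1, cutoff=cutoff)
        if close:
            suspects[name] = close[0]
    return suspects
```

Both functions take the `{name: version}` mapping that a parsed lockfile would provide, so they can run on every upload and again whenever the advisory data changes.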

It’s a specific kind of threat, and I don’t think by any means that having deps scanning would mean the end of talking about security. But it’s a very real-world kind of attack, and I’d argue it’s a relatively low-effort-high-reward mitigation to put in place when making a platform like this.

Well, given the DOS attacks on the blender sites a few months ago - clearly, the software/company is on someone’s radar.

As a user who knows very little about python, and also not much at all about expertly dealing with sec threats - I’ll just say that all of this above + Extensions being an active connection + the existing “Allow Execution (permanently? y/n)” dialog box - does give me pause.

Hi, is the file size limit for the .zip file final, or is it possible to increase the 10MB file size limit? We have just recently switched to packing binaries instead of implementing everything in Python, because dependencies felt like a western and users were frustrated. Even if we did not switch to binaries, I would need to bundle for Python 3.10 and Python 3.11 for 3 platforms and 2 architectures if I want to support 3.3LTS-4.1+. I believe this to be a reasonable strategy, but it is way over the 10MB limit. Thank you for the info, btw the platform’s great!