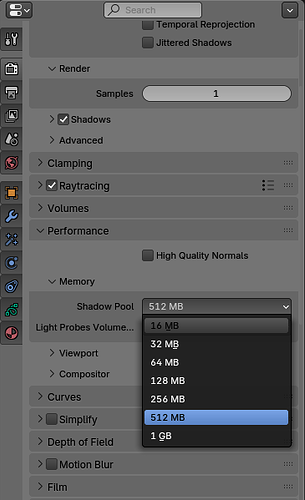

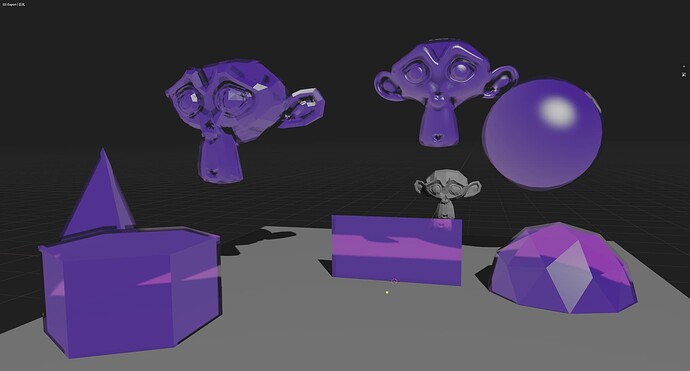

This is a general issue with Blender when too many lights are used in EEVEE Next. I've found that the best solution is to raise the shadow pool memory to 1 GB.

This helps somewhat, but I feel the limit could go even higher…

Indeed, this limit feels quite small. Couldn’t the limit be dynamic? Say, half my GPU’s VRAM?

What would help is a tool to manually prioritize shadow map resolution close to the camera… kind of like in old EEVEE, but that is a different and painful topic.

Please stay on topic.

The 1 GB limit is a technical one. We can increase it, but it requires some changes. I added it to the roadmap.

> What would help, was a tool to manually prioritize the shadowmap resolution close to the camera… kinda like it was in old eevee

This is exactly what the new EEVEE does, and it does it better than the legacy one, on every light. The issue is that the old system could easily go beyond 1 GB of VRAM for all shadows, and the new one cannot.

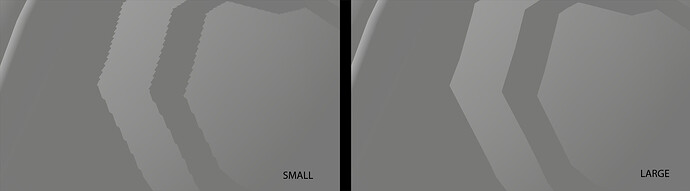

This is another one of those problems with Blender in general rather than the NPR engine. I don't know much about the terminator issue, but I do recognize that type of jagged edge. The size of your mesh is making the problem more apparent. You didn't actually do anything wrong here; unfortunately, Virtual Shadow Maps don't seem to change resolution dynamically based on distance.

I learned that the hard way when I was doing NPR in UE: I had to switch to ray-traced shadows because a similar thing used to happen when VSMs were enabled.

Anyway, a workaround (not really a fix) is to increase the scale of your mesh. However, this will REALLY mess with physics simulations. Below is a comparison of what happens:

Definitely not a viable workaround for any situation I'm in. Not just physics, but props, materials, relighting the scene, etc.

(And the shadow artifacts and terminators are still keeping me on EEVEE Legacy.)

Thanks for your reply, much appreciated. You're right, the object size does matter, but I need this in production, with rather complex scenes, rigs, etc. that I've already made. So upscaling everything just isn't an option for me. On top of that, it feels rather bizarre to need workarounds for such a bread-and-butter thing as a close-up of a real-world-scale object.

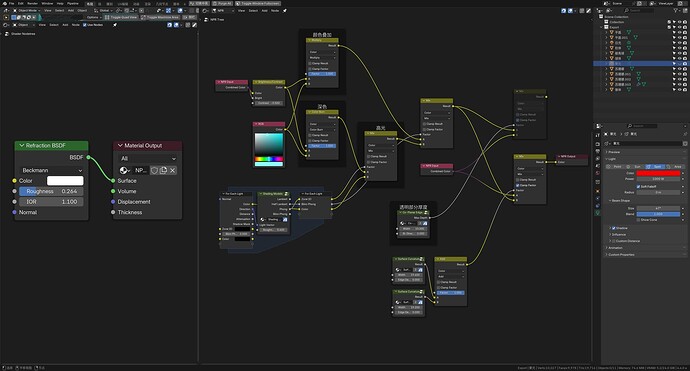

Since the shader Repeat Zone isn't specific to the NPR design, wouldn't it make sense to try to get it into master sooner, instead of along with everything else?

Not if that results in any limitations being put on the NPR feature set, for reasons of forced compatibility with other renderers.

We actually discussed that briefly internally. It does make sense, but it has considerable implications for engine compatibility, interoperability, and material export/import.

It is definitely solvable, but we are reserving this discussion for later.

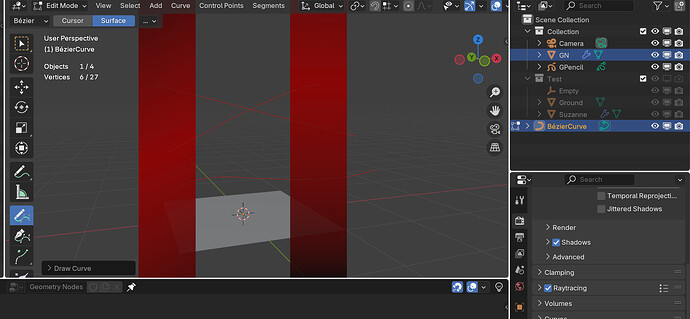

There is a bug with curves in the NPR branch: sometimes the curve disappears in Edit Mode, and when you switch between Wireframe and Solid mode, the curve starts glitching like this. I tried other branches and they are all fine, so I think the bug is only in NPR?

Adding a Principled Volume material to an object in the NPR prototype makes the world background black. Submitted report: #4 - 4.4NPR Prototype Shader - The world is affected by the volume material effect of the object - npr-tracker - Blender Projects

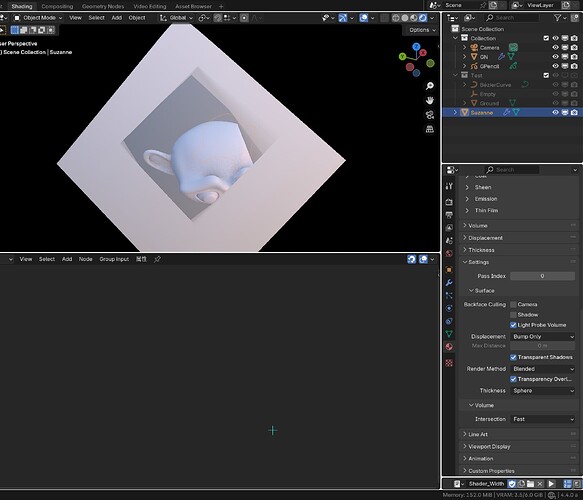

Suzanne uses Blend mode and the NPR plane uses Dithered mode, but the result is weird. Does the NPR node not support materials using Blend mode? That would be a pain.

It’s hard to say what’s going on there just looking at an image.

NPR is not supported on Blend mode materials at the moment, but you should be able to use regular Blend mode materials as normal.

I know why: I forgot to enable Raytraced Transmission on Suzanne. I always forget that.


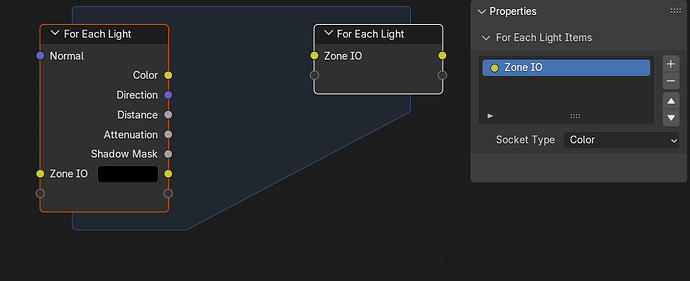

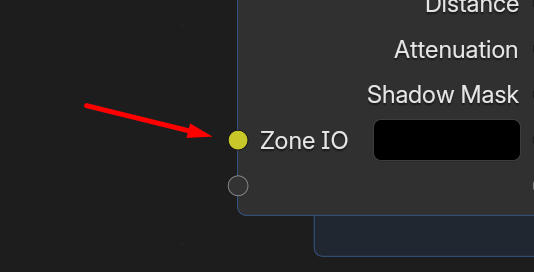

Sorry if the question is dumb, but in "For Each Light", what exactly is the Zone IO?

Is it the Diffuse Color of the material?

I'm asking to understand what is supposed to be used as input there in case we're not using its RGB value:

Thanks!

Not a dumb question at all, especially if you are not used to Geometry Nodes Zones.

The simple answer is that it’s whatever you want, it’s just a zone parameter.

They’re very similar to Node Groups inputs/outputs, so you can add/remove them at will.

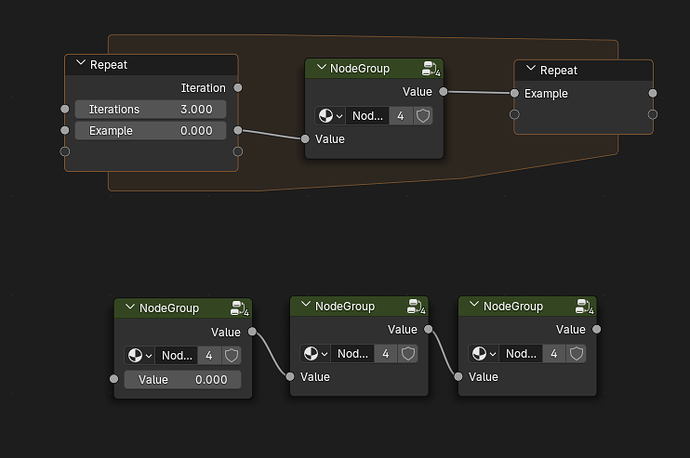

I think the easiest way to understand zone properties is with the Repeat Zone.

Here the Repeat Zone and the 3 chained Node Groups are equivalent:

In most cases the default Zone IO would be used to add together the contribution of each light, but it's there just for convenience.
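As a rough analogy in plain Python (not Blender's node API), the sketch below assumes the For Each Light zone passes its Zone IO the same way the Repeat Zone does: the value enters the zone, each iteration's output becomes the next iteration's input, and the last output leaves the zone. `Light`, `accumulate`, and `for_each_light` are hypothetical names, and the per-light body (light color × intensity × albedo) is only an illustrative stand-in for whatever you build inside the zone.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Color = Tuple[float, float, float]


@dataclass
class Light:
    """Hypothetical stand-in for a scene light (not Blender's Python API)."""
    color: Color
    intensity: float


def add(a: Color, b: Color) -> Color:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def accumulate(light: Light, zone_io: Color, albedo: Color) -> Color:
    """Hypothetical zone body: add this light's tinted contribution to the running total."""
    contribution = tuple(c * light.intensity * a for c, a in zip(light.color, albedo))
    return add(zone_io, contribution)


def for_each_light(lights: List[Light], zone_io: Color,
                   body: Callable[[Light, Color], Color]) -> Color:
    """Zone IO flows in, is handed from one iteration to the next, and flows out."""
    for light in lights:
        zone_io = body(light, zone_io)
    return zone_io


lights = [
    Light((1.0, 1.0, 1.0), 1.0),
    Light((1.0, 0.0, 0.0), 0.5),
    Light((0.0, 0.0, 1.0), 2.0),
]
albedo = (0.5, 0.5, 0.5)
body = lambda light, io: accumulate(light, io, albedo)

# The zone version: start from black and accumulate over all lights.
looped = for_each_light(lights, (0.0, 0.0, 0.0), body)

# The "3 chained node groups" version: each call feeds its output into the next.
chained = body(lights[2], body(lights[1], body(lights[0], (0.0, 0.0, 0.0))))

print(looped, chained)
```

Both versions yield the same color, which is the point of the Repeat Zone comparison: the Zone IO is simply the value threaded from one iteration to the next, so it can hold whatever you choose, not only a summed light color.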

You can check the Sample Assets for an example.

Is "IO" meant to refer to "input/output"? If so, I usually see that abbreviated as I/O. But I also wonder: isn't it only an input? Wouldn't the output be on the other side?

I understand that, being a zone, it's potentially going to run multiple times, but it still seems like a confusing name to me.