Hi, so I think I figured it out: it only appears when plugged into a Principled BSDF with an IOR higher than 1.

It would be nice if I could still modify that while using the specular inputs, without it affecting the diffuse input.

OK, one last round of tests and blends and then I’m cutting myself off, at least until there is an update.

I really enjoy all the features and node groups in NPR, and I definitely miss them when I’m back in standard Blender.

Lighting:

A common effect setup in old EEVEE was an Emission and Transparent Shader masked by a texture; with a higher emission strength and the Bloom effect, you could get a really nice glow outside of your object.

It seems like this setup could be replaced by a Refraction Shader alone in the new system, but I wasn’t sure how that would interact with Compositor Bloom, or other objects in the scene.

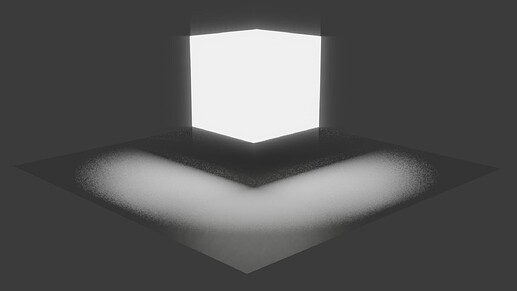

I did a quick test of a scene with no lights, no Emission Shader - just NPR nodes multiplying an RGB value - and enabled Raytracing and added Glare/Bloom in the Compositor:

So, you can get emissive bloom effects just by boosting a color value. It makes sense, but I wasn’t sure it would work.
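A likely reason this works: bloom/glare passes generally start with a bright-pass that only keeps pixel energy above a threshold (the Compositor's Glare node exposes a Threshold setting for this). Below is a minimal sketch, assuming a threshold of 1.0; `bright_pass` and `boost` are hypothetical helpers for illustration, not Blender's actual Glare implementation.

```python
# Simplified bright-pass, the first step of a typical bloom: only energy above
# a threshold contributes to the glow. Illustration only, not Blender's Glare node.

def bright_pass(rgb, threshold=1.0):
    """Return the part of each channel that exceeds `threshold`."""
    return tuple(max(c - threshold, 0.0) for c in rgb)


def boost(rgb, strength):
    """Multiply a color by a scalar, like NPR nodes multiplying an RGB value."""
    return tuple(c * strength for c in rgb)


base = (0.8, 0.3, 0.1)
for strength in (1.0, 4.0):
    # At strength 1.0 nothing passes; at 4.0 the red and green channels glow.
    print(strength, bright_pass(boost(base, strength)))
```

So no Emission Shader is needed as such: once the shaded value goes above the threshold, the compositor treats it as glowing.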

Also, shadows have been disabled on the Cube, but it still creates a shadow underneath it, despite emitting light and ignoring shadows. I suspect this has to do with how EEVEE calculates screen space lighting, as the shadow would move when I rotated around the scene.

I took this idea into a campfire-type scene: the rocks are “Ghibli” style, based on Kristof Dedene’s technique; the logs are the BG Wood Board material I posted above, applied to cylinders; the fire is masked noise on an emissive plane.

First, with Linear blending on the mask:

Then, with Constant blending for hard edges:

There is much more light in the second, even though the Emission strength is the same; only the blend changed.

It also suffers from the shadow issue: shadows are disabled on the emissive plane, but it still casts shadows relative to the Camera, and rotating around the scene will move the shadows.

I should maybe be sourcing my light from a Probe, rather than Screen Space, but I had some issues when I tried that in another test:

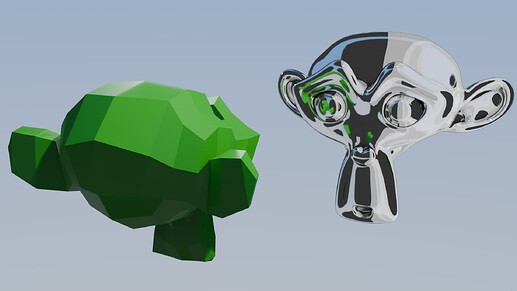

Chrome / Metal:

This is using Screen Space Raytracing; when I tried it with a Light Probe, the reflections were reversed.

Same material, but with a Wave Texture on the Normals to give an X-Men Colossus type ring effect:

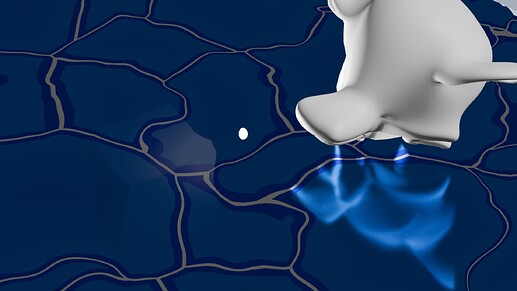

Water:

Simple toon water with transparency, refraction and reflection:

Geo Nodes wave setup behind a Kuwahara plane:

Didn’t notice that the water texture and foam weren’t aligned until after it rendered, blerg.

Refraction:

Shockwave effect using Ggenebrush’s lowpoly buildings:

RGB Glitch effect: split Combined Color into R, G and B channels, then recombine them with different offsets and textures (offsets from 0 to 1000 shown below):
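The channel-split idea above can be sketched outside of Blender as: read each channel from a horizontally shifted position, then recombine. This is a hypothetical illustration, not the node setup itself; offsets here are in pixels (the units in the node setup may differ), and out-of-range reads are clamped to the image edge, though wrapping would be an equally valid choice.

```python
# Hypothetical sketch of an RGB split / glitch: each channel is sampled from a
# shifted x position, then the three channels are recombined into one pixel.

def rgb_split(image, offsets):
    """image: list of rows of (r, g, b) tuples.
    offsets: dict mapping channel index (0=R, 1=G, 2=B) to a pixel shift;
    a positive shift moves that channel to the right."""
    height, width = len(image), len(image[0])
    result = []
    for y in range(height):
        row = []
        for x in range(width):
            pixel = []
            for channel in range(3):
                src_x = x - offsets.get(channel, 0)
                src_x = min(max(src_x, 0), width - 1)  # clamp to the image edge
                pixel.append(image[y][src_x][channel])
            row.append(tuple(pixel))
        result.append(row)
    return result


# One white pixel followed by two black ones; shift R right and B left.
row = [[(1, 1, 1), (0, 0, 0), (0, 0, 0)]]
print(rgb_split(row, {0: 1, 2: -1}))  # [[(1, 1, 0), (1, 0, 0), (0, 0, 0)]]
```

Swapping the constant offsets for values read from a noise or stripe texture gives the "different textures per channel" variant.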

Misc:

A feathered wing - tons of stacked transparencies, my computer hated me for this one:

BG-style cell bars Geo Node setup:

Toon Axe:

OK, one more that I forgot to put in the last post:

What about mesh-based processing, but without AOVs or Camera Planes?

Can the old inverted hull outline method be co-opted into a new stylized workflow with the new system?

Yes. (Except you don’t need to flip the normals in this case.)

Pros:

- no AOV setup

- no Camera Planes

- quickly add a second layer of NPR processing to a single mesh

Cons:

- Solidify can be unpredictable on complex shapes

- may not be able to use effects outside of the mesh as easily (you can extend the modifier beyond the mesh, but you would have to be careful about how your textures were mapped, unlike the Camera Plane method)

- adds geometry

Uhhh, there seem to be some weird artifacts on fairly low-poly models that I can’t find a way to remove. Although they only appear when the camera is up close, they kind of ruin any chance of having close-ups with the model. I am using the latest release and I’ve seen this problem brought up in other threads here, but none of the fixes I’ve found (like slightly raising the soft falloff to 0.1) seem to work.

(Also, I want to add: is it intentional that texture values also come through to the Direct Diffuse input? That seems kind of counter-intuitive, as I have to put the texture into the tree just so the black outlines on my texture don’t interfere with the shading.)

I think those are not related to the NPR branch, but rather to EEVEE itself since the EEVEE Next release.

Apparently, a fix that should solve this was committed just yesterday: #136136 - EEVEE: Add quantized geometric normal for shadow bias (Blender Projects)

Does changing the Clip Distance of the Camera/Viewport have any effect on them?

In old EEVEE there were some artifacts that I could remove by tweaking the Clip Start.

Unfortunately no, and these artifacts were never really present in old EEVEE either, so I don’t think any solution from the past can really help.

Also, turning off cast shadows on the light removes the artifacts entirely. The problem is that I then lose cast shadows, which might not be a good compromise.

Note: the cracking effect is simulated with Geometry Nodes; only the NPR-related part is demonstrated here, that is, the object hiding itself through occlusion, and AOVs being used to pass information such as the image of the front object to the object behind it.

NPR parameters cannot be animated for the time being. I believe this is only temporarily outside the prototype plan and should be fixed in a later official version. As a temporary workaround, the shader can still be animated normally, so you can put the parameters that need to be animated in the shader and pass them to the NPR side through channels.

With NPR, would something like just ignoring color for a material be possible? So you could have a material on a mesh just inherit color from whatever is below, and only use it for something like normal decals. I know this isn’t the precise terminology, but this sort of thing tends to be common in deferred shaders.

Now that 4.4 has been released, will the NPR prototype move forward in parallel with it? Will there be a 4.4.1 NPR prototype? Will new features from Blender’s main branch be included in the development of this branch?

Development Feedback

I love all the work here, and from the little I tried, this opens a Pandora’s box of creativity, but I also see it as a hard one to merge into main. This project seems quite large, so I think delivering these features in stages would be sensible, not only for publicity’s sake (the most hyped feature coming to Blender) but also for practicality’s sake:

Process of commits:

- Add in the Half Lambert shader to begin

- Add in the screenspace refraction system to RGB (core feature missing from EEVEE Legacy)

- Add in the node tree type for the Shader to RGB pipeline (or NPR nodes)

- Add in the For Each Light

- Add in new nodes for the new system, one by one, etc

- Optimization passes, etc.
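For reference on the first item: the classic Half Lambert formulation (popularized by Valve) remaps N·L from [-1, 1] to [0, 1] and squares it, so the terminator wraps around the object instead of cutting to black. A minimal sketch follows; whether the prototype's node squares the result exactly like this is an assumption.

```python
def lambert(n_dot_l):
    """Standard Lambert diffuse term: negative values are clamped to black."""
    return max(n_dot_l, 0.0)


def half_lambert(n_dot_l):
    """Valve-style Half Lambert: remap [-1, 1] to [0, 1], then square."""
    return (0.5 * n_dot_l + 0.5) ** 2


for n_dot_l in (1.0, 0.0, -0.5, -1.0):
    print(n_dot_l, lambert(n_dot_l), half_lambert(n_dot_l))
```

At N·L = 0 plain Lambert is already black, while Half Lambert still returns 0.25, which is what makes it popular for soft toon bases.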

I really do want to see many of the core features of this prototype in the main branch. For EEVEE, this is the spearhead against the competition, and right now EEVEE needs it more than ever.

Sorry again for taking so long to answer.

Baking in EEVEE would be nice, but that’s out of the scope of the NPR project.

What’s this about?

That was the initial idea, but the details for the final implementation are yet to be decided.

Nope, the plan atm is to include some initial NPR features in 5.0.

That’s the expected behavior, since you’re reading a refraction ray, not a camera ray.

Refraction materials in main work the same way.
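To illustrate the refraction-ray point (and possibly why the effect in the first post only shows up with an IOR above 1), here is the standard GLSL-style `refract` formula as a Python sketch. With eta = 1 the refracted ray equals the incoming camera ray; any other ratio bends it, so what it reads no longer lines up with the camera view. The convention eta = n1 / n2 (so 1 / IOR when entering a surface from air) is an assumption, not a statement of how EEVEE maps its IOR input.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def refract(incident, normal, eta):
    """GLSL-style refract. incident and normal are unit vectors with the normal
    facing against the incident ray; eta = n1 / n2.
    Returns None on total internal reflection."""
    cos_i = dot(normal, incident)
    k = 1.0 - eta * eta * (1.0 - cos_i * cos_i)
    if k < 0.0:
        return None  # total internal reflection, no refracted ray
    scale = eta * cos_i + math.sqrt(k)
    return tuple(eta * i - scale * n for i, n in zip(incident, normal))


ray = (0.6, -0.8, 0.0)   # camera ray hitting a surface at an angle
up = (0.0, 1.0, 0.0)     # surface normal
print(refract(ray, up, 1.0))         # unchanged direction
print(refract(ray, up, 1.0 / 1.45))  # bent toward the normal (glass-like IOR)
```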

There may be better ways, but an option could be to output an AOV from the World material with your Camera Background Color.

There’s no final decision yet on how this will work, or if there will be separate NPR trees at all in the main release, or if we will try to integrate everything into the main Material tree.

But yeah, something like that was my initial intent, we’ll see.

AFAIK, this is planned. No idea on ETA, though.

We also have plans for a somewhat related NPR-oriented solution that doesn’t involve multiple view layers, but again, nothing is set in stone yet.

Not atm, but this is planned and agreed upon already for the main implementation.

Kind of, to some degree, since you can read refracted material properties or AOVs.

But in this case, the normal could only be used for NPR shading, not for PBR.

Sure, once 4.4.1 releases. It should take me like 5 minutes.

Probably not. We should be starting to add NPR features into main once 4.5 branches out, but getting all devs to agree on how to approach NPR is super hard, so we’ll see.

Yes, that’s the plan.

As mentioned by @slowk1d, this is being fixed as part of EEVEE.

There are still some issues to iron out, though.

Also, please guys take a look at this, since it’s related and can be quite relevant for NPR:

@Alaska @Sil3ncer were mentioning this issue as well, and this is not an NPR issue, but something that affected old and new EEVEE alike: line width changes relative to the object as the camera moves.

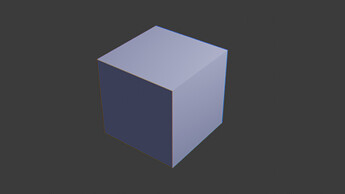

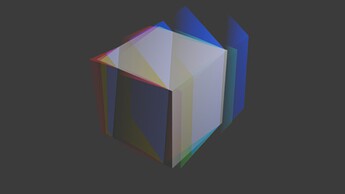

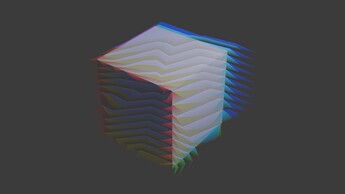

This is a cube with the Co-Planar Edge Detection node group with the camera in close:

This is the same cube with the camera zoomed out:

Relative to the size of the cube, the outline in the first image is about 0.01x the size of the cube, and in the second it is 2-3x the size of the cube.

This is an extreme example, but the effect is noticeable with smaller camera movements as well.

I understand that there are limits when using screen space calculations, but it would be nice to be able to keep lines closer to their ‘intended’ size, if possible.

Hopefully that makes sense, and the other users can chime in if I’ve mischaracterized their issues.

Thanks for all the work and all the updates!

Personally, I think if this were implemented, it should at least be a toggle.

A use case I’ve found for co-planar edge detection is creating cleaner outlines when rendering models as sprites for a game. Though it’s a niche use case, the option to have the outlines based on screen-space resolution should still be there, especially for stylization.

Also, it would be nice if the edge detection nodes had a way to not be affected by other objects around or behind a surface; I’m not sure how I can filter that out with AOVs. (This used to have a reflective floor, but it just removes the outline for the feet.)

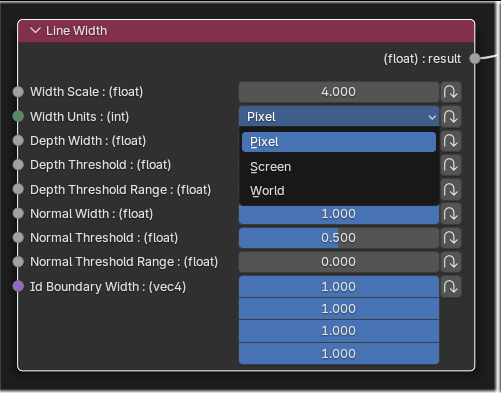

I see. For reference, in my render engine I have this:

Here you can set the units in pixels (for cases like the @snowtenkey one), in screen space (so perceptual line width doesn’t change depending on the resolution), and in world space, which is what @spectralvectors wants (I think).

So I agree this kind of thing is important and there’s no “one size fits all”.
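A rough sketch of how the three units could be converted to an on-screen width, assuming a simple pinhole camera with horizontal sensor fit. `LineUnit` and `line_width_px` are hypothetical names for illustration, not the Line Width node mentioned above or any Blender API.

```python
from enum import Enum


class LineUnit(Enum):  # hypothetical, mirrors the Pixel / Screen / World idea
    PIXEL = "pixel"
    SCREEN = "screen"
    WORLD = "world"


def line_width_px(width, unit, image_width_px, depth=None,
                  focal_mm=50.0, sensor_mm=36.0):
    """Convert a line width to pixels.

    PIXEL:  width is already in pixels (fixed regardless of resolution).
    SCREEN: width is a fraction of the image width (scales with resolution).
    WORLD:  width is in scene units at view depth `depth` (pinhole camera,
            horizontal sensor fit assumed).
    """
    if unit is LineUnit.PIXEL:
        return width
    if unit is LineUnit.SCREEN:
        return width * image_width_px
    if depth is None or depth <= 0:
        raise ValueError("world-space width needs a positive view depth")
    focal_px = focal_mm / sensor_mm * image_width_px  # focal length in pixels
    return width * focal_px / depth


near = line_width_px(0.02, LineUnit.WORLD, 1800, depth=2.0, focal_mm=36.0)
far = line_width_px(0.02, LineUnit.WORLD, 1800, depth=4.0, focal_mm=36.0)
print(near, far)  # doubling the distance halves the on-screen width
```

Since an object's projected size also shrinks with 1 / depth, a World width keeps a constant ratio to the object, while a Pixel or Screen width grows relative to the object as the camera moves away, which is the cube effect described above.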

The Image Sample node allows selecting between View and Pixel space offsets for this reason.

But yes, for the final thing we need something more fleshed-out.

(this used to have a reflective floor but it just removes the outline for the feet)

This is where other types of edge detection should come into play (based on normals, object ID, or custom IDs set from nodes).
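As an illustration of ID-based edges: a pixel becomes an edge when any 4-connected neighbour has a different ID, so lines depend only on which object or surface covers each pixel, not on its shaded color. The `ignore` set, used here so a floor never receives outlines itself, is a hypothetical extra. This is a sketch under those assumptions, not how the prototype's nodes work.

```python
def id_edges(ids, ignore=frozenset()):
    """Mark pixels whose ID differs from any 4-connected neighbour.

    ids: list of rows of integer IDs (object index, material index or a custom ID).
    ignore: IDs that never receive an outline themselves, e.g. a floor.
    """
    height, width = len(ids), len(ids[0])
    edges = [[False] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            own = ids[y][x]
            if own in ignore:
                continue
            for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                ny, nx = y + dy, x + dx
                if 0 <= ny < height and 0 <= nx < width and ids[ny][nx] != own:
                    edges[y][x] = True
                    break
    return edges


# ID 0 = floor, ID 1 = a single character pixel in the middle.
ids = [[0, 0, 0],
       [0, 1, 0],
       [0, 0, 0]]
print(id_edges(ids))              # floor pixels next to the character also get lines
print(id_edges(ids, ignore={0}))  # only the character keeps its outline
```

Because a reflection in the floor would still carry the floor's ID, it should not erase the outline where the feet meet the floor.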

Yes, I probably should have just said World Space, but that is exactly what I mean!

Something like your Line Width node with an Enum to choose between Pixel, Screen and World would be great.

This is a very cool project, I love what I’ve seen so far. I see potential here for this to be the thing that finally allows a lot of us motion graphics folks to be free of After Effects once and for all. I don’t think this is a small thing. It could potentially bring a lot of new artists into the Blender world. I’m wondering if anyone else has been thinking the same, and if there’s support behind this idea. From what I can tell, we would only need a couple of key things for this to happen, and they could be implemented in one of several ways. Not sure if there’s already talk of these things being part of NPR:

- The main thing would be some way to use the existing compositing-effects within shaders. So we can have 3d planes, with a video texture, and key or blur the video, for instance. Being able to put a complete compositing node-tree inside a shader, like a node group with an input and output, seems to be an intuitive way for this feature to be used (you could tell the node-tree that it’s making a texture of a certain size, and then plug that into texture inputs), but maybe there’s a more practical way on the development end.

- The other main thing would be easy control over blending modes of individual objects in the scene, and it looks like maybe NPR already has this?

- The dream wish-list item would be an easier-to-use image sequence/video loading node, linked to some sort of intuitive control in the animation timeline or VSE, allowing easy control of start/stop, looping and time stretching. (Some people might want to load dozens of video layers in their project, and a quick way to control their timing would be a huge benefit over the existing approach of juggling frame numbers on each node.)

Thanks for your consideration!

I know I’ve mentioned it before, but node-level light linking is definitely my most requested feature.

Using the For Each Light zone, I can’t help but wish that there was a way to specify only a collection of lights that should be affected in this specific way.

For example, I still like to use point lights to fake bounce lighting sometimes. If I could define one look, say toon shading, for the Spot Lights, and a separate look, say soft lighting without attenuation, for the Point Lights, I’d be able to get so much closer to the look I’m going for. And of course, I’d want to define all those light looks without affecting the lighting and rendering of the other lights in the environment.
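A hypothetical sketch of what node-level light linking inside a For Each Light loop could boil down to: only lights from linked collections are processed, and each light type gets its own shading style. `Light`, `STYLES`, `shade` and the collection names are made up for illustration; none of this is an existing Blender API.

```python
from dataclasses import dataclass


@dataclass
class Light:
    kind: str           # "SPOT", "POINT", ...
    collection: str     # hypothetical collection used for linking
    n_dot_l: float      # cosine between surface normal and light direction
    attenuation: float  # distance falloff factor in [0, 1]


def toon(light):
    """Hard two-tone step that respects distance falloff."""
    return 1.0 if light.n_dot_l * light.attenuation > 0.5 else 0.0


def soft_unattenuated(light):
    """Soft diffuse that deliberately ignores distance falloff (fake bounce)."""
    return max(light.n_dot_l, 0.0)


STYLES = {"SPOT": toon, "POINT": soft_unattenuated}


def shade(lights, linked_collections):
    total = 0.0
    for light in lights:  # analogous to a For Each Light zone
        if light.collection not in linked_collections:
            continue  # light is not linked to this material
        style = STYLES.get(light.kind)
        if style is not None:
            total += style(light)
    return total


lights = [Light("SPOT", "key", 0.9, 1.0),
          Light("POINT", "bounce", 0.4, 0.1),
          Light("POINT", "environment", 0.8, 1.0)]
print(shade(lights, {"key", "bounce"}))  # environment light is left untouched
```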