Here’s a topic for discussing T78606, the new hair object design.

Might also be a good place to discuss T78515.

From the task:

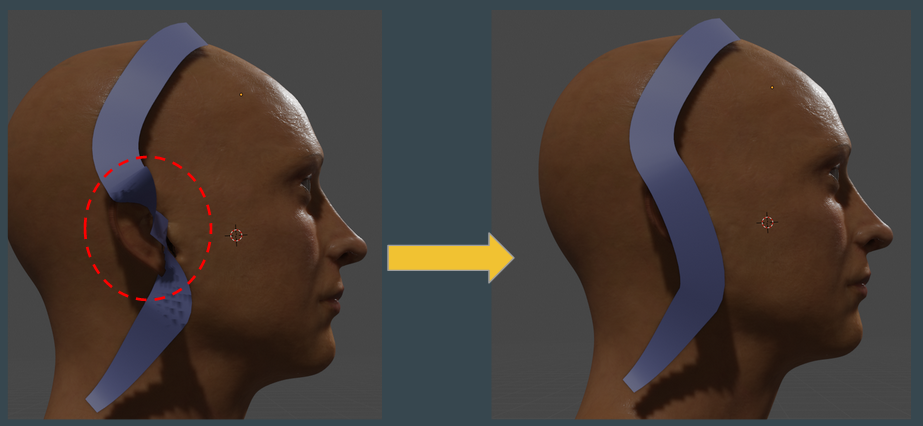

Sometimes the sampled normals from the surface are too noisy (ear area) and need to be averaged based on neighboring hair curve vertex normals. The smoothing should probably be done with an operator that is triggered by the user.
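For what it’s worth, the neighbor averaging described in the task could look roughly like the sketch below. It is plain Python rather than Blender API code, and `smooth_root_normals`, `radius` and `iterations` are names made up for illustration, not anything from the task. It assumes each hair root already has a sampled normal, and it uses a brute-force neighbor search that would need a spatial structure for real hair counts.

```python
import math


def normalize(v):
    """Return v scaled to unit length; zero vectors are returned unchanged."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        return v
    return tuple(c / length for c in v)


def smooth_root_normals(roots, normals, radius, iterations=1):
    """Average each root normal with the normals of all roots within `radius`.

    roots and normals are equal-length lists of (x, y, z) tuples.
    Each root counts as its own neighbor, so an isolated root keeps its normal.
    """
    current = [normalize(n) for n in normals]
    for _ in range(iterations):
        smoothed = []
        for p in roots:
            acc = [0.0, 0.0, 0.0]
            for j, q in enumerate(roots):
                # Brute-force O(n^2) neighbor test, fine for a sketch only.
                if math.dist(p, q) <= radius:
                    for k in range(3):
                        acc[k] += current[j][k]
            smoothed.append(normalize(tuple(acc)))
        current = smoothed
    return current
```

A larger `radius` or more `iterations` gives smoother normals at the cost of detail, which is why leaving it to a user-triggered operator makes sense.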

I think the best solution to this case is actually to Keep It Simple, Stupid! Just make a separate growth object that is a simplified head shape. It would be nice to have an operator for smooth-sampling the normal… but it would be better to just use an object with smooth normals in the first place, IMO.

Even if the detailed mesh is the growth object, the user can duplicate it and use custom normals for the growth object mesh.

I think it’s bad practice to try to do too many things with one object. I always make a separate mesh for hair emitters, for example.

EDIT: I’m also extremely thrilled to see the direction this feature is headed, and I can’t wait to help test it!

EDIT 2: Also, I am confused: is the proposal that the emitter object and the growth object are the same, or is it possible to emit from one object (e.g., just a scalp) and have the normals conform to another object (e.g., a head shape mesh)? I think two objects are the way to go here, or maybe even any number of objects with individual control over their influence.
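To make the "any number of objects with individual influence" idea concrete, here is a minimal sketch in plain Python. It assumes the normal of each growth object has already been sampled at the hair root by some other means. `blend_normals` and its parameters are hypothetical names, not part of the proposal or of Blender.

```python
import math


def blend_normals(normals, influences):
    """Blend unit normals sampled from several objects into one direction.

    normals:    list of (x, y, z) tuples, one per growth object
    influences: list of weights, one per growth object
    Returns the weighted sum, renormalized to unit length.
    """
    if len(normals) != len(influences):
        raise ValueError("need exactly one influence per normal")
    acc = [0.0, 0.0, 0.0]
    for n, w in zip(normals, influences):
        for k in range(3):
            acc[k] += w * n[k]
    length = math.sqrt(sum(c * c for c in acc))
    if length == 0.0:
        # Opposing normals (or all-zero weights) leave no usable direction.
        raise ValueError("weighted normals cancel out")
    return tuple(c / length for c in acc)
```

With influences `[1.0, 0.0]` this reduces to the single growth object case, so the two-object setup is just a special case of the general one.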

Three things I wish for hairs:

- Hair guides need their own vertex paint brush or key paint brush, and user-created paint layers or attribute layers, so they can be used with nodes/modifiers without the need to create vertex groups on the growth mesh. Everything discussed here about normals, tangents, growth and emission should be handled with nodes/modifiers, as well as the usual kink, roughness, clump, etc. The color should be accessible through materials as well.

- Two different kinds of mesh binding targets: one for roots, as currently, and a new one for keys. Currently hair can’t bend with the underlying mesh along the hair length. Key binding would help a lot with rigging as well. We could go further and add two binding sources, surface vs. volume, which would mean even better rigs. Also, being able to choose when and where to bind to a mesh: I’d rather manually choose when to bind to different subdivision levels with multiple binding modifiers than let the automated system ruin it for me.

- GPU children generation and modification. Yeah, it’s hard. But it’d be nice to have that much performance in the viewport😊

It’s important to distinguish between two cases. One is one-time generation, for hairs that end up bound to the mesh: there, what is dynamic is the deformation, not the generation. The other is dynamic generation, like that of children hairs, which behaves more like a modifier or a node. Normal and tangent generation could be done either way, with the user choosing.

Hello everyone, is there any update on the development of this new hair object?

Our animation studio currently runs a Maya-to-Blender pipeline based on an Alembic workflow, and it has worked well for the last 2 years. Recently we started exploring hair and ran some tests exporting hair curves from Maya to Blender via Alembic. That works fine until we need to give these hair curves children, which as far as we can tell is not possible.

There is an add-on (hairnet) that converts curves to particle hair, but it can’t be used with animated Alembic curves.

There are at least 2 major things that we think do not work with the current hair implementation:

- Curves can’t be rendered as hair, so the Principled Hair shader does not work properly.

- Animated hair curves from other apps can’t be used to generate children particles in Blender.

So we are currently under the impression that it is impossible to bring animated hair curves from another app and render them in Blender. Please correct me if I’m wrong.