Hi,

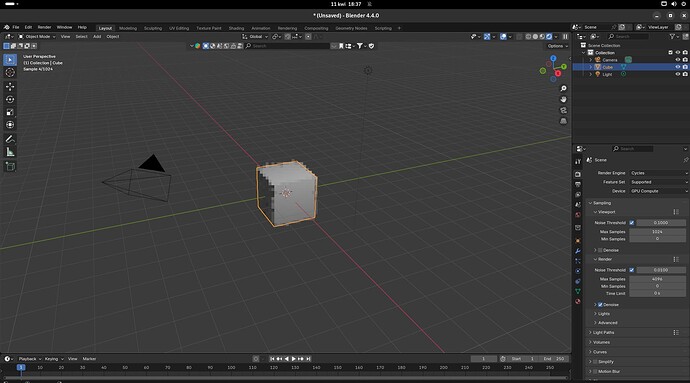

We are definitely interested in running Blender tests and benchmarks on a variety of hardware. Currently we cover Apple/Metal and Linux/NVIDIA, but we plan to expand to AMD and Intel, and also to broaden NVIDIA coverage. It takes a bit of time on our side, but you can see the status and progress here: #46 - Buildbot: GPU tests and benchmarking - blender-projects-platform - Blender Projects

This probably answers your first question.

Having more people running tests might improve the quality and stability of final releases, but if it's done by a separate project or team, I am not sure there is anything more practical than treating its findings as regular reports submitted by users.

> What versions of Blender should be tested - other than the ones officially packaged by Debian?

We mainly focus on bugs that can be reproduced in the official builds. We do our best to work with downstream maintainers, but we rely on them to do the initial investigation to make sure the issue is in Blender and not caused by some difference in the build or packaging.

To ensure issues are fixed before a release is made, testing should cover Blender from the Beta stage onward.

> Second question is about test coverage. What should be the final goal - full suite, GPU only (Cycles, EEVEE, Workbench), or Cycles only?

In an ideal world all render engines would be tested. Also, don’t forget the GPU compositor.

But there are all sorts of possible issues: texture filtering might differ between hardware, or there might be known GPU-specific failures in corner cases that we cannot easily blocklist in the testing framework. So the reports need to be read with some care.

> Are there any glaringly obvious obstacles that could prevent setting up automated Blender tests outside of Blender Institute? Was this done before?

It depends on what you mean by that. Some vendors already use Blender to test driver and SDK updates, and even while implementing new features, with minimal involvement from our side. But those vendors also participate closely in Blender development.

So the obstacles, the additional load on our team, etc. depend on exactly how you envision this testing being implemented. If the process is implemented in a similar way, then there are probably not that many obstacles.

However, if it is implemented in a way where some automated farm generates dozens of reports about hardware we don’t have access to, or uses non-official Blender builds, or runs in an environment we have no control over (drivers and SDKs do have bugs, and we are not really interested in maintaining all permutations of their versions), then it is a huge obstacle, and we’d probably say no thanks.

> In a hypothetical scenario where such CI is operational, how should regressions, crashes and bugs be triaged and reported? For example, if there is a bug deep in the HIP runtime, should it be filed directly in the official ROCm GitHub repository, here, or in both places?

If it's a Blender-side bug, or there is something actionable we can do to make life easier for downstream developers, it should be submitted to our bug tracker.

If the bug is in a compiler version we don’t use, or in the runtime, there is no clear reason to have reports on our side (they wouldn’t be actionable for us). But sharing such findings in our communication channels could be a good idea.