It wasn’t my intention to be disrespectful. I am a big fan of Blender, the community, and its developers. I started with version 2.36, if I remember correctly, and I have used Blender on a daily basis for many years.

No disrespect towards the developers, they kick ass … but compositing in Blender (at the moment) is just painful and feels too broken to repair without starting from scratch.

I feel you. I am learning to use the Compositor because I am learning Blender anyway, and it’s very slow. But comments such as " … i mean come on." are not constructive at all.

Are you going to tackle optimizing the CPU side or go for the GPU approach?

On the other hand, consider that shader programming is fragmented across OpenGL 4 / Vulkan / Metal, which makes the effort 3 times more difficult (3 backend implementations + 3 times more testing).

In that respect CPU code is 10 times easier to hack on and optimize, because it will not rot over time (no moving parts, no vendor lock-in).

Though I cannot quite see how CPU code stands a chance in the modern era of GPU computing. Very tough decision.

CPU still wins when it comes to scale. CPUs are cheaper than GPUs especially when doing cloud rendering.

On top of that, the GPU paradigm is built for brute force: render as much as possible, as fast as possible. The CPU, on the other hand, is primarily about optimization hacks.

Tiled rendering, for example, serves a purpose: to keep a block of pixels in working memory and avoid fetches from distant memory. On top of that, it can be very easy to run a block in a thread or send it over the network.
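To make the tiling idea above concrete, here is a minimal sketch in plain Python. It is not how Blender's compositor is implemented; `make_tiles`, `process_tile` and `run_tiled` are hypothetical names, the image is just a list of rows, and the per-pixel `op` stands in for a real node. Python threads will not give a real speed-up for this kind of work, so the point is only the structure: each tile is an independent job that could go to a thread or to another machine.

```python
from concurrent.futures import ThreadPoolExecutor


def make_tiles(width, height, tile):
    """Yield (x0, y0, x1, y1) rectangles that cover the whole image."""
    for y0 in range(0, height, tile):
        for x0 in range(0, width, tile):
            yield x0, y0, min(x0 + tile, width), min(y0 + tile, height)


def process_tile(image, rect, op):
    """Apply a per-pixel operation to one tile and return the result block."""
    x0, y0, x1, y1 = rect
    block = [[op(image[y][x]) for x in range(x0, x1)] for y in range(y0, y1)]
    return rect, block


def run_tiled(image, op, tile=64, workers=4):
    """Process the image tile by tile, one job per tile (hypothetical helper)."""
    height, width = len(image), len(image[0])
    out = [[None] * width for _ in range(height)]
    rects = list(make_tiles(width, height, tile))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(lambda r: process_tile(image, r, op), rects)
        for (x0, y0, x1, y1), block in results:
            # Copy the finished block back into its place in the output.
            for dy, row in enumerate(block):
                out[y0 + dy][x0:x1] = row
    return out
```

For example, `run_tiled(image, lambda v: v * 2, tile=2)` doubles every pixel and gives the same result as processing the whole frame at once, just split into small independent blocks.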

I don’t see how you’re going to reach render-previewable compositing (which should be the goal) without doing some compositing operations in the GPU.

I think that, right now, you have some compositing operations that take time measured in seconds, and you have other operations that, implemented well, should take milliseconds-- like 1 or 2 ms for a 4k image. But when you have these two operations sitting next to each other:

a) People think that compositing is slow, because they don’t know which operations are slow and which are fast;

b) Developers don’t optimize the implementations of the fast compositing operations, because they think, “Heck, what’s shaving a few fractions of a ms going to mean when these other operations cost seconds? Compositing is just slow!”

I think, really, you need fast (previewable, GPU accelerated, parallel) operations, and you need slow, CPU operations. Maybe, with some fast-hack versions of the slow operations.

If accelerated, previewable compositing is ever a thing, I would love to see as many rendering options bundled into compositing as possible. That includes not just color curves, exposure, etc, but also things like treating Eevee shadow buffers as per-light render passes for us to mess with as we wish in compositing.

Beyond that:

- If we’re outputting a file, and reading that file as an image texture, can Blender please reload the file every frame? If we’re doing that, it’s because we’re trying to do some iterative rendering thing like hall-of-mirrors. **

- Can we have vector inputs for image textures so that we can use an image based tone map?

- Can we have a few vector math nodes? We’re doing velocity passes, normal passes; vector math is part of compositing. I mean, yeah, we can make them, but it’s kind of a pain, and out of keeping when there is so. much. sugar (probably more than there should be) in shader nodes.

** Edit: I realized I wasn’t doing this quite right before. Blender is loading an image sequence (not a movie) from properly named and referenced file outputs from previous frames. Although it is pretty clear that Blender is behaving strangely-- sometimes there’s a 1 frame offset, sometimes there’s not, with no apparent reason. Not designed for it, but doable.

Idea name: Improve “Levels Node” a bit, add “Min”/“Max” outputs

Brief description of the idea: This is a small but important improvement, since min/max is badly needed for normalizing specific masks, depths, etc. This is especially important when Blender is used to generate specific image-like data (not real art/photorealistic stuff), which may initially span a big range (like emission values or a specific non-realistic depth) but is needed as a normalized [0.0-1.0] gray map further down the line.

Min and Max outputs would allow masks and gradients to be normalized automatically in different ways (including non-linear ones), which is very useful for a wide range of post-processing tasks.

Additional notes: I suspect min/max are already calculated for Mean, just not exposed on the node… so this should be easy, I guess.
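As an illustration of what the Min and Max outputs would be used for, here is a minimal sketch of the normalization in plain Python. The `normalize` function and its `gamma` parameter are hypothetical and only show the math; in the compositor the same thing would be wired up from the proposed outputs and existing math nodes.

```python
def normalize(values, gamma=1.0):
    """Map values to [0.0, 1.0] using their min and max.

    gamma is a hypothetical extra that shows a non-linear variant:
    gamma=1.0 is a plain linear remap.
    """
    lo, hi = min(values), max(values)
    if hi == lo:
        # Flat input: there is no range to stretch, so return zeros.
        return [0.0 for _ in values]
    return [((v - lo) / (hi - lo)) ** gamma for v in values]
```

So a depth pass with values `[2.0, 4.0, 6.0]` becomes `[0.0, 0.5, 1.0]` without having to look up the range by hand.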

This is a small one but can be really helpful for debugging and understanding the flow of a comp visually. It also has the benefit of making it more obvious where your viewer node is connected in a complex comp, since it is differentiated. This suggestion can also be useful for all node systems in Blender where you can view the node tree from different points in the tree.

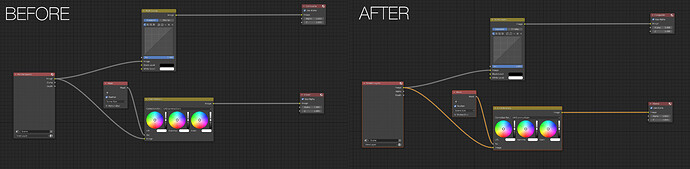

- Active Node Wires Highlighting - In many node-based systems, all active upstream connections to the viewer have a visual indication, for example changed wire colours or highlighted active nodes.

Here’s a quick mockup of what this could look like in Blender.

Edit: Made feature suggestion more generalized after suggestions from myway880 and NunoConceicao.
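For anyone wondering what "active upstream connections" means in practice, here is a minimal sketch in plain Python. It does not use Blender's API; the node names and the `(from_node, to_node)` link format are assumptions made up for the example. It just walks backwards from the viewer and collects everything that feeds it.

```python
def upstream_nodes(links, viewer):
    """Return the set of nodes that feed the viewer, including the viewer.

    links is a list of (from_node, to_node) pairs (assumed format).
    """
    inputs = {}
    for src, dst in links:
        inputs.setdefault(dst, []).append(src)

    active = set()
    stack = [viewer]
    while stack:
        node = stack.pop()
        if node in active:
            continue  # already visited, also guards against cycles
        active.add(node)
        stack.extend(inputs.get(node, []))
    return active


def active_links(links, viewer):
    """Return the links that would be highlighted for this viewer."""
    active = upstream_nodes(links, viewer)
    # A link is active when it ends on a node that feeds the viewer.
    return [(src, dst) for src, dst in links if dst in active]
```

Everything returned by `active_links` would get the highlighted wire colour; any branch that only feeds other outputs stays dimmed.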

Mentioning other proprietary software by name with screenshots is not allowed for legal reasons.

Maybe you should revise this with a clear proposal that doesn’t involve copyrighted software …

You should use Natron for these kinds of examples.

Thanks for the suggestion. Made a little edit to make it more generalized.

Made a little mock up using Blender as an example.

Hi folks!

What is the status of the Compositor Up project? Is it planned to be implemented?

I was testing it at 4K some days ago. It was super fast and, as far as I can see, uses OpenCL properly, especially with the scaling method implemented as a property.

I think it has a bright future. Can anyone confirm? Want to do a demo in it.

Thanks!

I just discovered that the creator of the Compositor Up project is currently porting the compositor to a full-frame workflow. A lot of nodes have already been ported, so there is progress.

https://developer.blender.org/T88150

Thanks for the info! Will follow it!

This is really great to hear, glad to see that the fork got him the attention and commit rights!

!!! AI, AI, AI !!!

The most important idea is: add two nodes for AI to the Compositor.

Generally speaking, it is hard to support all AI functions, but I think adding two nodes is easy:

1) one is an input node, which uses a URL to get a file;

2) the other is an output node, which can send a file to an AI server.

They could be implemented by an addon, but that is not graceful.

If Blender had these open interfaces, it could reach the AI world, right?

We love Blender, and we also love AI!

!!! AI, AI, AI !!!

!!! Blender, Blender, Blender !!!

Come on, Please !

In a more general sense, we could think of allowing the compositor to load “third-party nodes”, written as C++ DLLs since Python would not be the best choice in terms of speed.

Since Blender developers would not be able to support thousands of additional nodes in every given context, it would be up to community developers to extend the compositor’s capabilities in additional ways.

That is unlikely to happen; supporting binary addons is listed in the Anti Features section of the development wiki, so I can’t see core devs getting on board with such a plan.

However, we could consider how Cycles, in terms of architecture, allows you to bring your own shaders if you want to write very specialized ones. This is what I have in mind for the Compositor as well.

Provided that the compositor sticks to a 100% shader-based plan, it would allow users to drop their own shader effects in place.

However, if there is a need for general computation (network, AI), there is going to be a need for some sort of programming language support.

Say, for example, that native DLLs are out of the question: would loading Python scripts as Compositor addons be a feasible alternative? In terms of licensing, not speed.