This is a duplicate of my post on Blender Archive - developer.blender.org, reposted here to poke the devs and to collect feedback.

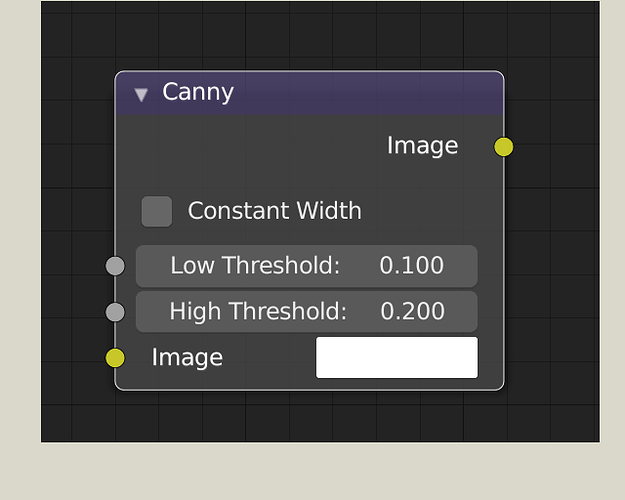

I made a compositor node for the Canny edge detector.

It is placed in the Filter menu.

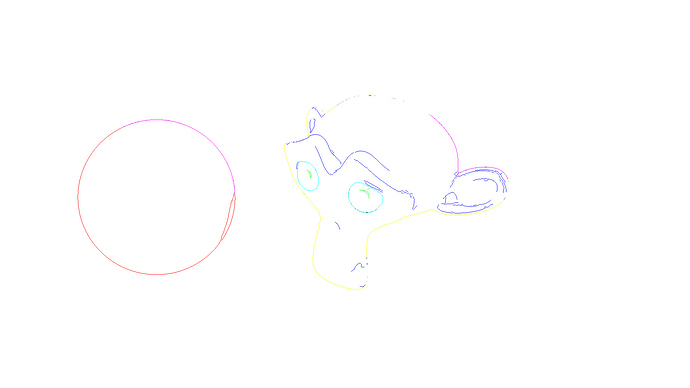

This can be useful to produce pictures such as the following.

The algorithm is basically the same as the one described at the following link.

https://docs.opencv.org/3.4/da/d22/tutorial_py_canny.html

However, there are three differences.

- No Noise Reduction

- High/Low Thresholds can be determined for each pixel

- Constant Width

1. No Noise Reduction

Compared to real images, CG images are largely free of noise (especially with EEVEE).

In addition, Blender already has plenty of noise reduction filters.

This is why I decided to omit the noise reduction filter.

2. High/Low Thresholds can be determined for each pixel

The high/low thresholds are 0.2/0.1 by default. This follows the default values of Python's skimage.

In the original algorithm, the thresholds are global values shared across the whole image. However, the algorithm does not strictly require a shared value, so I decided to allow socket inputs.

Since the thresholds determine the sensitivity, one can detect edges densely in some areas while leaving other areas sparse.

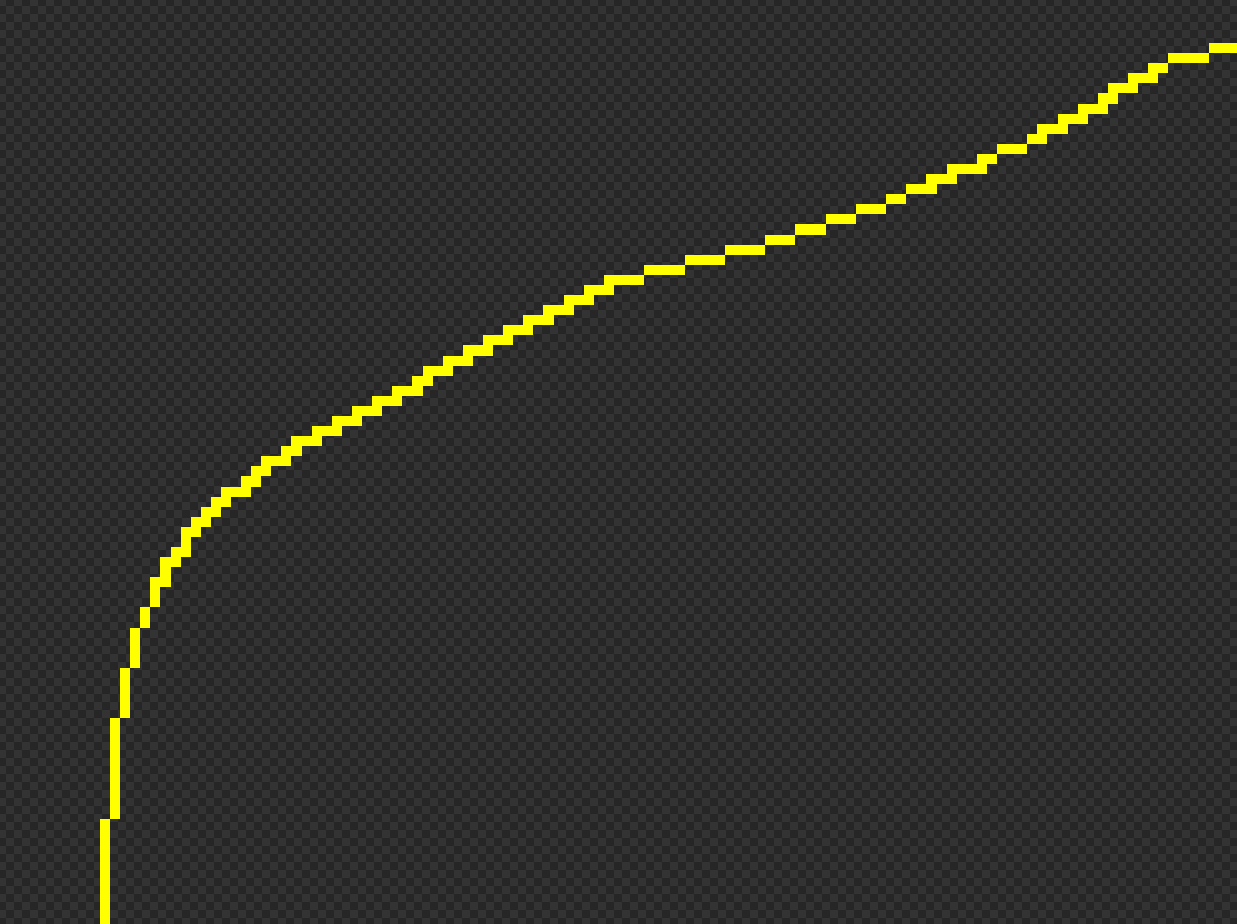
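To make the per-pixel idea concrete, here is a minimal pure-Python sketch of hysteresis thresholding where the low and high thresholds are 2D maps instead of single numbers. This is not the node's actual code; the function name `hysteresis` and the list-of-lists image layout are assumptions for illustration only.

```python
from collections import deque

def hysteresis(magnitude, low, high):
    """Double-threshold hysteresis with per-pixel thresholds (illustrative sketch).

    magnitude, low and high are equally sized 2D lists of floats.
    Returns a 2D list of booleans marking the pixels kept as edges.
    """
    rows, cols = len(magnitude), len(magnitude[0])
    edges = [[False] * cols for _ in range(rows)]
    queue = deque()

    # Strong pixels: gradient magnitude at or above the local high threshold.
    for y in range(rows):
        for x in range(cols):
            if magnitude[y][x] >= high[y][x]:
                edges[y][x] = True
                queue.append((y, x))

    # Grow from strong pixels into 8-connected neighbours that pass
    # their own local low threshold.
    while queue:
        y, x = queue.popleft()
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ny, nx = y + dy, x + dx
                if 0 <= ny < rows and 0 <= nx < cols and not edges[ny][nx]:
                    if magnitude[ny][nx] >= low[ny][nx]:
                        edges[ny][nx] = True
                        queue.append((ny, nx))
    return edges

# Same gradient pattern on both halves of a one-row image.
mag = [[0.25, 0.15, 0.05, 0.25, 0.15, 0.05]]

# Global thresholds (the 0.2/0.1 defaults) everywhere.
uniform = hysteresis(mag, [[0.1] * 6], [[0.2] * 6])

# Stricter thresholds on the right half only (hypothetical values).
varied = hysteresis(mag,
                    [[0.1, 0.1, 0.1, 0.3, 0.3, 0.3]],
                    [[0.2, 0.2, 0.2, 0.6, 0.6, 0.6]])
```

With the global thresholds both halves produce edges, while the stricter right-half thresholds suppress the edges there and leave the left half unchanged.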

3. Constant Width

Constant Width Off (original algorithm) vs. Constant Width On

When Constant Width is on, the gradient angles are rounded to 0 or 90 degrees (originally, to one of 0, 45, 90 or 135).

I personally prefer Constant Width on, but I am not quite sure what side effects it has. (I couldn't find much difference in the detected edges during my tests. However, there should be a good reason why the original algorithm works this way.)
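The angle rounding can be sketched roughly as follows. This is only an illustration of the idea, not the node's implementation; `quantize_direction` and its `constant_width` flag are hypothetical names, and the gradient components `gx`, `gy` are assumed to come from something like a Sobel filter.

```python
import math

def quantize_direction(gx, gy, constant_width=False):
    """Round a gradient direction to the nearest allowed angle, in degrees.

    Directions are treated modulo 180. The result is one of
    0, 45, 90, 135, or one of 0, 90 when constant_width is True.
    """
    angle = math.degrees(math.atan2(gy, gx)) % 180.0
    step = 90.0 if constant_width else 45.0
    # floor(x + 0.5) rounds to the nearest step; 180 wraps back to 0.
    return (math.floor(angle / step + 0.5) * step) % 180.0

# A gradient pointing at roughly 27 degrees:
original = quantize_direction(1.0, 0.5)                           # 4 directions
constant = quantize_direction(1.0, 0.5, constant_width=True)      # 2 directions
```

In a typical Canny implementation the rounded angle decides which two neighbours a pixel is compared against during non-maximum suppression, so with only 0 and 90 available the comparison would always be purely horizontal or vertical.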

There are several other changes in the actual calculation to speed it up, but the results should be the same.

A demo file is available at Blender Archive - developer.blender.org