What is Blendit (Blender + Git)?

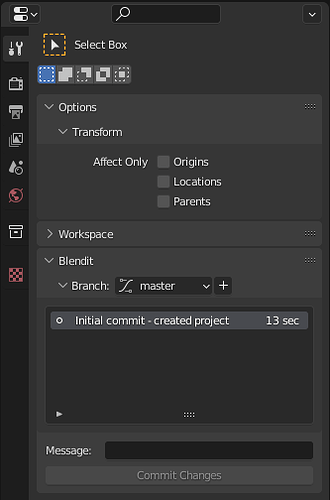

Blendit is an Application Template which brings Version Control to Blender.

What is Version Control?

Version Control helps you track different versions of your project. When working on a project over time, you may want to keep track of which changes were made, by whom, and when.

Version control has been standard practice in software development for years, where it is used to keep track of changes made to source code. However, when it comes to working with files other than text files, you are usually out of luck.

While Git and other Version Control Systems (VCS) can track .blend (binary) files, doing so does not make much sense, as these systems are designed for text files.

That said, according to @sybren on Blender Stack Exchange, the Blender Institute uses Subversion:

At the Blender Institute / Blender Animation Studio we use Subversion for our projects. It works fine for blend files, but you have to make sure they are not compressed. Compression can cause the entire file to be different when only a single byte changed, whereas in the uncompressed blend file only that one byte will differ. As a result, binary diffs will be much smaller, and your repository will be faster to work with.
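The effect described in the quote can be reproduced outside Blender. The following is a minimal sketch that uses Python's `zlib` as a stand-in for Blender's file compression (an assumption; Blender's actual compression format may differ) and random bytes as a stand-in for a .blend file. It changes a single byte and counts how many bytes differ before and after compression.

```python
import random
import zlib


def count_differing_bytes(a: bytes, b: bytes) -> int:
    """Count positions where a and b differ, plus any difference in length."""
    mismatches = sum(x != y for x, y in zip(a, b))
    return mismatches + abs(len(a) - len(b))


# Stand-in for an uncompressed file: 100 kB drawn from a small alphabet,
# so that it is compressible. Seeded for repeatability.
rng = random.Random(0)
original = bytes(rng.choice(b"abcdefgh") for _ in range(100_000))

# Change a single byte near the start of the "file".
modified = bytearray(original)
modified[100] ^= 0xFF
modified = bytes(modified)

raw_diff = count_differing_bytes(original, modified)
compressed_diff = count_differing_bytes(
    zlib.compress(original), zlib.compress(modified)
)

print("bytes differing, uncompressed:", raw_diff)
print("bytes differing, compressed:  ", compressed_diff)
```

The uncompressed copies differ in exactly one byte, while the compressed copies differ in more than one, because the change also affects the encoded stream and its checksum. A binary diff of the compressed files therefore has more to store.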

How is Blendit Different?

Instead of tracking the .blend (binary) file itself, Blendit tracks the changes you make to the Blender file in real time. It does so by keeping track of the Python commands, from the Blender API, used to make changes.

This way we only track a text (.py) file, which is how Git was intended to be used.
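To make the idea concrete, here is a minimal sketch of an append-only command log. It is not Blendit's actual implementation: the `CommandLog` class and the `project.py` file name are hypothetical, and the recorded commands are stored as plain strings, so the sketch runs without Blender.

```python
from pathlib import Path
import tempfile


class CommandLog:
    """Hypothetical append-only log of Python commands, one per line."""

    def __init__(self, path: Path) -> None:
        self.path = path
        self.path.touch()

    def record(self, command: str) -> None:
        # Append rather than rewrite, so each change adds one line to the diff.
        with self.path.open("a", encoding="utf-8") as f:
            f.write(command + "\n")

    def commands(self) -> list[str]:
        return self.path.read_text(encoding="utf-8").splitlines()


project_dir = Path(tempfile.mkdtemp())
log = CommandLog(project_dir / "project.py")
log.record("bpy.ops.mesh.primitive_cube_add(size=2)")
log.record("bpy.context.object.location = (1.0, 0.0, 0.0)")
print(log.commands())
```

Because every change appends a single line of text, a Git diff between two commits shows exactly which commands were added.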

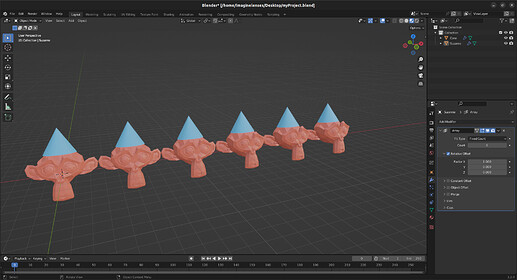

Each time you open a Blendit project, it regenerates the Blender file. This means you can delete the Blender file and still retain the project.
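Regeneration amounts to replaying the logged commands from an empty state. The sketch below illustrates this with a plain dictionary standing in for Blender's scene data. The `replay` function and the log contents are hypothetical; a real log would contain `bpy` calls and would be run inside Blender.

```python
def replay(script_source: str) -> dict:
    """Rebuild state from scratch by running every logged command in order."""
    scene = {}  # stand-in for Blender's scene data
    exec(compile(script_source, "<project log>", "exec"), {"scene": scene})
    return scene


# Hypothetical log contents; a real log would contain bpy calls instead.
script = """\
scene["Cube"] = {"location": (0.0, 0.0, 0.0)}
scene["Cube"]["location"] = (1.0, 0.0, 0.0)
scene["Light"] = {"energy": 1000}
"""

first_build = replay(script)
del first_build           # "delete the Blender file"
rebuilt = replay(script)  # regenerate it from the log alone
print(rebuilt)
```

Since the log is the only input, replaying it always produces the same result, which is why the generated file does not need to be kept under version control.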

In theory, the project should also take up less space than it would if the .blend file itself were tracked in a VCS.

With all that said, this is just a proof of concept and far from production-ready. Blender does not log all actions out of the box. There are ways to make Blender log all actions, but that would add huge overhead to the system. I get around this by subscribing to the required events using Blender’s Message Bus. However, the Message Bus is seemingly in its early stages and cannot subscribe to all types of events. See the release notes for details.
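For readers unfamiliar with the Message Bus, the following is a minimal sketch of a subscription using `bpy.msgbus.subscribe_rna`. It is not taken from Blendit's source: the `recorded` list and the function names are hypothetical, and the choice of the `location` property is only an example. The `bpy` import is guarded so that the callback can be exercised outside Blender.

```python
try:
    import bpy  # only importable inside Blender
except ImportError:
    bpy = None

recorded = []     # hypothetical in-memory log of detected changes
owner = object()  # any Python object can own a subscription


def on_location_changed(*args):
    # The callback receives only the `args` tuple given at subscription time,
    # not the new value, so the value would have to be read back separately.
    recorded.append("location changed")


def subscribe():
    """Subscribe to location changes on all objects (must run inside Blender)."""
    if bpy is None:
        raise RuntimeError("bpy is unavailable outside Blender")
    bpy.msgbus.subscribe_rna(
        key=(bpy.types.Object, "location"),
        owner=owner,
        args=(),
        notify=on_location_changed,
    )


def unsubscribe():
    """Remove every subscription registered under `owner`."""
    if bpy is not None:
        bpy.msgbus.clear_by_owner(owner)
```

One limitation worth knowing: Blender's API documentation notes that the Message Bus is notified of changes made through the Python API and through UI fields, but not of objects moved in the 3D Viewport or of changes made by the animation system. A logger built this way will miss those edits unless it captures them by other means.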

In Conclusion

If you find this interesting, check the website below and download Blendit. It is free!

Star Blendit on GitHub! This is the easiest way to show support for the project. Do report issues or provide suggestions. Contributions are welcome.

Let me know what you think. If you have questions, leave them below.