The standard doesn’t mean that every application uses cm. It means that the modeling software and engines with the largest market share (70% or more) use cm. The market share of just two modeling packages is much bigger than that of all the other modeling software worldwide, and of course I mean software used by big studios. So the more Blender behaves like them, the better its chances of becoming one of the standard modeling tools used by big studios worldwide.

I seriously do not understand the issue. The scale setting in any 3D app, whether a modeling and animation package or a game engine, is merely an internal number. An arbitrary number. Real-world measurements are only there to give the user a relative sense of scale, and at import and export they amount to a multiplier factor, usually a power of ten.

The much bigger problem in Blender is that when the world scale is set to millimeters and you actually work in a small millimeter range, a lot of things can break modeling-wise: close-range vertex merging and modifier settings (for example clipping and merging along the center axis), and mesh symmetry in sculpt mode (I still have no idea whether it is even possible to lower the merge threshold far enough to symmetrize a model in the ~10mm range). Not to mention physics such as cloth, or the fact that the world grid doesn’t automatically scale below 1m; it has to be set to a 0.01 factor to give a usable sense of scale in the 1-20cm range. And those are only the most obvious examples I stumble upon in my daily use.

But those are problems internal to Blender. They have nothing to do with import or export scale, and they are not incompatibility issues. The import and export factors are merely a multiplier and, where needed, a world-axis adjustment for different coordinate systems.
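To make the import/export point concrete, here is a minimal sketch, assuming a Z-up source in meters (Blender’s convention) and a right-handed, Y-up target that works in centimeters. `export_point` and its parameter are hypothetical names for illustration, not any real exporter’s API. A left-handed target would additionally need one axis mirrored.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]

def export_point(p: Vec3, meters_per_target_unit: float = 0.01) -> Vec3:
    """Convert a Z-up point in meters to a Y-up point in target units.

    The unit change is a single multiplication; the axis change is a
    -90 degree rotation about X, which maps (x, y, z) -> (x, z, -y).
    """
    scale = 1.0 / meters_per_target_unit  # 100 when the target unit is cm
    x, y, z = p
    return (x * scale, z * scale, -y * scale)

# Blender's "up" direction (0, 0, 1) ends up along +Y in the target.
print(export_point((0.0, 0.0, 1.0)))
print(export_point((1.0, 2.0, 3.0)))
```

Nothing about the geometry itself changes; only the numbers are multiplied and the axes relabeled.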

These are not compatibility issues; they are most likely floating point precision problems internal to Blender. Or am I missing something here?

The reason modeling operations can break at a really small (or, less often, really large) scale is the problem of epsilons. There are lots of places in modeling code that need to answer questions like: are these two vertices in the same place? Is this angle close to 0 or 180 degrees? Does this ray intersect this polygon? Because of floating point, these are rarely exact comparisons; instead the question is whether they are true within some small margin of error (the epsilon).
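A small illustration of what “within some small margin of error” means in practice. This is a sketch in Python, not Blender’s actual C/C++ code; the `EPS` value and the function names are hypothetical.

```python
import math

EPS = 1e-6  # hypothetical absolute tolerance, in scene units

def same_position(a, b, eps=EPS):
    """Are two vertices 'in the same place' within eps?"""
    return math.dist(a, b) <= eps

def nearly_straight(angle_rad, eps=EPS):
    """Is an angle within eps of 0 or of 180 degrees (pi radians)?"""
    return abs(angle_rad) <= eps or abs(angle_rad - math.pi) <= eps

# Exact comparison fails because of rounding; the tolerance-based one succeeds.
print(0.1 + 0.2 == 0.3)                                   # False
print(same_position((0.1 + 0.2, 0.0, 0.0), (0.3, 0.0, 0.0)))  # True
```

Whether such a check gives the right answer depends entirely on how the chosen epsilon relates to the size of the model.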

The floating point data type used in Blender can only distinguish values that differ by at least about 1 part in 10 million. So if a scene has dimensions and positions of both 100 meters and 10 micrometers, Blender has little hope of making those comparisons properly. Now, if the largest dimensions and positions are all on the scale of 10mm, it would be possible to make good decisions even about values that differ by 1 nanometer. However, two things make this work less well than that ideal statement might suggest:

- In practice, the epsilons may have to be as much as 1000 times bigger than the smallest difference floating point can distinguish. For instance, some bevel epsilons are that big to prevent strange “spikes”.

- Ideally, all epsilon decisions should be relative to the largest position or dimension in the scene. Doing that means the code needs to go over the whole mesh to calculate it, and because that is expensive and tedious, the step is sometimes omitted and an absolute epsilon is used instead. Since testing is typically done on models with a scale of about 1 Blender unit, we end up with code that works well at that scale but may (and likely will) fail for scenes much larger or much smaller than 1.
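The numbers above can be checked with a short sketch. It assumes coordinates are stored as 32-bit floats (which is what “about 1 part in 10 million” corresponds to) and emulates them in Python by round-tripping through `struct`. The second half contrasts a fixed (absolute) epsilon with one scaled by the model’s extent; `ABS_EPS`, `REL_EPS` and the helper names are hypothetical values chosen for illustration, not Blender’s.

```python
import math
import struct

def to_f32(x: float) -> float:
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def ulp_f32(x: float) -> float:
    """Gap between |x| (as a float32) and the next larger float32."""
    bits = struct.unpack("<I", struct.pack("<f", abs(x)))[0]
    next_up = struct.unpack("<f", struct.pack("<I", bits + 1))[0]
    return next_up - to_f32(abs(x))

# Resolution near 100 m versus near 10 mm (coordinates in meters).
print(ulp_f32(100.0))  # 2**-17, about 7.6 micrometers
print(ulp_f32(0.01))   # 2**-30, about 0.93 nanometers
print(to_f32(100.0 + 1e-6) == to_f32(100.0))  # True: a 1 micrometer offset is lost

ABS_EPS = 1e-4  # hypothetical fixed threshold, tuned on models about 1 unit in size
REL_EPS = 1e-5  # hypothetical threshold as a fraction of the model's extent

def extent(points):
    """Largest absolute coordinate: needs a full pass over every vertex."""
    return max(abs(c) for p in points for c in p)

def same_point_abs(a, b):
    return math.dist(a, b) <= ABS_EPS

def same_point_rel(a, b, size):
    return math.dist(a, b) <= REL_EPS * size

# A 2 mm part modeled in meters, with two vertices 50 micrometers apart.
pts = [(0.0, 0.0, 0.0), (0.00005, 0.0, 0.0), (0.002, 0.001, 0.0)]
print(same_point_abs(pts[0], pts[1]))               # True: wrongly treated as one vertex
print(same_point_rel(pts[0], pts[1], extent(pts)))  # False: threshold shrank with the model
```

The relative version gets the small model right, but only at the cost of the `extent` pass over all vertices.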

As a coder who is sometimes guilty of the second point, I apologize. Sometimes these things are easy to fix, but a more comprehensive fix, one that would not make me feel bad about performance, would be for Blender to incrementally keep track of the scale of the model at all times. That would mean going into hundreds of places in Blender, and would make modeling code more annoying to write and maintain.
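A rough sketch of why incremental tracking is invasive. This is not something Blender does; `TrackedExtent` and its methods are hypothetical. Growing the bound is cheap, but every operator that moves, adds, or deletes vertices would have to call the hook, and shrinking the bound still needs a full rescan.

```python
class TrackedExtent:
    """Hypothetical incremental bound on the largest |coordinate| of a mesh."""

    def __init__(self):
        self.bound = 0.0

    def on_vertex_moved(self, co):
        # Growing the bound is cheap: compare against the new position only.
        self.bound = max(self.bound, max(abs(c) for c in co))

    def recompute(self, all_coords):
        # Shrinking is not: after vertices move inward (or are deleted),
        # only a full rescan reveals the new, smaller extent.
        self.bound = max((abs(c) for co in all_coords for c in co), default=0.0)

t = TrackedExtent()
t.on_vertex_moved((1.0, -2.0, 3.0))
t.on_vertex_moved((0.5, 0.0, 0.0))  # bound stays 3.0, even if the far vertex is gone
t.recompute([(0.5, 0.0, 0.0)])      # explicit rescan brings it down to 0.5
print(t.bound)
```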

Thank you for weighing in, @Howard_Trickey.

Keeping track of the de facto scale of the scene, while a nice idea, has heavy drawbacks, as you’ve pointed out. Furthermore, a dynamic epsilon might confuse users, with models inexplicably breaking if a cube is accidentally translated really far away.
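The “cube moved far away” drawback is easy to reproduce in a sketch, assuming a hypothetical merge distance defined as a fixed fraction (`REL_EPS`) of the scene’s largest coordinate. Moving an unrelated object changes the threshold for every vertex in the scene.

```python
import math

REL_EPS = 1e-5  # hypothetical: merge distance as a fraction of the scene extent

def merge_distance(coords):
    size = max(abs(c) for co in coords for c in co)
    return REL_EPS * size

detail = [(0.0, 0.0, 0.0), (0.0005, 0.0, 0.0)]  # two vertices 0.5 mm apart
scene = detail + [(1.0, 1.0, 1.0)]              # a 1 m cube corner
print(math.dist(*detail) <= merge_distance(scene))      # False: kept apart

scene_far = detail + [(10000.0, 1.0, 1.0)]      # the cube accidentally moved 10 km away
print(math.dist(*detail) <= merge_distance(scene_far))  # True: now they would merge
```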

But perhaps a nice middle ground would be to trust the user’s choice of units to give you an approximate sense of the scene’s scale? If the units are set to mm, scale the epsilons down by a factor of 1000, etc., and take the “Unit Scale” into account as well (if it isn’t already). That way Blender’s epsilons can remain fairly unit-agnostic, with no additional computational load.
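One way this proposal could be expressed, as a sketch only: derive the epsilon in Blender units (BU) from the chosen length unit and the Unit Scale, assuming the Unit Scale means “1 BU = `unit_scale` meters”. `BASE_EPS`, `METERS_PER_UNIT` and `scaled_epsilon` are hypothetical names and values.

```python
# Hypothetical: derive modeling epsilons from the scene's unit settings.
METERS_PER_UNIT = {"km": 1000.0, "m": 1.0, "cm": 0.01, "mm": 0.001, "um": 1e-6}

BASE_EPS = 1e-5  # hypothetical epsilon tuned for models measured in meters

def scaled_epsilon(length_unit: str, unit_scale: float = 1.0) -> float:
    """Epsilon in Blender units, assuming 1 BU = unit_scale meters.

    If the user works in mm while 1 BU is still 1 m, typical coordinates are
    1000x smaller in BU, so the epsilon shrinks by 1000 as well. If the Unit
    Scale already makes 1 BU = 1 mm, coordinates are back near 1 BU and the
    epsilon stays at its base value.
    """
    return BASE_EPS * METERS_PER_UNIT[length_unit] / unit_scale

print(scaled_epsilon("m"))          # base value
print(scaled_epsilon("mm"))         # 1000x smaller
print(scaled_epsilon("mm", 0.001))  # back to the base value
```

The lookup costs nothing at runtime, which is the appeal of the idea; the hard part, as noted in the reply, is finding every place in the code that would need to use it.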

What do you think?

This is a good idea. Going from that idea to actually finding all the places in the code that use epsilons, applying it there, and thoroughly testing it is a lot of work. It doesn’t seem to me to be the highest-priority thing to work on, sorry.

It is a good idea to follow the CAD software convention: each document or project has its own unit setting along with an epsilon or precision setting (usually 0.001).

Definitely consider adding this to the roadmap. Thanks for your contribution, Howard!

For some time I actually thought that this was how units already worked inside Blender when you adjust the Unit Scale. At some point it could be a great idea to implement something like this.

I would also like the software to show the current grid size in real units instead of odd scalar factors or, in the perspective view, not at all.

For example: since I work at mm scale a lot, I always scale my world grid down to 0.01. I know one grid step is then 1cm with a 1mm subdivision, but it’s still weird not seeing that anywhere in the viewport.

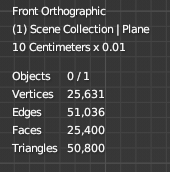

And in orthographic view it’s shown like this:

It’s beyond what was originally asked, but I will always find this weird, and I hope someone who can code will be annoyed enough, like me, to fix it at some point.

It’s not a big thing - it’s just … really strange and irritating.
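Showing the grid step in real units is mostly arithmetic. A hypothetical sketch (not a Blender feature or API), assuming the base grid step is 1 BU, 1 BU = `unit_scale` meters, and each step has 10 subdivisions:

```python
# Hypothetical helper: label a grid step in real units instead of a bare scale factor.
def grid_label(grid_scale: float, unit_scale: float = 1.0, subdivisions: int = 10) -> str:
    """Describe one grid step and one subdivision as real lengths."""
    step_m = grid_scale * unit_scale
    sub_m = step_m / subdivisions

    def fmt(meters: float) -> str:
        for name, size in (("km", 1000.0), ("m", 1.0), ("cm", 0.01), ("mm", 0.001)):
            if meters >= size * 0.999:  # tolerate float error such as 0.0099999...
                return f"{meters / size:g} {name}"
        return f"{meters / 1e-6:g} um"

    return f"grid {fmt(step_m)} / subdivision {fmt(sub_m)}"

print(grid_label(0.01))  # the 0.01 grid factor from the example above
print(grid_label(1.0))
```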

Yes, floating point comparisons are rarely exact, and it is hard to control accuracy without a standard rule. The most dangerous thing is developers trimming too much of the original data or making comparisons with a big margin of error. Although the floating point data type used in Blender can distinguish values that differ by about 1 part in 10 million, a lot of developers have a habit of keeping only two or three decimal places. That is very dangerous when the meter is the basic unit (unless the number of decimal places developers keep is standardized): 1cm (0.01m) already uses two decimal places.

In my recent project, there is a 1.2-meter-tall humanoid character with 1.5-centimeter hair on its head and body. That is a very common scenario (not an extreme condition), right? I want to simulate the hair animation (enable hair dynamics). No matter how I adjust the parameters, the hair either disappears or explodes (Blender 3.3.0). When I search YouTube for hair simulation tutorials, everything I find is about long hair. When I scale everything up 10x, it all works easily. I strongly suspect that developers are trimming too much or making comparisons with a big margin of error.

I wonder how much (theoretically) adjusting the epsilon on a per-scene basis could interfere with mesh linking and scene management, though.

This is a good point too.

You could also go the other way: keep all epsilons the same and make the other scale-dependent features flexible. Those are mainly physics scales and things like absorption coefficients.

It would be very nice if you could just say “1 Blender unit = 1 cm” and all the simulation, volumetrics, exporters and other scale-dependent things would work at that scale. That way you could keep the grids and epsilons as they are.

I think parts of Blender already work this way, but I never really looked into it.
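What “working at that scale” would mean for two example quantities, as a sketch: the quantity is converted into Blender units (BU) once, and the solver then uses the converted value. The function names are hypothetical; this is not how Blender’s solvers are currently parameterized.

```python
# Hypothetical: convert physical quantities into Blender units (BU) for a given unit scale.
G_M_PER_S2 = 9.81  # approximate standard gravity, m/s^2

def gravity_in_bu(meters_per_bu: float) -> float:
    """An acceleration in m/s^2 becomes (m/s^2) / (m per BU) = BU/s^2."""
    return G_M_PER_S2 / meters_per_bu

def absorption_in_bu(coeff_per_m: float, meters_per_bu: float) -> float:
    """A coefficient per meter (1/m) becomes 1/BU by multiplying by meters per BU."""
    return coeff_per_m * meters_per_bu

# With 1 BU = 1 cm: gravity is about 981 BU/s^2, and an absorption of 2 per meter
# becomes 0.02 per BU, so 100 BU (= 1 m) of material still attenuates by exp(-2).
print(gravity_in_bu(0.01))
print(absorption_in_bu(2.0, 0.01))
```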

Yes, that could work in this case, but keep in mind it’s not only physics. Physics is part of it, but the problem is absolutely not limited to it. Merging distances for modeling or retopo at small scales are also a problem, as mentioned above. At smaller scales, auto-merge, symmetry clipping, or other modeling tasks with a small value can go wrong just as well. It just shows up more slowly and can be caught sooner than with physics, but it’s just as problematic during work.

And yes, like with physics simulations, in some cases you can simply scale up all meshes for the work at hand and then scale them down again. But it’s (a) a hack and (b) still problematic when changes have to be made or it’s more than just a few objects.

> Merging distances for modeling or retopo in small scales is also a problem as mentioned above.

Why would that be a problem? If you tell Blender “hey, 1 b.u. equals 1 mm”, you’ll have no problem modeling things on the millimeter scale. All your values get multiplied by 1000 compared to working in meters. Auto-merge will still work at (for example) 0.001 b.u., but that now represents 1/1000th of a mm.
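The arithmetic behind this is a single multiplication: the threshold stays fixed in Blender units, and its real-world size depends on what one unit represents. A tiny sketch; the 0.001 b.u. value is the example from the post, and `threshold_in_meters` is a hypothetical name.

```python
def threshold_in_meters(threshold_bu: float, meters_per_bu: float) -> float:
    """A distance given in Blender units, expressed in meters."""
    return threshold_bu * meters_per_bu

# The same 0.001 b.u. merge distance under different unit scales:
for label, m_per_bu in (("1 b.u. = 1 m", 1.0), ("1 b.u. = 1 mm", 0.001), ("1 b.u. = 1 km", 1000.0)):
    print(label, "->", threshold_in_meters(0.001, m_per_bu), "m")
```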

The whole point is that you prevent the values from becoming too small for a float to handle, while still exporting stuff to millimeters (or centimeters or whatever) and handling the physics correctly.

That would not be necessary in the system I describe, because all your values are in ranges that work well in Blender with the current epsilons. This is also how Blender is supposed to work (and more or less does) currently, were it not for some parts of Blender ignoring the unit setting and doing calculations that assume 1 b.u. == 1m.

Ah yes. Sorry, I misread your post. You are proposing that the world scale adjusts not only the epsilon or the physics, but basically all other scale-relevant values in a blend file, right? Like physics, grid scale, epsilons, exporters and every other place in a file. Yes, if that were possible without causing problems in referenced data, it would be fantastic.

When I first learned about world scale, I actually thought for the longest time that Blender already worked that way.

Not really, no. I propose it only changes the scale-dependent stuff like physics and SSS. Keep the grids and epsilons the same (in Blender units, that is, so obviously different in real-world units).

So if you set the world scale so that 1 BU = 1 km, the grid will still be 1 BU wide, and the epsilon will still be (for example) 0.001 BU. But those now represent 1 km and 1 m instead of 1 m and 1 mm. As long as physics and SSS do their calculations with 1 BU meaning 1 km, everything should work as expected, no?