I don’t know if you have noticed, but Filmic is currently very friendly for first-timers who have just downloaded Blender and want to import some models, put some lamps around, and get something that looks nice out of the box. That default washed-out color looks nice the majority of the time, and if they later want higher contrast they can just use the “Look” setting to adjust it.

Now that I have installed ACES, the colors on the lamps behave strangely. When I adjust the brightness, the hue somehow changes, and it seems to make the colors more saturated by default. With images you can adjust the color space to counteract that, but with colors set directly on things like lamps or procedural materials, the result is very strange. The only way to fix it is to manually turn down the saturation of each color, which makes it unfair to compare the result with a Filmic version of the same scene, because the HSV values of the colors are different.

Now look at Filmic, which works completely fine by default, with a wide enough dynamic range, and with the “Look” mode to adjust the contrast, it is just way easier and way more intuitive to use Filmic.

It seems a lot of people are reading my comment the wrong way.

Yes, Blender has OCIO/Rec709/Filmic as a basis, and out of the box it works fine. Although there are a lot of people who think Filmic looks a bit off and too desaturated. But that’s a different discussion.

What I meant is that ACES has become a way to deal with any (video) footage.

From digital archiving (DP0 with high DR) to anything display related (DP1), ACES has become the glue in between so to speak. Even with some issues as discussed above.

If Blender, for whatever reason doesn’t fully support this internally, it could slow down adoption in certain fields.

So from your sRGB monitor to any modern TV to digital movie projectors, ACES has something to do with it in production. Even CG/VFX work has its own colorspace, ACEScg.

Also ACEScg has a much larger ‘range’, so colors have more ‘room’ to work with, hence will look more saturated.

For your setup in Blender, be sure to set all inputs & output to monitor correctly, or you will get odd results.

Again, this website is very good in explaining a lot of what’s going on with/in ACES.

It uses a wider gamut working space, and that is exactly why it forms absolutely unacceptable imagery by default; it is missing crucial gamut mapping.

The bottom line is that all displays are limited. They have three specific lights they can project out of, and a range of emissions of those lights. ACES simply does a brute force 3x3 and a transfer function, and all values are clipped to the display’s gamut.
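To make the "3x3, transfer function, clip" description concrete, here is a minimal Python sketch. It is a deliberate simplification and not the actual ACES output transform, which also includes a tone scale and other steps. The matrix is the commonly quoted AP1 (ACEScg) to linear BT.709 matrix rounded to five decimals, and names such as `naive_display_transform` are made up for illustration.

```python
# Commonly quoted AP1 (ACEScg) -> linear BT.709/sRGB matrix, rounded.
# Treat the exact values as an assumption for illustration only.
AP1_TO_BT709 = (
    (1.70505, -0.62179, -0.08326),
    (-0.13026, 1.14080, -0.01055),
    (-0.02400, -0.12897, 1.15297),
)


def mat3_mul(m, rgb):
    """Multiply a 3x3 matrix by an RGB triplet."""
    return tuple(sum(m[r][c] * rgb[c] for c in range(3)) for r in range(3))


def clip01(rgb):
    """Hard clip every channel to the display range [0, 1]."""
    return tuple(min(1.0, max(0.0, v)) for v in rgb)


def srgb_encode(v):
    """sRGB piecewise transfer function for a display-linear value in [0, 1]."""
    return 12.92 * v if v <= 0.0031308 else 1.055 * v ** (1 / 2.4) - 0.055


def naive_display_transform(ap1_rgb):
    """3x3 to display primaries, clip, then encode. No gamut mapping at all."""
    linear_709 = mat3_mul(AP1_TO_BT709, ap1_rgb)
    encoded = tuple(srgb_encode(v) for v in clip01(linear_709))
    return linear_709, encoded


# A fully saturated AP1 green lies outside the BT.709 gamut.
linear, encoded = naive_display_transform((0.0, 1.0, 0.0))
print("linear BT.709 before clip:", linear)   # red and blue are negative
print("display code values:", encoded)        # collapses to the display green
```

The negative red and blue values are the out-of-gamut part of the colour. The clip simply throws them away, so every AP1 mixture in that region lands on the same display value.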

This means flesh tones turn rat piss yellow, skies skew to cyan, and the typical digital RGB gaudy looking broken / skewed colours abound.

These sorts of things are not challenging to identify. Try them. Try using saturated AP1 primaries and watch the wretched imagery unfold.

Way back when, we tried wider gamut working spaces such as BT.2020 for Filmic. It sucked without comprehensive gamut mapping. So much so that I was flatly against releasing anything that generates ass looking imagery by default.

Above and beyond all of the glaring downsides of ACES and the poorly implemented image formation, Blender is not yet ready to properly implement wider gamut working spaces until it is fully managed.

So even if you happen to suffer vision deficiencies and desperately want a digital RGB hideous Instagram look via ACES, Blender isn’t capable yet. The weakness in the UI is prohibitive, not that any of the folks interested likely care because it’s pretty clear they aren’t looking at the imagery at all.

Newsflash; your display has limitations. It’s not like you can use some magical beans and make the imagery display more saturated than what it is capable of. What you are likely seeing is skewed digital RGB broken ass colour rendering.

I’m trying to grok the gist of your post here. I’ve read through your CM guide as closely as I think I could (recently even) and those limitation links above.

Is the summary of your post basically the following?

- A wider working space (the scene referred data) doesn’t help because there doesn’t exist[1] a gamut mapping capable of making the transition to the other spaces correctly for display.

- The only correct situation today, given all displays have limitations, is to ensure that both the scene referred and display referred primaries are the same. i.e. Use P3 primaries for the scene if going to be displaying on P3 displays.

If that’s the case, what can you do if your content does need to be displayed on different displays? Pick the scene referred space in the middle somehow? Pick the widest one for the scene and just deal with it? Render out specifically for each display?

Obviously there’s thousands of hours of good vfx out there already. A decent subset of those hours may even be using ACES. What are those folks doing differently?

[1] Is it that they don’t exist today or that it’s not possible to implement within the confines of the ACES system even if they existed?

These are terrific questions and I hope more image makers ask more like them.

There are some very high level image formation questions at work here, so apologies in advance if this is a text bomb.

If we think about a camera capture or a render, we have the beginning of an image, but we should be careful not to consider it the fully formed image. That is, it’s closer to light data at this point. Any output essentially becomes the medium, and we have to pay close attention to it. To quote Ansel Adams…

The negative is the equivalent of the composer’s score, and the print the performance.

If we think about some extreme such as perhaps some sort of on / off display, we have a transformation that requires some thought to form the image.

Think about a series of lasers displaying colours on a wall; they are the most saturated and intense versions of pure spectra. Now imagine trying to paint a watercolour image of those lasers. Given the limitations of the output medium watercolours and paper, we need to figure out how to form the image that is completely inexpressible on a 1:1 basis.

This is a similar problem to the gamut mapping conundrum. A display may have a more limited range of intensities (SDR vs EDR for example) and a different or more limited range of saturation and hues that can be expressed. This is a nontrivial problem to solve.

There are a plethora of approaches to gamut mapping out there. But even when we have the same primaries, we still have a huge problem with how the limited dynamic range of a display cannot fully express the ratios in the colour rendering volume of the rendering space. Filmic is one half assed attempt to resolve this, and it includes a gamut compression of the higher values down into the smaller gamut of the display.

This is what many people completely erroneously believe a “tone map” does, but it simply is not the case. If we think about a super crazy bright BT.709 pure red primary, which is just another colour, how does it get expressed with a tone map? Answer? It just gets mapped “down” to a fully emissive red diode. Not quite what the cultural vernacular expects such as with imagery like…

The key question an image maker needs to ask herself is whether the red ‘getting stuck’ is desirable. If not, what does she want to happen with respect to the piece she is crafting?
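The ‘getting stuck’ behaviour is easy to reproduce. The sketch below is only an illustration: `curve` is a generic x / (1 + x) tone curve chosen for simplicity, not Filmic's or ACES's actual curve, and the function names are hypothetical.

```python
def curve(x):
    """Simple illustrative tone curve mapping [0, inf) into [0, 1)."""
    return x / (1.0 + x)


def per_channel_tone_map(rgb):
    """Apply the tone curve to each channel independently."""
    return tuple(curve(v) for v in rgb)


results = []
for stops in (0, 4, 8, 12):
    # A pure BT.709 red, made brighter by the given number of stops.
    scene_red = (2.0 ** stops, 0.0, 0.0)
    display = per_channel_tone_map(scene_red)
    results.append(display)
    print(f"+{stops:2d} stops -> " + ", ".join(f"{v:.4f}" for v in display))
```

However bright the scene value gets, green and blue stay at zero, so the output only ever approaches a fully emissive red. Nothing in the curve moves the colour toward white.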

These are the sorts of lower level image formation questions that few imagers are exposed to, but should be asking. They form the fundamentals of image formation that previous generations were not entirely exposed to in the manner they are today.

Ask yourself what your expectation is first. If your display is sRGB and you rendered using lasers like BT.2020, do you expect the image to be fully formed and well crafted or have disruptions of saturation and hue, as well as quantisation and posterization problems? Would you expect an sRGB image to be a fully formed image in its own right?

Using decent tooling, it is possible to gamut map down, it’s just that if you crawl the millions of hours of YouTube videos and the web at large, you aren’t likely to find a crap ton of information on gamut mapping, let alone things that present it in a manner that can excite image makers. It should however.

Usually it’s the latter, again with gamut considerations taken into account. There’s no magic bullet, and frankly, this still feels like a horizon that is a rather new frontier in many ways. It’s certainly not a hot topic on image maker boards. The Spectral Cycles thread here has quite a few great renders that help to nail the problems down, but solutions are darn tricky at best, and spectral makes it even more complex.

Remember that at studios they have the upside of having a colourist. That is, not just one but often a colorist and an assistant, whose sole job is to hammer the colours into shape based on the guidance of the director of photography.

One person armies of the sort that linger around these parts? Even though they run the gamut (pun intended) of extremely capable image makers, they sadly don’t have a colorist at their disposal to mop up the pieces. Let alone the duress of perhaps having to hammer out work on a deadline with only one person doing all of the grunting.

It just isn’t there. At all.

In Ed Giorgianni’s and Thomas Madden’s Digital Color Management: Encoding Solutions book that was largely the precursor to the sort of pipeline outlined via something like ACES, there are many different facets tackled, including a reoccurring theme of gamut mapping. Sadly, none of it ended up in ACES, for whatever reason.

If you want to see a deeper dive into gamut mapping aspects such as described here, check out Jed Smith’s post over on ACESCentral. It has plenty of amazing videos with it too! Even some uh… interesting… vantages.

The bottom line is that all displays are limited…

Newsflash; your display has limitations.

I know, that’s one of the reasons why ACES has all those output transforms.

But thanks for the info, and I’ve done my two cents on this one.

You still aren’t listening however. They are brute force 3x3 and transfer function, with no gamut mapping. It is plausible to refer to a 3x3 as perhaps the lowest form of gamut mapping, and that is what is being used.

There is no accounting for volume, nor area, and everything just clips.

It’s basically sRGB Mark II, with some additional sweeteners.

I told my friends about what you said regarding ACES, but they told me those problems are because we downloaded the official ACES config from Github, which is not optimized for Blender.

They gave me a version that was optimized specifically for Blender, and it seems to be much better than the Github one. What do you think about this?

This is the blender-optimized version of ACES colormanagement files:

https://drive.google.com/file/d/1dtE1jlFrCsn6a6EcDnXYmswmudr4mnaN/view?usp=sharing

Your friend needs to start using their eyes.

Feel free to crawl the ACES Central posts and see the discussions around gamut volume and skew.

I am curious as to what the difference is, but your link requires users to request access.

Oh my bad, is it fixed now?

It is, thanks! I’ll take a look in a bit.

I do mean it when I say “Use your eyes”. It’s not a brush off.

The problems associated are rather profound, and sadly the idea of a gamut volume is extremely elusive for some folks who may not have been exposed to such things.

Thankfully there are quite a few examples out there as to where our assumptions around imagery go sideways. The solutions are nuanced and not simple!

In the end, I’m fine with any system that enables image makers to tune an image to the limits that the particular medium provides. I firmly believe that we haven’t quite solved this issue with BT.709 based gamuts. Wider gamuts? Much more of a problem.

It is a very fascinating dive, with issues that even a casual layperson can see. Well worth an investment of one’s time.

I’ve been trying to keep up with the threads on that forum since my last reply. I’ve come away with more understanding of the problem now that I see images and not arcane words, but I’ve also come away with even more despair, either from more missing knowledge or from the realization that this isn’t even close to being a solved problem. If y’all experts can’t agree on things (whether to want/don’t want hue skew even!), or figure out ways to address it, or speak in common terms so that everyone is on the same page - what chance do I have?

Anxiously awaiting items 26 through 1000 on your hg2dc blog as I may be more confused now than before I read the blog and that forum :-/ So many questions…

I’m far from an “expert” here, so read the following with a boatload of salt!

What I will say is that the historical “convention” of per channel is a mechanism that does not work as many folks believe. In doing so, the mechanism has two side effects that might seem desirable at first blush, but under scrutiny, fall apart.

First, it imbues all work with what can be considered a “Digital RGB” look. Feel free to explore the examples. Yellow skin, cyan skies, etc.

Second, it distorts the colour mixtures. In some cases, this means that values collapse together, and in that way, it is sort of a hackish “compression”.

However, in doing so, both effects ignore the actual mixtures, and obfuscate the idea that the working space values mean something. Worse, that Digital RGB effect actually makes it nearly impossible to mix some colours at the display.

A chromaticity-linear approach to gamut compression, on the other hand, means that the block of information chosen in the radiometric-open domain, makes its way out to the display! Even though a mixture might appear lighter in the gamut compressed output due to a “proper” gamut compression, it remains as compressed data!

Once compressed to a “lower frequency” in the display linear output range, an image maker is then free to selectively re-expand the values as she wishes.
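The difference between the per channel convention and an approach that keeps the mixture intact can be sketched in a few lines of Python. This is an illustration under assumptions, not the specific gamut compression discussed above: `curve` is a generic x / (1 + x) curve, and `ratio_preserving` is a stand-in that applies the curve to the largest channel and scales the whole triplet by the same factor, which keeps the channel ratios (and so the chromaticity) fixed.

```python
def curve(x):
    """Simple illustrative tone curve mapping [0, inf) into [0, 1)."""
    return x / (1.0 + x)


def per_channel(rgb):
    """Historical convention: run each channel through the curve on its own."""
    return tuple(curve(v) for v in rgb)


def ratio_preserving(rgb):
    """Curve the peak channel, then scale all channels by the same factor."""
    peak = max(rgb)
    if peak <= 0.0:
        return (0.0, 0.0, 0.0)
    scale = curve(peak) / peak
    return tuple(v * scale for v in rgb)


def green_to_red(rgb):
    """Crude proxy for the mixture: ratio of green to red."""
    return rgb[1] / rgb[0]


base = (1.0, 0.5, 0.25)  # an orange-ish scene mixture, green/red = 0.5
rows = []
for stops in (0, 2, 4, 6):
    scene = tuple(v * 2.0 ** stops for v in base)
    pc = green_to_red(per_channel(scene))
    rp = green_to_red(ratio_preserving(scene))
    rows.append((stops, pc, rp))
    print(f"+{stops} stops: per channel G/R = {pc:.3f}, ratio preserving G/R = {rp:.3f}")
```

With the per channel curve the green-to-red ratio drifts toward 1 as exposure rises, so the orange slides toward yellow and then white. With the ratio-preserving variant the mixture chosen in the working space is the mixture that reaches the display, only at a lower intensity.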

In the end, there is much clutching of pearls, but the imagery somewhat speaks for itself. Hence the “use your own eyes” and process the imagery!

Hope this helps to shine a bit of a light on a rather important shift in thinking.

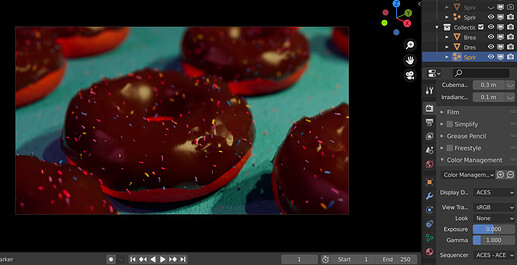

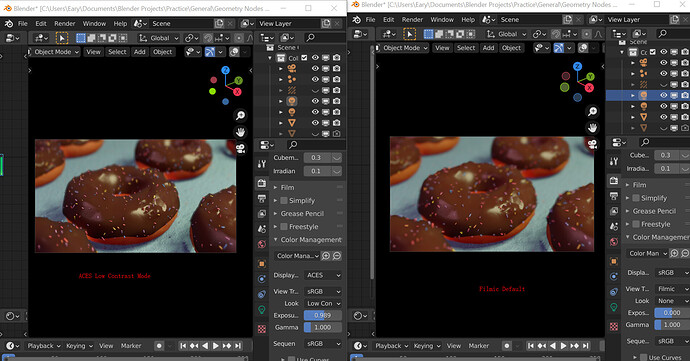

Well, for me this version does seem to solve some of my problems, including what you said about the clipped, oversaturated color. This is what I got with the original version from GitHub, this disgusting oversaturated color:

This is why I believe what you say. But now with this optimized version, I got this:

It just seems the problem is solved. But according to what you said, it should be a much bigger problem, and that’s why I am asking for your opinion. I think I still don’t really understand.

If you are using sRGB mixtures, then the problem is, while still present, obfuscated a bit. But of course, if you are using sRGB mixtures, there’s no significant reason to use a wider gamut.

If something works wonderfully for you, go nuts!

My concern is only with folks who can see the issues, and if they encounter them, try to explain what they are and why they happen. For folks looking to move to different systems, it is valuable to be well informed of potential issues, as sometimes it requires investments of time, and often, money.

Also, when it comes to ACES, use only the officially sanctioned configuration unless you know the person who has modified it and the extent of their understanding. TL;DR: Be darn careful!

The person who released this config is a famous tutorial maker in the Chinese Blender community. Almost every Chinese Blender user knows about him; he is like the Blender Guru of China. So I think it is fine.

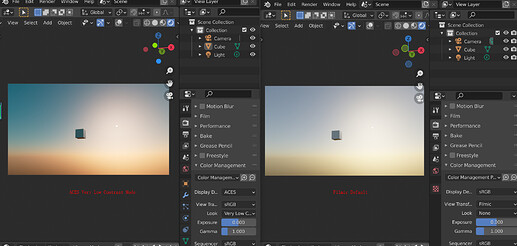

It works for this scene, but not for another one. That scene uses the Nishita sky, and it seems this version of ACES still has a problem with Nishita for some reason:

What is an sRGB mixture?

Might be worth trying https://hg2dc.com as I would likely be repeating much of what is there.

For me the only issue I have with Blender’s current color management is that it’s hard to work outside of Blender.

I don’t really care if X or Y color management thing gives “better color”, I always end up tweaking everything at some point anyway. What I want though, is to preserve my work when exporting and importing.

I often render animations, outputting EXR files because of their unbeatable write/read speeds and because I can do heavy edits later.

But because of how Blender writes the colors, it’s impossible for me to use those files in other software with the same look, unless that program allows me to literally import Blender’s OCIO config. Otherwise I either have to eyeball it or use a custom Blender with ACES.

Ideally I’d want to be able to just render my animations in EXR files and run a ffmpeg script to convert that sequence into a video file in seconds like I always do with Maya or UE4.

But with Blender’s EXR files I just can’t keep the colors right.

Right now if I want to do that in Blender, the fastest way for me is to export the final rush as a damn BMP sequence…