Guys, I want to ask a question. I found that EEVEE-Next usually takes a long time to render when I press F12. On my computer it takes 1~10s, and sometimes it is even slower than Cycles.

But when I render an animation, it is much, much faster. I’m wondering if this is a bug that needs to be reported, or if it’s just an inherent limitation of the renderer?

In my example I used a 256x128 map and it still had enough fine detail. Even if the sunspot is very tiny, the great thing about HDR data is that scaling down to a low mip makes the extreme pixel values quickly bleed over into the surrounding pixels. That is great in this case, because even a small but very bright sun disk won’t get lost as sub-pixel detail during downscaling.
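To illustrate the bleeding effect, here is a rough sketch. It assumes a plain 2x2 box filter per mip step (the filtering EEVEE actually uses may differ), and the image size and luminance values are made up for the example:

```python
def downsample_2x(img):
    """One mip step: average each 2x2 block of a 2D luminance image."""
    h, w = len(img), len(img[0])
    return [
        [
            (img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4.0
            for x in range(0, w, 2)
        ]
        for y in range(0, h, 2)
    ]


def build_mip(img, levels):
    """Apply `levels` box-filter steps in a row."""
    for _ in range(levels):
        img = downsample_2x(img)
    return img


# Hypothetical 64x32 sky: uniform luminance 1.0 plus a single sun pixel.
width, height = 64, 32
sky = [[1.0] * width for _ in range(height)]
sky[10][40] = 50000.0

# Three steps: 64x32 -> 8x4, so each texel averages 8x8 = 64 source pixels.
mip = build_mip(sky, 3)
sun_texel = max(max(row) for row in mip)
print(sun_texel)
```

The sun pixel is diluted by a factor of 64, but the texel that contains it is still hundreds of times brighter than the surrounding sky, so it remains easy to find at the reduced resolution.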

I don’t have much experience with compute shaders. I know it would not be trivial, but I was assuming that there are existing, known algorithms that can split images into contiguous islands. I mean, it’s what most 2D image editors do for many operations, so I thought that some existing implementation could be used.

Then each of these rectangles could be extracted as a small separate bitmap and its contents evaluated. Say you had that 256x128 mip representation of the HDRI and extracted ~60 rectangular patches from it (those rectangles would also serve as the bounds for the size and direction calculation), and each took on average 0.5 percent of the resolution. You’d end up with about 9800 pixels in total to evaluate, and you would not have to evaluate them all against each other, only against those in the same patch/rectangle. Then you’d get those 1-3 winners.
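For reference, the budget above works out as 256 × 128 × 0.005 × 60 ≈ 9830 pixels. Below is a minimal CPU-side sketch of the island idea, not how EEVEE does it: a flood fill labels 4-connected pixels above a threshold, records each island's bounding rectangle, and ranks islands by summed luminance. The function names, the threshold and the test image are all hypothetical, and the sketch ignores the left/right wrap-around of an equirectangular map.

```python
from collections import deque


def find_bright_islands(img, threshold):
    """Flood-fill 4-connected islands of pixels brighter than `threshold`."""
    h, w = len(img), len(img[0])
    seen = [[False] * w for _ in range(h)]
    islands = []
    for y0 in range(h):
        for x0 in range(w):
            if seen[y0][x0] or img[y0][x0] <= threshold:
                continue
            queue = deque([(x0, y0)])
            seen[y0][x0] = True
            min_x = max_x = x0
            min_y = max_y = y0
            total = 0.0
            peak = (img[y0][x0], x0, y0)  # (value, x, y) of the brightest pixel
            while queue:
                x, y = queue.popleft()
                value = img[y][x]
                total += value
                peak = max(peak, (value, x, y))
                min_x, max_x = min(min_x, x), max(max_x, x)
                min_y, max_y = min(min_y, y), max(max_y, y)
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < w and 0 <= ny < h
                            and not seen[ny][nx] and img[ny][nx] > threshold):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            islands.append({
                "bounds": (min_x, min_y, max_x, max_y),
                "total": total,
                "peak": peak,
            })
    return islands


def pick_winners(islands, count=3):
    """Keep the `count` islands with the highest summed luminance."""
    return sorted(islands, key=lambda i: i["total"], reverse=True)[:count]


# Hypothetical 16x8 mip: dim sky, a two-pixel sun and a weaker lamp.
img = [[1.0] * 16 for _ in range(8)]
img[2][3] = 900.0
img[2][4] = 700.0
img[6][12] = 50.0

islands = find_bright_islands(img, threshold=10.0)
winners = pick_winners(islands, count=1)
print(winners[0]["bounds"])
```

Each pixel is visited once, so the cost scales with the size of the mip rather than with the number of islands. A GPU version would need a different labeling strategy.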

I am sure it’s not simple but I think it doesn’t have to be expensive to work.

Lastly, I am fine with the size parameter being there if it’s temporary. I just hope it won’t be the usual “There’s nothing more permanent than a temporary solution” kind of thing.

I tried the newer build that doesn’t crash anymore, and it seems to work relatively fine, except the directional light to sky light ratio is consistently off by about 50% compared to the Cycles reference. The EEVEE Next directional light is almost always half as intense. So even if the math that calculates the extracted light’s luminosity really checks out, I’d still put a 2x multiplier somewhere in there for good measure. Or whatever it takes to have it match Cycles as a reference.

Thanks, I looked it up and there was indeed a mistake. I was wrongly dividing the power by two.

Yes, please report the exact steps to reproduce the issue. There might be some bugs in the render code.

@fclem sorry to insist, but is the light transmission supposed to make the beam of light visible inside refractive objects? Because right now it doesn’t work, if that’s its intended use.

In fact it seems to be working, but in a strange way: it seems to have a spherical shape clipped by the falloff

or I’m just not getting it.

No, it isn’t supposed to. I believe Cycles doesn’t show anything here either (except if you have a volume scattering inside).

Maybe what you thought was a beam was just the cone intersecting with the side of the cylinder with a rough refraction. That might look like the beam is visible. Also, the behavior changed a bit at some point: the shaded point used to be offset inside the object, and that might actually have behaved like you describe.

ah, OK then, I was clueless about what it was supposed to do

That is how spot lights work in EEVEE. Cycles applies the cone falloff at the sampled position on the light. For now, EEVEE always applies the falloff from the light center.

Would it make sense to have a multiplier for the GI, for artistic purposes? I guess bounced light equations are pretty much standard and, well, just real physics, but I find the bounced light a bit too subtle. That’s completely my subjective appreciation, but I think it would be nice sometimes to be able to pump it up or down, depending on the scene or use case.

Why not just increase the saturation in the compositor or with the color space presets… it will do just the same.

Honestly, I have been trying that with little luck; I don’t know if it’s my fault or Blender’s color management pipeline. Besides, a GI multiplier would allow more flexibility for artists, but I guess that would be entering the realm of NPR, so maybe I’m digressing.

You can already increase the intensity of indirect light inside each light probe. But for raytracing I would avoid it at all costs, as there is a chance that this will break the rendering equation and make the image just blow out and never converge (fireflies, noise etc…).
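To give a feel for why a multiplier can stop the result from converging, here is a toy model, not the renderer's actual code. It assumes every bounce keeps a fixed fraction `albedo` of the energy and that a hypothetical `gain` multiplier is applied at each bounce, so the total is a geometric series:

```python
def total_bounced_light(albedo, gain, bounces):
    """Direct light (1.0) plus `bounces` indirect bounces, each scaled by albedo * gain."""
    energy = 1.0
    total = 0.0
    for _ in range(bounces + 1):
        total += energy
        energy *= albedo * gain
    return total


# albedo * gain = 0.8 < 1: the series settles near 1 / (1 - 0.8) = 5.
physical = total_bounced_light(albedo=0.8, gain=1.0, bounces=200)

# albedo * gain = 1.2 > 1: every extra bounce adds more energy than the last.
boosted_25 = total_bounced_light(albedo=0.8, gain=1.5, bounces=25)
boosted_50 = total_bounced_light(albedo=0.8, gain=1.5, bounces=50)

print(physical, boosted_25, boosted_50)
```

As long as `albedo * gain` stays below 1 the sum is bounded; once it exceeds 1 the total keeps growing with the bounce count, which in a real render would show up as blown-out values and fireflies.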

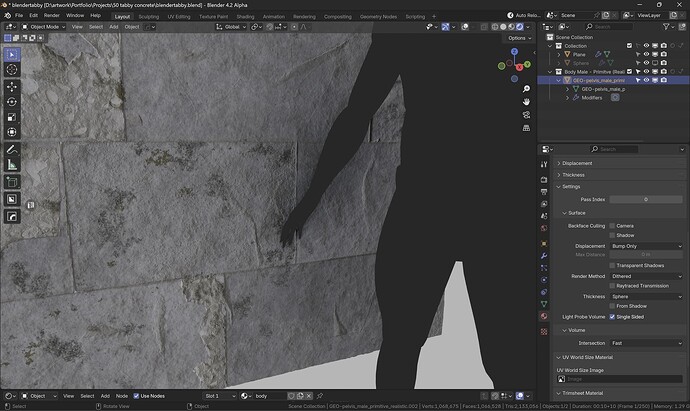

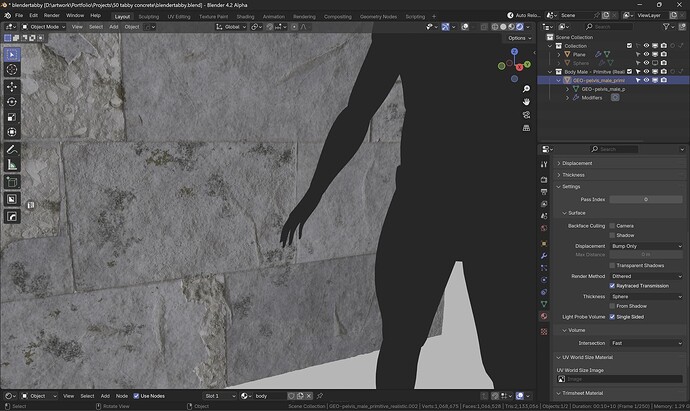

As I was playing with EEVEE Next, I wanted to have a mesh with flat shading (using an emissive shader) that does not at all influence the surrounding environment. I’ve found that ticking “Raytraced Transmission” achieves that as a side effect, even if that is not what it is meant for.

Without Raytraced Transmission:

With Raytraced Transmission:

As I find this pretty useful, I think perhaps there could be a dedicated checkbox that makes a surface not contribute to the lighting of any other surface? Or perhaps that checkbox could be renamed? Or is there any other way of achieving this that I am not realizing?

Fair enough, really good reasons to not touch it

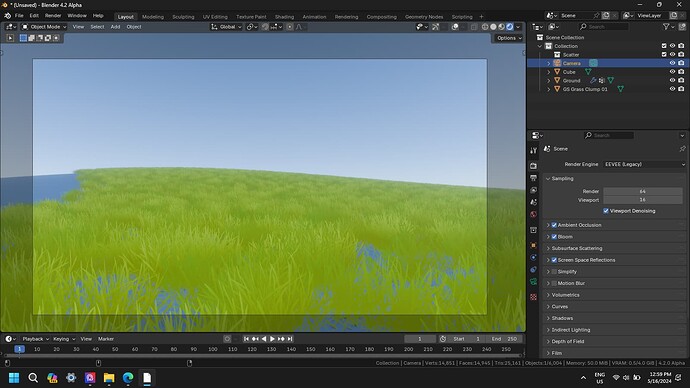

I downloaded the latest 4.2 build today. I don’t know if this is something to be worried about. I’m testing a simple stylized scene and noticed high VRAM usage with Eevee-Next. I know Next has more advanced features and is improved as a real-time engine. Here’s a test I made. I have an RTX 3050.

Eevee-Next: VRAM 1.1/4.0 GB

Eevee-Legacy: 0.5/4.0 GB

I’m wondering: if I have more objects in my scene, will it go up to the full 4 GB so that I can’t preview the scene?

Followup to using Eevee-Next in VR:

The latest builds are somewhat better, but still not really useable.

- With temporal reprojection: grainy, flickering

- Without temporal reprojection: grainy, the grains are frozen in place

The worst are reflections. The reflection itself flickers (even without temporal reprojection) and it’s grainy.

How to test without a VR headset: just click middle mouse button and keep on very slightly moving the mouse.

Turning off raytracing solves the graininess. This is the only way to use VR with Eevee Next at this time. However, the quality is worse than normal Eevee.

- There is no denoising

- No indirect/HDR lighting. Not even with light probes.

Here’s how it looks:

Eevee legacy

Eevee Next Raytracing with Screen-Trace

Eevee Next Raytracing with Light Probe

Eevee Next without raytracing

This is very important. I’ve been hoping Eevee Next would be better at realtime viewport playback, for VR and other interactive use cases. So far it has been worse, as pointed out in the post above.

What has worked quite well for me is the introduction of the anti-aliasing node for the realtime compositor + legacy Eevee - so far I’m sticking with that.

Is there a newer build to test the corrected extracted world light intensity?

The latest Alpha daily build should already contain it.